
The CPU determines GPU performance. On Nvidia anyway.

- Thread starter jigger


In short, Nvidia's scheduling is done in software, so the driver uses a lot of CPU cycles just to work.

AMD's scheduling is hardware-based. It's on the GPU itself, so it doesn't use the CPU.

The result is that if you're running a high-end Nvidia GPU with anything but the latest AMD or Intel CPU, your performance can be bottlenecked to the point where it is slower than a mid-range AMD GPU.
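A toy model of the bottleneck described above: treat each frame as CPU work (game plus driver) and GPU work running in parallel, so the slower of the two sets the frame rate. The frame times are invented for illustration, not measurements, and the function names are hypothetical.

```python
# Toy CPU/GPU bottleneck model. All frame times are hypothetical examples,
# not benchmark data. Assumes CPU and GPU work overlap fully (pipelined).


def fps_from_ms(frame_ms: float) -> float:
    """Convert a per-frame time in milliseconds to frames per second."""
    return 1000.0 / frame_ms


def effective_fps(game_cpu_ms: float, driver_cpu_ms: float, gpu_ms: float) -> float:
    """Delivered FPS is capped by the slower stage.

    Driver overhead is counted as extra CPU time on top of the game's own
    per-frame CPU time.
    """
    cpu_ms = game_cpu_ms + driver_cpu_ms
    return min(fps_from_ms(cpu_ms), fps_from_ms(gpu_ms))


if __name__ == "__main__":
    # Hypothetical: same game on the same slower CPU (8 ms of game work).
    # "Fast" GPU needs 5 ms per frame, but its driver adds 4 ms of CPU time.
    fast_gpu = effective_fps(game_cpu_ms=8.0, driver_cpu_ms=4.0, gpu_ms=5.0)
    # "Mid-range" GPU needs 10 ms per frame, but its driver adds only 1 ms.
    mid_gpu = effective_fps(game_cpu_ms=8.0, driver_cpu_ms=1.0, gpu_ms=10.0)
    print(f"fast GPU:      {fast_gpu:.1f} fps")  # 83.3 (CPU-bound)
    print(f"mid-range GPU: {mid_gpu:.1f} fps")   # 100.0 (GPU-bound)
```

With these made-up numbers the faster card ends up CPU-bound below the slower one, which is the shape of the claim in the post; how large real driver overhead is depends on the game, the API and the CPU.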


So would you say an RTX 3070 paired with a Ryzen 7 2700X is why my graphics card handles games like a piece of poo?

No, you have 8 cores.

...if you have a slow CPU

The solution is and has always been: please stay away from Nvidia's poo products!

The number of cores is not that relevant for this; it is mostly about IPC and latency. A 2700X is going to be a bottleneck for a 3070 below 1440p.


Yes, but with Zen+ IPC, and if Nvidia's software is not properly optimised for the Zen microarchitecture, you get a terrible mess.


It actually is, because the driver will use cores that are not being used by the game if it can.


I am quite sure that the CPU utilisation never even approaches anything remotely close to 100%.

The problem lies with Nvidia's software engineers, and maybe Microsoft.

OK, I used the term IPC completely wrong here. What I meant is basic single-thread performance.

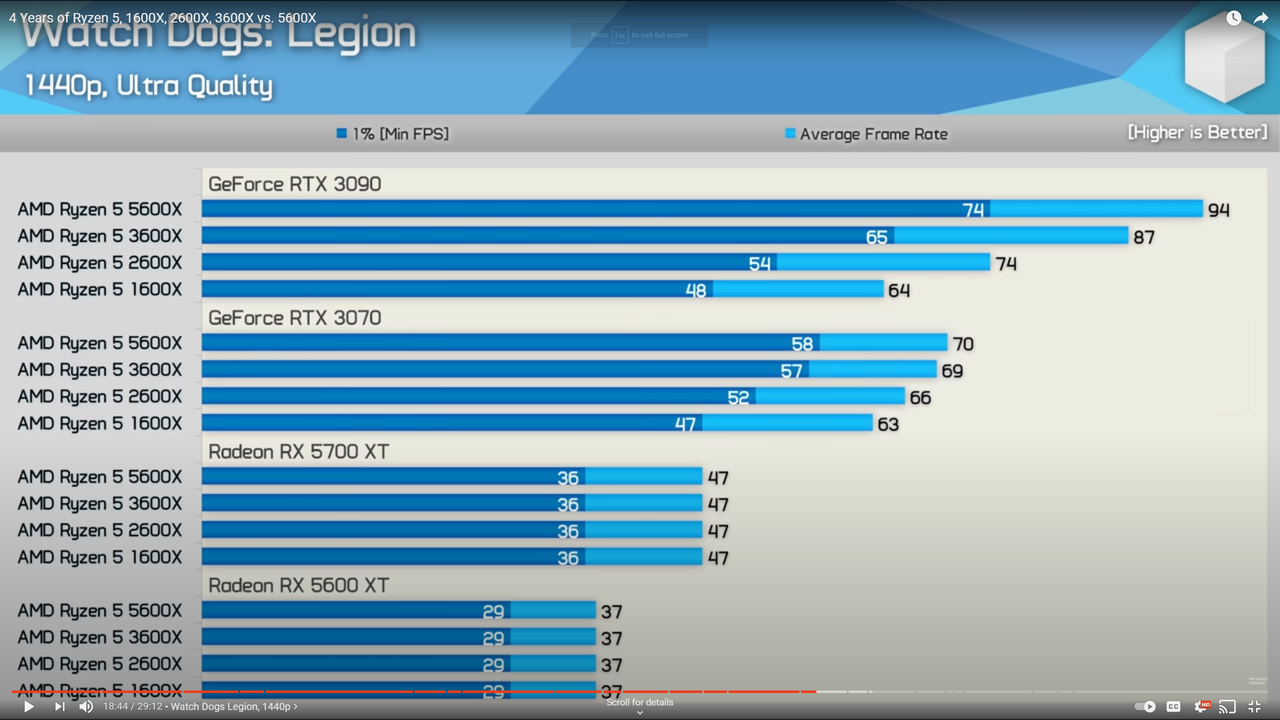

Whatever in the graphics driver causes this overhead is only very lightly multithreaded. So the 2600X used in the video will perform very close to the 2700X, minus the clock difference.
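The point about the overhead being lightly multithreaded can be illustrated with Amdahl's law. The parallel fraction used here (0.1) is an arbitrary, assumed value, not a measured property of Nvidia's driver, and `amdahl_speedup` is just an illustrative helper.

```python
# Amdahl's law: speedup on n cores when only a fraction p of the work
# can run in parallel and the remaining (1 - p) is serial.


def amdahl_speedup(n_cores: int, parallel_fraction: float) -> float:
    """Return the ideal speedup over one core for the given parallel fraction."""
    if n_cores < 1 or not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("need n_cores >= 1 and 0 <= parallel_fraction <= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n_cores)


if __name__ == "__main__":
    p = 0.1  # assumed: only 10% of the overhead spreads across cores
    six = amdahl_speedup(6, p)    # a 6-core part such as the 2600X
    eight = amdahl_speedup(8, p)  # an 8-core part such as the 2700X
    print(f"6 cores: {six:.3f}x, 8 cores: {eight:.3f}x")
    print(f"8-core advantage: {100 * (eight / six - 1):.2f}%")
```

With a parallel fraction this small, two extra cores buy well under 1%, so per-core speed (clock and IPC) dominates, which is the reasoning in the post.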


If you're playing at 1080p then you will have some bottleneck, but at 1440p you should be fine for the most part.


The CPU utilization in this is up to 80% with the Nvidia GPU vs 60% with the AMD GPU, with the RTX 3070 and the 6800 both locked to 60 FPS.

In the second image there is a spike to 100%: the 3070 is at 46 FPS, with the 6800 still at 60.

The CPU with the Nvidia GPU isn't always going to be fully loaded. However, imagine this same scenario without the 60 FPS frame lock: on the Nvidia side the CPU has 20% headroom before it's maxed out at 100%, while on the AMD side it has 40%.
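The headroom arithmetic from that comparison, written out. The "naive" uncapped estimate assumes the CPU-limited frame rate scales linearly with total CPU utilisation, which is a big simplification: total utilisation is averaged over all threads, so a single saturated thread can cap the frame rate while the overall figure sits well below 100%. The function names are hypothetical.

```python
# Headroom arithmetic for the 60 FPS-capped comparison.
# The utilisation figures (80% and 60%) are the ones quoted in the thread.


def headroom(utilisation: float) -> float:
    """Fraction of total CPU capacity still unused."""
    return 1.0 - utilisation


def naive_uncapped_fps(capped_fps: float, utilisation: float) -> float:
    """Rough CPU-limited FPS if the cap were removed.

    Assumes (optimistically) that FPS scales linearly until total
    utilisation reaches 100%. Real games usually hit a single-thread
    limit earlier, so treat this as an upper bound.
    """
    if not 0.0 < utilisation <= 1.0:
        raise ValueError("utilisation must be in (0, 1]")
    return capped_fps / utilisation


if __name__ == "__main__":
    for label, util in (("Nvidia (RTX 3070)", 0.80), ("AMD (6800)", 0.60)):
        print(
            f"{label}: headroom {headroom(util):.0%}, "
            f"naive uncapped ~{naive_uncapped_fps(60.0, util):.0f} fps"
        )
```

On those assumptions the estimate comes out at roughly 75 fps versus 100 fps, but it is only as good as the linear-scaling assumption.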

It's not that Nvidia's software engineers are bad. They aren't; they are probably pretty good. The problem is that Nvidia uses software scheduling, and that puts an extra load on the CPU that AMD, with hardware scheduling, doesn't have.


This is why, when recommending CPUs to people with high-end Nvidia GPUs, I lean toward 8-core CPUs, particularly Zen 3 CPUs, as they have the most headroom of all. They will give you the most consistent performance.

Associate

- Joined

- 11 Jun 2021

- Posts

- 1,027

- Location

- Earth

Zen+ IPC is pretty much the same as Coffee Lake.

In Cinebench R20. Look at the rest of the tests, specifically the game tests, as that is what we are discussing.

https://www.techspot.com/article/2143-ryzen-5000-ipc-performance/

Buy a console; graphics cards today are a rip-off, period.

£1200 graphics card, £1000 for the rest of the PC, and it runs Watch Dogs at 1080p/medium with a 112 fps average, when £450 consoles run it at 4K/60 fps? Not to mention that no monitors have good HDR. I'd rather have that than minor texture upgrades and other subtle effects that tank performance for very little visual benefit.


That is true. Zen+ has high inter-core latency, which is why there is a disparity between its IPC and its gaming performance. It's the same reason Rocket Lake has 20% higher IPC than Coffee Lake but identical gaming performance: the ring bus, the inter-core communication system, is the same in Rocket Lake as it is in Coffee Lake.

Zen 3's inter-core latency is the lowest of all, and guess what...

But Nvidia's software scheduling isn't gaming.


So you would say that GPU architecture and drivers have an equal effect on both CPU-targeted production tasks and gaming? Or are you just throwing a red herring in there?


It seemed like in the old DX11 era Nvidia's method worked pretty well, but not so much on DX12/Vulkan? Shame I don't have a 6800 XT to see how my old 5820K CPU would fare in a matchup between the 3080 and the 6800 XT.