
*** The Official Alder Lake owners thread ***

- Thread starter mrk


Personally I'm going to forget about getting low-latency RAM, as I've realised it's chasing a non-issue really. Instead I will add another 32GB, as that's what will benefit me the most: my workloads are increasingly using 25+ GB at times.

Interesting note, Gigabyte have updated their mobo page to show a RAM compatibility list: https://www.gigabyte.com/Motherboard/Z690-GAMING-X-DDR4-rev-10/support#support-memsup

I note that my 32GB 2Rx16 Corsair Vengeance kit is not in this listing, which probably explains why it won't boot when I enable XMP, while all is well when I manually set the timings and frequency.

Anyway, I have eyed up a 64GB kit which I will likely order shortly; the one in my saved basket is the CMK64GX4M2D3600C18 @ £289.

Also interesting to note that Gigabyte don't list most modules as compatible when all 4 slots are occupied, only in single-slot or dual-slot configurations.


Will an i7 12700K help with gaming over my i7 4790K? Or are games still mostly GPU limited?

Well, I upgraded from an i7 6700K and gaming performance with an RTX 2070 Super has improved somewhat. The 1% lows especially are sustained much better, so minimum frame rates are consistently higher and more level.

I'm just about to run Metro Exodus and RDR2, as those are games I put extended playtime into on the 6700K. That will give you a good idea of what to expect, since the 4790K isn't far off it.

RDR2

(Note: I can't remember the exact graphics settings I used last time, but I know the resolution and have a pretty good idea of the rest: mostly all ultra, with high/medium for volumetric shadows and water. The latest build of the game has DLSS and the updated engine bits, which weren't there when I last played. This bench was done with DLSS turned off too.)

These were also at 3440x1440, a GPU-bound resolution, so really not something to judge the CPU on, but even so the minimum framerate has increased.

6700K / 2070 Super / DDR4 3600MHz / 3440x1440 Windows 10

Min: 30.67

Max: 75.36

Average: 55.49

12700K / 2070 Super / DDR4 3600MHz / 3440x1440 Windows 11

Min: 37.40

Max: 76.56

Average: 57.78
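To put a number on "the minimum framerate has increased", here is a minimal Python sketch that works out the percentage change per metric using only the RDR2 figures posted above. The `pct_change` helper and the `runs` layout are just illustrative, not anything from a benchmark tool.

```python
# RDR2 figures posted above (3440x1440, 2070 Super, DDR4 3600MHz, DLSS off).
runs = {
    "6700K": {"min": 30.67, "max": 75.36, "avg": 55.49},
    "12700K": {"min": 37.40, "max": 76.56, "avg": 57.78},
}


def pct_change(old: float, new: float) -> float:
    """Return the change from old to new as a percentage of old."""
    return (new - old) / old * 100.0


old, new = runs["6700K"], runs["12700K"]
changes = {metric: pct_change(old[metric], new[metric]) for metric in old}

for metric, change in changes.items():
    print(f"{metric:>3}: {old[metric]:6.2f} -> {new[metric]:6.2f} fps ({change:+.1f}%)")
```

Run as-is, it shows the minimum rising by roughly 22% while the average moves only about 4% and the maximum under 2%, which fits the point that the CPU mainly lifts the lows at a GPU-bound resolution.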

Metro Exodus Enhanced Edition

I ran this at 2560x1080, as 1440p and above would be completely GPU bound, but found that even with DLSS enabled the 2070 was 99-100% utilised, leaving the 12700K basically twiddling its thumbs lol.

The below is with ray tracing set to ultra, HairWorks on, Advanced PhysX on and everything else on ultra/extreme. DLSS was set to Quality.



Code:

FPS  99%  CPU%  GPU%
 54   44    26    99
 54   45    17    99
 56   45    22   100
 56   45    22   100
 56   44    24    99
 56   44    24    99
 56   44    34    99
 56   44    34    99
 56   44    36    99
 56   44    36    99
 56   44    30    99
 56   44    30    99
 59   44    24    99
 59   44    24    99
 60   44    24    99
 60   45    31    99
 64   42    21    99
 64   42    21    99
 64   57    26    99
 64   57    26    99
 62   57    26    99

I think a new GPU is definitely on the cards for me when these 40 series are announced!
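For anyone wanting to summarise an overlay log like the Metro Exodus one above, a small Python sketch can parse the rows and report min/max/mean per column. It assumes whitespace-separated integer columns in the order posted (FPS, "99%", CPU%, GPU%); the post doesn't define the "99%" column, so the code just treats it as another number. `parse_log` and `summarise` are hypothetical helper names.

```python
# Samples from the Metro Exodus Enhanced Edition log above
# (2560x1080, RT ultra, DLSS Quality, 12700K + 2070 Super).
# Column order as posted: FPS, "99%", CPU%, GPU%.
LOG = """\
54 44 26 99
54 45 17 99
56 45 22 100
56 45 22 100
56 44 24 99
56 44 24 99
56 44 34 99
56 44 34 99
56 44 36 99
56 44 36 99
56 44 30 99
56 44 30 99
59 44 24 99
59 44 24 99
60 44 24 99
60 45 31 99
64 42 21 99
64 42 21 99
64 57 26 99
64 57 26 99
62 57 26 99
"""

COLUMNS = ("FPS", "99%", "CPU%", "GPU%")


def parse_log(text: str) -> list[tuple[int, ...]]:
    """Turn each non-blank line into a tuple of ints, one per column."""
    return [
        tuple(int(value) for value in line.split())
        for line in text.splitlines()
        if line.strip()
    ]


def summarise(rows: list[tuple[int, ...]]) -> dict[str, tuple[int, int, float]]:
    """Return (min, max, mean) for every column."""
    summary = {}
    for index, name in enumerate(COLUMNS):
        values = [row[index] for row in rows]
        summary[name] = (min(values), max(values), sum(values) / len(values))
    return summary


rows = parse_log(LOG)
stats = summarise(rows)
for name, (low, high, mean) in stats.items():
    print(f"{name:>4}: min {low:3d}  max {high:3d}  mean {mean:5.1f}")
```

Over these 21 samples the GPU never drops below 99% while CPU usage peaks at 36%, which is the "GPU bound, CPU twiddling its thumbs" picture in numbers.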

The CPU still helps for sure at GPU-bound resolutions: it's the minimum fps that the CPU takes care of, during the moments that require the CPU to do its job effectively. Whilst the games I tested above are mostly GPU bound, other games like Cyberpunk now have a minimum fps baseline of around 60fps, which was never possible when I had the 6700K, so I am now enjoying the city with RTX enabled, which I could not do before.

I'd be interested to hear how you find the Freezer, especially any noises you notice!

On mine I have the rpm set low in the BIOS (400rpm to 500rpm) until the CPU hits 60+. I notice that sometimes when editing or doing other stuff the pump sounds like it chirps for a second, then goes back to the very, very faint pump noise you normally get from an AIO. To me it sounds like a by-design sound, as if the pump is running some programmed command. It's weird, and I guess you wouldn't notice it if your case fans were running at standard rpm on the default fan profiles. I can't really record it on video as it's quite faint, but I will try next time.

Just wondered if others get the same noise is all!


Still can't get over how quickly Lightroom responds to edits now, and to general navigation of the whole app. Everything is snap, snap, instant. The generational gap between 6th gen and 12th gen is huge for productivity.

64GB kit of 3600 LPX arriving Thursday so can't wait to get that installed too.


Hi,

No major issues to note from this board really, other than the XMP profile not working with my current RAM, so I have set frequency etc. manually, which is all good. Stability, features and performance-wise I can't really fault it. Gigabyte tends to use solid mobo components, which is why I generally stick to their boards. It's a new platform though, and a new board not even a month old, so only time will tell how things progress and what BIOS updates bring to the table.

For cooling, the heatsinks seem to be of good quality: even under full load the VRMs and such are kept cool, and I've only really got 2 exhaust case fans, excluding the AIO's 2 fans pulling air through the rad as intake.

I have the 12700 of course, so I can't speak to the power and thermals a 12900 would command, but I can't see it being any issue really as long as the CPU cooler is up to standard and the thermal paste used is good too.


Voltages etc. are all auto/default for me. The only things I changed manually were the RAM timings, speed and voltage to match XMP; pretty easy to do, and the settings saved fine.

That's fair, you spend £200 on a mobo and it's reasonable to expect everything to work out of the box. On the whole I've been impressed. I recall when I got the Gigabyte Z170X Gaming 5 mobo back in 2016 there were some bugs, but they got fixed fairly quickly. Before that, various Asus/MSI boards I had also had bugs that needed a few BIOS updates to solve, so it seems a running trend with all the big brands really. Asus have already released a Z690 BIOS for some boards that improved performance and regulated the voltage range too.

Thankfully for now the XMP issue has a workaround by manually setting the RAM values. Not so sure about voltages not sticking though, as I don't OC, so there's no need for me to change any voltages or LLC etc.

For me, as long as there's a workaround I'm fine with waiting it out. It's like Windows 11 missing various taskbar and start menu features: we know these will come back in future updates, but for now there are workarounds like StartAllBack and others.

No problems with performance on my end though, which is the main thing that would have annoyed me. All my benchmarks fall well in line with what reviewers have been showing in their CPU reviews, so I'm good on that front with normal DDR4 RAM lol.


Adobe have a workaround for the Photoshop 2022 crash bug on 12th gen CPUs; quite impressed by the one-to-one support from the devs on their forum really. The workaround sorts it for me, and they said they are working on a proper fix.

Details: https://community.adobe.com/t5/phot...-12th-gen-cpu-pc/idc-p/12531833/page/2#M31516

Edit*

The crash now happens when saving files, Adobe looking into it!


I forgot about the cashback as I changed to the Gigabyte board. Oops

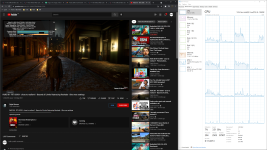

Out of curiosity I watched an 8K video on YouTube and saw 30% CPU usage on the 12700. That's much higher than I was expecting; I wonder what previous gen CPUs get, if anyone has a system and wants to try the same video?

Link: https://youtu.be/06a9YETMQRk

P-Core 6 is maxed out here.

Interesting that whilst the RTX card is 30% utilised too, the CPU plays a big part, which I guess is mostly the browser's load during 8K playback.

My 6700K would certainly frameskip all over that lol. Setting the stream to 4K drops it to just 3% CPU usage.

Edit*

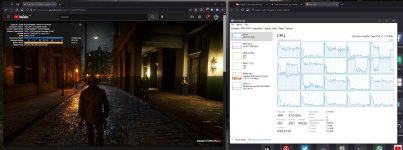

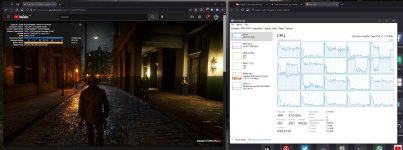

I just wanted to check which cores are used during gaming, so I fired up Cyberpunk, and it looks like games do indeed use the E-Cores, or at least Thread Director will make use of them in gaming when no other app needs to do stuff in the background:


Yeah, on the Asus cashback page it says how long the claim period is, so you have a window to make the claim before it's gone. You can also check claim status at the top right of the page if you have already submitted.

Interesting, yours shows some dropped frames there, but cool seeing the core usage: basically no HT threads being used. I wonder how much Thread Director factors in different things running in the background too, based on which threads it allocates to stuff like this on different systems.

Welcome to the club!

You will need the serial off the box yep.