Associate (joined 20 Jun 2016, 1,384 posts)

"AMD publishes...."

still the same 6 cherry picked games with no RT.

next.

Roll on the real reviews I say.


"AMD publishes...."

If you just go with the pricing and "AMD are not a charity" logic...

AMD are confident that the 6800 is faster than the 3070.

AMD think the 6800XT is close to the 3080 (maybe a $50 discount for inferior ray tracing?)

AMD are confident that the 6900XT is faster than the 3080.

People won't get to spend actual money until after the reviews come out, so the pricing has to hold up in the light of independent reviews.

I'm hopeful that AMD's first gen ray tracing can't be used to try and justify the pricing and AMD is offering legit raw performance.

Because:

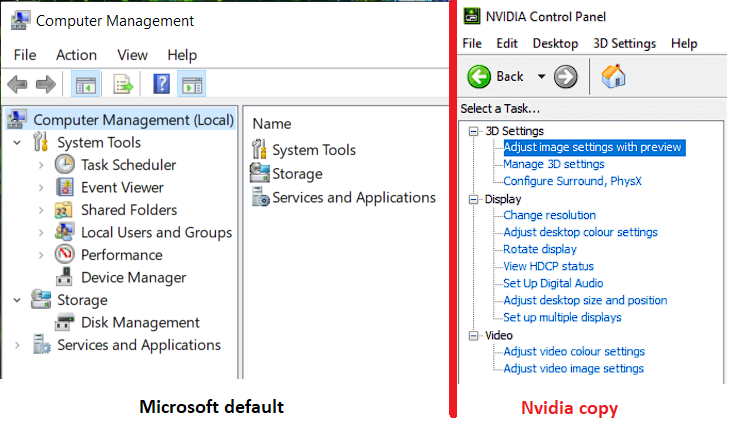

-better driver user interface - modern;


It's hilarious that you are writing this as though you have any idea of the actual facts of what you are talking about.

I almost wet meself.

Scraping the bottom of that justification barrel eh?


The internet is full of rubbish information at the moment regarding the new tech, it's all made up... Great example here:

NVIDIA GeForce RTX 3080 Ti Graphics Card To Feature 10,496 CUDA Cores, 20 GB GDDR6X Memory & 320W TGP, Tackles AMD’s RX 6800 XT $899-$999

https://wccftech.com/nvidia-geforce-rtx-3080-ti-20-gb-graphics-card-specs-leak/

Same rubbish as before with the 3070Ti 16GB and 3080 20GB... ohh wait, Nvidia cancelled... lol, they never existed in any form. Same again with the 3080Ti that's now a 3090 with 20GB and all the same spec for $899-999... come on, really...

"At least, AMD puts a bit more effort to update its user interface and make it more user friendly, while Nvidia puts exactly 0 effort and steals the Microsoft default appearance."

Okay buddy, easy there, stealing MS' default appearance??? What are you smoking man?

As much as I like AMD's drivers as a whole, their navigation took a step back with the last UI update. Let's be real here okay. It needs some tweaking. Now I can agree that NvCP is ugly and outdated but that doesn't really matter as long as you have access to all the features... ohh snap... Anyway, what drove me up the wall with NvCP was how long it took me to access settings for a specific program if I wanted to, e.g., have one program run without G-Sync.

"Do we know when the 68/900 will appear on OCUK to gauge what the UK pricing is going to come out like?"

This is being asked on almost every page, no.

ooo excellent. So the story is as we thought. It either matches it, or outperforms it in AMD optimised titles, and loses to Nvidia optimised titles

and it's 50 bucks cheaper.


So this will come down to RT performance and how important that is to you

These benchmarks are fake - they can't even calculate the percentage difference going from only 10GB VRAM to as much as 16GB VRAM.

Hint - it's 60% more VRAM, not 37% more.

Also, who told them to bench with Core i9-10900K and not with Ryzen 9 5900X or Ryzen 9 5950X?
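For anyone who wants to check the VRAM hint above, here is a minimal Python sketch. The helper names `percent_more` and `percent_less` are made up for illustration; the 10GB and 16GB figures are the ones quoted in the post. The 37% figure presumably comes from dividing the 6GB gap by 16 instead of 10, which answers a different question (how much less 10GB is than 16GB), but that is a guess at how the number was produced.

```python
def percent_more(base, new):
    """How much larger `new` is than `base`, as a percentage of `base`."""
    return (new - base) * 100 / base


def percent_less(base, new):
    """How much smaller `base` is than `new`, as a percentage of `new`."""
    return (new - base) * 100 / new


if __name__ == "__main__":
    # Going from 10GB up to 16GB of VRAM
    print(percent_more(10, 16))
    # Going from 16GB down to 10GB of VRAM
    print(percent_less(10, 16))
```

This gives 60.0 for the first call and 37.5 for the second, so both numbers are "right" depending on which card you treat as the baseline.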

These benchmarks are fake - they can't also even calculate the percentage difference going from only 10GB VRAM to as much as 16GB VRAM.

Hint - it's 60% more VRAM, not 37% more.

Also, who told them to bench with Core i9-10900K and not with Ryzen 9 5900X or Ryzen 9 5950X?