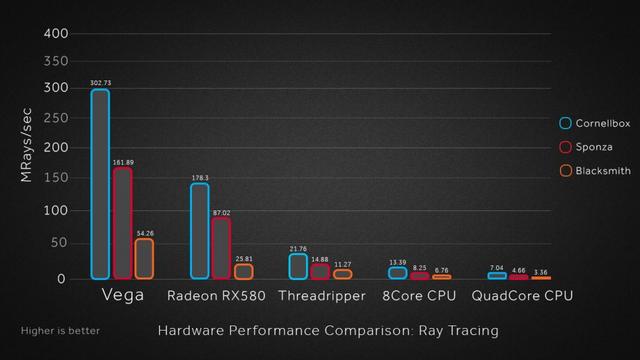

There's absolutely nothing wrong with proprietary hardware; Nvidia's CUDA cores are proprietary. Proprietary hardware can speed up open source software with no problems, and there is no problem with adding ray tracing specific cores. Why have faster GPU cores today than the ones from 10 years ago? Obvious answer: because they are faster. If a GPU from 10 years ago loses 90% of its performance doing 10 ray tracing beams per scene and a 1 year old GPU loses 70% doing the same, and adding some accelerators that are better optimised for ray tracing reduces that loss to, say, 40%, then it's worth doing as long as the new hardware takes up less die space (by a fair margin) than just adding more normal GPU cores to bring performance to the same level.
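To put rough numbers on that trade-off, here is a minimal sketch. It assumes performance scales linearly with the number of normal GPU cores, which real hardware won't quite do, and the function names and the 25% area figure are made up purely for illustration.

```python
def extra_cores_needed(drop_without, drop_with):
    """Fraction of extra normal cores needed to match the accelerated card.

    Assumes ray tracing performance scales linearly with core count
    (an illustrative simplification, not a measured property).
    """
    perf_without = 1.0 - drop_without  # e.g. 70% drop leaves 30%
    perf_with = 1.0 - drop_with        # e.g. 40% drop leaves 60%
    return perf_with / perf_without - 1.0


def accelerators_worth_it(drop_without, drop_with, accel_area_fraction):
    """True if the accelerators cost less die area than the extra cores would."""
    return accel_area_fraction < extra_cores_needed(drop_without, drop_with)


# The 70% -> 40% example from the post: matching it with normal cores
# alone would take roughly double the cores under this assumption.
extra = extra_cores_needed(0.70, 0.40)
print(f"extra normal cores needed: {extra:.0%}")

# Hypothetical: accelerators that add 25% to the die area.
print(accelerators_worth_it(0.70, 0.40, 0.25))
```

So under those assumptions the accelerators win unless they eat up about as much space as a whole second set of cores.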

But making hardware that can only accelerate one manufacturer's locked-in code rather than industry standard ray tracing code, that impedes what other hardware makers are doing and forces software makers to cater to that code, would be bad. I doubt Nvidia has done that. The hardware can likely accelerate most general ray tracing code, as their GameWorks version won't be that far removed from industry standards, just with some code baked in that makes it run worse on other hardware or not run at all.

Adding new hardware for accelerating features isn't a bad thing in general for anyone. For tessellation to even start being programmed for, the hardware needed to be there; then, once game devs start planning to program for it, adding more hardware to actually use it effectively becomes worthwhile. I won't hit Nvidia for adding accelerators to push the industry. I will hit them for pushing software lock-ins and generally pushing flash over substance with most of their GameWorks effects. PhysX being added in after the fact, and running like **** on CPU and AMD hardware on purpose for years, held the industry back years.

The question for Nvidia users is: how much die space does the ray tracing hardware take up, and is it mostly wasted or does it bring value yet? And will Nvidia actually implement it in a way that is useful, or will it kill performance and provide bad looking effects that stand out from the rest of the scene in such a way as to actually worsen the overall image? (Having shiny lighting in one part of a scene and very different lighting in the rest can look worse than a 'worse' lighting applied evenly to a scene.)

I suspect the answer is: card prices are increasing, performance gains don't look great, and the performance hit for using ray tracing still looks huge for effects that aren't being implemented well.

On ray tracing itself: since the really early 90s ray tracing has been held up as the ultimate quality effect, and compared to early/mid 90s rasterisation it was night and day. The thing is, ray tracing hasn't moved on, because in reality it can't move on; it's the end game and it can't really be done better. But on the other side, rasterisation and every trick learned in 25 years mean the gap is now exceptionally small.

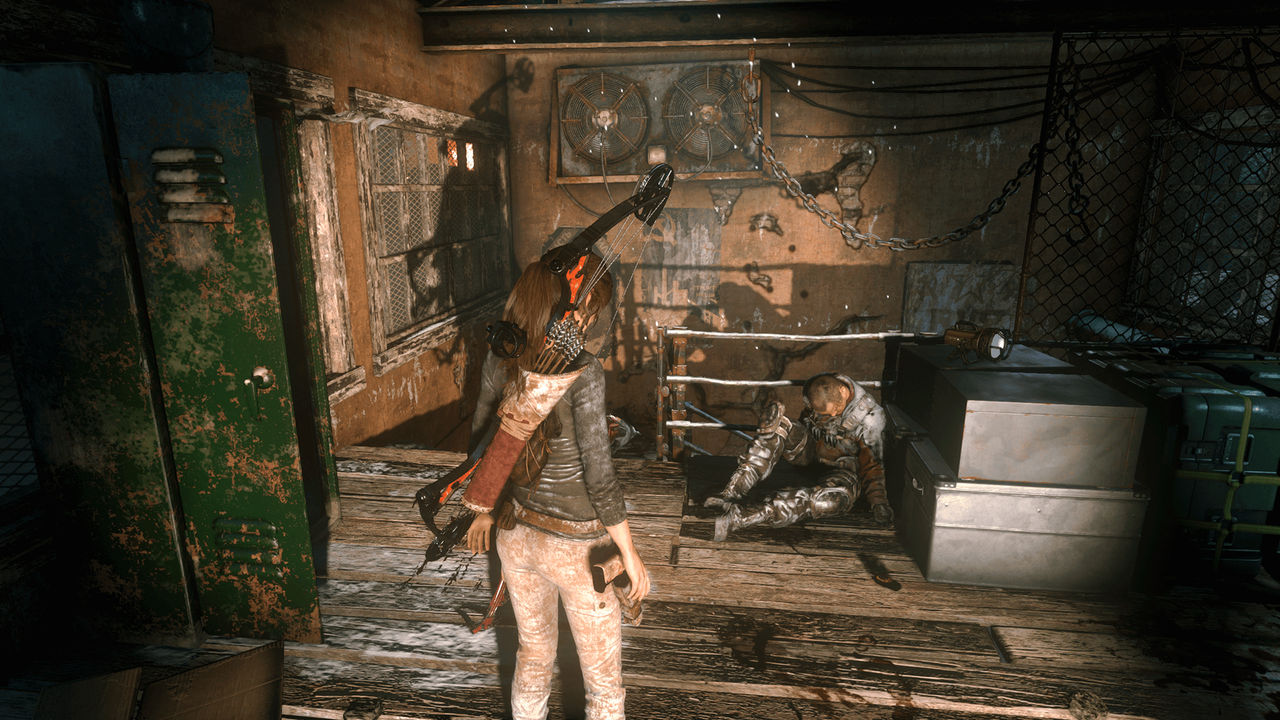

Humbug is talking about how the reflection was more accurate if you look carefully, but the other 99% of the scene looked pretty much the same. Moreover, when you're actually moving and playing a game and not spending time just looking at a reflection under a bridge, it's nearly unnoticeable. That is the same thing I said about PhysX. Calculating exactly where a piece falls, at what rate and where it ends up really makes no difference to getting a realistic outcome, because you can estimate well enough that the results look nearly identical.

If you round up gravity in physics to 10 m/s² to make calculations easier, you can't physically feel the difference when you play. If a piece of wall falls 3 inches to the left and bounces twice, or falls 3 inches to the right and bounces 4 times, again in reality it makes no difference. Spending a huge amount of power to get the 'right' answer, when maybe 30% of the power can get you 99.8% of the same result, is just not particularly efficient.
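As a quick sanity check on the rounding point, here is a small sketch comparing how long a piece takes to fall with gravity at 9.81 m/s² versus rounded to 10 m/s². The 2 m drop height and the 60 fps frame time are just assumptions picked for illustration, and it ignores air resistance.

```python
import math


def fall_time(height_m, g):
    """Time in seconds to fall height_m from rest, ignoring air resistance."""
    return math.sqrt(2.0 * height_m / g)


height = 2.0                   # assumed drop height in metres
t_real = fall_time(height, 9.81)
t_rounded = fall_time(height, 10.0)
frame_time = 1.0 / 60.0        # one frame at an assumed 60 fps

difference = abs(t_real - t_rounded)
print(f"real: {t_real:.4f} s, rounded: {t_rounded:.4f} s")
print(f"difference: {difference * 1000:.1f} ms")
print(f"less than one frame: {difference < frame_time}")
```

The relative error in fall time is about 1% whatever the height, so for a drop like this the two results land within a single frame of each other.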

The strange thing is that since the early 90s, when ray tracing was held up as the ultimate goal and as going to be a massive game changer, the fundamental belief hasn't changed, even though the amount of game changing it would have achieved in the early 90s compared to now is obviously radically different.

That video Humbug posted of the gems: ultimately, for all the realistic lighting, the entire scene felt fake to me. It didn't feel like the real world. It seemed too shiny, everything was 'clean', and the metal disc that the stand was rotating on looked fake. Basically the whole thing looked fake, and the human eye couldn't tell real reflections in the gems from estimated ones in the first place, because the detail is far too small and there are so many slightly different reflections that if each one was switched to a different edge, we really couldn't tell.
