AMD offer no rivalry. Nvidia dominate and screw us all over.

RX570>1050

RX580>1060

Vega56>1070

Vega64>1080

http://www.pcgameshardware.de/Grafi...ormance-in-Shadow-of-the-Tomb-Raider-1263244/

This was on display at the event achieving between 30-60fps. Good, right? Yeah, until you realize that it's only running at 1080p... It's no wonder there were no benchmarks at the event. I thought AMD was bad with the Vega launch, but this could be on a totally different level.

Yeah, I think we need time to reflect on that...

This has got to be a wind up surely.

Shadow of the Tomb Raider at 1080p dropping to the low 30s at points?

I would expect my 1080ti to smash the hell out of this, let alone next gen.

100fps 3440 x 1440 is the sweet spot maxed.....

30 fps is unplayable imo....

At the meagre price of 1200 big ones....

Is that a real quote? I actually burst out laughing.

Not when ray tracing; your 1080ti might be doing 6FPS etc.

But then you just turn Raytracing off and enjoy 100fps?

If we go by their RTX on/off BF comparison, with ROTTR.

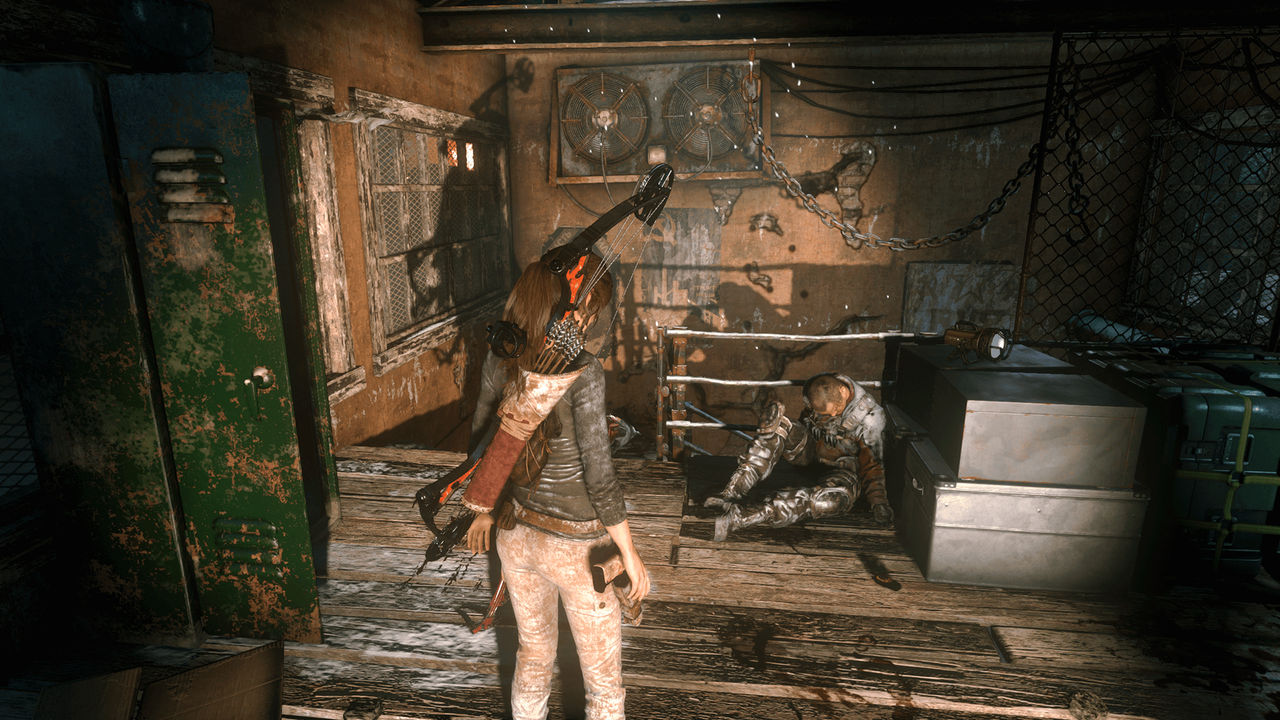

Here we have RTX on (light source behind Lara, off screen, just like the tank's flame in BF, which was off screen, to the left):-

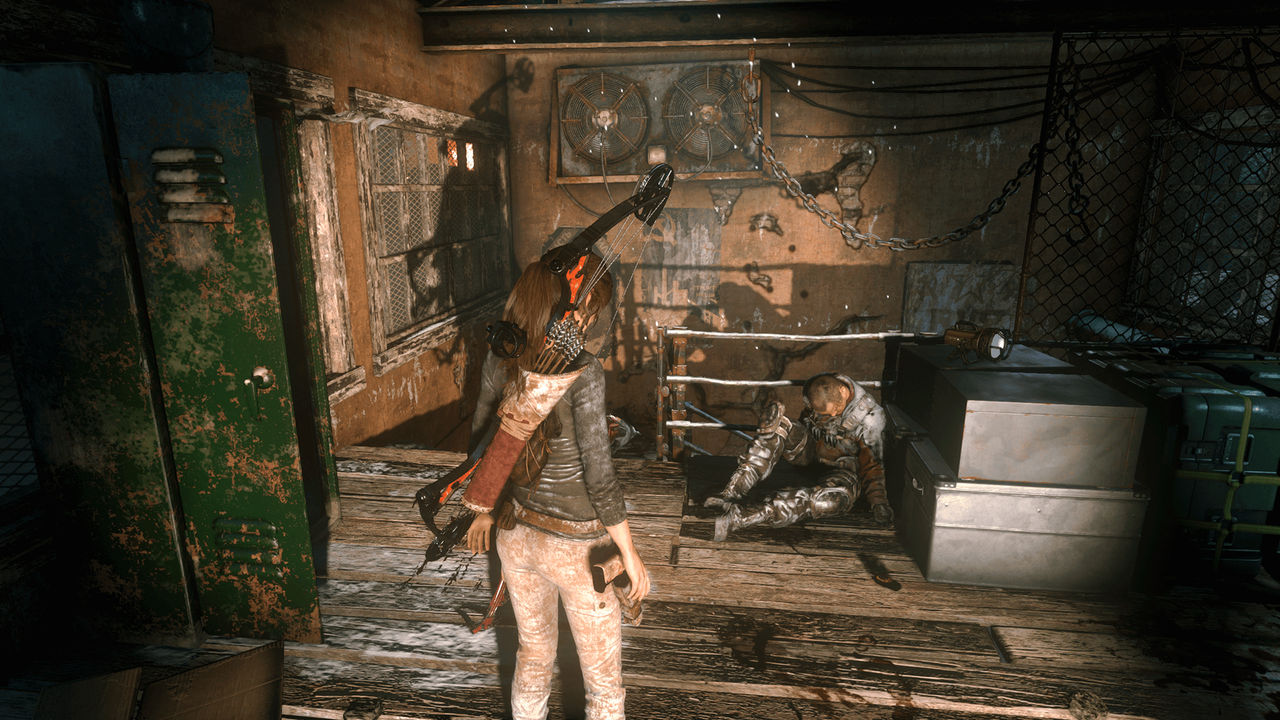

Same shot, RTX off :-

Bravo!! Pay the hiked pricing for the main selling point, only to have it disabled in games because the hardware is not actually fast enough to handle it in real-world gaming rofl

That's like paying the price premium for Bluetooth headphones that sound awful via Bluetooth, so the solution is to use them in wired mode

Eh, assuming that ray tracing was making up half the GPU load, then in situations where the 2080ti was getting 30 fps the 1080ti would be getting 12 fps at maximum.

Ultimately even for ray tracing no one wants (well very few) to be playing at 30 fps or below.

Isn't your maths off? The 1080ti is 1.2 Gigarays/s, the 2080Ti is 10 Gigarays/s. Basically, 8 into 30 doesn't equal 12.

Bear in mind I said maximum 12 and that the bottleneck isn't just the pure ray tracing load.
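
For what it's worth, the disagreement above comes down to how much of each frame is actually ray tracing. Here is a rough sketch of that arithmetic, not a benchmark. It assumes the frame time splits cleanly into a ray tracing part and everything else, that the ray tracing part scales with the quoted Gigarays/s figures (10 vs 1.2), and that everything else runs equally fast on both cards. The function name and the model itself are made up for illustration.

```python
def fps_without_rt_hardware(fps_fast, rt_share, rays_fast, rays_slow):
    """Estimate fps on the slower card from a simple frame-time split.

    fps_fast  : fps observed on the faster card (30 in the posts above)
    rt_share  : assumed fraction of the frame spent on ray tracing (0..1)
    rays_fast : quoted ray throughput of the faster card (Gigarays/s)
    rays_slow : quoted ray throughput of the slower card (Gigarays/s)
    """
    frame_ms = 1000.0 / fps_fast           # total frame time on the fast card
    rt_ms = frame_ms * rt_share            # part of the frame spent tracing rays
    other_ms = frame_ms - rt_ms            # assumed identical on both cards
    slow_rt_ms = rt_ms * (rays_fast / rays_slow)  # ray part stretched by the throughput ratio
    return 1000.0 / (other_ms + slow_rt_ms)


# Half the frame is ray tracing, 2080ti at 30 fps, 10 vs 1.2 Gigarays/s
estimate = fps_without_rt_hardware(30, 0.5, 10.0, 1.2)
print(f"{estimate:.1f} fps")  # prints "6.4 fps"
```

Under these assumptions a 50% ray tracing share lands nearer 6 fps than 12; you only get about 12 fps if ray tracing is roughly a fifth of the frame (`rt_share=0.2`). Real games won't split this neatly, so treat it as a ballpark only.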

It may well be huge when implemented correctly (e.g. complete scene raytraced), or even just when the artists figure out how to use it correctly (again BF V - cars shouldn't be shiny - they should be covered in dust etc), but the demos are currently underwhelming ("oh it's just a bit shinier") or the opposite of photo-realistic, with there being chrome or glossy objects everywhere for the sake of it.

Does RT all have to be done by the GPU? Can't any of the instructions be done by the CPU?

It blows my mind that people have handed over £700+ on a graphics card without seeing how it performs against older generations of GPUs.

These fancy new lighting effects do look good, but if a game runs like complete turd with them enabled, what's the point?

Am I missing something? I just can't get my head around people buying these.

Yeah, only time will tell. As soon as they get into consumers' hands we will see some real hands-on benchmarks. I don't really care about the ray tracing stuff, I just want brute force to run 4K properly, ideally at around the £500 mark, so the 2070.

People are claiming they will cancel their pre-orders if it doesn't satisfy.

However, some posters have sold their 1080 Tis and pre-ordered, which is insanity to me. Pretty big leap of faith there that these cards will offer a performance bump worthy of the 1.2 grand price tag....

It's ok though each to their own! As long as you guys admit you've been fleeced by shillvidia we are cool. No need to get defensive about it!

Or maybe I will be the one eating my words if they turn out to be super powerful

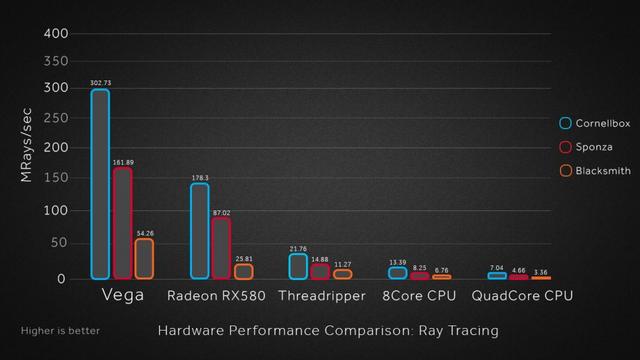

The entire point of doing rays on the GPU is that CPUs suck at it.