Are you a believer?

No, not really...

hahaha

That would put it ahead of the RTX 2080 Ti at 60 CUs.

That leak looks promising... While I don't think I'll be drawn to AMD's stuff unless it's crazy cheap and powerful, or also has the other bits like DLSS (and maybe something that'll use DirectStorage), hopefully the larger VRAM numbers will make Nvidia release stuff like the 16GB 3070 (Super?) earlier on...

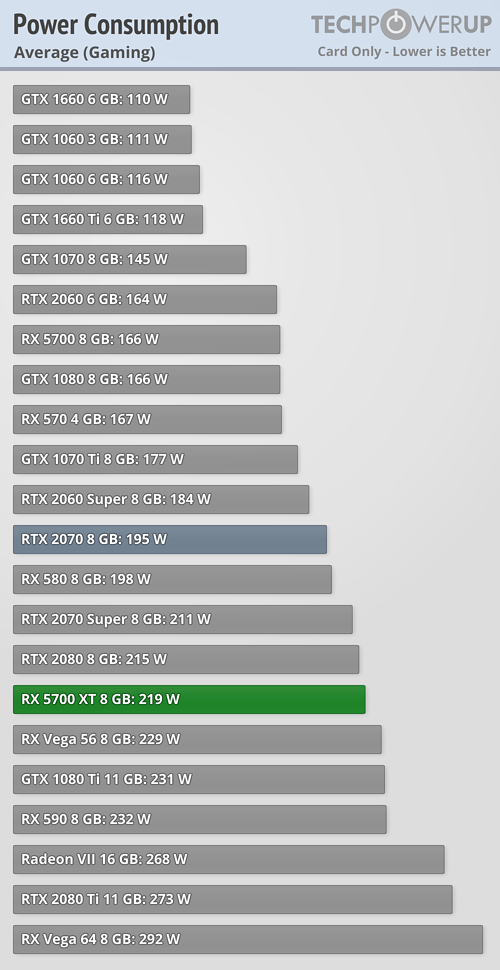

Not power efficient? Mmm, mine pulls 192W at maximum overclock... The 3000 series, granted, is twice as quick, but it pulls 380W when overclocked... so no, I believe the 5700 XT IS actually pretty darn efficient, considering. At stock, mine never goes above 170W!
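Taking the figures in the post above at face value, a quick performance-per-watt check shows why the efficiency argument holds up. This is a rough sketch only: it assumes performance is expressed relative to the 5700 XT (= 1.0) and treats "twice as quick" as exactly 2.0, which real per-game benchmarks will not match.

```python
# Rough performance-per-watt comparison using the figures quoted in the post.
# Assumption: performance is relative to the 5700 XT (= 1.0) and "twice as
# quick" is taken literally as 2.0; real benchmark results vary by game.

def perf_per_watt(relative_perf: float, watts: float) -> float:
    """Return relative performance delivered per watt of board power."""
    return relative_perf / watts

rx_5700xt = perf_per_watt(1.0, 192.0)  # 5700 XT at max overclock, per the post
rtx_3000 = perf_per_watt(2.0, 380.0)   # overclocked 3000-series card, per the post

# Ratio > 1.0 would mean the 3000-series card does more work per watt.
ratio = rtx_3000 / rx_5700xt
print(f"3000 series vs 5700 XT perf/W: {ratio:.2f}x")
```

With these numbers the ratio works out to 384/380, or about 1.01x, so by the poster's own figures the two cards are roughly on par per watt.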

I've got a 3080 on order just for RT. How important is RT to those who are waiting on AMD?

I hope AMD beat Nvidia on rasterisation, but I can't see how they can get close with raytracing.

The whole quote from David Wang of AMD is as follows "We have developed an all-new hardware-accelerated ray tracing architecture as part of RDNA 2. It is a common architecture used in the next-generation game consoles. With that, you will greatly simplify the content development -- developers can develop on one platform and easily port it to the other platform. This will definitely help speed up the adoption [of ray tracing]."

As well as the plural 'consoles' giving a nod to the main next-gen players, the quote also nods to developers being able to port between platforms, which is rather handy if you're creating content for multiple game consoles and systems.

It's not in the least bit important. I'd go as far as to say that the graphic design on the box is probably slightly more important to me than RT.

That's a lovely internet picture, but I can tell you right now: over 4 hours of gaming in Warzone, MY 5700 XT maxed out at 192W running a constant overclock of 2164MHz core (2200MHz set) and 1900MHz memory... so that bar chart is not what I'm seeing, either through GPU-Z or, more importantly, on my actual power supply monitor on the wall. Hey ho, we shall differ here.

At stock it's WAYYY lower... and it's around 42% slower than a 2080 Ti based on the same site's performance figures... so no, I don't believe it's inefficient in the slightest for the juice it sucks.

You mean the same guy who said the 3080 would have a co-processor on the board?

Now, I would have thought that after being caught out the first time, he would re-evaluate his "sources", take a seat, and think twice before posting more "rumors". If, and I mean a BIG IF, he was told that a top-end RDNA 2 card (Big Navi) was just as fast as a 3070, something should have clicked in his thought process. He should have filed it away until he could actually verify that the information was valid. <--- This is the most important part, as he can't validate that the information is true.

However, let's look at what he does do. He doubles down on those same "sources" and now claims that a 6900 XT is no faster than a 3070 or thereabouts, claiming that he's getting a "card" when it was rumored that AMD hasn't released those SKUs to AIBs. All within 'hours' of being wrong about the co-processor. Now, I don't know about you, but I find his "sources" highly suspect. It's starting to look like he's just making it up.

Either way, we'll see once AMD releases its SKUs.

Nothing he predicted was accurate about Ampere. End of discussion.

To be fair, this is going to happen at some point. Lots of ray-tracing work can be separated from other graphics work. If what it does is some really clever compression, same thing. If it's just a general chiplet, then apart from heat concerns, there's no reason they MUST be on the same side of the board, especially with a shroud like the one Nvidia chose. Chances are, since development takes 3 or 4 years, some people could have seen at least conceptual information on the next GPU series 16-24 months out.

He's off the list...

Nothing he predicted was accurate about Ampere. End of discussion.

He was the only person claiming the co-processor. No other leak I've come across ever mentioned a separate co-processor on the card. He even had diagrams of it!! LOL.

He claimed far better RT performance than what's shown in reviews. For example.

Now he's trying to use his platform to downplay RDNA 2? Am I supposed to ignore what he said about Ampere, which amounted to nothing more than cheerleading, and take him downplaying RDNA 2 as valid, when all I have to do is look at his prior 4 or so videos to see he was wrong about Ampere? Nah.

Edit:

Wait, wait, wait... let's see about this magical card he says he's getting before everyone else.

He did, yes.

Not that I believe him to be particularly accurate, but in all fairness, I'm fairly sure he qualified the co-processor as his own speculation, not sourced info.

Still wrong though!
