Haha. Yeah. It is a poor showing by NVidia, that is for sure. But still more people have it than a 6900

hahah yer but the 6900 doesn't actually exist as yet, which is no different to the 3080 I guess

Please remember that any mention of competitors, hinting at competitors or offering to provide details of competitors will result in an account suspension. The full rules can be found under the 'Terms and Rules' link in the bottom right corner of your screen. Just don't mention competitors in any way, shape or form and you'll be OK.

Well if you want to be completely fair, no one is forcing anyone to preorder. Just wait until January and buy then

You can also have it now by paying scalpers. Lol.

The laugh is that the same logic gets used to say AMD is somehow late to the party, bizarre. I think AMD have done exactly the right thing: say very little and let your product speak for itself. There will be plenty of buyers out there if it is good enough.

What happened? All gone now.

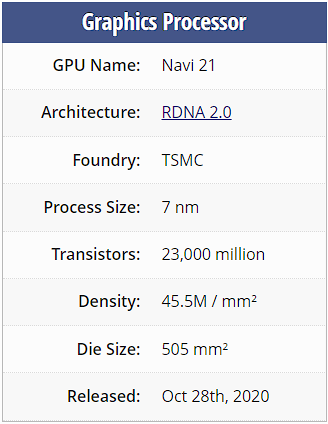

The Navi 10 die was 251mm². The TU106 die was 445mm² (77% larger). The 5700 XT was and is the faster card when compared to the 2070. Nvidia may have improved their manufacturing process since then, but Samsung's 8nm is still hugely inferior to TSMC's 7nm in terms of density. There is quite literally no basis to believe that AMD cannot compete judging by die sizes alone.

The likelihood that with a relatively smaller die they will achieve at least performance parity with the large GA102 (628 mm²) is quite low.

Nvidia are not using TSMC's 7nm though, so your argument is meaningless. Samsung's 8nm isn't even close in terms of density. I know you're a troll, but let's try to stick to reality and not imaginationland.

At least try to normalise for the TSMC 12nm and TSMC's N7 process lol

TU106 is around 200 mm² on N7.

You literally only post negative stuff about AMD. It's 90% of your posts. I notice you're now trying to redirect the conversation and dodging the point that Samsung's 8nm isn't even close to being close to TSMC's 7nm in terms of density. We're talking 61.18MTr/mm² versus 91.20MTr/mm² here, not a small difference. Your claims based on die size have no basis in fact. You're a low-grade troll.

I thought of rechecking the Xbox specs

15.3 B transistors on 360.4 mm2

Around 42.5 M transistors per sq mm

At 536 mm2 for Big Navi.. works out to approx 23 B transistors which is nowhere close to Coretek's 27 B estimate.. are there further efficiency gains expected over Xbox SOC? cuz that chip can theoretically pack in 50 B transistors
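For anyone who wants to check the arithmetic in the post above, here is a rough sketch using only the numbers quoted there. The 536 mm² Big Navi die size and the 27 B estimate are rumours from the thread, not confirmed specs, and the function and variable names are made up for illustration.

```python
# Back-of-envelope check of the Xbox SoC -> Big Navi extrapolation.
# All inputs are the figures quoted in the post; none are confirmed specs.

XBOX_TRANSISTORS = 15.3e9      # quoted Xbox SoC transistor count
XBOX_AREA_MM2 = 360.4          # quoted Xbox SoC die area
BIG_NAVI_AREA_MM2 = 536        # rumoured Big Navi die area (assumption)
RUMOURED_TRANSISTORS = 27e9    # the 27 B estimate mentioned in the post


def density_mtr_per_mm2(transistors: float, area_mm2: float) -> float:
    """Average density in millions of transistors per square millimetre."""
    return transistors / area_mm2 / 1e6


def transistors_at_density(area_mm2: float, density_mtr: float) -> float:
    """Transistor count for a die of the given area at the given density."""
    return area_mm2 * density_mtr * 1e6


xbox_density = density_mtr_per_mm2(XBOX_TRANSISTORS, XBOX_AREA_MM2)
big_navi_estimate = transistors_at_density(BIG_NAVI_AREA_MM2, xbox_density)
required_density = density_mtr_per_mm2(RUMOURED_TRANSISTORS, BIG_NAVI_AREA_MM2)

print(f"Xbox SoC density:            {xbox_density:.1f} MTr/mm2")
print(f"Big Navi at Xbox density:    {big_navi_estimate / 1e9:.1f} B transistors")
print(f"Density needed for 27 B:     {required_density:.1f} MTr/mm2")
```

So the 27 B figure would need roughly 19% higher density than the Xbox SoC manages, assuming the rumoured die size is right.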

"have to" lol. So do Pascal and Turing owners, and arguably so will Ampere owners. But then you're making the assumption that ray tracing will come to PC gaming. Seeing greater adoption of RT because of the consoles doesn't automatically mean PC games and ports will use it. And even then, if you believe the rumours and naysayers, the consoles' RT performance - and by extension Big Navi - is so woeful you won't be missing anything. We never needed ray tracing to enjoy games before, so I hardly think it's the end of the world if PC gamers with low/no-RT cards have to disable some half-assed reflections on a handful of surfaces that shouldn't even be reflective in the first place.

So, your point is what?

Yes, and GA102 is 45.06 MTr/mm², so Samsung's 8nm is perfectly in line with whatever TSMC has.
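To put the density numbers thrown around in this thread side by side, here is a small sketch comparing what the shipping chips achieve against the per-node figures quoted earlier (61.18 and 91.20 MTr/mm²). It assumes those two figures are best-case node densities and that the Xbox SoC is built on TSMC 7nm; both are assumptions taken from the discussion, and the dictionary names are purely illustrative.

```python
# Achieved density of real chips vs. the per-node figures quoted in the thread.
# Node figures are treated as best-case densities (an assumption).

quoted_node_density = {        # MTr/mm2, as quoted earlier in the thread
    "Samsung 8nm": 61.18,
    "TSMC 7nm": 91.20,
}

achieved = {                   # chip -> (achieved MTr/mm2, node)
    "GA102": (45.06, "Samsung 8nm"),
    "Xbox SoC": (15.3e9 / 360.4 / 1e6, "TSMC 7nm"),  # node is an assumption
}

# Fraction of the quoted node density each chip actually reaches.
utilisation = {
    chip: density / quoted_node_density[node]
    for chip, (density, node) in achieved.items()
}

# Sanity check: GA102 transistor count implied by 628 mm2 at 45.06 MTr/mm2.
ga102_transistors = 628 * 45.06e6

for chip, (density, node) in achieved.items():
    print(f"{chip}: {density:.2f} MTr/mm2 on {node} "
          f"({utilisation[chip]:.0%} of the quoted node figure)")
print(f"GA102 implied transistors: {ga102_transistors / 1e9:.1f} B")
```

On these numbers neither chip gets anywhere near its quoted node figure, which is why comparing the two average densities directly only tells part of the story.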

sorry what?

Turing and Ampere users can enable ray tracing (hell, even Pascal ones, but perf is terrible since there are no RT cores) for equal (Turing) or better (Ampere) perf/visuals than consoles.

RX 57xx users? They can't. They need to buy RDNA2 GPUs.

And like I said before, PC gamers are all like 'pff consoles, PCs have better graphics anyway'. Seems to me like every single AMD user will have to upgrade to RDNA2 or have to admit that consoles have higher settings than their already obsolete GPUs.

When will we see benchmarks/reviews?

As will most Nvidia users, so I'm not sure what your point is?

Remind me why turing users have to upgrade please. Even rtx2060s will be able to run RT at console level, maybe with a 90% res scaling or so.