Did you even bother to watch the video from Hardware Unboxed? You realise that the difference between PCIe 3 x8 and x16 is 2% with a 2080 Ti... and x8 has 50% less bandwidth.

How much do you think you are going to get from PCIe4?

The 2080 Ti scales by 10% to 15% between PCIe 3 x16 and x8 across the low and max fps.

When you look at the 5700 XT, the higher the resolution, the larger the performance loss on PCIe 3. The difference in 1% lows is significant: some games seem to have the same peak fps, but the 1% low is higher under PCIe 4.

No matter how you cut it, the bigger bandwidth will facilitate faster transfers between the GPU and the system, especially under heavier workloads.
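For reference, here is a rough sketch of the theoretical link bandwidth being argued about. It assumes the published per-lane rates (8 GT/s for PCIe 3, 16 GT/s for PCIe 4) and 128b/130b line encoding, and it ignores packet and protocol overhead, so real-world throughput will be lower. The function name `pcie_bandwidth_gb_s` is just something I made up for illustration.

```python
# Theoretical one-direction PCIe bandwidth.
# Assumption: only line-encoding overhead is counted, not packet/protocol overhead.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}  # giga-transfers per second, per lane
ENCODING_EFFICIENCY = 128 / 130          # 128b/130b encoding (PCIe 3 and 4)


def pcie_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Return the theoretical bandwidth in GB/s for one direction."""
    gbit_per_lane = TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY
    return gbit_per_lane / 8 * lanes  # bits to bytes, then scale by lane count


for gen, lanes in [(3, 8), (3, 16), (4, 16)]:
    print(f"PCIe {gen} x{lanes}: {pcie_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

That works out to roughly 7.88 GB/s for PCIe 3 x8, 15.75 GB/s for PCIe 3 x16 and 31.51 GB/s for PCIe 4 x16, so each step doubles the available bandwidth. It says nothing about how much of that a given game actually uses.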

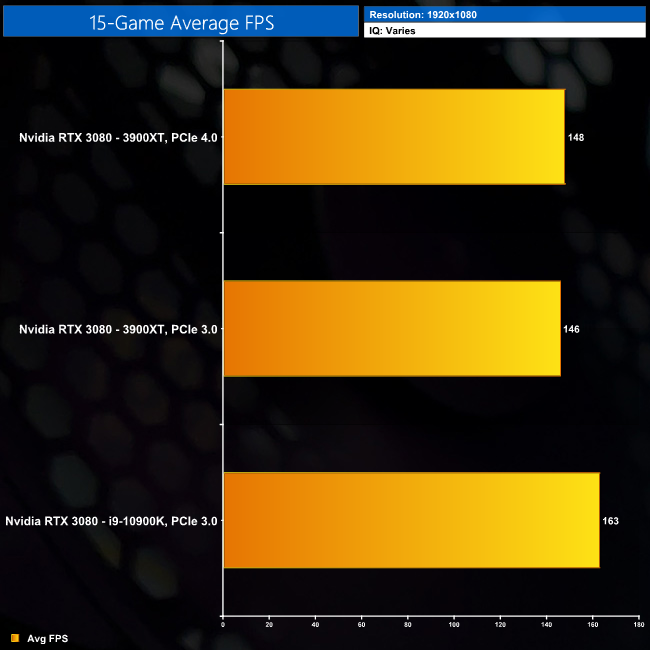

Say the 3080 has twice the performance of the 5700 XT. I wouldn't want to guess how that translates into fps, but if I had to, you'd be looking at around a 10%-20% loss in performance on AAA titles on average at PCIe 3 vs 4.

Although I feel I'm just around the corner from getting a good deal -.-
