Again, I think you don't understand my point. Of course the 12GB on the 70 Ti or the 10GB on the 3080 can be a limitation. I've managed to run out of memory with my 3090 in RDR2, without mods. The question is: does that happen at sane settings that you'd actually play with, and if it does, can it be fixed without sacrificing a ton of image quality? Until now, I don't think that was the case in any game.

There is hope. Although let's not turn Hogwarts into the only talking point. "It's just one game."

His point is valid, particularly for those using DLSS. I will use Trick as a good example:

At least people are recognising it, but if you're running at lower resolutions you're mitigating the problem, which we talked about at the very beginning.


10GB vram enough for the 3080? Discuss..

- Thread starter: Perfect_Chaos
- Status: Not open for further replies.


Soldato

- Joined: 24 Sep 2013
- Posts: 2,890
- Location: Exmouth, Devon


I don't think anyone EVER said a game couldn't run out of VRAM. It's people taking just-released games, which these days are basically alpha or beta, and then saying it's a hardware design fault because the game is so amazing that it actually deserves to use that much VRAM, making out that cards are obsolete. Well, they are if you want to play the beta version of a game, and nearly all the arguments around VRAM have been about newly released games. I have no issue with people being led to believe they should pay £1000+ for a card to play the beta, if they are stupid enough to believe that and base their decisions on such advice.

The VRAM argument revolves around new releases and the state they launch in. Some take the performance of a new game, released in a beta state at best, as the guiding light for future hardware requirements, and hats off to those standing by their beliefs. But of the three, four, five or six games (I don't know the exact number) since FC6 that had VRAM issues, how many still have issues with a 10GB 3080 now? Or do they all still perform exactly as they did at release, because they shipped perfectly optimized?

Are they all still having terrible issues relating to VRAM?

The websites that review games don't want to miss out on their share of the clicks for the beta review; it's revenue. This leads stupid people who watch these beta tests to jump up and down saying they need more VRAM. (I saw the same thing with BF4, with people running out to buy i7s when it ran fine on an i5 at release.) Same stupidity.

You need to make games for the hardware and not send them out in today's seemingly unplayable states. Many can't see this and think the fix is to spend more on cards with more VRAM to play the beta.

Or, just wait for the game to be half price - then it's usually been fixed and is much more playable.

Any seasoned gamer will know that these beta releases (or alpha, most of the time) used to be something you signed up to test, or you waited for a free beta. Or, as DICE still does, they charge for it: £3 for 10 hours.

You can make any game fall over by running out of VRAM if it has mods, but if you want to sell a game at MSRP then it needs to be much more polished than the drivel released today. People paying MSRP for these beta games must accept that, until the game is fixed, they are going to need a £1000+ card to get the best beta experience.

Or, as any sensible person will have realized, all games are poor on release, so carry on playing other games until the game in question is fixed, which is usually about when it's half price.

If folk want to believe that new releases warrant such high VRAM usage, then I hope they aren't the ones also moaning about prices. If you can't afford the card then you can't afford to play newly released games; that privilege falls only to those with high-end cards, and even then it's still a poor experience.

The way it's meant to be played.................

Soldato

- Joined: 21 Jul 2005
- Posts: 21,171
- Location: Officially least sunny location -Ronskistats


Totally agree with you on game releases. Recently I picked up RDR2, Cyberpunk, Days Gone, HZD and a few others in the £10-20 range, which is what I'm happy to pay for a game. The £40+ releases make no sense and are clearly being shipped with lots of bugs and glitches.

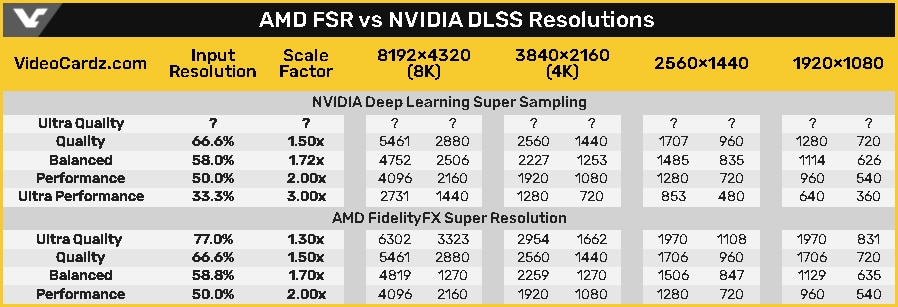

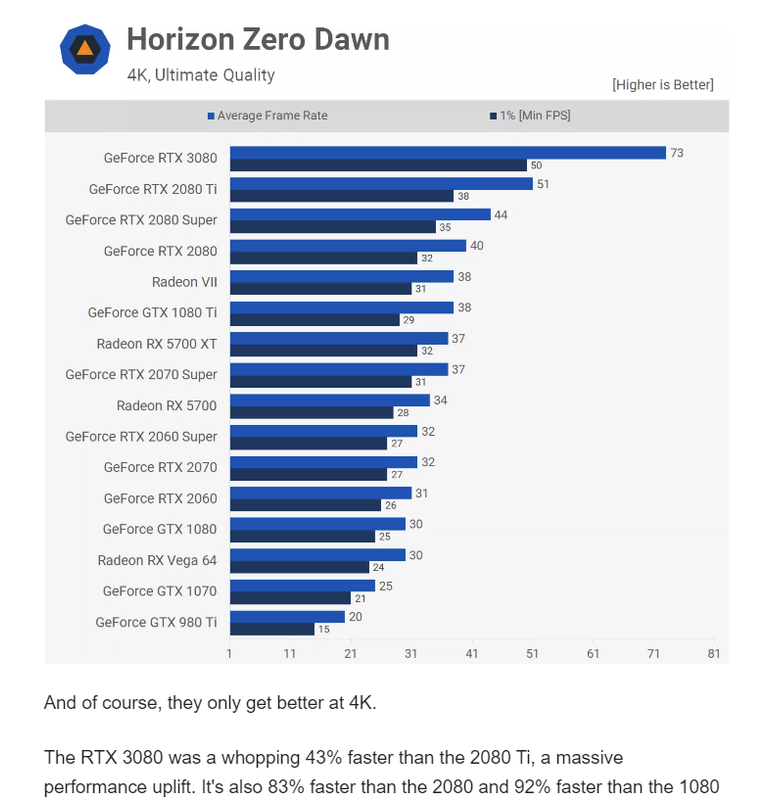

To counter your opening statement, I don't think anyone EVER said you couldn't turn down a setting or use compression etc., but we were talking about last gen's flagship card, not a mid-range 1060 where you would expect to reduce settings to play modern releases. The original point some were making was whether 10GB is a weakness, and at the time the easiest way to test that was to play some of those titles at native 4K, not at 1440p or with DLSS, which upscales and so defeats the purpose of checking for a limitation at the hardware level.

But then you are ignoring the fact that you have to turn down settings even on this year's flagship cards, regardless of VRAM. Why is it only a problem for the 3080?


It's a lot more civil these days, and the Dons love this thread.

Geez, can't we mothball this thread already?

It was a cut-down flagship: you got a GA102 die and top-tier performance within 10-15% of the 3090, but with less VRAM, which many were happy to compromise on given its asking price of £650.

The 2080 Ti was a £1000+ flagship, and it also needed settings dropped to hit 60fps in some games by the time the 3080 was released.

Soldato

- Joined: 24 Sep 2013
- Posts: 2,890
- Location: Exmouth, Devon


Pretty sure some were saying turning a setting down was a no-no and that the Ultra preset is what has to be used, the same as techpress reviews use, as everyone games with those settings.

I'm asking whether the same games that people keep citing as proof of hardware failure still show that the hardware is an issue now and that 10GB isn't enough, or whether they have been fixed and now work fine.

A weakness... well, 24GB is a weakness in Hogwarts then, hampering performance. Would the game run at 120fps at 4K with 48GB of VRAM?

VRAM will ALWAYS be a weakness in poorly performing games. That's why I'm asking: even at 4K, does the 10GB 3080 still suffer the same VRAM-crippled performance, or have the games been fixed? If it were a hardware design flaw, and the VRAM usage really was by design, all these games would still run as terribly as they did on release day.

Of course, if you want to play games that are a mess, a card with more VRAM will do better, so pay an extra £1000+ to play alpha/beta releases... or wait until they're fixed?

If none of the games have been fixed and they still run exactly as they did when people first raged about them, then 10GB isn't enough, as the games were perfect on release.

If these games ARE now running much better on 10GB at 4K (which was always the question), then the games have been fixed and, as many of us thought, the VRAM usage wasn't by design.

Even a broken game can be benchmarked across cards, but 60fps in Hogwarts on a 4090 is unacceptable. Is the game borked, or was it designed for 48GB gaming cards that don't exist yet?

Too many were saying that this is simply the direction games are going. I'd agree if the graphical fidelity were visibly better than in other games.

Frame generation is another spanner in the works, and I'm still on the fence about it. I get an extra frame, just rendered differently from pure raster. Do I accept it if it's good enough?

I also understand people saying it's cheating and that only pure raster will do. Still, better to have it than not, if it's implemented well?


Yeah. @montymint seems to have taken a liking to me in particular these days. Love you too, monty mate.

Pro tip guys: if you add OP to your ignore list you will no longer see this thread in the forum

Nah. I prefer seeing

Of course I was delighted to compromise at £650. It would be madness to suggest it was a terrible product just because mine has run out of VRAM (frame times collapsing and causing stutter because of the 10GB limit); it was the best (granted, very hard to get) GPU for £650, hands down!

My issue in this thread has always been that, despite my 3080 running out of VRAM and stuttering because of it, I've been continually drowned out by highly vocal users insisting that 10GB has not been and never will be exceeded, with the excuses: system error; user error; you don't know how it works; it doesn't have enough grunt to use 10GB; my video with zero active RT shows it not running out; the constant words put in my mouth; and, last but not least, the classic: those games are garbage anyway, just because they broke 10GB!

Yes, for £650 I couldn't be happier; I eventually got the best value £650 could buy. But as for people who wanted to buy one, read the nonsense that it's bulletproof and then went and spent £1200 to actually get an AIB card: feel sorry, not sorry.


Making stuff up again, I see, tommy. Please find these users and the posts where they said that.

You seem to just enjoy the wind-up. You even made a "12GB is not enough" thread as a wind-up and to try to bait.

Of course it can run out of VRAM. I managed to run my 3090 out of VRAM as well, in RDR2. So? What's the point?


Associate

- Joined: 1 Oct 2020
- Posts: 1,280

Just to answer the question posed by the thread, that's all. The problem is that the question doesn't have enough parameters. For me, the takeaway was as follows:

Is 10GB enough for 4K with everything on, full RT and no upscaling? No, not in all cases.

Is 10GB enough when you can use upscaling? Yeah, I think so.

Is 10GB enough for 2020 (when it was released)? Probably, just about? I'm not sure on this one; I'm happy, but I can see why others wouldn't be.

Is 10GB enough for the money spent? Again, I was happy, but others may not be. Moving forward, this could become the baseline for game requirements much sooner than with previous flagships (or high-end cards, your choice; I'm not getting into what is and isn't a flagship).

Is 10GB as much as 16GB? No. I have established this with some maths.

Does it matter? Does any of it? I have 10GB, but the vast majority of my games are nowhere near it. 8GB is fine for the vast majority, tbf. To paraphrase Frank Zappa:

Shut up and play yer games!

I agree with everything, but the thing is, is any card, regardless of VRAM, enough for 4K ultra? No, not in all cases. It's not something that only applies to the 3080.


Associate

- Joined: 1 Oct 2020
- Posts: 1,280

That isn't "the thing". That is a separate "thing". I agree, but that isn't the question.

I've been lucky with mine. Only once have I stumbled into a VRAM issue, and that was a single room in GTFO with a ridiculous amount of vegetation, where performance plummeted. The developers patched it, as something was definitely wrong.

I play at 3840x1600, which is about 25% smaller than UHD "4K".
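For anyone wanting to check that "about 25% smaller" figure, here is a minimal Python sketch comparing raw pixel counts. The two resolutions come from the post; treating "smaller" as "fewer pixels per frame" is my assumption, and pixel count is only a rough proxy for VRAM use, since texture memory does not scale with output resolution.

```python
# Compare pixel counts of an ultrawide 3840x1600 panel and UHD "4K" (3840x2160).
def pixel_count(width: int, height: int) -> int:
    """Return the number of pixels rendered per frame at a given resolution."""
    return width * height

ultrawide = pixel_count(3840, 1600)  # 6,144,000 pixels
uhd = pixel_count(3840, 2160)        # 8,294,400 pixels

# Fraction of UHD's pixel count that the ultrawide does not have to render.
reduction = 1 - ultrawide / uhd

print(f"3840x1600: {ultrawide:,} px")
print(f"3840x2160: {uhd:,} px")
print(f"Reduction: {reduction:.1%}")  # prints "Reduction: 25.9%"
```

On these numbers the ultrawide renders roughly 26% fewer pixels per frame, so "about 25%" is in the right ballpark.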

Perfect card for me at the time, coming from a 1080 Ti. Got the FE at £650, an absolute bargain compared to the current line-up.

Anybody insisting on playing the latest games maxed out at UHD "4K" with RT really needs to go for the top card each gen.


I sense bait but I'll bite anyway.....

Problem is, assuming you aren't trolling... you don't like it when you are proven wrong, and you refuse to acknowledge that there is more going on. It's not a clear-cut "oh, it's run out of VRAM" or "oh, it's using 14GB of VRAM, therefore 10GB isn't enough", and you refuse to acknowledge any other benchmarks or users' experiences if they don't fit the "10GB isn't enough" narrative.

Remember how you said the RE2 remake "******** the bed", yet it turned out it didn't? I uploaded a video showing nice long gameplay with no issues in the problematic spot, and likewise all the official benchmarks etc. showed no issues, although DF highlighted RDNA 2 ******** the bed (even with optimised settings; sorry, I forgot, they don't count as they're Nvidia shills, or maybe when it comes to AMD hardware it's a "local system issue"), but I digress... Then you went on to say something like "oh, maybe they fixed it then".

Same with MSFS: you went on to mention issues, I uploaded 4K footage showing similar fps (with no frame latency issues) to GPUs with more VRAM and pointed out that the patch notes mention VRAM usage being improved and so on. You took that as "gimping the game to make it work within a 10GB buffer", then went on to say "oh wait, hang on, it's the modded versions of MSFS that cause issues with 10GB"...

Likewise with all the other games that have been mentioned: all debunked with evidence from various sources, and the games that run like **** also run like **** on the best of the best, so "zOMG VRAM not enough!" is a moot point. Should have paid the extra £750 to get that lovely 25fps instead of 20fps...

Sorry, but I find it hard to take one-liners and so on seriously when I and many others have yet to encounter all these so-called issues in the "many" games caused by the 10GB of VRAM, and the benchmarks show no issues.

The fact that, when I or others post hard evidence debunking such claims, the thread goes quiet until the usual lot bring up the next problematic title or situation says it all.

The only one so far is FC6, but as I said, the only way I could get it to **** the bed was by sabotaging myself: turning ReBAR on and refusing to use FSR, with max settings at 4K in the benchmark. Also, the video did have active RT; shadows are cast by the sun in FC6, although it is highly gimped RT, so a bit pointless anyway. Oh, and the only video to date still showing a 100% genuine VRAM issue is mine in CP2077 when using several 4-8K texture packs.


Exactly, pretty obvious now, but what do you expect when most people take the likes of MLID's one-liner comments seriously with no evidence?

I and others have openly pointed out where the "genuine" VRAM issues are, such as when modding a game heavily, playing at high res and refusing to use upscaling tech, but nope... doesn't fit the narrative.

The point you, I and others have made has proved true, as history shows: we have run out of grunt before VRAM (in 99% of cases), and the fact that a 3090 ***** the bed in the newest titles proves this.

Bill summed it up perfectly. Bill is sensible, be like Bill. The thread really should be locked now; it's a boring topic and a never-ending broken record (from everyone) now.

It would be nice if this thread disappeared, but that's meaningless if people keep posting comments about the memory issue in other threads continuously. I won't point out names, but you know who you are.

Bill summed it up perfectly; now it's time to (Frozen) let it goooo and give the mods a break.
