I've had my 3900X since August and haven't really had many issues with it, but after reading a few CPU game benchmarks recently, it does appear that my minimum frame rates are lower than they should be.

I ran a few benchmarks when I first got it, mainly to check that the CPU cooling was OK. I play at 4K, so I'm always going to be GPU limited, and it wasn't worth benchmarking a ton of stuff. At the time I noticed that in some games my minimum frame rate was a lot lower than on the Intel 5930K I used for many years, but I didn't think too much about it because it wasn't noticeable when playing games.

But Red Dead Redemption II just came out, and benchmarking it, I'm noticing the same trend again.

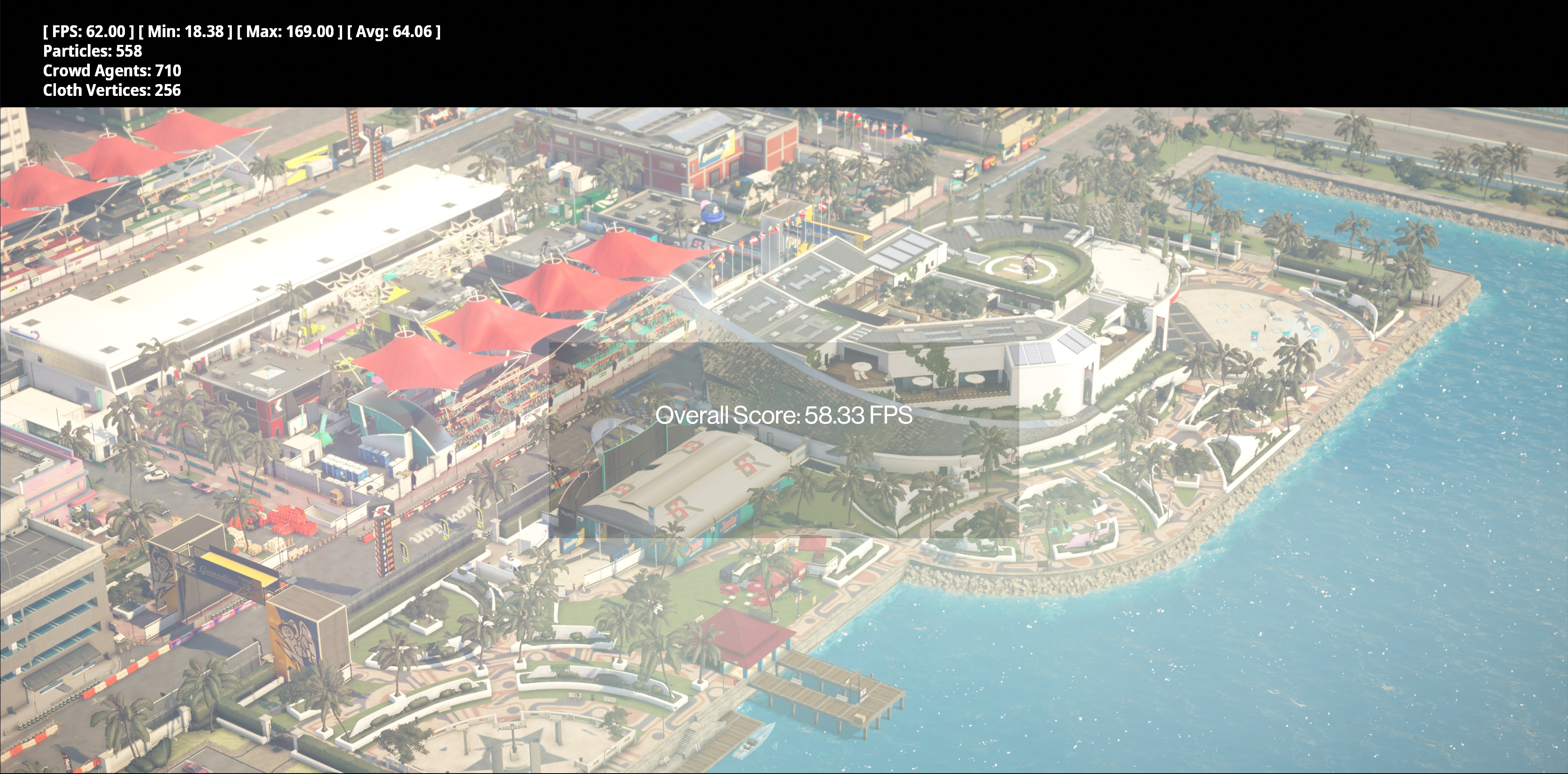

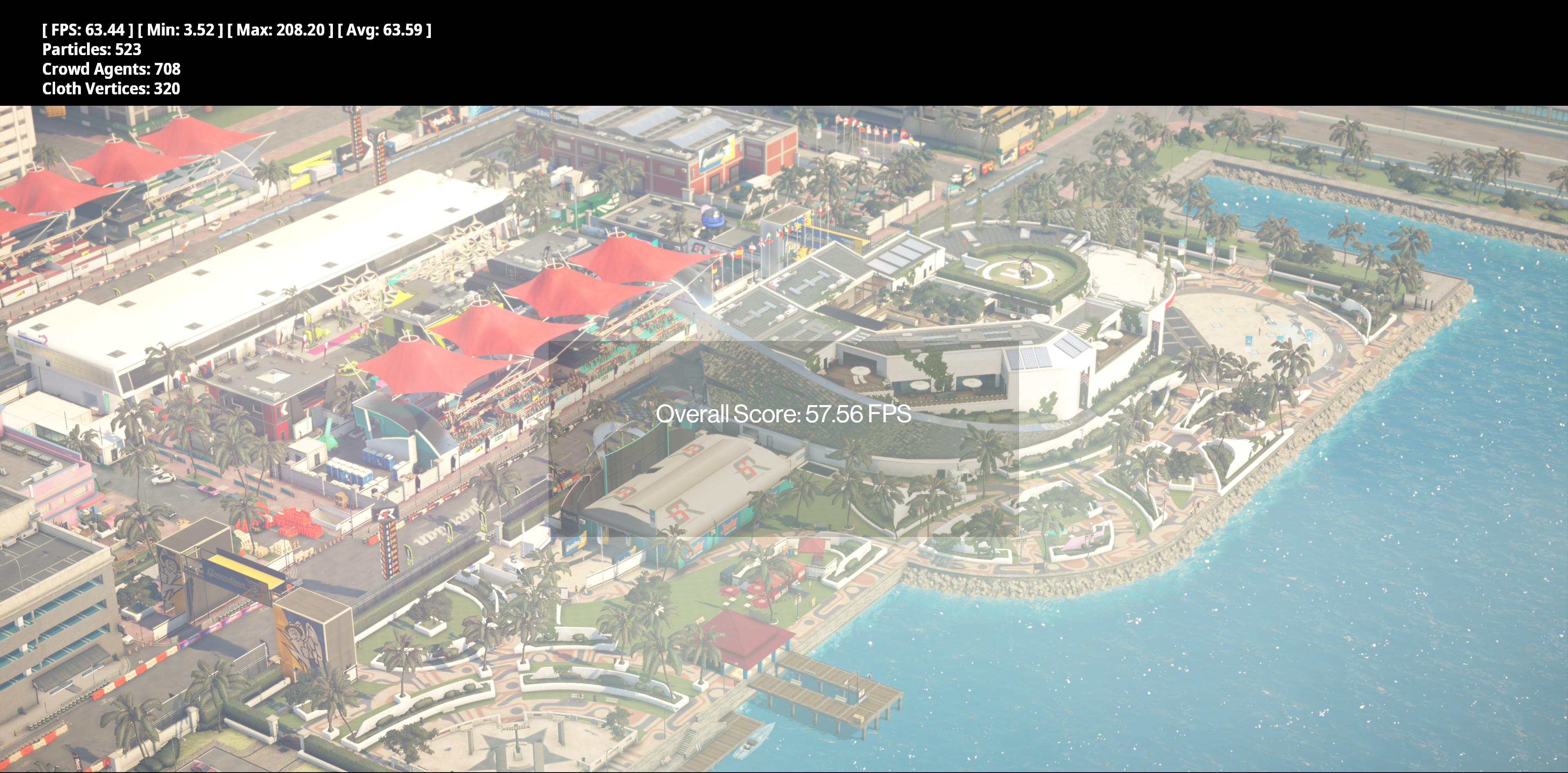

An example:

Hitman 2 Miami benchmark (5930K) - Min 18.38 fps

Hitman 2 Miami benchmark (3900X) - Min 3.52 fps

In the Red Dead Redemption II in-game benchmark, I've done two runs, both on a fresh install of Windows 10 with the latest F10a BIOS (AMD AGESA 1.0.0.4 B), and my minimum frame rates at 4K/Ultra settings were 7 fps and 6 fps, respectively.

The average was about 43 fps both times, which is more in line with what others are getting with the same 2080 Ti GPU.

According to Gamers Nexus, though, their 1% and 0.1% lows didn't drop below 28.5 fps at similar settings.

I don't notice big spikes or drops in games, so perhaps I shouldn't even worry about it, but it does seem like something isn't quite right.

What should I be looking at?

Spec in sig

18 FPS vs 3 FPS?

Is that sustained? I mean, are you actually getting sustained periods of 3 FPS on the 3900X and 18 FPS on the 5930K?

Or is it, like a lot of benchmarks, just picking up the odd 200 ms hitch on one or two frames and registering that as the min FPS?

This is why reviewers use 1% and 0.1% lows. Some of them don't even use 0.1% lows, in which case it's just the average of the lowest frame rates across 1% of the run. That does away with a single "frame hitch" becoming the registered low.
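To make the min-vs-1%-low point concrete, here's a rough Python sketch. It assumes a frame-time log in milliseconds (the 23 ms / 200 ms numbers are made up to mimic one hitch) and follows the definition above: the 1% low is the average fps of the slowest 1% of frames. Capture tools don't all agree on this; some report a percentile frame time instead, so don't expect it to match any particular tool exactly.

```python
# Sketch: how "min FPS" differs from 1% / 0.1% lows for one frame-time log.
# Assumption: a low = average of the per-frame fps values of the slowest
# 1% (or 0.1%) of frames, as described above; other tools use percentiles.

def fps_from_ms(frame_time_ms: float) -> float:
    """Convert a single frame time in milliseconds to an instantaneous fps."""
    return 1000.0 / frame_time_ms


def summarize(frame_times_ms: list[float]) -> dict[str, float]:
    n = len(frame_times_ms)
    slowest_first = sorted(frame_times_ms, reverse=True)

    def low(per: int) -> float:
        # Average fps over the slowest n/per frames (always at least one frame).
        k = max(1, n // per)
        worst = slowest_first[:k]
        return sum(fps_from_ms(t) for t in worst) / k

    return {
        # Single slowest frame: one hitch is enough to set this.
        "min": fps_from_ms(slowest_first[0]),
        # Whole-run average: total frames divided by total seconds.
        "avg": n / (sum(frame_times_ms) / 1000.0),
        "1% low": low(100),
        "0.1% low": low(1000),
    }


if __name__ == "__main__":
    # Hypothetical run: 999 frames at 23 ms (~43 fps) plus one 200 ms hitch.
    run = [23.0] * 999 + [200.0]
    for name, value in summarize(run).items():
        print(f"{name}: {value:.1f} fps")
```

With 1,000 frames and a single hitch, both the min and the 0.1% low land on 5 fps (0.1% of 1,000 frames is that one frame), while the 1% low stays near 40 fps.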

*pats mine on the cooler*

I'm just hoping SSDs come down in price so I can keep all my games on them.