Soldato

- Joined: 8 Jun 2018
- Posts: 2,827

> What are you talking about?

I'm talking about die size.

> Nvidia lost their efficiency per mm² a long time ago? That they need to pack on more transistors to maintain a competitive edge? Let's look at those claims by comparing some like-for-like GPUs.

I consider 2-3 years a long time ago.

That's quite the fishing expedition. Since it's apparent that you won't be buying those cards this generation or next, going back that far has little relevance to Navi, Turing and Ampere (which is what I was referring to). Let's try to keep the conversation current.

> 28nm: Fiji and 980 Ti.

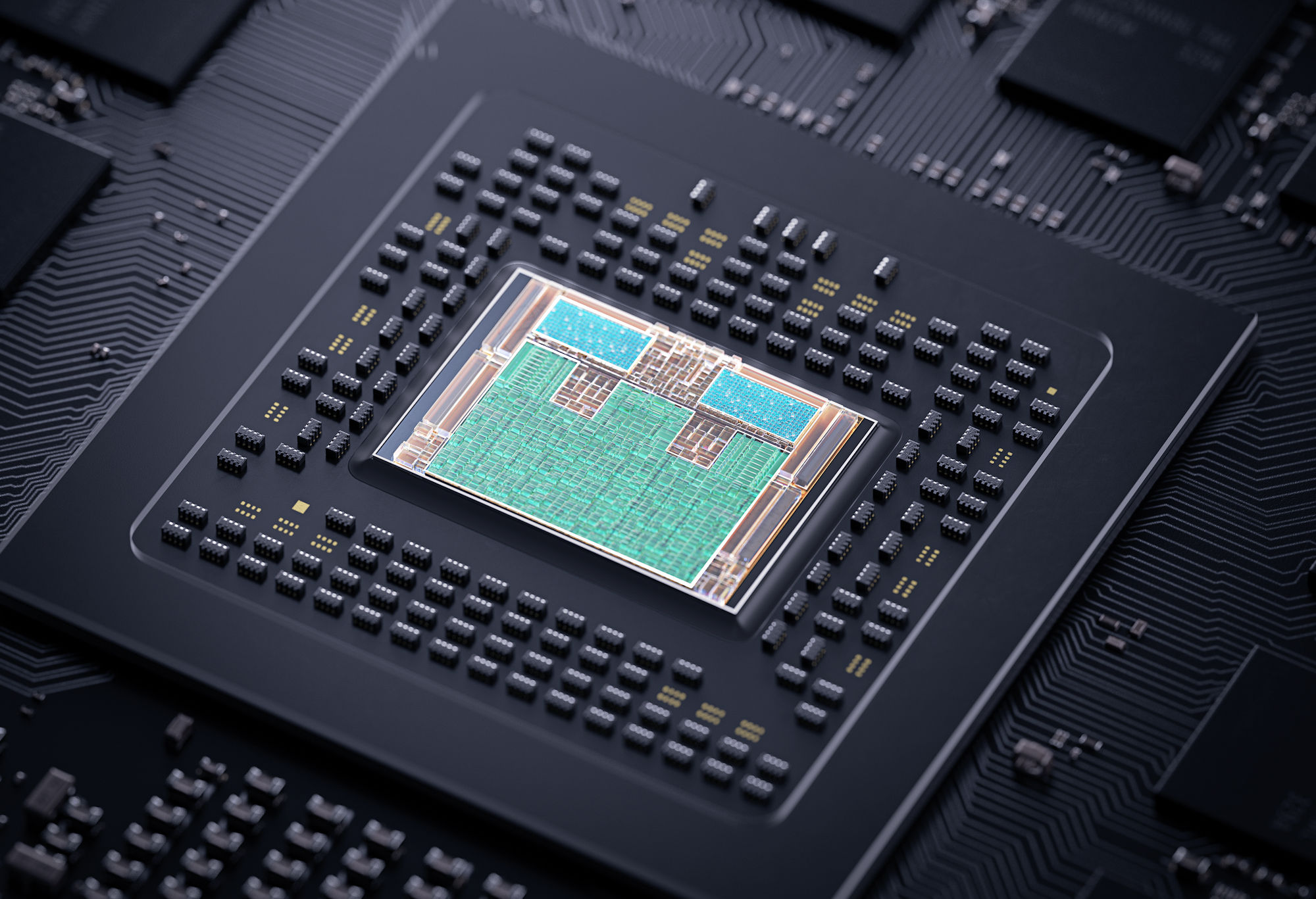

Fiji: - 596mm2 and 8.9 Billion Transistors

980 T:.- 601mm2 and 8 Billion Transistors.

So Nvidia has the edge here. And not only has the edge in efficiency per mm2 but also has better performance and consumes a lot less power.

> 14/16nm: Pascal and Vega.
>
> Vega 64: 486 mm² and 12.5 billion transistors
>
> 1080: 314 mm² and 7 billion transistors
>
> So Nvidia is far more efficient per mm². Performance is about the same, with Vega getting a little better towards the end. I'd also say power consumption is roughly the same, since you can undervolt the Vega cards.

> The current situation is that Nvidia are still on a 12nm process and AMD are on a 7nm process. Also, AMD's current GPUs lack support for any of the DirectX 12 Ultimate features. So you won't be able to make any meaningful comparison of efficiency until both companies are on the same process node and offer the same features (DX12 Ultimate).
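
For reference, dividing the quoted transistor counts by the quoted die areas gives transistor density. This is a minimal sketch using only the figures above; note that density is not performance per mm², which would need benchmark numbers the thread doesn't give.

```python
# Die figures exactly as quoted in the thread:
# name -> (die area in mm², transistor count in billions)
GPUS = {
    "Fiji": (596, 8.9),
    "980 Ti": (601, 8.0),
    "Vega 64": (486, 12.5),
    "1080": (314, 7.0),
}


def density_mtr_per_mm2(area_mm2: float, transistors_bn: float) -> float:
    """Transistor density in millions of transistors (MTr) per mm²."""
    return transistors_bn * 1000 / area_mm2


if __name__ == "__main__":
    for name, (area, transistors) in GPUS.items():
        print(f"{name:8s} {density_mtr_per_mm2(area, transistors):5.1f} MTr/mm²")
```

On these figures Fiji works out to about 14.9 MTr/mm² against 13.3 for the 980 Ti, and Vega 64 to about 25.7 against 22.3 for the 1080, so the AMD dies pack more transistors into each mm² in both generations.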

> I think most people on this forum don't mind the power consumption as long as the performance is there. And that's where AMD have been failing. Their GPUs use a lot more power for less performance than their Nvidia counterparts. Or to put it another way, if AMD came out with a GPU that smashed the 3080 Ti, nobody here would care if it used 300 W.

This is a myopic statement. GPUs require power regardless, be it more or less. And like you said, most don't mind the power consumption as long as the performance is there. But it isn't just about how "you" define that performance. Why? Because you left out one very important aspect: price. So it's not power consumption that takes the focal point, it's price, which makes that statement moot.

> This whole section of your post is inaccurate. Oh, what you are saying about more dies per wafer being cheaper etc. is correct. But the basis for it is all wrong. You are looking at Navi cards on a 7nm process and comparing them to Turing on a 12nm process. Do you really think AMD's big Navi will be competitive with the 3080 Ti and still have the same small die size as the 5700 XT? Not a hope.

You can't say it's accurate and then say it's inaccurate just for the sake of saying "it's inaccurate". It's not about differences in microarchitecture on the wafer, as you speciously claim. It's about the manufacturing cost per wafer, which is what I was referring to. We know that Nvidia is going to 7nm. It's pretty much a given that Ampere's die sizes will mimic Turing's (and yes, that is my opinion). Let's stay on topic here.
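
The "more dies per wafer is cheaper" point is easy to put numbers on. This is a rough sketch assuming a 300 mm wafer and the common edge-loss approximation (dies ≈ pi*r^2/A - pi*d/sqrt(2*A)); it ignores scribe lines, edge exclusion and defects, so treat the counts as ballpark figures only.

```python
import math


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Approximate gross (candidate) dies per wafer.

    Uses the common edge-loss approximation
        pi * r^2 / A  -  pi * d / sqrt(2 * A)
    and ignores scribe lines, edge exclusion and defects.
    """
    radius = wafer_diameter_mm / 2
    wafer_area = math.pi * radius ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(wafer_area / die_area_mm2 - edge_loss)


if __name__ == "__main__":
    for area in (360, 545):  # die areas mentioned in this thread
        print(f"{area} mm²: ~{dies_per_wafer(area)} candidate dies per 300 mm wafer")
```

With the two die areas mentioned in this thread, this gives 161 candidate dies for 360 mm² and 101 for 545 mm².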

> We can even work it out. The Xbox Series X has an RDNA 2 GPU that's supposedly about the same performance as the 2080 Super (545 mm²). The GPU in the Xbox is 360 mm². But again, that's 12nm vs 7nm. So if the 3080 Ti is 30% faster than the 2080 Ti, big Navi would need to be over 500 mm² to compete. And that's just in rasterized performance. They will also need to be competitive in ray-traced performance.

Performance isn't what I'm discussing. I clearly stated that manufacturing cost is where AMD seems to be targeting Nvidia. As you pointed out, AMD found a way to get a 360 mm² die to perform about the same as a 2080 (545 mm²) on a console. That's the point! AMD is focusing on area (real estate); Nvidia, on performance. Yet AMD is catching Nvidia on performance while still accounting for area.
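
The quoted "over 500 mm²" estimate can be reproduced as simple arithmetic. This sketch assumes performance scales linearly with die area from the 360 mm² Xbox figure, and it needs a 2080 Ti vs 2080 Super performance ratio that the thread doesn't state, so `ti_over_super` is a hypothetical input. It also ignores that the 360 mm² figure is usually cited for the whole Series X SoC, CPU cores included.

```python
# Figures quoted in the thread
XBOX_AREA_MM2 = 360.0   # "The GPU in the Xbox is 360mm2" (~2080 Super performance)
AMPERE_UPLIFT = 1.30    # "if the 3080Ti is 30% faster than the 2080Ti"


def required_area_mm2(ti_over_super: float,
                      base_area_mm2: float = XBOX_AREA_MM2,
                      uplift: float = AMPERE_UPLIFT) -> float:
    """Die area needed to match a 3080 Ti, ASSUMING performance scales
    linearly with area from the Xbox baseline.

    ti_over_super is a hypothetical 2080 Ti / 2080 Super performance ratio;
    the thread does not give one.
    """
    target_vs_2080_super = ti_over_super * uplift
    return base_area_mm2 * target_vs_2080_super


if __name__ == "__main__":
    for ratio in (1.10, 1.15, 1.20):
        print(f"2080 Ti = {ratio:.2f}x 2080 Super -> ~{required_area_mm2(ratio):.0f} mm²")
```

Ratios from 1.10 to 1.20 give roughly 515 to 562 mm², which is consistent with the "over 500 mm²" claim under these (strong) assumptions.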

> And with all this extra size and features will come extra expense. Consider that the 5700 XT had a small die and lacked those features, yet cost $399 at release. And, as you say, large dies cost more, so it could easily have an $800-plus price tag.

Not extra expense, area expense. The cost of those GPUs per wafer, along with functional GPUs per wafer etc., gives a total cost to manufacture, which, as we know, trickles down to the consumer. What you pay for is not so much a luxury tax as the actual cost to manufacture, which will always be higher for a bigger die. So no, Nvidia won't be able to cut prices if AMD does have an answer to the Ampere Titan (IMO), which is the rumor. That's why I drew the comparison between AMD vs Intel and AMD vs Nvidia in my earlier post.
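
To show how "functional GPUs per wafer" feeds into cost, here is a sketch combining the usual gross-die approximation with a simple Poisson yield model (yield = exp(-D0 * A)). The wafer price and defect density are hypothetical placeholders, not real foundry figures, and real yield models are more involved.

```python
import math

# HYPOTHETICAL placeholders, not real foundry numbers
WAFER_COST_USD = 10_000.0
DEFECT_DENSITY_PER_CM2 = 0.1


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Gross dies per wafer: pi*r^2/A - pi*d/sqrt(2A), floored."""
    radius = wafer_diameter_mm / 2
    return math.floor(math.pi * radius ** 2 / die_area_mm2
                      - math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2))


def poisson_yield(die_area_mm2: float,
                  d0_per_cm2: float = DEFECT_DENSITY_PER_CM2) -> float:
    """Expected fraction of defect-free dies, exp(-D0 * A), with A in cm²."""
    return math.exp(-d0_per_cm2 * die_area_mm2 / 100.0)


def cost_per_good_die(die_area_mm2: float,
                      wafer_cost_usd: float = WAFER_COST_USD) -> float:
    """Wafer cost spread over the expected number of functional dies."""
    good_dies = dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2)
    return wafer_cost_usd / good_dies


if __name__ == "__main__":
    for area in (360, 545):
        print(f"{area} mm²: yield {poisson_yield(area):.0%}, "
              f"~${cost_per_good_die(area):.0f} per good die")
```

With these placeholder inputs the 545 mm² die comes out at roughly $171 per good die against roughly $89 for 360 mm²: about 1.9x the cost for 1.5x the area, because a bigger die both fits fewer times on a wafer and is more likely to catch a defect.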

As for DX12 Ultimate, sure, it's unfortunate that Navi 10 users don't have it. However, it won't be seen in games for some time yet. Let's not confuse the announcement of DX12 Ultimate with a game released today that uses DX12 Ultimate.

I know, me too
