Yes it has, if you are basing the 40% IPC gain on Zen vs Carrizo.

Carrizo is Excavator.

Don't blame me, I didn't bring up Zen in the thread. I'm just stating facts and stopping people getting AMD hyped.

Very little of what you've stated is fact. First, Carrizo is optimised for the process and the market segment it's being used in. You choose the right process and sometimes use different metals and different transistor designs for different target frequencies. Carrizo is optimised for lower leakage, better idle power cut-off and lower clock frequencies. You could EASILY take the exact same architecture, even on the same node and largely the same process, and use a different transistor design, different spacing and a different number of metal layers to significantly change its electrical behaviour and make it much more efficient at higher clocks.

So no, it isn't true that Carrizo "doesn't have any power improvements"; you are comparing apples with oranges when you compare chips manufactured for different purposes. An architecture doesn't work one way and one way only. Layout, the exact node and where you target performance all have a very large effect on power consumption at various clocks.
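To make the "same architecture, different physical target" point concrete, here's a rough sketch using the textbook power model (dynamic power ≈ activity × C × V² × f, plus leakage current × V). Every number below is made up for illustration: the capacitance, leakage, voltage/frequency slopes and max clocks are assumptions, not Carrizo or any real chip's figures. The only thing it's meant to show is that a low-leakage implementation can win at low clocks while a high-clock implementation of the same design wins higher up.

```python
import math
from dataclasses import dataclass


@dataclass
class Implementation:
    """One hypothetical physical implementation of the same core design."""
    name: str
    cap_nf: float     # effective switched capacitance in nF (assumed)
    leak_a: float     # leakage current in amps (assumed)
    v_base: float     # supply voltage needed at 1 GHz (assumed)
    v_per_ghz: float  # extra volts per GHz above 1 GHz (assumed linear V/f curve)
    f_max_ghz: float  # highest clock this layout/transistor mix can reach (assumed)

    def voltage(self, f_ghz: float) -> float:
        return self.v_base + self.v_per_ghz * max(0.0, f_ghz - 1.0)

    def power_w(self, f_ghz: float, activity: float = 0.2) -> float:
        """Dynamic (activity * C * V^2 * f) plus static (I_leak * V) power."""
        if f_ghz > self.f_max_ghz:
            return math.inf  # this implementation cannot reach that clock at all
        v = self.voltage(f_ghz)
        dynamic = activity * self.cap_nf * v * v * f_ghz  # nF * GHz cancel, result in W
        static = self.leak_a * v
        return dynamic + static


# Same architecture, two hypothetical physical targets.
low_leak = Implementation("low-leakage mobile", cap_nf=10, leak_a=0.5,
                          v_base=0.80, v_per_ghz=0.25, f_max_ghz=3.5)
high_clk = Implementation("high-clock desktop", cap_nf=10, leak_a=3.0,
                          v_base=0.85, v_per_ghz=0.10, f_max_ghz=5.0)


def more_efficient_at(f_ghz: float) -> str:
    """Name of whichever implementation draws less power at this clock."""
    return min((low_leak, high_clk), key=lambda imp: imp.power_w(f_ghz)).name


for f in (1.0, 2.0, 3.5, 4.5):
    print(f"{f} GHz: {low_leak.power_w(f):.2f} W vs {high_clk.power_w(f):.2f} W"
          f" -> {more_efficient_at(f)}")
```

With these assumed numbers the low-leakage version draws less at 1 to 2 GHz, the high-clock version draws less at 3.5 GHz, and only the high-clock version reaches 4.5 GHz at all. Comparing one against the other and concluding "the architecture has no power improvements" says more about the target than the architecture.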

Also, IPC has absolutely everything to do with clock speed, so anyone stating it doesn't (which is both of you) doesn't know what they are talking about.

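High-IPC designs generally want latency as low as possible, which means a short pipeline. Why? Because the CPU does the least useful work when it has mispredicted a branch and has to flush the pipeline and fetch instructions from the correct path, and the deeper the pipeline, the more cycles that refill takes. So you want as short a pipeline as you can get, and pipeline length is directly linked to the clock speeds an architecture can achieve: fewer stages means more logic per stage, so each cycle has to be longer.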
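A back-of-the-envelope way to see the mispredict cost: add the average flush penalty to the ideal CPI. This is a simplified sketch assuming every mispredict costs roughly one cycle per pipeline stage; the workload numbers (20% branches, 5% mispredicted, ideal CPI 0.5) and the stage counts are hypothetical, chosen only to compare a short and a long pipeline running the same code.

```python
def effective_cpi(base_cpi: float, branch_frac: float,
                  mispredict_rate: float, penalty_cycles: int) -> float:
    """Cycles per instruction once branch mispredictions are included.

    Simplifying assumption: every mispredicted branch flushes the pipeline and
    costs about one cycle per stage before useful work resumes.
    """
    return base_cpi + branch_frac * mispredict_rate * penalty_cycles


# Hypothetical workload: 20% branches, 5% of them mispredicted, and a core
# that would otherwise sustain 2 instructions per cycle (CPI 0.5).
for stages in (14, 31):
    cpi = effective_cpi(0.5, 0.20, 0.05, stages)
    print(f"{stages:>2} stages: CPI {cpi:.3f}, IPC {1 / cpi:.3f}")
```

Same predictor, same code, but the longer pipeline loses a noticeably bigger chunk of its IPC to refills.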

Pipeline depth and the clock speed an architecture will run at are intrinsically linked. A wider core needs larger prediction logic to keep it filled efficiently and consistently, and it wants a short pipeline to go with it, otherwise it just isn't optimised. A very high clock speed design will want more pipeline stages and a narrower core.
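Why a wider core leans so hard on the predictor can be sketched with a simple run-length model: between mispredicts the core issues at full width, then stalls for the refill penalty. The model and all its parameters are hypothetical simplifications (real cores rarely sustain full width), but it shows the trend: doubling width doesn't double IPC unless the predictor gets better or the penalty (pipeline) gets shorter.

```python
def achieved_ipc(width: int, branch_frac: float, mispredict_rate: float,
                 penalty_cycles: int) -> float:
    """Idealised IPC for a core that issues `width` instructions every cycle
    until a mispredict, then stalls `penalty_cycles` while the pipeline refills."""
    run_length = 1.0 / (branch_frac * mispredict_rate)  # instructions between mispredicts
    cycles = run_length / width + penalty_cycles
    return run_length / cycles


base = dict(branch_frac=0.20, penalty_cycles=14)  # hypothetical workload and pipeline
narrow = achieved_ipc(2, mispredict_rate=0.05, **base)
wide = achieved_ipc(4, mispredict_rate=0.05, **base)
wide_better_bp = achieved_ipc(4, mispredict_rate=0.025, **base)  # predictor halves mispredicts

print(f"2-wide: IPC {narrow:.2f} ({narrow / 2:.0%} of peak)")
print(f"4-wide: IPC {wide:.2f} ({wide / 4:.0%} of peak)")
print(f"4-wide, better predictor: IPC {wide_better_bp:.2f} ({wide_better_bp / 4:.0%} of peak)")
```

In this toy model the 4-wide core uses a smaller fraction of its width than the 2-wide one until the predictor improves, which is the "bigger core needs bigger prediction logic" point.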

IPC, clock speed, core width and the amount of supporting logic needed to keep the core filled are all very heavily linked. There is a reason they didn't just make a 5GHz Core 2 Duo. P4: long pipeline, high clock speed, narrow core. Core 2 Duo: wider core, short pipeline, lower clock speed.
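Putting the clock side and the IPC side together gives the classic "optimum pipeline depth" trade-off: more stages shorten the cycle (total logic delay split across stages, plus a fixed per-stage latch overhead), but raise the mispredict penalty. This sketch uses made-up delays and rates and ignores power entirely, which in practice also limits how far clocks can be pushed. Its only purpose is to show that throughput is IPC × clock and that pushing either one alone eventually backfires.

```python
def clock_ghz(stages: int, logic_ns: float = 4.0, latch_ns: float = 0.2) -> float:
    """Clock from pipeline depth: the total logic delay (assumed) is split across
    `stages`, and every stage pays a fixed latch/clock-skew overhead (assumed)."""
    return 1.0 / (logic_ns / stages + latch_ns)


def ipc(stages: int, base_cpi: float = 0.5, mispredicts_per_instr: float = 0.02) -> float:
    """IPC with a mispredict penalty assumed to grow one cycle per stage."""
    return 1.0 / (base_cpi + mispredicts_per_instr * stages)


def throughput_gips(stages: int) -> float:
    """Billions of instructions per second = IPC * clock (GHz)."""
    return ipc(stages) * clock_ghz(stages)


best = max(range(5, 61), key=throughput_gips)
for stages in (10, 14, best, 31, 50):
    print(f"{stages:>2} stages: {clock_ghz(stages):.2f} GHz x IPC {ipc(stages):.2f}"
          f" = {throughput_gips(stages):.2f} GIPS")
```

Deeper pipelines always clock higher here and always have lower IPC; the sweet spot sits in between and moves with the assumed latch overhead and mispredict rate.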

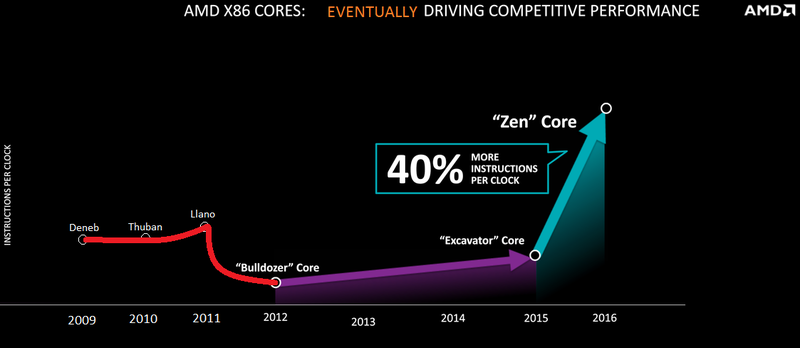

When AMD goes out there and says architecture X is y% faster than architecture Z, they aren't talking about a specific implementation but about the architecture in general, because, as above, you can take any architecture and change the layout, transistors or node to drastically change its performance. They are saying that a mobile Zen aimed at low power would have 40% higher IPC than a comparable Excavator design, and that a Zen FX built for desktop and high power would have 40% higher IPC than an Excavator built for desktop/high power.
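The arithmetic behind "compare like with like" is just performance = IPC × clock. A minimal sketch, with the 40% figure taken from the thread and the clock speeds purely hypothetical:

```python
def relative_performance(ipc_gain: float, clock_new_ghz: float,
                         clock_old_ghz: float) -> float:
    """Speed-up of a new core over an old one = IPC ratio * clock ratio."""
    return (1.0 + ipc_gain) * (clock_new_ghz / clock_old_ghz)


# Like for like: both parts at the same (hypothetical) 3 GHz.
print(relative_performance(0.40, 3.0, 3.0))
# Apples vs oranges: a 2 GHz low-power part against a 4 GHz desktop part.
print(relative_performance(0.40, 2.0, 4.0))
```

The same 40% IPC gain shows up as a 1.4× speed-up when the clocks match, but the low-power part comes out slower than the old desktop chip once you compare across segments, which says nothing about the architecture's IPC.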