Caporegime · Joined: 9 Nov 2009 · Posts: 25,842 · Location: Planet Earth

Actually, the opposite. Relative to asset quality, it's much lighter on VRAM than UE4 (thanks to Nanite) and than most AAA games released up to now. I'd worry more about custom engines in that regard (in particular console ports, cough Sony cough). Its problems are the perennial ones for UE: how to handle open-world asset streaming properly without breaking the game, and how to make better use of the CPU. Basically, it's about defeating the stutterfest that plagues it. That goes double for smaller external devs, because there are a lot of ways to trip yourself up as a dev using UE, and it's much harder to actually modify it to run well for your use case when you don't have an elite programming team that specializes in it (like The Coalition).

CDPR, for example, has completely solved this problem for themselves, and now that they're on UE5 and major contributors to it, perhaps that will put UE5 on the right track. Ubisoft also does very well on this front (though their older approaches tend to lean heavier on VRAM), but then they have huge teams and tune their engines specifically for open-world games.

I would say that, out of all the current open-world games that have at least some form of RT, the one with the best mix of HQ assets, good LoD management and no unavoidable stutter is Watch Dogs: Legion. And if we look at how that handles VRAM, we can see it's eminently playable even on an 8 GB card (with minimal difference vs. ultra HD textures and max streaming budget), so 12 GB will surely be fine for the remainder of this generation. In fact, the cross-gen titles that aren't completely gimped to low settings (or missing some key graphical feature entirely, like basic GI) already prove more than enough to stress even the PS5/XSX, so we're not going to see games push memory requirements that much higher when all the assets are made with the consoles in mind. If anything, we see stuff like path tracing being pushed as the stressor option on PC, and that's super heavy on compute but no heavier on VRAM than basic RT.

For UE5, you can see CPU usage issues but modest VRAM usage:

And if we look at Remnant 2, which just launched as a UE5 title with Nanite (but no Lumen), we can see it does very well with little VRAM while showing off very HQ assets (which makes sense, since that's basically what Nanite exists for):

So are you saying the RTX4080 and RTX4090 would be fine with 12GB? That an RTX4070 8GB might be fine? That the RTX4060TI 8GB is perfectly OK? It seems rather weird that Nvidia would put so much VRAM on the higher-end cards if 12GB is perfectly fine!!

I don't think anyone really believes that. A few observations:

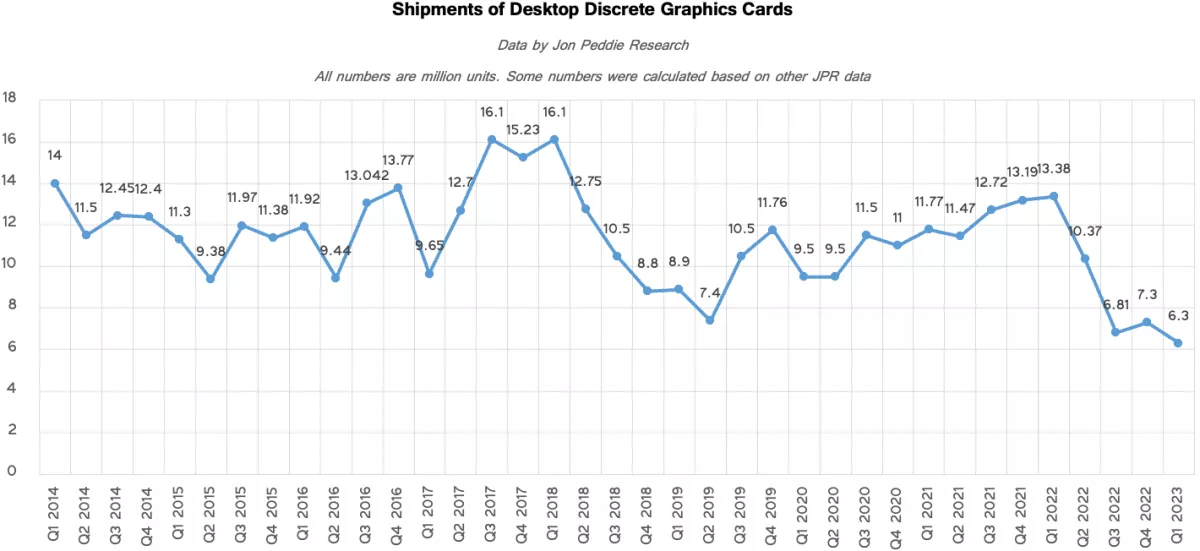

1.) You are only looking at early UE5 titles, and lots of devs say VRAM requirements are going up. Just compare early UE4 titles with current ones. You can't just look at early titles and assume that's it for the next few years.

2.) What happens when devs want to target even higher-resolution textures and simply want to use more individual textures? Are 8GB and 12GB going to be fine at QHD and 4K in all UE5 titles for the next few years?

3.) People buy £800 cards to play games at 4K or to get greater longevity at QHD. Most people I know in the real world keep dGPUs (especially expensive ones) for 3-5 years. Many on here upgrade every year or two. So you need to consider longer lifespans, and those are going to stretch into newer generations.

4.) The consoles are three years old now. The PS5 Pro is out next year, and it's most likely the Xbox Series X2 will be out earlier than expected (no Xbox Series X refresh). The RTX5000 series arrives in 2025. So what happens in 2025, which is barely 18 months away?

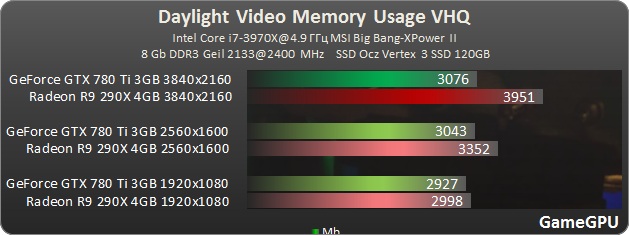

5.) We have been through people saying 256MB was fine (8800GT 256MB vs HD3870 512MB), 3GB was fine (GTX1060 and GTX780) and 4GB was fine (AMD Fury X vs GTX980TI), but it wasn't in the end. History has a good track record of showing that odd SKUs with imbalanced memory subsystems run into problems. The RTX4070TI is one of them.

6.) I have an RTX3060TI 8GB, and I correctly surmised that 8GB would be an issue at some point (the RX6700XT was nearly £500, so it was poor value). This is why I didn't buy the RTX3070: I knew it would not last any longer. Once it hits that wall, it's no more playable than my card.

But I never expected that, so soon after Nvidia launched the 12GB RTX4070, the RTX3070/RTX3070TI would hit a wall.

So I expect the same of the RTX4070TI when the RTX5070/RTX5070TI 16GB launch in 2025. I also expect the gap between the RTX4070TI and the RTX4080 16GB to start growing after that.

7.) Remember again, this isn't a £550 dGPU but an £800 one. Like the RTX3070/RTX3070TI, it's a fast core gimped by a lack of VRAM and a weak memory subsystem. Once it hits that issue, it will be no better than an RTX4070! People need to stop trying to find diamonds in a coal runoff heap.

The RTX4070TI 12GB and RX7900XT are really the RTX4070 12GB and RX7800XT 20GB under different names. They should be £600. But seriously, the RTX4070TI 12GB is as cynical as the RTX4060TI 8GB. It's the same as the RTX3070TI 8GB IMHO.

The RTX4060 8GB, RTX4070 12GB, RTX4080 16GB and RTX4090 24GB might be overpriced, but they seem somewhat more balanced. The RTX4070TI 12GB is like Apple putting 8GB in entry-level Macs and saying it's OK!

Now, you might disagree with all the points above. That is your prerogative. But I don't agree with you that 12GB on the RTX4070TI is acceptable when it costs £800. If I were spending that much money, I would rather get an RTX4070 12GB and spend the rest on beer. The RX7900XT is only marginally better because it at least has more than 16GB. OFC, if Nvidia weren't so greedy and had made the RTX4070TI 24GB, AMD wouldn't even have that.
