

AMD Ryzen 7 5800X 3D Cache Eight Core 4.5GHz (Socket AM4) Processor - Retail - Go Go Go xD

- Thread starter pigeonguyuk


I learned that in this forum people make absurd claims that they themselves know are false and then disappear when asked to back up their claims.

Did you learn nothing from the other two times both of you got slapped by dons for dragging threads off topic with this same repeating argument?

Neither company is immune to it; they are businesses after all. I recall Intel refusing to go past 4 cores until AMD started releasing more cores.

A 5900X3D wouldn't have gained anything for gaming and would have reduced multi-core performance with its lower clocks.

We don't know how advanced they are with 3D cache. They had to reduce the clock speed and it also increased temps; it's basically a prototype on an older CPU without any IPC or clock increases. No doubt 3D cache with higher clock speeds and more cores will come in the future.

The 5800X3D chips are Milan-X dies and those are in high demand, though it is a little strange they don't do 6-core CCD versions of it; surely they have a pile of them that failed Milan-X validation.

The second generation of 3D cache will be an Intel killer: higher clocks (probably even OC enabled), better integration, and combined with Zen 4, DDR5 and double the L2 cache. RPL will be in trouble because even regular Zen 4 will be at worst 10% behind while consuming much less power (fine for me), and the 3D versions are even more efficient because they don't need such high clocks to reach the same performance. Zen 4 3D will reach Meteor Lake performance in some games that love extra cache, just as the 5800X3D has such a huge lead in some games that RPL won't catch up to it.

Soldato

- Joined

- 1 May 2013

- Posts

- 9,963

- Location

- M28

RPL will be awesome; even ADL consumes too much, and RPL will have even higher clocks plus 8 additional cores, all on the same node, so that is expected. Zen 4 will be much more efficient, especially the 3D cache versions that don't need high clocks to perform better.

Source for this made up pic please.

Thought trolling was not allowed

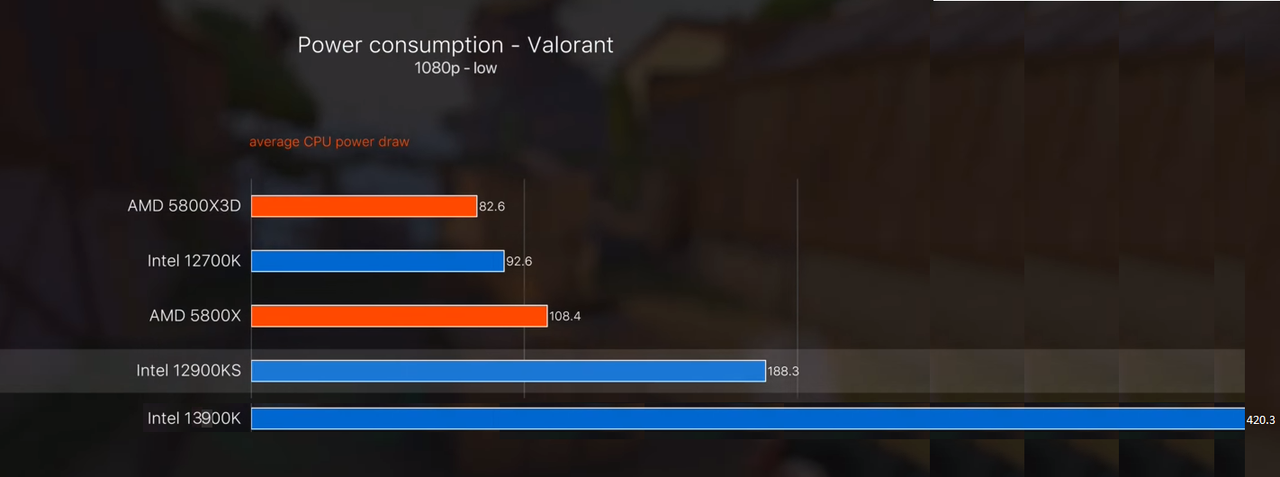

Yes, dodgy graph. The 5800X3D only pulls around 55-65W in my testing on Valorant, going by the CPU power usage on my overlay.

Don't know why some people, who should know better, are playing along with this. The copy and paste on the graph and text are amateur to say the least.

I’m disputing the 5800X3D results.

Associate

- Joined

- 23 Dec 2015

- Posts

- 79

I received my 5800X3D from OcUK and installed it today (replacing a 3700X), and I have been unable to access the CO in Ryzen Master. It is not available and does not show anywhere.

I have the latest version of RM, the latest AMD drivers and the latest BIOS for my motherboard.

Is there anything I need to set in the BIOS to enable it? I looked at some guides and none of them mention one.

MB is Gigabyte X570 Aorus Pro f36C

I also seem to have low memory scores compared to a lot I have seen on forums. The timings are set to the fastest timings in ryzencalc, so I wonder if my BIOS is bugged or Windows needs a reinstall; it must be 4 years since it was installed.


Curve Optimizer? I don't think that's available for the 5800X3D, it's a locked chip.

On the memory, the latency is a little high. Look at your Northbridge clock: 916MHz. For 3667 MT/s RAM it should read the same as the Memory Bus, 1833MHz. It looks like you have the Fabric Clock running in 1/2 mode; it should be in 1:1 mode.

My Aida64: Yours should be better than this as your RAM timings are much tighter, you need to find out why you're running 1/2 IF clock and change it to 1:1.
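For anyone wanting to sanity-check their own readings, the arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name and the 2% tolerance are made up for the example, and the inputs are simply the numbers quoted in this thread (the memory transfer rate in MT/s and the Northbridge clock as reported by AIDA64).

```python
def classify_uclk_ratio(transfer_rate_mts: float, northbridge_mhz: float,
                        tolerance: float = 0.02) -> str:
    """Classify the Northbridge-to-memory clock ratio as "1:1", "1:2" or "unknown".

    Hypothetical helper for illustration; the tolerance is an arbitrary
    allowance for rounding in the reported clocks.
    """
    # DDR memory does two transfers per clock, so the real memory clock
    # is half of the advertised MT/s figure.
    memclk_mhz = transfer_rate_mts / 2
    ratio = northbridge_mhz / memclk_mhz
    if abs(ratio - 1.0) <= tolerance:
        return "1:1"
    if abs(ratio - 0.5) <= tolerance:
        return "1:2"
    return "unknown"


# Readings quoted in this thread: 3667 MT/s RAM with a 916 MHz Northbridge clock.
print(classify_uclk_ratio(3667, 916))
# What the reading should look like once it is fixed.
print(classify_uclk_ratio(3667, 1833))
```

With the numbers from the screenshot the first call reports the half-rate mode, and the second shows the reading to aim for.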


@dimension99

Ryzen switches the IF clock to a 1/2 ratio above a certain memory speed. I can't remember if it's over 3600MT/s or over 3800MT/s; if it's 3600MT/s then that's what's happened, as you're running 3666MT/s.

In your BIOS go to Tweaker > UCLK Mode. If it's set to Auto, set it to UCLK == MEMCLK, or set your XMP multiplier to 36X, i.e. 3600MT/s.



Have you tried without PBO Tuner, which whatever source that graph came from wouldn't have used?

Hi guys, from the benchmarks I have read, is it fair to say that the benefits of this CPU and its additional cache largely disappear when running games at 4K or higher, due to the usual increased GPU dependency? The 5800X and 5800X3D seem to be really close, within a few percent of each other, when games are tested at 4K.

I do wonder how much of a boost the Zen4 architecture will be.


It really depends how long you intend to keep it. GPUs look set to get a lot faster, so the CPU could become more important at 4K than it currently is. If you've upgraded again by then it's a non-issue. I intend to keep mine for a few more GPU generations so the extra oomph might come in handy.
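A rough way to picture this is the usual simplification that the frame rate you actually see is capped by whichever of the CPU or GPU is slower. The sketch below assumes that simple model; every FPS figure in it is a made-up placeholder, not a benchmark result for any real CPU or GPU.

```python
def delivered_fps(cpu_limit_fps: float, gpu_limit_fps: float) -> float:
    """Simplified bottleneck model: the slower component sets the frame rate."""
    return min(cpu_limit_fps, gpu_limit_fps)


# Hypothetical CPU-side limits (frames the CPU could prepare per second).
cpu_limits = {"slower CPU": 140.0, "faster CPU": 180.0}

# Hypothetical GPU-side limits at 4K for a current and a future card.
gpu_limits = {"current GPU": 90.0, "future GPU": 160.0}

for gpu_name, gpu_fps in gpu_limits.items():
    for cpu_name, cpu_fps in cpu_limits.items():
        fps = delivered_fps(cpu_fps, gpu_fps)
        print(f"{gpu_name} + {cpu_name}: {fps:.0f} fps")
```

In this toy model both CPUs deliver the same frame rate with the slower GPU, and the gap between them only shows up once the GPU limit rises above the slower CPU's limit. Real games are messier than a single `min()`, so treat it as intuition only.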


Surely that's mostly a given, but I'd throw in the big caveat which applies to most games benchmarking, and especially CPU benchmarking: you should almost ignore the averaged FPS and concentrate on minimums and frametimes.

Problem seems to be that in a lot of 5800X3D reviews, minimums were ignored at 4K.
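To show why the average can hide what the minimums reveal, here is a small Python sketch that works from a list of frametimes in milliseconds. The function name and the sample data are invented for the example, and the "1% low" here is taken as the average of the slowest 1% of frames; benchmarking tools define that metric in slightly different ways, so this is one possible definition rather than the standard one.

```python
def fps_summary(frametimes_ms):
    """Return average FPS, 1% low FPS and minimum FPS from frametimes in ms."""
    if not frametimes_ms:
        raise ValueError("need at least one frametime")

    # Average FPS = frames rendered divided by total time in seconds.
    total_seconds = sum(frametimes_ms) / 1000
    average_fps = len(frametimes_ms) / total_seconds

    # Slowest frames first; take the worst 1% (at least one frame).
    slowest_first = sorted(frametimes_ms, reverse=True)
    worst_count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:worst_count]
    one_percent_low_fps = 1000 / (sum(worst) / len(worst))

    # Minimum FPS corresponds to the single slowest frame.
    minimum_fps = 1000 / slowest_first[0]

    return {
        "average_fps": average_fps,
        "one_percent_low_fps": one_percent_low_fps,
        "minimum_fps": minimum_fps,
    }


# Made-up capture: 99 smooth frames at 10 ms plus a single 50 ms stutter.
sample = [10] * 99 + [50]
print(fps_summary(sample))
```

In this made-up capture the average stays in the mid 90s while the 1% low and minimum drop to 20 fps, which is exactly the kind of stutter an average-only chart would hide.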

Yes, the 4090 is reported to be 1.5-2x faster in rasterization according to the latest Moore's Law video. As I now play exclusively at 4K or higher, I am definitely interested in making sure I can properly power it when I buy it, as I am now playing a lot of VR, mostly modded Skyrim. My 5800X is of course a quick CPU, but as far as temps and OC headroom go I really got a lemon, and I run it at a lower voltage and speed to keep temps down.

Yes, I had trouble finding that info, which would really show any benefits. I will look on YouTube to see if people have done some decent real-time comparisons between the CPUs.


What do you get without a CO profile?

It is a hilarious BS chart for sure and should be ignored, like most "leaks". Reviews will show the first reliable data.

Surprisingly Anandtech did a CPU review of the 5800X3D!

(Long running joke especially on the AT forums as they seldom do any reviews anymore and tend to be very very late.)

And they had mins in their 4K part. The biggest gain was in GTA V but they tested that at 4K Low:

GTA has some similarities with Skyrim/FO4 in that it can be extremely CPU dependent, but that is probably a bigger gain than we saw in CAT's FO4 benching thread (which is at 480p). While the Ryzen 5800X3D does lead in the FO4 benches there (but nobody has submitted a 12900K yet), even with the 3D cache, memory timings still seem to matter. Pity, as the real appeal of the 5800X3D vs the 12700K/12900K is that it can be largely plug and play and mostly works quite well with even cheap PC3200 memory. (Well that, and Alder Lake can get really power hungry; it still seems that Intel P cores are very much brute force. Not that throwing transistors at a problem is necessarily bad until space and heat become a problem, which is mainly a problem for Intel with servers.)

Yes, if Intel could rival the efficiency of the 5800X3D they would have a beast with their high clocks. Seems like at the moment you can't have everything. I do like the low power draw and therefore the low heat output. I'm too old to be sweating playing PC games; I've got a rowing machine for that, and trying to keep up with the dog.

No, but fair point, I forgot I was running -30 CO in that video. It probably knocks about 15W off, so that would roughly match up with what they saw.

I tested GTA V and as noted by Gamers Nexus...