A dev on Reddit has confirmed the practice, but he also explained it a bit more, so it's not just laziness.

As we know, UE is now dominant in the AAA industry, and this engine streams textures in while you play.

The consoles have lots of hardware optimisations which make the overhead of loading textures really low, hence either reduced or no stutters. (They do of course also have a better VRAM to TFLOPS balance.)

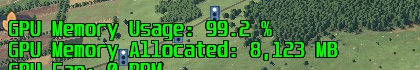

On the PC this is a problem of course, but according to this dev, leaning more on system RAM instead of VRAM (which is an option on PC) would make things even worse, unless VRAM is already saturated, in which case using system RAM would improve things.
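To make that trade-off a bit more concrete, here is a toy sketch of "VRAM first, system RAM only when VRAM is full". This is not how UE or any real engine allocates memory; the `Texture` class, the `place_textures` function and the sizes are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Texture:
    name: str      # hypothetical texture name
    size_mb: int   # hypothetical size in megabytes


def place_textures(textures, vram_budget_mb):
    """Greedy toy placement: keep a texture in VRAM while it still fits
    in the budget, otherwise spill it to (slower) system RAM."""
    in_vram, in_system_ram = [], []
    used_mb = 0
    for tex in textures:
        if used_mb + tex.size_mb <= vram_budget_mb:
            in_vram.append(tex.name)
            used_mb += tex.size_mb
        else:
            # VRAM is saturated for this texture, so fall back to system RAM.
            in_system_ram.append(tex.name)
    return in_vram, in_system_ram


# Made-up working set of 9000 MB in total.
scene = [Texture("rock", 3000), Texture("tree", 4000), Texture("sky", 2000)]
print(place_textures(scene, 8000))   # 8GB card: one texture spills
print(place_textures(scene, 16000))  # 16GB card: nothing spills
```

With the made-up numbers above, the 8GB budget cannot hold the whole working set, so something has to live in system RAM, while the 16GB budget never touches it.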

He didn't really offer a solution though, other than saying DirectStorage will improve things.

My take from what he said is that they choose a solution that works best for cards with decent VRAM, and it's the usual "upgrade" for those who have VRAM starvation.

Of course time saving will still be a part of this in my opinion, as it's quicker to port a game if you don't have to tinker with the memory allocation code.

--

Personally I wish UE would just go away, but sadly it's getting more common if anything. I have played low end games using UE4 and they still had dodgy performance; it just seems a horrid engine.

Also, a few other devs who responded spoke about UE4, and they admitted the engine has practically no built-in optimisation. Most of these comments were on a thread about the new Star Wars game.

He is not the first dev to say this and he will not be the last. I have been saying it for at least 2 years.

UE is now dominant in the industry because it's nothing short of brilliant. And it's not going to get any better: every other engine developer is going to want to emulate the technology.

Live texture streaming and Object Container Streaming first appeared in 2016, to my knowledge, in Star Citizen. It's the only way to get seamless transitions from space to the surface of planets, especially in a multiplayer environment where you have crew mates in the back of your ship doing their own thing, so you can't level load.

Then Sony, on the PS5, with Ratchet and Clank: again, seamless, no level loading.

When the CIG Frankfurt office cracked this, after working on the tech for about 2 years, they quietly put out a little celebration video.

And in game in 2020.


This has been coming for years, and frankly PCMR dGPUs are being left behind by game consoles. Game developers have just had enough of being strangled by that. Even CIG, making a PC exclusive game, are saying you're going to have to run proper hardware to play our game, because we can't make the game we want to make for middling dGPUs. Though they do try to keep it running OK on 8GB hardware, they have talked about how difficult a task that is; it's a lot of time and effort. It runs better with a 12GB and certainly a 16GB GPU and a fast NVMe.

I don't do this just because I want to hate on Nvidia. I have a project in UE5 that's on hold until I get a GPU with more VRAM, because 8GB just won't do it.

8GB isn't even low end these days; low end is 12GB, mid range 16GB, and higher end 20GB at least. There is no reason for Nvidia or AMD not to do that, as VRAM costs them peanuts. The only reason they would do this is planned obsolescence, and the RTX 3070 and 3080 are exactly that. As a PC enthusiast I do not take kindly to BS like that; having to pay hundreds and hundreds of £ for these things, I take that personally. I feel like I'm being manipulated and ripped off by some cynically disrespectful people.

We should all call them out on it and demand better. Because right now PCMR is a pee-taking joke, and it's you and me they are taking the pee out of.

www.overclockers.co.uk


some very attractive curves.
