This kind of supports the rumors about Navi 32 and 33 having issues, or maybe AMD is taking the time to re-tape the silicon to fix issues that emerged with Navi 31... Nvidia will keep prices of the 4070 high, then, and only re-adjust when these cards are out.


It looks like the 'real'/affordable RDNA3 and next-gen NV desktop cards won't launch until September. Thoughts?

- Thread starter g67575


Caporegime

- Joined: 18 Oct 2002
- Posts: 31,181

> Nvidia will keep prices of the 4070 high then and only re-adjust when these cards are out.

Nah, AMD aren't real competition enough to drop pricing tbf.

> This kind of supports the rumors about Navi 32 and 33 having issues or maybe AMD is taking the time to retape silicon to fix issues that emerged with Navi 31...

The PC market is in a downturn. No rush with new stuff.

> Nah, AMD aren't real competition enough to drop pricing tbf.

They aren't, until they are. Nvidia didn't drop the price of the

Associate

- Joined: 29 Jun 2016
- Posts: 543

> Ironically Intel might be the one pushing prices down, they are openly focusing on the sub $300 market, which once upon a time was AMD's strong point.

I tend to agree, since the A750 and A770 are the best performance-per-£ cards available right now; however, their initial drivers were so bad that I think this generation's credibility is beyond repair.

Rumours are that Battlemage will be a great mid-range card (whereas the current offerings are realistically mid-to-low end), but it's only scheduled for release in 2024... That's a sad prospect...

What we need is a new RX 580.

250€, 90 fps at 1080p ultra without RT or upscaling, and enough RAM to give it longevity.

If the RX 6700 XT were about 100€ cheaper it would basically be it; sadly it's hovering around 400€, though IMHO it's still one of the few cards I'd be willing to spend money on.


Caporegime

- Joined: 18 Oct 2002
- Posts: 31,181

> 250€, 90 fps @1080 ultra without RT and upscaling and enough RAM to give it longevity.

Also... world peace.

> Also....world peace.

The RX 6600 is there in price, it's just missing enough RAM.

Associate

- Joined: 27 Jan 2009
- Posts: 1,286
- Location: United Kingdom

I don't think affordable is the correct word.

They will just appear affordable because the other tier is so expensive.

I've now decided to get whatever hits my price category first: £800 for a 4080 or £1000 for a 7900 XTX Nitro+.

If they take too long to get there, though, I'll just skip another gen.


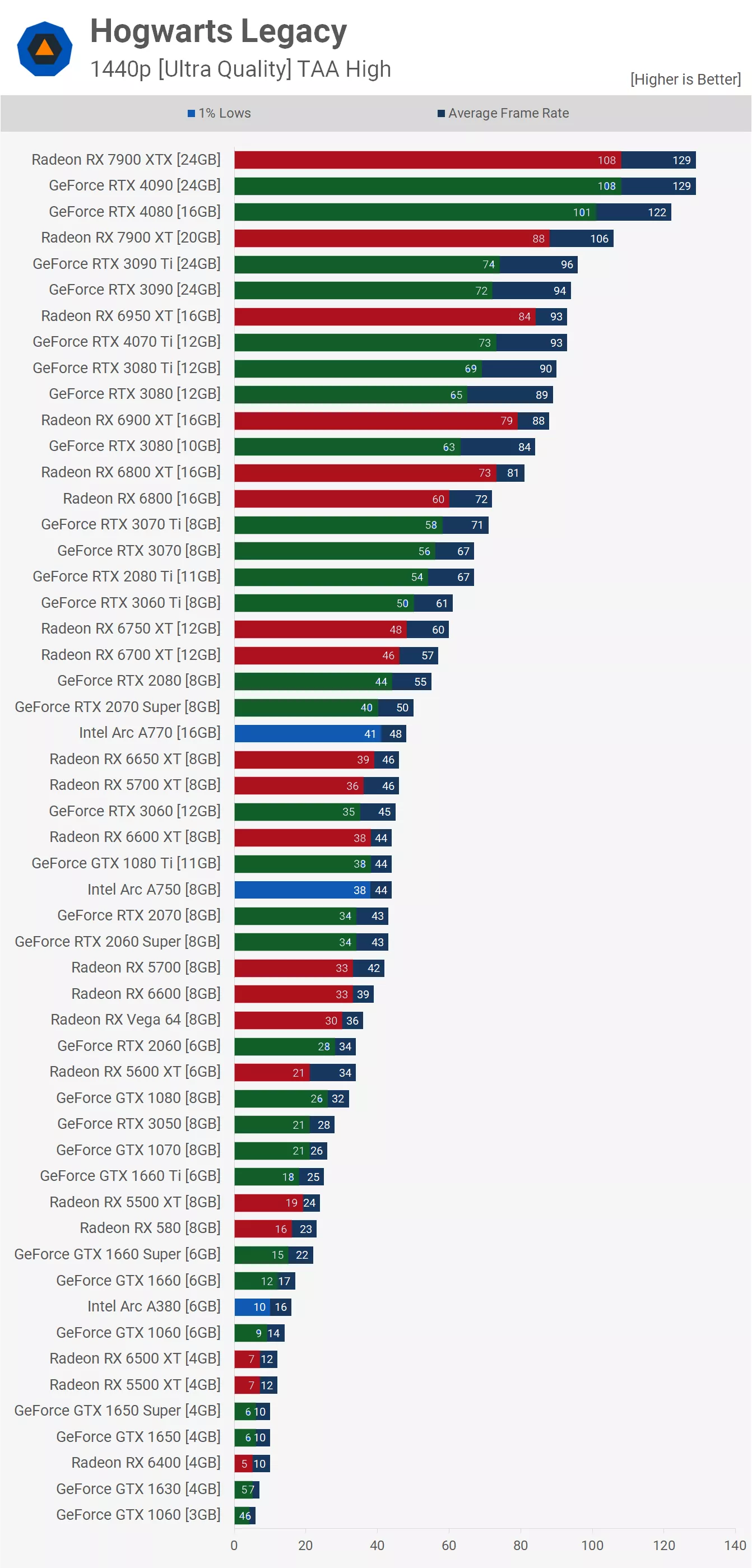

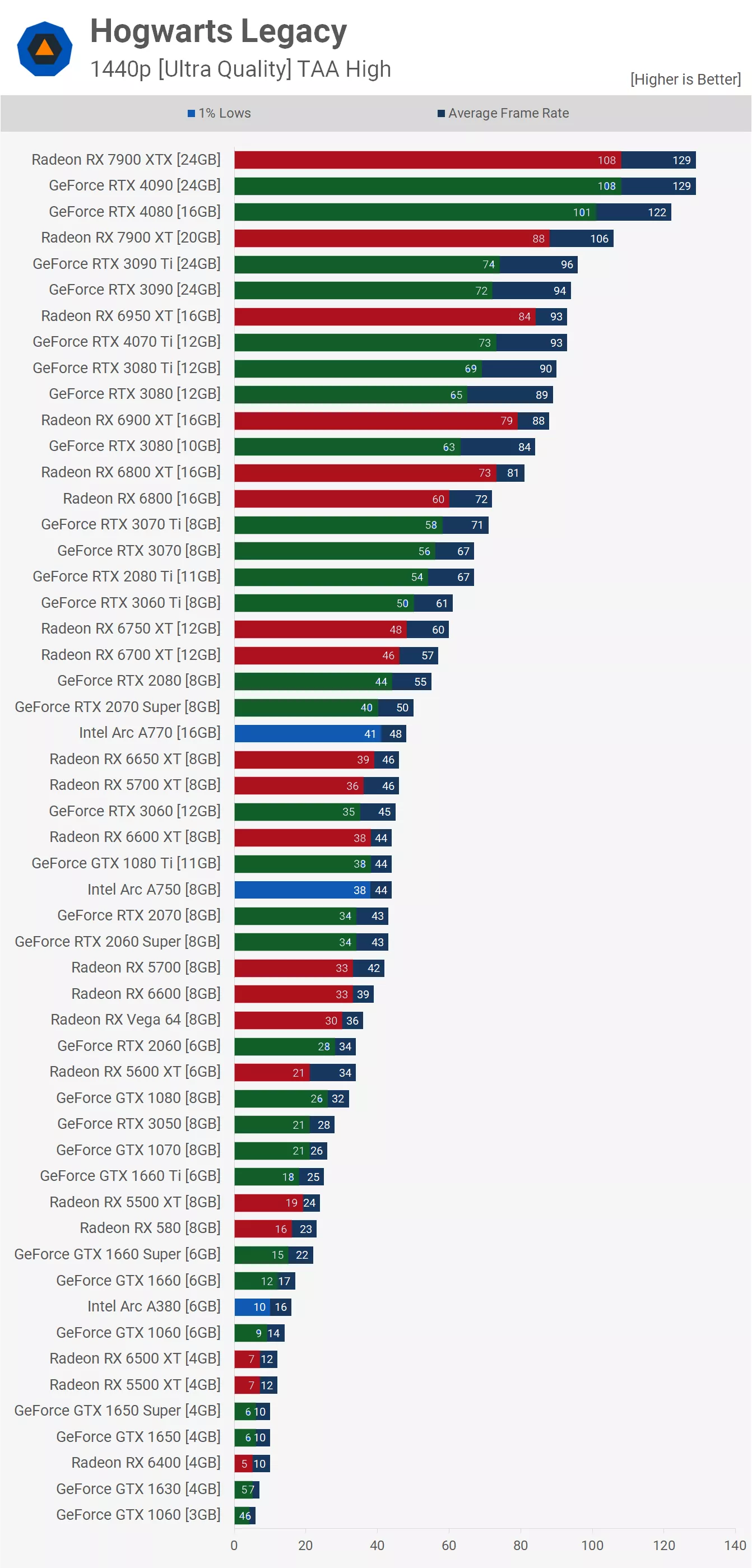

I think the prices of the 6700 XT are very good (actually below the MSRP of £419). I would have bought one, but there are a few games it struggles with at 1440p, like Cyberpunk 2077, WH3 and, no doubt, the new Hogwarts game.

We basically need the performance of the RTX 3080 but for less money, to play very smoothly at 1440p (or perhaps 1440p upscaled to 4K).

There's also ray tracing to consider, but I think this subject tends to distort people's views about graphics performance. It seems like something to consider only when used together with other features like frame generation, which is still probably 1-2 years away from seeing wider adoption in games.


Associate

- Joined: 1 Oct 2020
- Posts: 1,240

> There's also ray tracing to consider, but I think this subject tends to distort people's views about graphics performance. It seems like something to consider only when used together with other features like frame generation.

At this stage, yes. Where the current-gen mid-range cards actually end up performance-wise will be very interesting, especially compared with previous-gen cards. I think it will be difficult to recommend current-gen mid-range over previous-gen high-end, with warranty being the deciding difference.

It won't be long before rental schemes become widespread.

I don't think we will see Navi 32 GPUs until FSR3/frame generation is ready, which AMD has kept quiet about.

I believe the GPUs themselves are ready, but it would make for a more impactful launch if they launched alongside FSR3.

The key thing is that AMD is still somewhat behind Nvidia in 3D graphics performance with their Navi 31 cards (also in ray tracing, but FSR3 can help here).

FSR3 probably just isn't ready yet; perhaps in a few months.

Nvidia has its frame generation tech ready to use now, so there's really nothing that should hold back the release of cards like the RTX 4070 (except that they want to focus on releasing and producing laptop GPUs first).


This is basically what you need to play the latest titles at 1440p (ultra, RT off):

RTX 3080 or RX 6800 XT level of performance.

The RTX 4080 does well with RT enabled:


There has been approx. 22.3% inflation (RPI) since late 2020. The MSRP of the RTX 3070 FE was £470 in October 2020, so a similarly priced RTX 4070, taking inflation into account, would cost ~£575. If it uses GDDR6X this will increase the cost a bit (as will using 12GB of VRAM instead of 8GB). Nvidia also says its new fab process is expensive, and the cooler design has been improved too (arguably GDDR6X does need this), so a 4070 could end up being quite expensive.
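As a quick sanity check on the ~£575 figure, here is a minimal Python sketch of the adjustment. It assumes a simple cumulative-inflation multiplier applied to the £470 RTX 3070 FE MSRP and the approx. 22.3% RPI figure quoted; it deliberately ignores the extra GDDR6X, VRAM, fab and cooler costs mentioned, and `inflation_adjusted_price` is just a hypothetical helper name.

```python
def inflation_adjusted_price(base_price: float, cumulative_inflation: float) -> float:
    """Scale a past price by a cumulative inflation rate (e.g. 0.223 for 22.3%)."""
    return base_price * (1.0 + cumulative_inflation)


# Figures quoted in the post: RTX 3070 FE MSRP of £470 (Oct 2020)
# and approx. 22.3% RPI inflation since then.
rtx_3070_fe_msrp = 470.0
rpi_inflation = 0.223

adjusted = inflation_adjusted_price(rtx_3070_fe_msrp, rpi_inflation)
print(f"£{adjusted:.2f}")  # £574.81, i.e. roughly £575
```

Swapping in a different rate (for example a CPI figure instead of RPI) shows how sensitive the estimate is to the inflation measure chosen.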

Maybe they could make 2 versions, with different kinds of VRAM?


IDC what anyone says, Nvidia is cheaping out on VRAM and has been for a while: 7 years and no movement for most of the SKUs. Imagine if the 1080 had shipped with 512 MB of VRAM. AMD cuts costs as hard as anyone and still managed to put 12GB on a card like the 6700 XT, which tells me that's the minimum a card like that should have.

Hardware doesn't progress as much as we think it does. We're still just using 4 cores and 8 GB texture loads in 2023. But it's so hard to break into the GPU and CPU markets: the technology required to make a competitive entry is staggering, and a small handful of companies have the fabs all tied up.

I'm also unsure whether any of this matters all that much for games. Tripling the visual complexity on screen in a modern game like Hogwarts does next to nothing; we're heavily into diminishing returns with traditional rendering.

If the entire game development ecosystem were focused on a high-end PC platform for years, the way it is on, say, the PS5, I do wonder what those games would look like. Some parts of games, if I zoom in, seem as busy to look at as their IRL counterparts, yet I'm in no danger of forgetting I'm looking at video game minutiae.


I tend to agree; 12GB of VRAM would be a sensible amount to include. My preference would be two 12GB cards, differing only in whether GDDR6 or GDDR6X is used.

Alternatively, just use the fastest GDDR6 memory available, which would be a new variant called 'GDDR6W' (up to 22 Gbps). It definitely looks like GDDR6X is due to be superseded by other technologies fairly soon.


> If the entire gaming development ecosystem was focused on a high end PC platform for years like they are on say, the PS5 hardware, I do wonder what that game would look like.

It's a thought, but it ignores that consoles are easier to focus on because they're not the pick-n-mix mess of hardware and software that is the PC world.

Developers have guarantees about what will be there to work with.

> IDC what anyone says, Nvidia is cheaping out on Vram and has for a while.

They do it because it fits their strategy to upsell.