NVIDIA 4000 Series

- Thread starter MrClippy

Can't really see any particular game that could cause it

There have been several high-profile releases recently, like Hogwarts Legacy, where you really need a higher-end 2000 series card or better to not sacrifice too much.

Maybe they really are using the VRAM as a place to store things, like they could on a unified memory console? Seems strange, but possible.

Thing is, rather than the moans about "bad console" ports, people do have to ask themselves: what drives gaming, which platform gets developed for, etc.?

Yes, some things are due to sloppy ports, but if a game has been in development for a couple of years and has made all kinds of assumptions about the system (VRAM amount, core count, decompression hardware etc.), and if the PC port is an afterthought...

In an ideal world, the PC port would have been in the thinking at the design stages; no ports would be rushed etc.

However, as things stand... This is only going to get worse.

It might even be that matching a PC with 8 Zen 2 cores is futile, and the nature of PC ports and the lack of disk decompression hardware means that 12+ cores of Zen 3/4 will be required to max things out.

They are just reserving absurd amounts for the desktop using a blanket percentage of the VRAM available on your card. A 4090 sees 5GB being reserved just for the desktop.

Also, instead of developing the engine from the ground up, they simply ported it from PS5, which uses dedicated decompression hardware for assets. So now only very high-end CPUs can run this game with that much load, and even they struggle if you pair one with a 4090. My Noctua NH-U12A fans were working at full blast on my 12700K in this game with DLSS.

There are bad console ports but this game has clearly been developed by someone who has no understanding of PC game development. Even Fromsoft did better for the PC with Elden Ring and that's with their horrible 60 fps cap and no ultrawide support. This game is an outlier by all standards and should not be used as a reference point for anything seeing as it reduces a 12900k to the level of a PS5 with their decompression solution.

What amazes me is that HUB actually decided to benchmark this travesty on 26 video cards so that they can rant on about 8GB cards in multiple videos declaring victory. I literally turned on the game and was greeted with my CPU fans blasting and my 4090 GPU usage dropping like a rock within the first hour. Who benchmarks a game like this?

I agree with Alex. They should ideally pull this game from the store and Sony should involve Nixxes to fix it.

At a quick glance I would say image 2 is DLSS and 1 is native; 2 is sharper than 1 but lower resolution.

1 is DLSS Q...

7 is native

2 is FSR ultra performance with 100% sharpness.

But dlss is 720p!!!

I did this test a while back with CP 2077; most of the DLSS naysayers also got them wrong

Well, in static images even DLSS Performance looks better than native; that's why I said images are unfair towards native. In motion native is obviously better than DLSS Performance. Against DLSS Q it's a wash / depends on the game.

In motion, DLSS can often trash even native + TAA if you're looking at overall temporal stability. Do agree lower presets aren't as good, though they have come a long way since last year, to the point where even DLSS Performance is far more usable. It obviously works best the higher the res you use, though.

12900k + 4090 / also on a u12a

12900k + 4090

Yes - there are games where the TAA solution is jarringly blurry and it gets even more so while moving. I played a lot of COD Cold War back in the day and man, DLSS Q was such an upgrade over the native rendering - was not even close. But as I've said repeatedly, this is a pointless argument; facts and pictures won't convince anyone that wants to hate.

Even with the latest version of DLSS 3, things like small fast-moving birds in the background can have a long dark trail, like 1-2 seconds' worth of motion, behind them, which does somewhat take away from the image quality/stability in any scene containing said artefact.

DLSS Performance also struggles with things like objects with long hair/fur, which is often just a grainy mess of noise, especially in high-contrast areas.

Personally not a fan of these upscaling techniques but I'll accept it where it is necessary in games which have an actual decent ray tracing implementation or games which are ambitious in scale like Hogwarts Legacy.

I wouldn't be able to tell DLSS Q from native rendering even if my life depended on it. In fact, I'm willing to bet money no one else can either. Native rendering also has flaws, like excessive shimmering on TLOU1 (already posted a video about it). DLSS Performance mostly has a much softer presentation and suffers from ghosting (probably the dark trail you are talking about) in fast motion. But that one should be used as a last resort.

The dark trails happen in quality mode as well - the game needs to provide quite a long movement history to avoid it for some reason, and in some cases that isn't possible. Even in quality mode I notice a fair few instances of thin items in motion, like a radio antenna, having a halo around them, and situations where you are walking up/down stairs where the vertical stability in a scene can cause image quality issues at certain angles. The slight softening of the image I notice straight away vs native, even if I've not seen native.

The better edge stability and resolved shimmering is nice but I find it a mixed bag - Hogwarts Legacy is vastly improved in DLSS mode if you use the FreeStyle Details filter, due to the DLSS implementation lacking a sharpening pass even if you stick the latest DLLs in there, but ultimately I'd still rather have native.

But that's the whole issue: a lot of the time even native rendering has issues, so what can DLSS do to fix those? That's why I said most of the time it gets 90+% of the way there next to native.

Another thing I notice personally is that the reconstructed details aren't consistent between different instances of a scene. For instance, if you were driving down a road with dense detail along the sides and your view intermittently broken up by, say, sections of fencing, then every time you see the scenery it will only be approximately the same. A lot of people probably wouldn't notice, but I can't un-see it once I've noticed it myself.

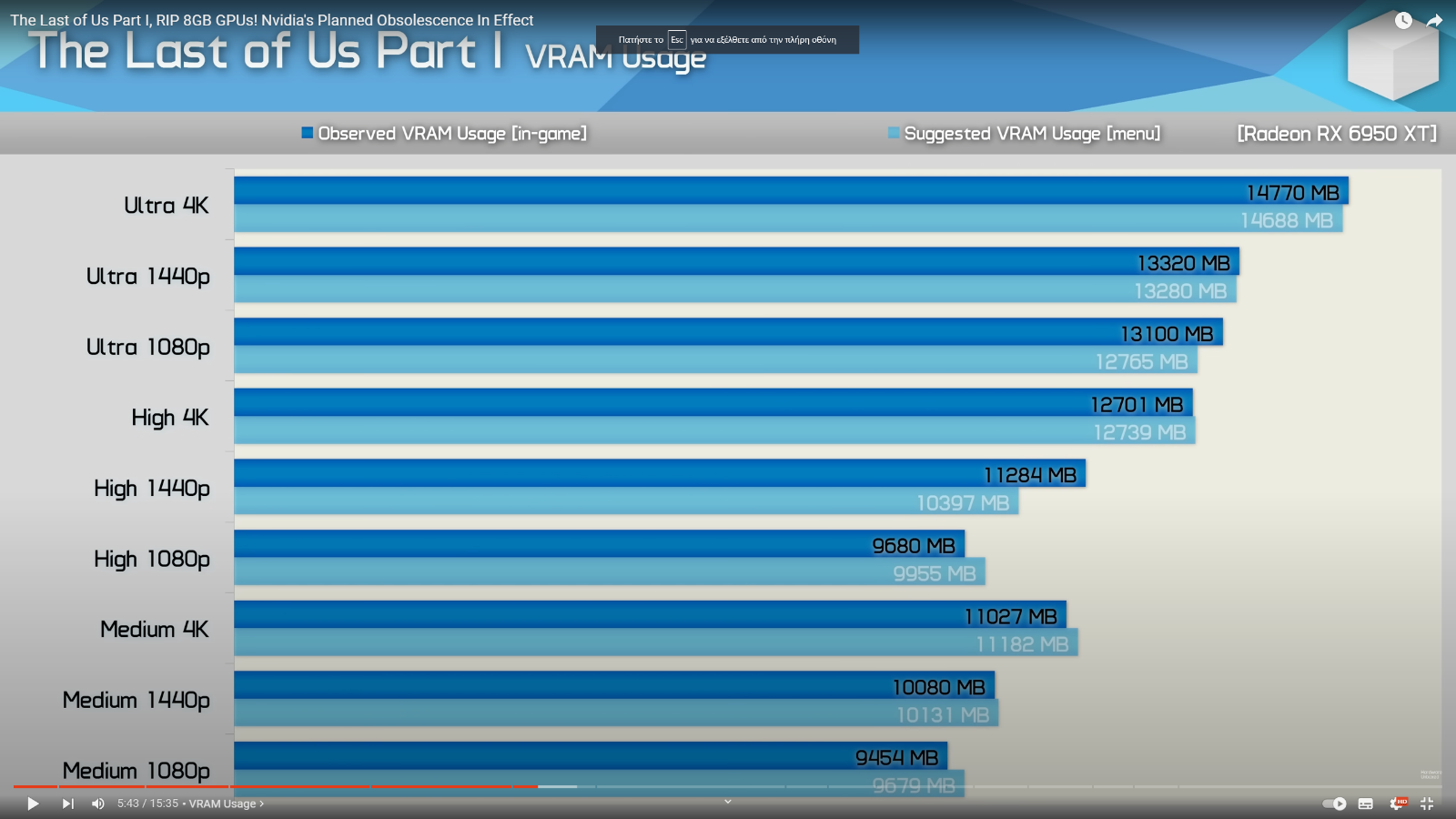

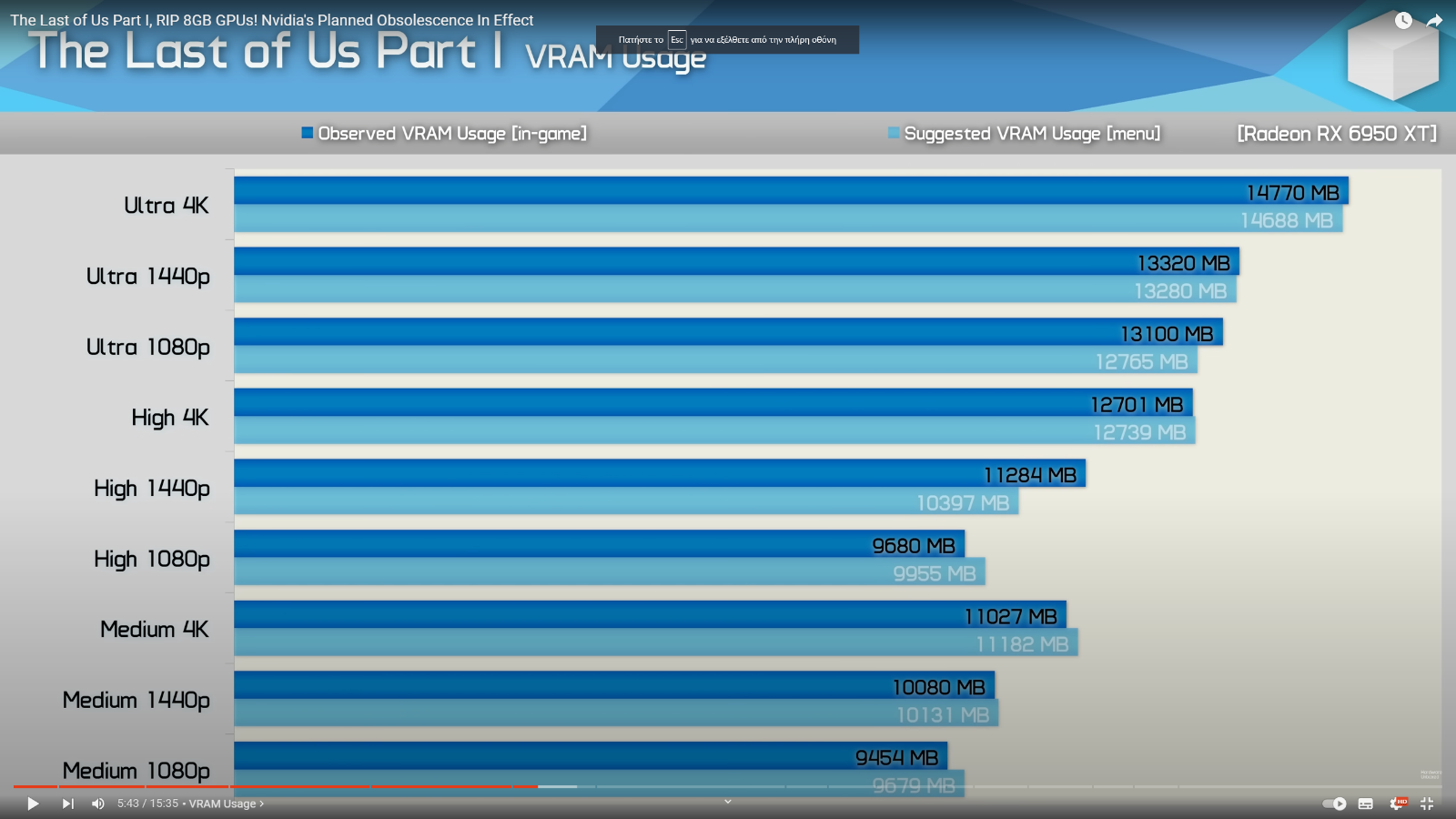

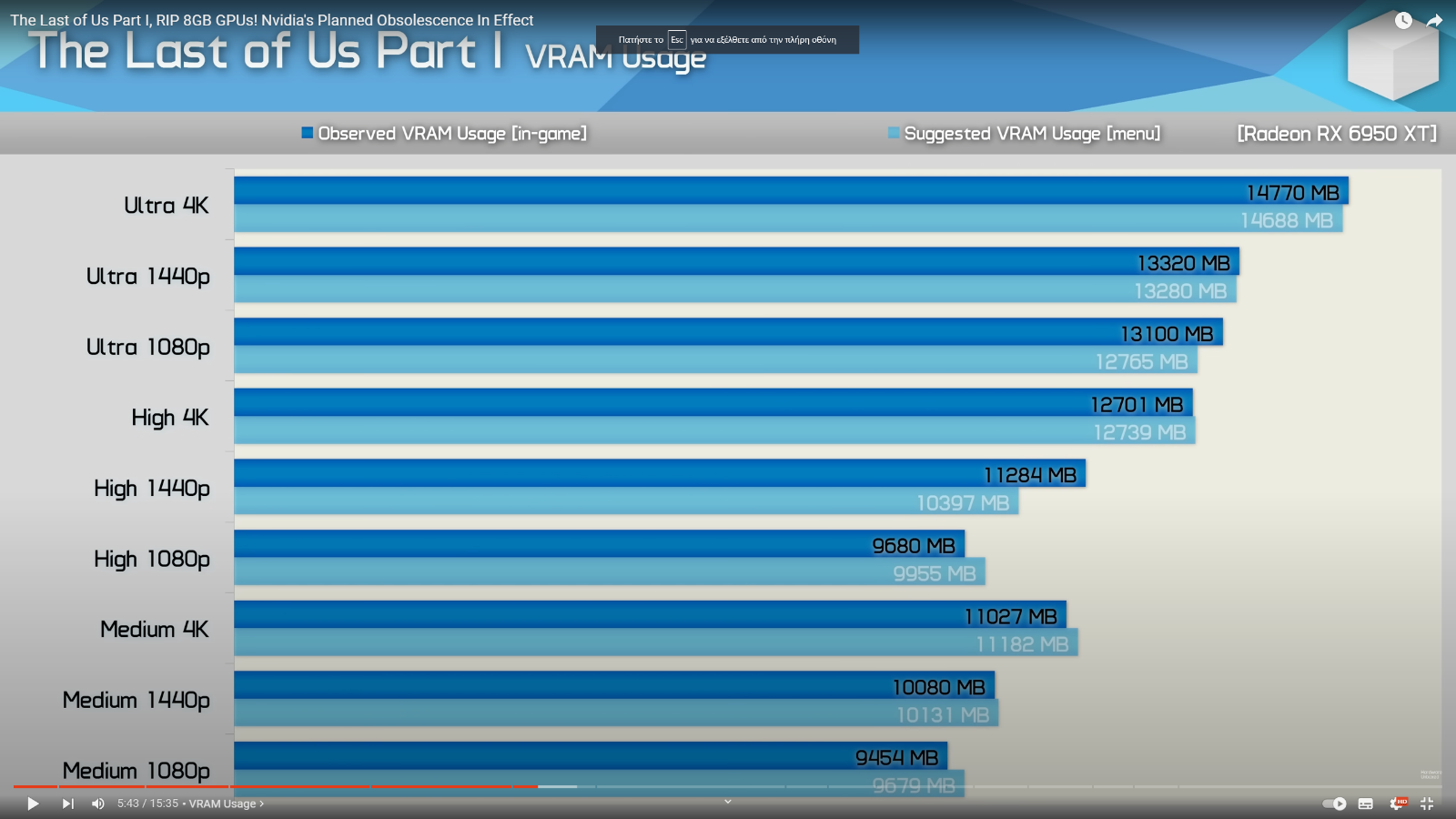

Suggested VRAM requirements posted by hwunboxed

I just noticed that on my Nvidia GPU, the suggested VRAM is much higher. It needs 16500 MB for 4K ultra, over 11k for 1080p medium, and 14+ GB for 1080p ultra. Maybe, just maybe, a specific brand (cough cough) had something to do with the game requiring more VRAM from competitors' products. Not pointing any fingers...

You can see the number of people by game. They barely make a dent.

The type of game that moves the needle is something like PUBG releasing something new.

It is also the only game I have ever played which uses 22GB of system RAM. If you have 16GB RAM, the game will constantly crash, which is the reason this game is notorious for crashing in Steam reviews. By isolating 8GB GPUs and those with 16GB of RAM, they shot themselves in the foot.

That's a first for me; usually I see the shimmering the other way round. Could the reason be that TLOU1 is broken?

Here the lighting is better on PS5; the PS5 version actually looks really good.

The game needs all that VRAM/grunt and the PS5 version still looks better?

They should have let Nixxes port this one. Whoever did this messed up.

My laptop has 16GB of RAM and has not had a single crash while playing for hours, whereas it crashes all the time on my desktop, which also has 16GB of RAM. RAM usage sits at about 13-14GB for me.