Lmao, framerates coming out of my eyeballs

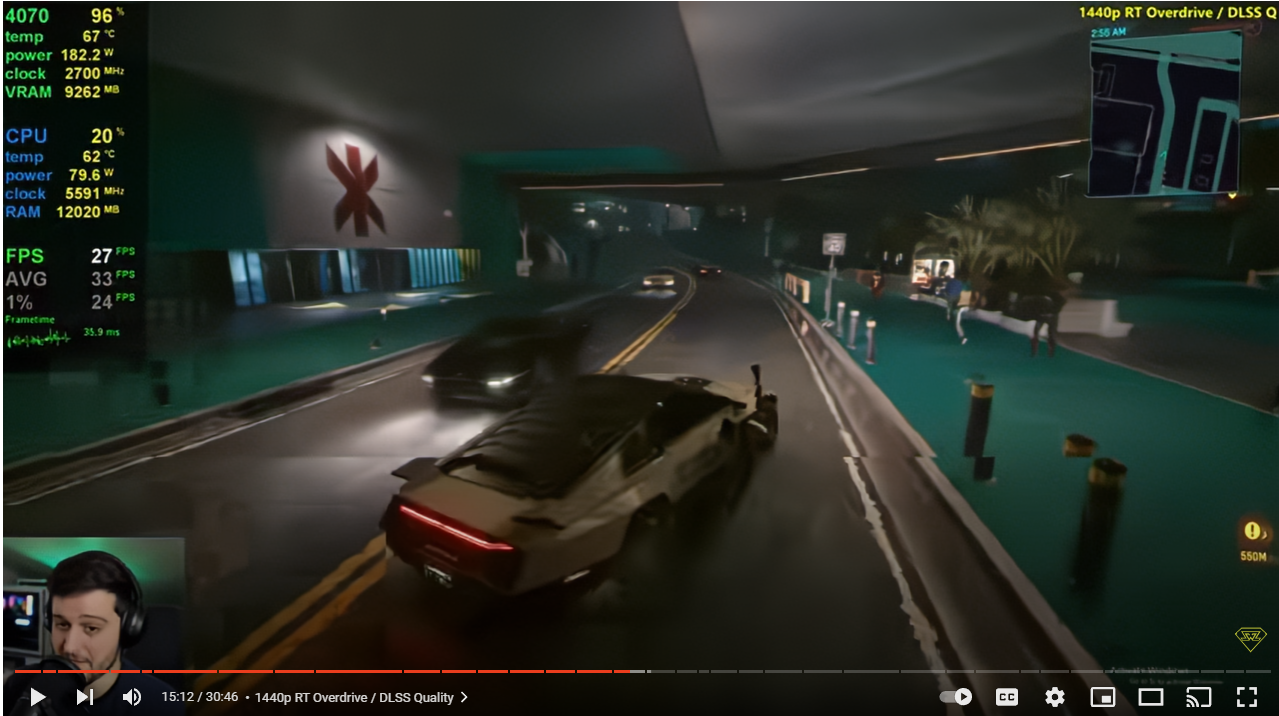

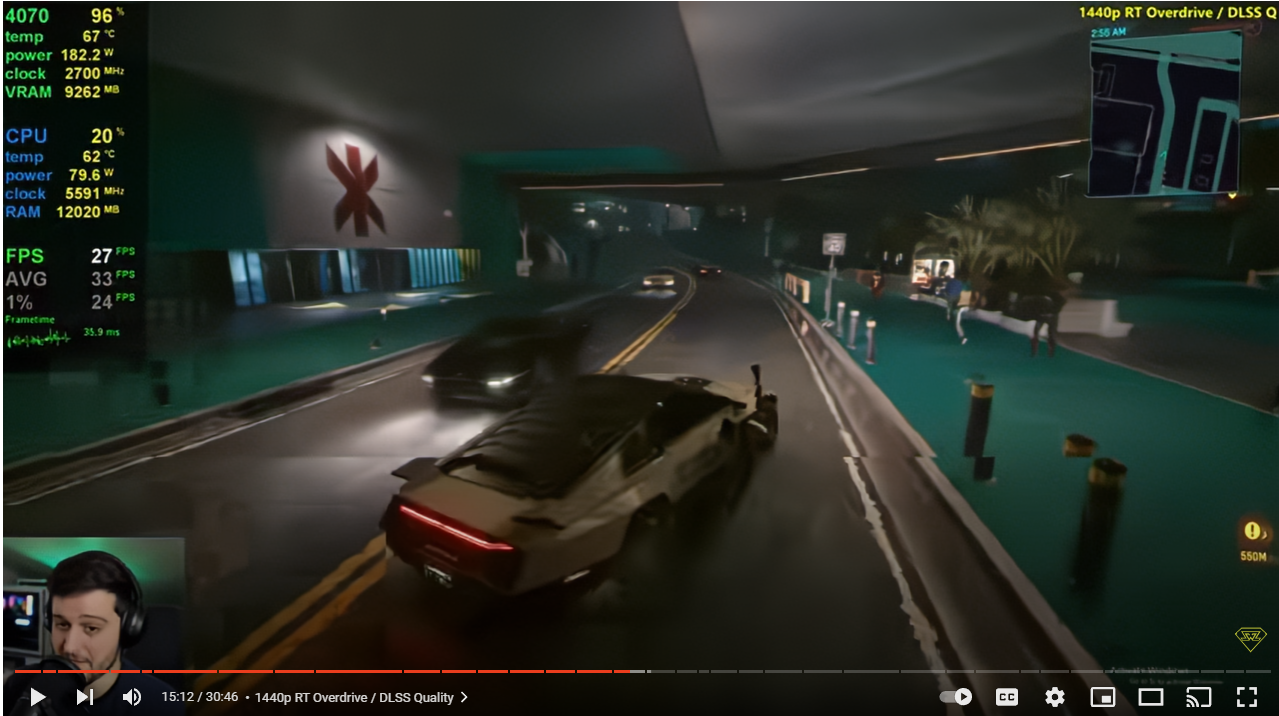

Frame gen on DLSS manually set to Quality:

Frame gen off, DLSS Quality:

Spare a thought for those less fortunate.

I highly recommend using the DLSS Tweaks tool to raise the render resolution of DLSS Quality from the default 66% to 74% in Cyberpunk, as the default 66% doesn't look as good as native 3440x1440. The 4090 is sleeping at DLSS Quality anyway, even with Path Tracing, so this way you get an image that actually looks sharper and better than native 1440p, and the FPS is usually around 80-90 with dips into the low 60s in the most demanding areas like the Cherry Blossom market. Input latency is noticeable when it dips into the 60s with frame gen, but this is a slow-paced shooter that doesn't need fast response times, so as long as frame gen removes the visual judder I am fine with the higher latency.
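For a rough idea of what those percentages mean in pixels, here is a minimal sketch. It assumes the percentage is a per-axis scale applied to the 3440x1440 output resolution; the function name is made up for illustration and is not part of DLSS Tweaks.

```python
def render_resolution(width, height, scale):
    """Internal render resolution for a per-axis scale factor (assumed model)."""
    return round(width * scale), round(height * scale)


OUTPUT = (3440, 1440)  # output resolution mentioned in the post

for label, scale in (("default Quality", 0.66), ("tweaked Quality", 0.74)):
    w, h = render_resolution(*OUTPUT, scale)
    # Share of output pixels that are actually rendered before upscaling
    share = (w * h) / (OUTPUT[0] * OUTPUT[1])
    print(f"{label}: {w}x{h} ({share:.0%} of output pixels)")
```

Under that assumption, the jump from 66% to 74% per axis is a noticeably bigger increase in rendered pixels than the percentages alone suggest, since the pixel count scales with the square of the factor.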

Just highlights that the 4090 is the only version worth buying of the 4 series (so far), but at a price.

Complete opposite to the last gen '90'.

Good point, the 3080 FE was an utter bargain at the time.

'Twas, wannit! 90% of the perf for 1/2 the price. Price vs perf this gen, whilst very expensive like everything else, is a bit more linear between the stack. The 3090 was an odd price for its relative performance.

Even worse for the 3090 was it boiling the VRAM! Took till the Ti version to get that figured out.

I believe the reason the 3080 and 3090 were so close in performance is that nVidia were not planning on using the GA102 die for the 3080, but yields from Samsung were so poor that Samsung cut the price substantially for nVidia. This meant nVidia got more GA102s to use for more products.

Only slight annoyance earlier: I was checking out AV1 encoding via HandBrake and came to realise that AV1 via hardware (NVENC) is not supported yet, but is apparently in the mainline for the next snapshot release (note added in March). I have the latest version, but NVENC is only available under H.265 and H.264, with the option to use AV1 (SVT) instead. I tried it anyway.

Source video from a game capture: 7.1GB

Encoded to H.264 NVENC: 3.7GB

Encoded to H.265 NVENC: 1.8GB

Visually, the quality appears the same. The average encode rate was 171fps for both, which is about 30fps or so higher than the 3080 Ti averaged for the same codecs at these settings.

I tried AV1, but as it's software only in HandBrake it was encoding at 30fps and would have taken 17 minutes vs the 3 minutes of the above, so I will wait until NVENC is supported for AV1. The big benefit is that whilst you have to use higher bitrates in H.264/H.265 to retain good quality, you can get the same quality with only 6-8Mbps using AV1, so being able to smash out AV1 transcodes of game recordings etc. that are half the size of H.265 is quite appealing. It means uploading to YouTube etc. is much faster too.
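The numbers above hang together if you do the arithmetic. A quick sketch, assuming the frame count can be inferred from roughly 3 minutes at 171fps (the helper names are just for illustration):

```python
def encode_minutes(total_frames, fps):
    """Estimated encode time in minutes at a steady average encode rate."""
    return total_frames / fps / 60


def size_ratio(encoded_gb, source_gb):
    """Encoded size as a fraction of the source size."""
    return encoded_gb / source_gb


# Assumption: frame count inferred from ~3 minutes of encoding at 171 fps
frames = 171 * 3 * 60

print(f"NVENC at 171 fps:  {encode_minutes(frames, 171):.1f} min")
print(f"SVT-AV1 at 30 fps: {encode_minutes(frames, 30):.1f} min")
print(f"H.264 output: {size_ratio(3.7, 7.1):.0%} of source")
print(f"H.265 output: {size_ratio(1.8, 7.1):.0%} of source")
```

Treat the result as a ballpark only, since real encode rates vary through the clip.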

And here it is installed. I don't like the RGB lighting, but luckily the side panel is tinted so it's not a distraction. No snagging on the AIO tubes or the 12VHPWR. It's all nice and snug.

What encoding settings were you using and what is the source bitrate?

Is there a telegram part alert for 4090fe?

Last 4090 FE TPA was March 24th.

Default encoding settings, just selecting the NVENC version of H.265 etc.:

Source details:

No wonder you had such good compression with a 105 Mb/s source bitrate. I leave mine on the Shadowplay default at 4K, which is 50 Mb/s. Can't compare encoding speed due to the different resolution and bitrate, but using the built-in NVENC 4K preset I get 120.5 fps with a 4070 Ti, going from 6.62 GB to 3.26 GB.

Ah yes, I did whack up the bitrate in Shadowplay just because there were no performance downsides in doing so.

Maybe I should lower it a bit though since I suppose there is no visual difference in the source AVC capture anyway, although it might be more beneficial at faster motion scenes.
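As a rough guide to what lowering the capture bitrate would do to file size, here is a back-of-envelope sketch. It assumes a roughly constant average bitrate, ignores audio and container overhead, and treats 1 GB as 10^9 bytes, so the figures are estimates only.

```python
def duration_seconds(size_gb, bitrate_mbps):
    """Approximate clip length from file size and average video bitrate."""
    return size_gb * 1e9 * 8 / (bitrate_mbps * 1e6)


def file_size_gb(bitrate_mbps, seconds):
    """Approximate file size for a clip of the given length and bitrate."""
    return bitrate_mbps * 1e6 * seconds / 8 / 1e9


# Figures quoted in the thread: a 7.1 GB capture at 105 Mb/s
length = duration_seconds(7.1, 105)
print(f"Estimated clip length: {length / 60:.1f} min")

# Same clip length at the 50 Mb/s Shadowplay default mentioned above
print(f"Estimated size at 50 Mb/s: {file_size_gb(50, length):.1f} GB")
```

Size scales linearly with bitrate here, so halving the capture bitrate roughly halves the source file before any transcode.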
