It seems like everyone is jumping in on this question, so I'll also put in my 2 cents (and try to stick to the facts as best I can). It really depends on resolution, game, etc. As cards get older they age poorly, and newer software separates them out more and more (due to things like increasing VRAM requirements). Any general "GPU X is x% better than GPU Y" figures are usually overall averages at a given resolution.

This video by Hardware Unboxed does a very factual, numbers-to-numbers comparison between generations and shows how bad things are, especially as of the RTX 4000 series:

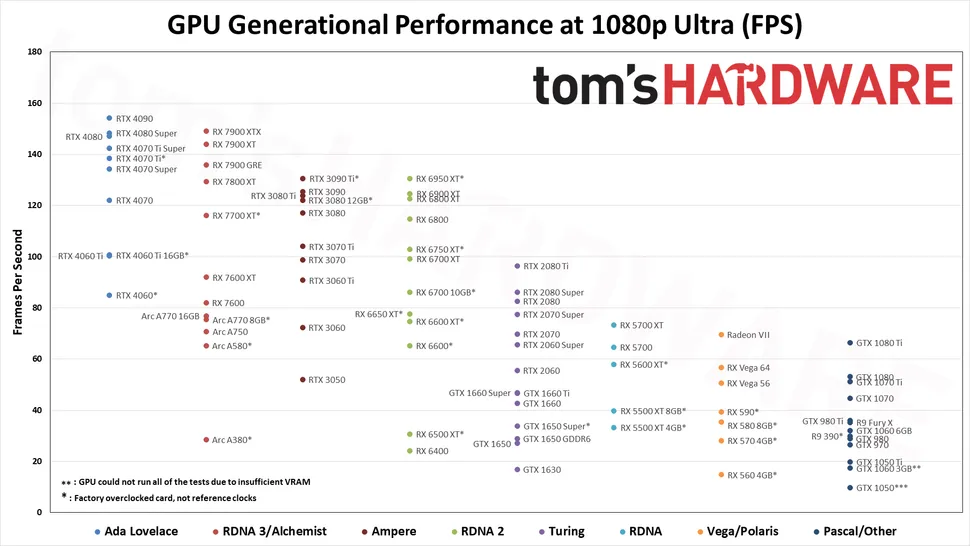

Otherwise, this chart gives a quick overall view of how things look, though admittedly at 1080p. Keep in mind that newer games were also used, so older GPUs may perform disproportionately worse, and there could be some CPU bottlenecking.

Historically, the jumps have been significant all the way down the stack.

980ti > 1080ti ~85% (35.9fps vs 66.4fps)

1080ti > 2080ti ~45% (66.4fps vs 96.3fps)

2080ti > 3090 ~30% (96.3fps vs 125.5fps) - I used the 3090 since the top card went from x80 Ti to x90

3090 > 4090 ~23% (125.5fps vs 154.1fps)

970 > 1070 ~69% (26.5fps vs 44.7fps)

1070 > 2070 ~56% (44.7fps vs 69.8fps)

2070 > 3070 ~42% (69.8fps vs 98.8fps)

3070 > 4070 ~23% (98.8fps vs 122.0fps)

1060* > 2060 ~73% (32.1fps vs 55.5fps) - *6GB model used, since the gimped 3GB version came out later

2060 > 3060 ~30% (55.5fps vs 72.3fps)

3060 > 4060 ~17% (72.3fps vs 84.9fps)
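For anyone who wants to redo these, each percentage is just new fps / old fps - 1. Here's a minimal Python sketch that recomputes the 1080p jumps from the averages listed above, rounding to the nearest whole percent (a different rounding convention can shift a figure by a point). The dictionary and function names are my own, just for illustration.

```python
# Generational uplift = new_fps / old_fps - 1, using the 1080p averages listed above.
FPS_1080P = {
    "970": 26.5, "980ti": 35.9,
    "1060": 32.1, "1070": 44.7, "1080ti": 66.4,
    "2060": 55.5, "2070": 69.8, "2080ti": 96.3,
    "3060": 72.3, "3070": 98.8, "3090": 125.5,
    "4060": 84.9, "4070": 122.0, "4090": 154.1,
}

# Each tuple is (older card, newer card) in the same product tier.
PAIRS = [
    ("980ti", "1080ti"), ("1080ti", "2080ti"), ("2080ti", "3090"), ("3090", "4090"),
    ("970", "1070"), ("1070", "2070"), ("2070", "3070"), ("3070", "4070"),
    ("1060", "2060"), ("2060", "3060"), ("3060", "4060"),
]


def uplift_pct(old_fps: float, new_fps: float) -> float:
    """Percentage by which new_fps exceeds old_fps."""
    return (new_fps / old_fps - 1.0) * 100.0


def uplift_table(fps: dict[str, float]) -> dict[tuple[str, str], int]:
    """Round each tier-to-tier uplift to the nearest whole percent."""
    return {(old, new): round(uplift_pct(fps[old], fps[new])) for old, new in PAIRS}


if __name__ == "__main__":
    for (old, new), pct in uplift_table(FPS_1080P).items():
        print(f"{old} > {new} ~{pct}%")
```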

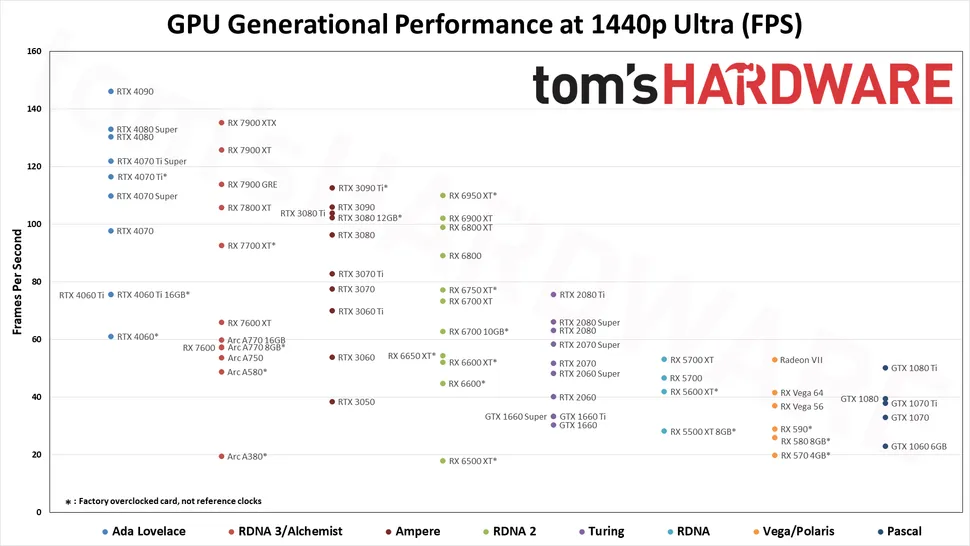

The higher-end cards make bigger jumps at 1440p, as of recent generations at least.

980ti > 1080ti ~89% (26.6fps vs 50.2fps)

1080ti > 2080ti ~51% (50.2fps vs 75.6fps)

2080ti > 3090 ~40% (75.6fps vs 106.0fps)

3090 > 4090 ~38% (106.0fps vs 146.1fps)

1070 > 2070 ~56% (33.1fps vs 51.8fps)

2070 > 3070 ~50% (51.8fps vs 77.7fps)

3070 > 4070 ~26% (77.7fps vs 97.8fps)

1060 > 2060 ~74% (23.0fps vs 40.1fps)

2060 > 3060 ~35% (40.1fps vs 54.0fps)

3060 > 4060 ~13% (54.0fps vs 61.2fps)
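To check the "bigger jumps at 1440p" point, here's a rough Python sketch that puts each tier's 1080p and 1440p uplift side by side, using only the averages listed in the two lists above. The 970 is left out since there's no 1440p figure for it, and the names are just illustrative.

```python
# Compare each tier's generational uplift at 1080p and 1440p using the averages above.
# The 970 is left out because no 1440p figure is listed for it.
FPS = {
    # card: (1080p avg fps, 1440p avg fps)
    "980ti": (35.9, 26.6), "1080ti": (66.4, 50.2), "2080ti": (96.3, 75.6),
    "3090": (125.5, 106.0), "4090": (154.1, 146.1),
    "1070": (44.7, 33.1), "2070": (69.8, 51.8), "3070": (98.8, 77.7),
    "4070": (122.0, 97.8),
    "1060": (32.1, 23.0), "2060": (55.5, 40.1), "3060": (72.3, 54.0),
    "4060": (84.9, 61.2),
}

# (older card, newer card) pairs within the same tier.
PAIRS = [
    ("980ti", "1080ti"), ("1080ti", "2080ti"), ("2080ti", "3090"), ("3090", "4090"),
    ("1070", "2070"), ("2070", "3070"), ("3070", "4070"),
    ("1060", "2060"), ("2060", "3060"), ("3060", "4060"),
]


def uplift_pct(old_fps: float, new_fps: float) -> float:
    """Percentage by which new_fps exceeds old_fps."""
    return (new_fps / old_fps - 1.0) * 100.0


def resolution_gap(old: str, new: str) -> tuple[float, float, float]:
    """Return (1080p uplift, 1440p uplift, 1440p minus 1080p), all in percent."""
    old_1080, old_1440 = FPS[old]
    new_1080, new_1440 = FPS[new]
    up_1080 = uplift_pct(old_1080, new_1080)
    up_1440 = uplift_pct(old_1440, new_1440)
    return up_1080, up_1440, up_1440 - up_1080


if __name__ == "__main__":
    for old, new in PAIRS:
        up_1080, up_1440, gap = resolution_gap(old, new)
        print(f"{old} > {new}: 1080p {up_1080:.0f}%, 1440p {up_1440:.0f}%, gap {gap:+.0f} pts")
```

With these numbers, the flagship gap grows from about 4 points (980ti > 1080ti) to about 15 points (3090 > 4090), while 3060 > 4060 goes the other way by about 4 points.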

So what about 4K?

The older GPUs drop off like a rock, and some GPUs perform far worse than their segment would suggest due to things like limited VRAM. Unfortunately, Tom's Hardware didn't include 4K numbers for most of the older GPUs, so I can't make much of a comparison. But the 1440p vs 1080p results should give you an idea of what to expect. The 4090 is 62% faster than the 3090 (114.5fps vs 70.7fps), and the 3090 is 63% faster than the 2080 Ti (70.7fps vs 43.5fps). I'm guessing part of the reason for the big jumps here is that the flagships are the only cards that get lots of memory and decent memory bandwidth, enough to handle 4K titles well.

Even though I've just done all of those very rough calculations above, I don't feel they paint a representative picture of what to expect. Nvidia messing around with segmentation and naming makes like-for-like generational comparisons tricky. This is especially true because, with newer games in the test suite, the older architectures tend to do worse. For example, a 1070 now usually appears to beat a 980 Ti, even though back when it released the two cards were more neck and neck.

With older GPUs being gimped on newer software, these numbers likely amplify the apparent trend of shrinking gains with each generation, since the older GPUs would have held up better at the time their successors were released.

Perhaps back in the day one might have expected 50% better performance from the next generation. But with the 40 series we're lucky to get significant gains over the previous generation: the higher-tier cards get the best gains, mainly at the highest resolutions and settings, while the mainstream cards have badly stagnated. The Super cards obviously hold up better, but there's no guarantee there will be a Super refresh every generation.

A 5060 with a disproportionate 50% performance boost over a 4060 would still struggle to beat a current-gen 4070. It looks even worse when compared to the 4070 Super.

A 5070 getting a 40%+ boost over the 4070, like we used to see, would certainly get close to a 4090. But in reality, we'd be lucky for a 5070 to beat a 4080.

For 4K, sure, a 5090 should be able to get ahead of a 4090 by at least 30-40%, if not the usual 60% we've had before.
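To make the speculation above a bit more concrete, here's a rough Python sketch that applies the hypothetical uplifts from these paragraphs (50% for a 5060, 40% for a 5070, and 30%, 40% or 60% for a 5090) to the 40-series averages quoted earlier. The uplifts are assumptions, not measurements, and there are no 4080 or 4070 Super figures listed here, so those comparisons are left out. Function names are made up for illustration.

```python
# Hypothetical next-gen projections: apply an ASSUMED uplift to a 40-series card's
# average fps and compare with another current card. The uplift percentages are
# the speculative ones from the text, not measured results.
FPS_1080P = {"4060": 84.9, "4070": 122.0, "4090": 154.1}
FPS_1440P = {"4060": 61.2, "4070": 97.8, "4090": 146.1}
FPS_4K = {"4090": 114.5}


def project(base_fps: float, uplift_pct: float) -> float:
    """fps a hypothetical successor would reach with the given percentage uplift."""
    return base_fps * (1.0 + uplift_pct / 100.0)


def relative_to(projected: float, target_fps: float) -> float:
    """Projected fps expressed as a percentage of the target card's fps."""
    return projected / target_fps * 100.0


if __name__ == "__main__":
    for label, fps in (("1080p", FPS_1080P), ("1440p", FPS_1440P)):
        hypo_5060 = project(fps["4060"], 50)  # assumed +50% over a 4060
        hypo_5070 = project(fps["4070"], 40)  # assumed +40% over a 4070
        print(f"{label}: hypothetical 5060 = {relative_to(hypo_5060, fps['4070']):.0f}% of a 4070")
        print(f"{label}: hypothetical 5070 = {relative_to(hypo_5070, fps['4090']):.0f}% of a 4090")
    for pct in (30, 40, 60):  # assumed 5090 uplifts over a 4090 at 4K
        print(f"4K: 4090 +{pct}% -> {project(FPS_4K['4090'], pct):.1f} fps")
```

With these numbers, the hypothetical 5060 lands at roughly 94% of a 4070 at 1440p, and the hypothetical 5070 at roughly 94% of a 4090. At 1080p both edge slightly ahead (about 104% and 111%), though the fastest cards may be CPU-limited at 1080p, as mentioned for the chart earlier.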

But overall, this is just speculation based on previous performance, and as we've seen, what happened before means bugger all when Nvidia can choose to mix things up and shift naming, pricing and segmentation around.

TL;DR: I've wasted a decent amount of words showing past performance increases, and they might not be indicative of what to expect from the 5000 series.

The 4K results are better, presumably because it's now GPU-limited.