What's the TL;DR on the new Nvidia cards? It looks like the 4080 and 4090 are releasing soon. Is the performance a mystery until the NDA lifts?

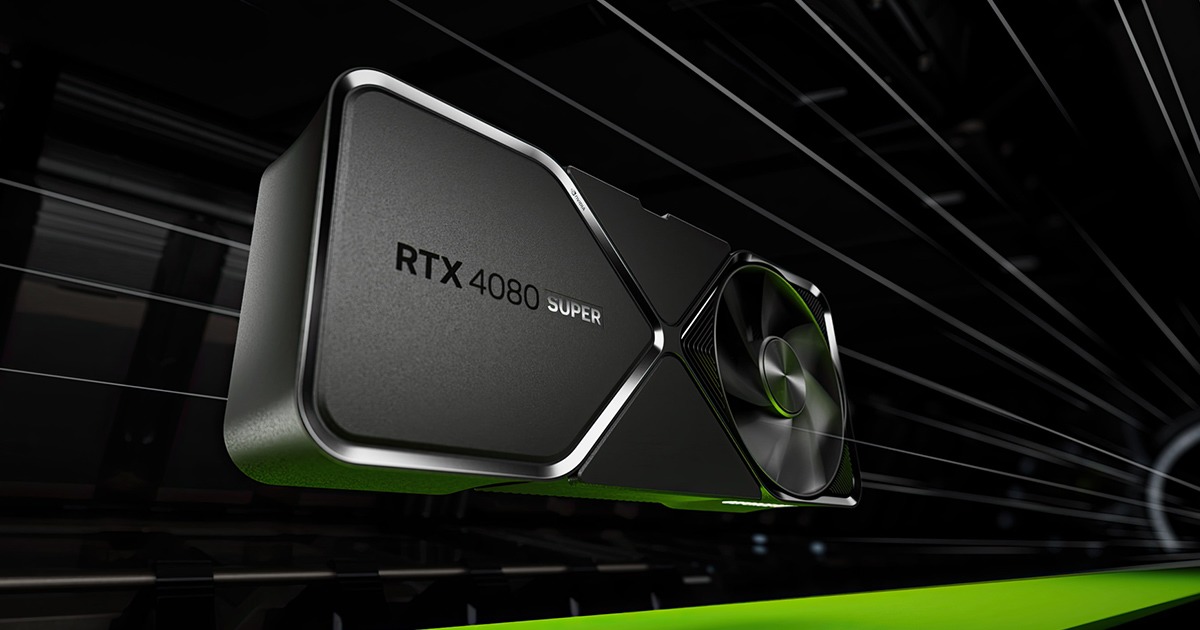

The price is high, and performance looks good but not stunning in current-gen games. Nvidia are banking on their DLSS and ray tracing technology being embraced to make these cards really shine in the future. People are annoyed over the naming scheme, especially the 16 GB and 12 GB 4080 sharing a name when the 12 GB 4080 is a very different card.

I see Nvidia are advertising a 4080 for £950. What is the likely performance uplift over my 6800XT - or, is this unknown at the moment?

Probably small, something like 5-20%. It's going to vary by game, and it's unknown until these cards get properly benchmarked by independent parties. However, if future games embrace DLSS and go hard on ray tracing, the difference will be much larger.

For my money? Not worth upgrading. Wait for the next generation and see what happens with the games you want to play.
