Well, it must be a train wreck on Xbox then, because I found it near enough unplayable at any resolution or settings on my 3080.

Could not hold 60fps no matter what I tried, and even at 60fps it was very choppy.

The consoles target 30fps with a dynamic resolution. It can drop as low as 1440p outdoors, for example when driving fast.

Also I'm guessing you are resisting using DLSS?

This is actually only slightly debatable? I think it is working without problems, but I would need to test it a bit more, since I have an old CPU and am waiting for a new one to play it.

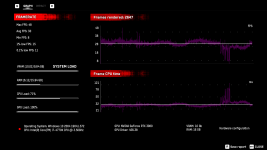

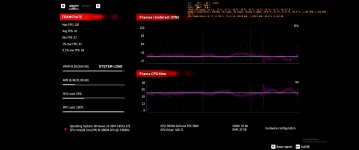

So these are max settings: extra details set to 100% at native 4K, with RTX on max, but without DLSS, which would lower VRAM consumption a bit.

Do you get much higher frame rates with lower settings? Because that looks CPU-bound to me. 30ms to 80ms CPU frame times, ouch.
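For a rough sense of what those frame times mean: fps is just 1000 divided by the frame time in milliseconds. A quick sketch of the arithmetic (the function names are mine, purely for illustration, and this ignores any overlap between CPU and GPU work):

```python
def fps_from_frame_time_ms(frame_time_ms: float) -> float:
    """Convert a per-frame time in milliseconds to frames per second."""
    return 1000.0 / frame_time_ms


def frame_budget_ms(target_fps: float) -> float:
    """Time available per frame, in milliseconds, to hit a target fps."""
    return 1000.0 / target_fps


# CPU frame times quoted above
for ms in (30, 80):
    print(f"{ms} ms -> {fps_from_frame_time_ms(ms):.1f} fps")
# 30 ms -> 33.3 fps
# 80 ms -> 12.5 fps

# Budget needed to hold 60fps
print(f"60 fps needs <= {frame_budget_ms(60):.1f} ms per frame")
# 60 fps needs <= 16.7 ms per frame
```

So if the CPU alone is taking 30ms to 80ms per frame, it caps things at roughly 12 to 33fps no matter what the GPU is doing, which is why dropping graphics settings may not help much.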

Thought that was confirmed a while ago in the "10gb is it enough" thread?

The card seems to run out of grunt before it runs out of VRAM, from what we can see so far.

Which is exactly what you want. 4K games keep getting more demanding every year. Even though the 2080 Ti was supposedly a 4K card in its time, the RTX 3070 isn't unless you play those same old games. 8GB is therefore plenty.
