If Ampere offers tiny performance increases while simultaneously charging huge price increases, your idea of "value" might continue on another generation.

However, if Ampere offers real value, like pretty much any generation before Turing, the emperor will have no clothes.

They won't offer value. They will never offer value until every single one of them is matched by AMD and the price war begins. Which isn't happening. They may offer value compared to Turing, but that isn't saying much, is it?

They should offer lots of performance, but that remains to be seen. However, this co-processor could apparently contain the tensor cores*, which would explain exactly why they are so much better at RT.

Apparently the 3060 is as good at RT as the 2080Ti. Remember though, I said

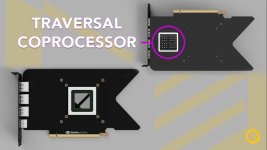

as good at RT not as fast ! so any performance increase in RT will only take it so far because of the core itself. However, look at this pic.

Let me explain that. There's a GPU die core, and a tensor core co-processor. That would explain why the 2080Ti die is 754mm², yet apparently either the 3080 or 3090 is "only" 600 odd. Sure, there's some kind of shrink here, but we have no idea how dense Samsung's shrunk cores are (well, we could probably find out, but I will leave that to guys like AdoredTV etc). What I am saying is, if you combined both cores on one die it would have been so big and monolithic that the failure rates would have been incredibly high, and you would have got, like, two big working dies per wafer.

This way they can make GPU dies on one wafer, and the possible tensor core dies on another wafer. Making the tensor cores smaller in die size means better yields all around. Same goes for the GPU die itself.
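
To put rough numbers on the yield argument, here's a quick Python sketch using the simple Poisson yield model (yield = exp(-area × defect density)) and the usual dies-per-wafer approximation. Everything numeric in it is my own illustrative assumption: the 0.1 defects/cm² defect density, the 300mm wafer, and the 750/600/150 mm² die areas are not real foundry or Nvidia figures, and the function names are just made up for the example.

```python
import math


def dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """Rough gross die count per wafer (ignores scribe lines and edge exclusion)."""
    radius = wafer_diameter_mm / 2.0
    whole = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return int(whole - edge_loss)


def poisson_yield(die_area_mm2, defects_per_cm2):
    """Fraction of dies with zero defects under the simple Poisson model."""
    area_cm2 = die_area_mm2 / 100.0  # 100 mm^2 per cm^2
    return math.exp(-area_cm2 * defects_per_cm2)


def good_dies_per_wafer(die_area_mm2, defects_per_cm2, wafer_diameter_mm=300.0):
    """Expected number of defect-free dies per wafer."""
    gross = dies_per_wafer(die_area_mm2, wafer_diameter_mm)
    return gross * poisson_yield(die_area_mm2, defects_per_cm2)


if __name__ == "__main__":
    ASSUMED_DEFECT_DENSITY = 0.1  # defects per cm^2, purely illustrative
    # Hypothetical areas: one big monolith vs. a split GPU die + small co-processor die
    for label, area in [("monolithic", 750.0), ("gpu only", 600.0), ("co-processor", 150.0)]:
        y = poisson_yield(area, ASSUMED_DEFECT_DENSITY)
        good = good_dies_per_wafer(area, ASSUMED_DEFECT_DENSITY)
        print(f"{label:>12}: {area:.0f} mm2, yield {y:.1%}, ~{good:.0f} good dies/wafer")
```

The point isn't the exact numbers, it's the shape: yield falls off exponentially with die area, so two smaller dies on separate wafers waste a lot less silicon than one giant die. Real yields also depend on harvesting partially defective dies, which this ignores.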

And see, that is why Turing was so expensive. I've said it before but people don't listen, Turing was *really* expensive to produce. The dies were honking great monoliths, the failure rates were really high and the cost was high also (being TSMC). So only part of it was Jen doing you up the Gary Glitter, the rest was solely down to costs. The 2080Ti FE cooler cost them about $60 just to produce.

and use standard ATX cables (i.e. 2x 8-pin PCI Express)
