You can pick your game and we can test if you are interested. I can back up everything I say with actual results

I'm talking about the 7800X3D. I don't have one, do you? You said "I have the CPUs in question, no need"?

Ah sorry, I didn't comment on the 7800X3D; I specifically said I don't have it so I can't test it. Which makes your previous reply kinda weird, doesn't it?

I didn't pick one game, I've tried a lot: Warzone, Cyberpunk, TLOU, Hogwarts, Spider-Man. TLOU is just the worst case when it comes to power draw, that's why I mentioned that one; my CPU peaks at 117 W.

Point still stands: you picked one game and made it sound almost like it's the norm? But I won't get involved, you get on with it.

Could ya get some screenshots up of benches that show this? I'm interested to know the truth about all this. Most reviews just show peak power consumption in rendering or Prime95 etc., but not power consumption while gaming, or even idle and low-load power usage. Best to get a new thread up and running so we can finally get to the bottom of this, cuz at the moment we are just going in circles with no factual data being presented.

You've no idea, do you? A 12900K consumes 110 W max in the heaviest game that exists right now (The Last of Us!), which is very similar to how much the 5800X3D consumes in this game (but with much, much worse performance, mind you).

No idea about the 7800X3D, didn't have time to test it yet.

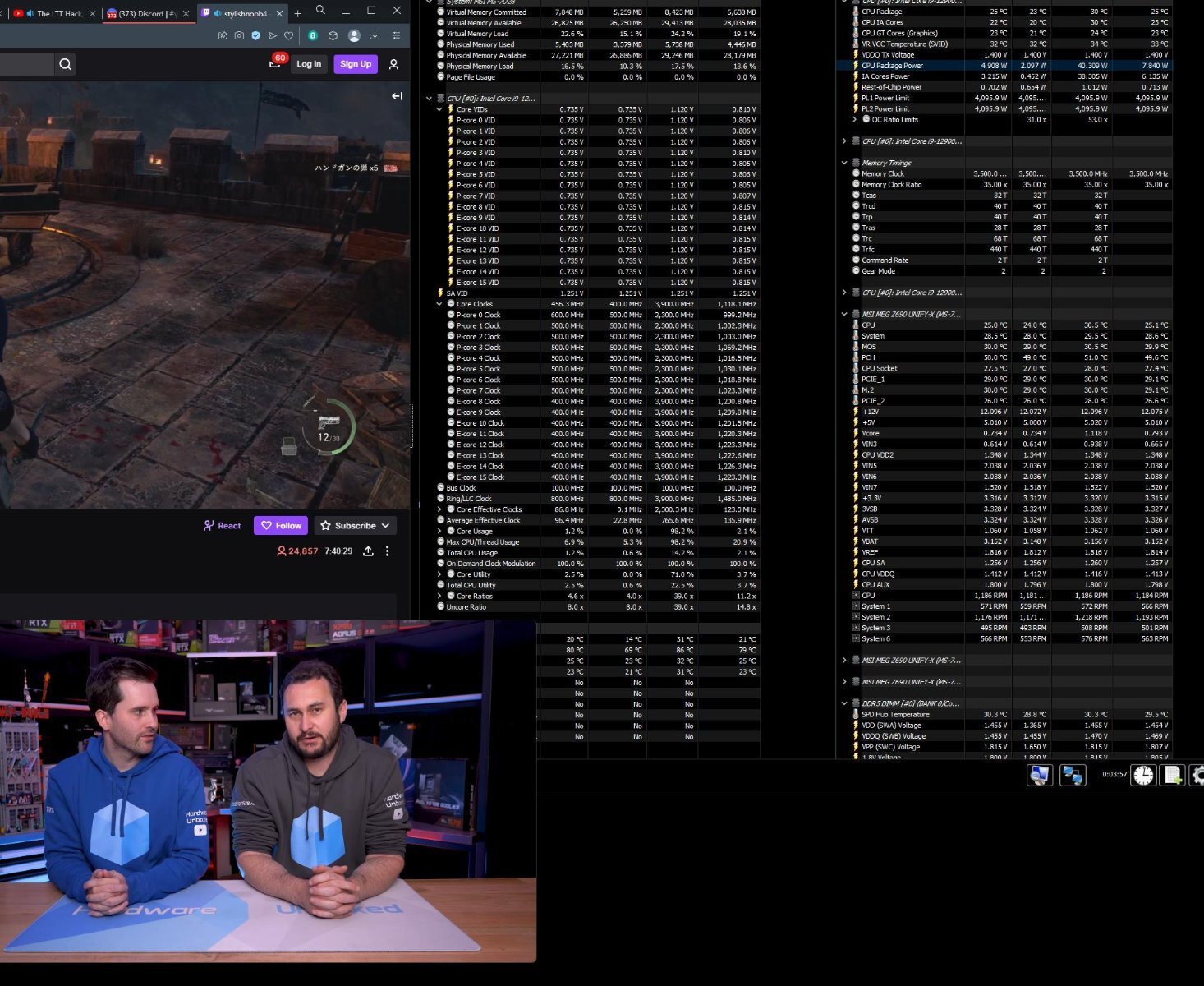

Well, I have this for example: I'm on a Discord call, watching a Twitch stream and a YouTube video, and power draw is between 4 and 6 watts. Usually after a full 8 hours of actual work (mostly multiple browsers, Excel, Word, PDFs etc.; nothing heavy, but lots of apps open) it averages out at around 6 to 8 watts.
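To put the "6 to 8 watts over 8 hours" figure in perspective, here is a rough sketch that converts an average power draw into energy used. The function name `energy_wh` is made up for illustration; the only inputs are the averages and the 8-hour duration quoted above.

```python
def energy_wh(avg_watts: float, hours: float) -> float:
    """Energy in watt-hours for a given average power over a duration."""
    return avg_watts * hours


# Averages quoted in the post: roughly 6 to 8 W over an 8-hour workday.
low = energy_wh(6, 8)
high = energy_wh(8, 8)
print(f"{low:.0f} to {high:.0f} Wh over the workday")
```

This only covers CPU package power as reported in software, not what the whole system pulls at the wall.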

@Bencher what's your channel? Send the link. This is very interesting stuff. How do you measure power draw: is it at the wall or in software, e.g. HWiNFO?

Sorry I'm on the phone now, I'll send you when I'm home.

Power draw is read from HWiNFO. I calibrated the motherboard so it's as accurate as it can be (AC/DC loadline, VID = Vcore etc.; you can actually see it in the screenshot above). The only thing I can't fully account for is VRM losses, but those should be minimal since I have a high-end board, and they are kinda irrelevant anyway if you are comparing between CPUs.
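If anyone wants to post comparable numbers rather than screenshots, something like the sketch below could summarise a sensor log exported as CSV. It assumes the log has a column named `CPU Package Power [W]`; that column name and the sample rows are assumptions for illustration, so check the header of your own log and pass the right name.

```python
import csv
import io
from statistics import mean


def summarize_power(csv_text: str, column: str = "CPU Package Power [W]") -> dict:
    """Return sample count, average and peak for one power column of a CSV log."""
    readings = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            readings.append(float(row[column]))
        except (KeyError, TypeError, ValueError):
            continue  # skip rows without a numeric value (e.g. repeated headers)
    if not readings:
        raise ValueError(f"no numeric samples found in column {column!r}")
    return {
        "samples": len(readings),
        "average_w": mean(readings),
        "peak_w": max(readings),
    }


# Made-up sample rows, only to show the expected shape of the input.
sample = """Date,Time,CPU Package Power [W]
1.1.2024,10:00:00,4.0
1.1.2024,10:00:02,6.0
1.1.2024,10:00:04,5.0
"""

print(summarize_power(sample))
```

Posting average and peak for the same game and scene would make the comparison between CPUs a lot less circular.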

I had a 13900K briefly but I swapped back to the 12900K. The 13th-gen part was a power hog in gaming unless you downclocked it. It consumed around 60 to 80% more power than the 12900K. It was fast, too fast, but the power draw was nutty.

Yes, but my motherboard also has readings directly from the VRMs.

Is it the CPU package power sensor under the Core i9 12900K in HWiNFO64? That is the only reading I have on mine for power draw.

Well, I gave up on static all-core clocks being something I desire, sold off the 13900K and am now on a 7800X3D.

So much better and more stable, with so much lower power usage and much less heat dumped into the case as a result.

And the 7800X3D has only 8 strong cores and a bunch of L3 cache (96MB), and it spanks the 13900K in gaming anyway. So much better than dealing with Intel's high heat and power consumption and having to disable those useless-for-gaming e-waste cores.

What board and memory did you go for with your 7800x3d CPU?

Had you previous experience with a recent AMD build, and did all go well for you when putting it all together ?

Thanks

It was a shame with Intel, as with Coffee Lake and prior, passing lots of stress/stability tests seemed to guarantee real-world stability. That sadly was not the case with Raptor Lake, as WHEAs were very random, indicating that easy and fast degradation with Raptor Lake may be very real. So I sold the chip, mobo and RAM off and am now much happier with a more reliable and stable 7800X3D, even somewhat tweaked, but obviously with no static clocks.

You really should have got a 7800X3D from the start, as it is perfect for people like yourself that just want to plug and play. I've tested my overclocked/tuned 13700K against my CO/tuned 7800X3D, and the 13700K was faster in all games but one and way faster in general software usage. It's overclocked to 5.8GHz all core and 6.2GHz boost, has been for months, and I get no WHEA errors etc.

Raptor Lake overclocking really isn't for novices or people that don't have the time to learn/do it properly; much better to get a 7800X3D for gaming instead.

Speaking of which, do you use voltage offsets or static Vcore with LLC?

I use adaptive voltage. I have only ever used constant voltage the very first time when overclocking, to establish roughly what voltage a CPU needs for a certain clock speed.

For a 5.8GHz all-core load it will use ~1.394v

I have been doing lots and lots of testing, quite extreme actually.

I have been tinkering with various hidden power settings that manipulate the cpu scheduler.

Doing tests with cpu-z bench as quick and simple test.

So when we think about single-threaded load: by default these hybrid CPUs keep all p-cores parked. I don't yet know what determines when a p-core gets unparked, but I do know that it will always prioritise the 2 preferred cores, which clock higher than the rest. If all the p-cores are unparked, which can be done by adjusting the scheduler settings to force them to always be unparked (or likely via software calls; as an example, all-core Cinebench unparks them), then single-threaded performance will be hurt. It seems to be the parking mechanism that routes single-threaded load to the preferred cores; once more cores are unparked, the load is at the mercy of the standard CPU scheduler.

If the e-cores are disabled in the BIOS, then some p-cores will always be unparked, which increases the chance of non-preferred p-cores being unparked. I have yet to test with e-cores disabled, as personally I don't think that's the best way to use these chips, but I will at some point. The second likely problem with disabling e-cores is that you can't move background tasks off the p-cores. I was able to observe that this has a noticeable impact on the single-threaded CPU-Z score.

So, using something like Process Hacker, move svchost, browser processes, Afterburner, HWiNFO, Discord and other background apps to the e-cores via affinity settings. There is also the CPU scheduler master setting, which has 5 options:

Automatic

efficient cores

prefer efficient cores

prefer performant cores

performant cores

Now when I tested 'performant cores', it gave a lower score vs 'prefer performant cores', likely because with 'prefer' it moves less demanding tasks that conflict on the core over to e-cores when a heavy task is running. I hadn't moved every single background binary to e-cores, only the biggest ones.
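For anyone who would rather script the "move background apps to e-cores" step than click through Process Hacker, here is a rough sketch. It assumes logical CPUs are numbered with the p-core threads first and the e-cores after them (so an 8P+8E chip with hyper-threading would have e-cores at 16 to 23); check that against Task Manager or HWiNFO on your own system. The function names are made up, and `pin_to_cpus` needs the third-party `psutil` package plus enough privileges to touch the target processes.

```python
def logical_cpu_layout(p_cores: int, e_cores: int, smt: bool = True):
    """Return (p_cpus, e_cpus) as lists of logical CPU indices.

    Assumption: p-core threads are enumerated first, e-cores after them.
    """
    p_count = p_cores * (2 if smt else 1)
    p_cpus = list(range(p_count))
    e_cpus = list(range(p_count, p_count + e_cores))
    return p_cpus, e_cpus


def affinity_mask(cpus) -> int:
    """Build the bitmask form of an affinity list (bit n = logical CPU n)."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return mask


def pin_to_cpus(process_names, cpus):
    """Restrict every running process whose name matches to the given CPUs."""
    import psutil  # third-party; imported here so the helpers above work without it

    wanted = {name.lower() for name in process_names}
    pinned = []
    for proc in psutil.process_iter(["name"]):
        try:
            if (proc.info["name"] or "").lower() in wanted:
                proc.cpu_affinity(cpus)
                pinned.append(proc.pid)
        except (psutil.NoSuchProcess, psutil.AccessDenied):
            continue  # process exited or is protected; leave it alone
    return pinned


# Hypothetical 8P+8E layout with hyper-threading enabled.
p_cpus, e_cpus = logical_cpu_layout(p_cores=8, e_cores=8)
print(e_cpus, hex(affinity_mask(e_cpus)))
# Example call (not run here): pin_to_cpus(["discord.exe", "hwinfo64.exe"], e_cpus)
```

Affinity set this way only lasts until the process restarts, so it would need re-applying after each launch.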

The e-cores don't just offer more raw grunt; they also offer more cores to reduce scheduling bottlenecks (which can cause stutters in games). This is the primary reason why I think it's better to have them available.

The downside is that if anything interactive runs on them instead of the p-cores, that might give slower interactive performance, and they can also potentially be less power efficient. As an example, if any p-core is unparked, all e-cores will stay at max clocks due to the default power scheduler settings (a hidden setting), and this also raises the Vcore significantly. So I expect there is no perfect solution to cover all bases, but it is still something I am experimenting with. I have noticed things occasionally run on them when I don't want them to, e.g. the UAC prompt always seems to use e-cores to process the prompt box, unless 'performant cores' forces it over, and I haven't found a way to adjust that via Process Hacker.

Via hidden settings the minimum number of unparked p-cores is adjustable, so you can force them to stay awake. If using prefer p-cores or forced p-cores, these do NOT force cores to unpark, so they only actually work if p-cores are available. Luckily, keeping both preferred p-cores always unparked has no noticeable effect on Vcore or power consumption. However, keeping all p-cores always unparked does have an effect, especially when p-core clocks are increased, and as mentioned earlier it no longer ensures single-threaded stuff goes to a preferred p-core.

If you don't care about the scheduling bottlenecks from having fewer cores, then one approach is to disable all e-cores and set all cores to the same clocks as the preferred p-cores (if the CPU can handle it; it might need a voltage bump). Changing to an all-core clock removes any concern about needing preferred p-cores for max single-threaded performance, but you will still lose some performance from having background tasks running on them (my single-thread score went down by about 4-5% when not moving tasks to e-cores).

I have also confirmed that, as a quick and dirty adjustment, simply using the High Performance or Ultimate Performance power profile, combined with routing background stuff specifically to e-cores and adjusting the thread scheduler to "prefer performant cores", gives insane performance all over the shop, easily beating my 9900K at everything. It is a bit power inefficient this way under light load though, e.g. 30 watts CPU package power to watch YouTube.

For what it's worth, I think it's inevitable AMD will bring out a variant of e-cores. On their server chips I think they are just cores with less cache, so we might see the same on future desktop chips.