Post your hard drive benchmarks!

- Thread starter Scoobie Dave

- Joined

- 16 Sep 2003

- Posts

- 2,901

- Location

- Plugged into the Matrix

Any more results with the latest drives?

Post em in here!

Soldato

- Joined

- 27 Dec 2006

- Posts

- 2,916

- Location

- Northampton

Soldato

- Joined

- 25 Sep 2003

- Posts

- 3,750

- Location

- Manchester

5x500gb in raid5

\o/

It's 'migrating', only another 70 something hours to go...

Soldato

- Joined

- 25 Sep 2003

- Posts

- 3,750

- Location

- Manchester

I know, I was just posting it up as a laugh really.

- Joined

- 16 Sep 2003

- Posts

- 2,901

- Location

- Plugged into the Matrix

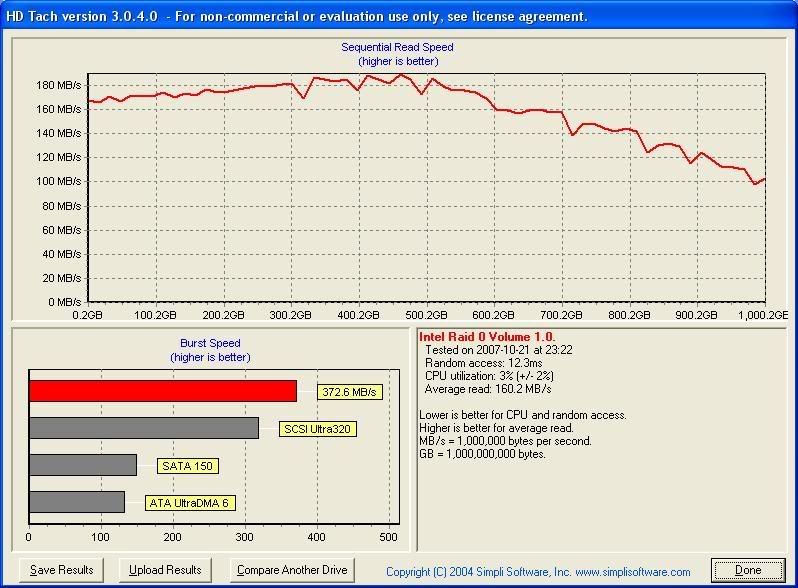

Resurrect this:

2 x Seagate ST3250410AS 250GB Hard Drive @128kb

Looks a bit low....

Is your write back cache enabled?

5x500gb in raid5

You get 500GB of storage space after buying 2.5TB of storage? Doesn't seem worth it to me.

His HD Tach shows 2TB, as it should in RAID 5?

Soldato

- Joined

- 25 Sep 2003

- Posts

- 3,750

- Location

- Manchester

You get 500GB of storage space after buying 2.5TB of storage? Doesn't seem worth it to me.

It's 4x500gb as the storage drives, the fifth 500gb just does the parity stuff so if one of the four drives fails I can just replace it with another 500gb drive and it will carry on like nothing happened. That's why it's worth it.
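For anyone wondering how a failed drive can be replaced without losing anything, here is a minimal Python sketch of single-parity recovery. It assumes plain XOR parity over one stripe; the block contents and the `xor_blocks` helper are made up for illustration and are not taken from any particular controller.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Four hypothetical data blocks, one per drive's worth of data in a stripe.
data = [b"AAAA", b"BBBB", b"CCCC", b"DDDD"]
parity = xor_blocks(data)

# Simulate losing one block, then rebuild it from the survivors plus parity.
lost = 2
survivors = [blk for i, blk in enumerate(data) if i != lost]
rebuilt = xor_blocks(survivors + [parity])
print(rebuilt == data[lost])

# Usable space with single parity: one drive's worth goes to parity.
drive_count, drive_size_gb = 5, 500
usable_gb = (drive_count - 1) * drive_size_gb
print(usable_gb, "GB usable")
```

The same arithmetic gives 4 x 500GB of usable space from five drives, whichever drives end up holding the parity.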

Just to be pedantic, what you've described is RAID 3 (dedicated parity disk). With RAID 5 the parity is striped across all drives, so in a 5-disk array each drive is 80% data and 20% parity. The advantage of striping the parity is that the RAID 5 array is not limited by the write speed of a single drive in the way that RAID 3 is. However, to take advantage of this you need a controller capable of calculating the parity bits at a sufficiently high rate to keep up with the disks, and that usually means splashing a lot of cash.
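The 80%/20% split can be seen in a rough Python sketch of a rotating-parity layout. The rotation order used here is an assumption (real controllers differ in which drive holds parity for a given stripe), and `raid5_layout` is a hypothetical helper, not any vendor's algorithm.

```python
def raid5_layout(drive_count, stripe_count):
    """Return one row per stripe, one column per drive: 'P' for parity, 'D' for data."""
    layout = []
    for stripe in range(stripe_count):
        # Assumed rotation: parity starts on the last drive and moves left each stripe.
        parity_drive = (drive_count - 1 - stripe) % drive_count
        layout.append(["P" if d == parity_drive else "D" for d in range(drive_count)])
    return layout

drives = 5
layout = raid5_layout(drives, stripe_count=drives)
for row in layout:
    print(" ".join(row))

# Fraction of each drive's blocks that hold parity over one full rotation.
parity_share = [sum(row[d] == "P" for row in layout) / len(layout) for d in range(drives)]
print(parity_share)
```

Every stripe has exactly one parity block, but no single drive holds all of them, which is why writes are not funnelled through one disk.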

Associate

- Joined

- 26 Oct 2002

- Posts

- 1,728

- Location

- Surrey

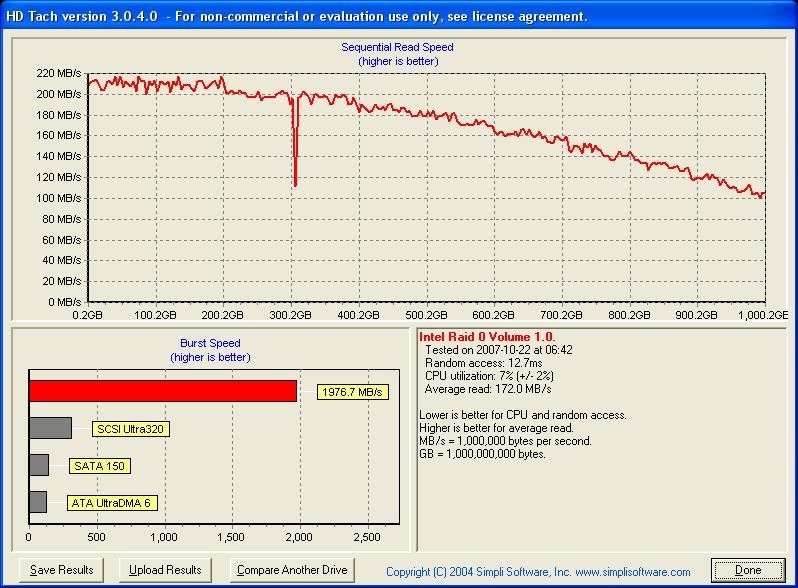

Before I get any 'that's really slow': the pic above is of my main array on my home server. As you can see, it smashes through the 2048GB barrier, and as I have kept it as one physical drive/partition these fairly heavy speed drops were to be expected.

As it is my home server and hosts all my movies/everything else under the sun, I wasn't bothered about flat out speed, merely the huge single drive as I use a LOT of space. The array is well over half full already. 11xWD5000KS drives in raid 5 with a hot spare. To be honest the speeds shown on that test don't do it justice IMO, as stuff really flies up and down the gigabit between the machines and we have never had the slightest hint of a bottleneck.
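As a side note on where a 2048GB figure comes from: assuming the barrier meant here is the usual one for 32-bit sector addresses with 512-byte sectors (as in an MBR partition table), the arithmetic works out as in this small Python sketch. The constant names are illustrative only.

```python
SECTOR_BYTES = 512   # assumed traditional sector size
ADDRESS_BITS = 32    # assumed 32-bit sector addressing, as used by MBR partition tables

max_bytes = (2 ** ADDRESS_BITS) * SECTOR_BYTES
max_gib = max_bytes // 2 ** 30
print(max_gib)  # 2048
```

Anything larger than that needs a wider sector address, or the array has to be presented as several smaller volumes.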

Before I get any 'that's really slow'

Tough, you're getting one - that's really slow.

No seriously, it is. With 11 drives you should be easily cracking 250MB/s - I get over 200 with 8 T7K250s. Although looking at that card it's PCI-X; are you running it in a plain PCI slot?
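To show why the slot matters, here is a back-of-envelope Python sketch of theoretical peak bus bandwidth (bus width times clock). These are ceilings on a shared bus, so real throughput will be lower; the `bus_peak_mb_s` helper is just for illustration.

```python
def bus_peak_mb_s(width_bits, clock_mhz):
    """Theoretical peak of a parallel bus in MB/s: bytes per transfer times MHz."""
    return width_bits / 8 * clock_mhz

plain_pci = bus_peak_mb_s(32, 33.33)    # 32-bit, 33 MHz conventional PCI
pci_x_133 = bus_peak_mb_s(64, 133.33)   # 64-bit, 133 MHz PCI-X

print(f"plain PCI: {plain_pci:.0f} MB/s")
print(f"PCI-X 133: {pci_x_133:.0f} MB/s")
```

A plain PCI slot tops out well below 250MB/s even on paper, whereas PCI-X 133 leaves plenty of headroom for that figure.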

Associate

- Joined

- 26 Oct 2002

- Posts

- 1,728

- Location

- Surrey

Plain PCI? That would be a hanging offence in my book! The board is an Asus P5WDG2 WS PRO with PCI-X 133 slots. Usual thing of all hardware that isn't used is disabled, as in USB etc. Onboard raid is being used for the OS drive and the only other card in there is an x300 pci-e graphics card.

It is running a GPT disk on 32 bit Server 2003 R2 (http://www.microsoft.com/whdc/device/storage/LUN_SP1.mspx). While I was never impressed with the 'on paper' speed, it runs perfectly well with 100% reliability and is a stupid amount of storage. The only way to see for sure would be to destroy the array and either create 2 much smaller arrays, OR enable auto carving, but I don't have a spare few TBs about the place to move the data to.

it runs perfectly well with 100% reliability and is a stupid amount of storage.

At the end of the day, for what you're using it for those are the important things; out and out performance isn't always necessary.

Soldato

- Joined

- 25 Sep 2003

- Posts

- 3,750

- Location

- Manchester

Just to be pedantic, what you've described is RAID 3 (dedicated parity disk). With RAID 5 the parity is striped across all drives, so in a 5-disk array each drive is 80% data and 20% parity. The advantage of striping the parity is that the RAID 5 array is not limited by the write speed of a single drive in the way that RAID 3 is. However, to take advantage of this you need a controller capable of calculating the parity bits at a sufficiently high rate to keep up with the disks, and that usually means splashing a lot of cash.

Thanks for explaining that. I can see why it takes so long to migrate now. All the data needs to be rebuilt over all the drives and the parity built. I just thought the 5th drive was plonked in and the parity was then put on that drive.

I'll post up a benchmark when it's finished for a direct comparison between a 4 disk array raid 0 and a 5 disk array raid 5.
