Because it's only the 12400 that doesn't have E-cores. And even if it did, one big issue they still have is power draw, so the voltage regulator is a much bigger deal for Raptor Lake.




Raptor Lake Leaks + Intel 4 developments

- Thread starter g67575


It's possible the RPL i5 non-K SKUs may get 4 E-cores, since the 13600K is getting 8 next time around, as far as I'm aware.

I'm pretty sure it's the other way around: only the 12600K/12600KF i5 has E-cores (and it's near enough i7 prices anyway). The regular 12600, 12500 and 12400 don't. I'd somehow got the impression that all Raptor Lake i5s would have E-cores, but maybe I'm wrong and it'll just be the 13600K again... which is why everyone is talking about the regulator and the cache.

It is, yep: of the i5 CPUs, only the 12600K has E-cores. The only 65 W CPUs with E-cores are the 12900 and 12700, but their base clocks are way under the 12600K's (2.4 and 2.1 GHz vs 3.7 GHz).

The 12400 also has a slower iGPU than all the other i5/i7 CPUs, and for some reason Intel doesn't give the lowest i5 vPro, which is an advantage, tbh.

These 65 W CPUs can go way above that TDP on a good board that lets them run at PL2 indefinitely, and then their clocks aren't far behind their unlocked counterparts. The base clocks only apply under PL1, so you can realistically expect them to clock much higher under load unless your motherboard can't sustain it.
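As a rough illustration of why the base clock only matters once PL1 kicks in, here is a simplified model of the power limits: the chip may draw up to PL2 while a moving average of package power stays below PL1, after which it is clamped to PL1. The exponentially weighted average, the `tau_s` time constant and every wattage below are assumptions for the sketch, not Intel's exact algorithm or any board's defaults. A board that "runs PL2 indefinitely" is modelled by setting PL1 equal to PL2.

```python
from typing import List


def simulate_package_power(
    demand_w: float,
    pl1_w: float,
    pl2_w: float,
    tau_s: float,
    duration_s: float,
    dt_s: float = 0.1,
) -> List[float]:
    """Simplified PL1/PL2 model (an assumption, not Intel's exact algorithm).

    Returns the package power drawn at each time step of a constant
    all-core load that *wants* `demand_w` watts.
    """
    avg_w = 0.0  # moving average of package power; starts from idle (assumption)
    trace = []
    for _ in range(round(duration_s / dt_s)):
        # Boost up to PL2 while the average is below PL1,
        # otherwise clamp to the sustained limit PL1.
        limit_w = pl2_w if avg_w < pl1_w else pl1_w
        power_w = min(demand_w, limit_w)
        # Exponentially weighted moving average with time constant tau_s.
        avg_w += (power_w - avg_w) * dt_s / tau_s
        trace.append(power_w)
    return trace


if __name__ == "__main__":
    # Hypothetical numbers: a 65 W-TDP chip running a load that wants 150 W.
    stock = simulate_package_power(150, pl1_w=65, pl2_w=180, tau_s=28, duration_s=120)
    unlocked = simulate_package_power(150, pl1_w=180, pl2_w=180, tau_s=28, duration_s=120)
    print(f"stock limits: starts at {stock[0]} W, ends at {stock[-1]} W")
    print(f"PL2 forever:  starts at {unlocked[0]} W, ends at {unlocked[-1]} W")
```

With these made-up numbers the stock trace falls from 150 W to 65 W after roughly 16 seconds, while the PL1 = PL2 case holds 150 W throughout.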

Haven't tested other workloads yet, but my 12700KF consumes around 50 W stock in Elden Ring while boosting to around 4.7 GHz, so Alder Lake is quite efficient in gaming. It can eat way more than that when you push it, but I'm more interested in undervolting, TBH.


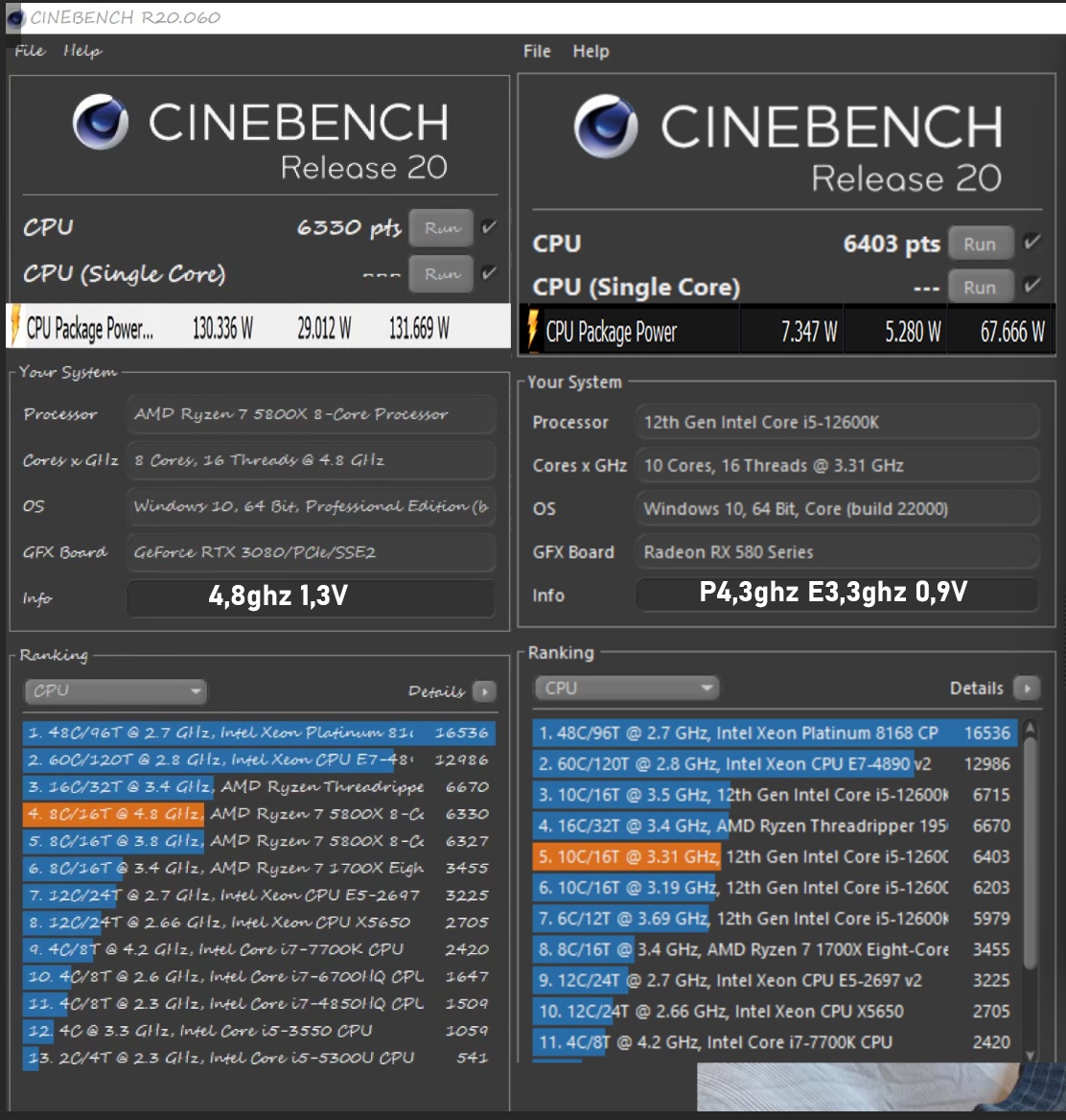

In games my 5800X uses anything from 30 to 60 watts depending on the game, and boosts to 5 GHz on all cores.

But it also uses 110 to 120 watts in any heavy workload at 4.7 GHz. How much power does a 12700KF use in HandBrake at 4.7 GHz all-core?

Any CPU is efficient when it's in a light workload like gaming.


I have no idea as I haven't checked, but I don't think it'll even boost to 4.7 GHz on all cores at stock. It's still about 30% faster in HandBrake than the 5800X according to benchmarks, so it's kind of hard to gauge. I doubt it's going to pull 200 W, though.

I think it's also faster in gaming than a 5800X boosting to 5 GHz while consuming similar power; that was the point. Obviously it's not going to consume 50 W when hammered at 100% on all cores.

I just game and make music; the latter is where CPUs get hammered.

It's annoying that most reviews just show max load in Prime or something. I don't care about power draw in benchmarks.

Yeah, Alder Lake is way more efficient in gaming than Zen 3. Lots of tests have been done (der8auer / Igor's Lab). Zen 3 has up to 50% lower efficiency in gaming.
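If efficiency is taken as frames per watt (the definition a later post in the thread uses), "up to 50% lower efficiency" and "twice the power for the same frame rate" are the same claim. A short worked check, where P is average CPU power in a given game at a fixed frame rate:

```latex
\eta = \frac{\mathrm{FPS}}{P}
\qquad\Rightarrow\qquad
\frac{\eta_{\text{Zen 3}}}{\eta_{\text{Alder Lake}}}
  = \frac{\mathrm{FPS}/P_{\text{Zen 3}}}{\mathrm{FPS}/P_{\text{Alder Lake}}}
  = \frac{P_{\text{Alder Lake}}}{P_{\text{Zen 3}}}
  = \frac{1}{2}
\quad\text{if } P_{\text{Zen 3}} = 2\,P_{\text{Alder Lake}}
```

So the claim is about the power ratio at equal frame rates, not any particular wattage: 40 W on one chip against 20 W on the other at the same FPS would already be "half the efficiency" (illustrative numbers only).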

It gets more clicks that way, especially in an era of a particular brand's fanboys not caring about the actual facts. Check Igor's Lab reviews; he does a pretty nice job and shows consumption and efficiency across all the applications he runs (games / Cinebench / Blender / AutoCAD). Turns out Alder Lake is insanely efficient, way more efficient than Zen 3 in like 99% of tasks.

I already look at his GPU reviews, because he shows average load and the spikes better than anyone else I've seen, but I never looked at CPUs.


For that to be true I would have to be seeing 75 watts in game. It's never anywhere near that high; my average is about 40 watts.

I think you don't understand what efficiency means. You could be seeing 1 watt and still be inefficient. Efficiency = frames / watt. If you are getting 100 fps at 75 watts while Alder Lake gets 130 fps at 80 watts, Alder Lake is more efficient. I don't really understand why you are arguing; there are actual reviews out there that show this (Igor's Lab, for example). There are games where Zen 3 consumes TWICE as much as the 12900K in gaming.
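A minimal sketch of the frames-per-watt definition from the post, using its hypothetical 100 fps / 75 W and 130 fps / 80 W figures (these are the post's example numbers, not measurements; `fps_per_watt` is a hypothetical helper name):

```python
def fps_per_watt(fps: float, watts: float) -> float:
    """Gaming efficiency as defined in the post: frames per second per watt."""
    if watts <= 0:
        raise ValueError("power draw must be positive")
    return fps / watts


# Example numbers from the post (hypothetical, not measurements).
zen3 = fps_per_watt(100, 75)        # 100 / 75 = 1.333... fps/W
alder_lake = fps_per_watt(130, 80)  # 130 / 80 = 1.625 fps/W

print(f"Zen 3:      {zen3:.3f} fps/W")
print(f"Alder Lake: {alder_lake:.3f} fps/W")
print(f"Alder Lake is {alder_lake / zen3 - 1:.0%} more efficient in this example")
```

Even though the Alder Lake chip draws more watts here, it delivers more frames per watt, which is the distinction the post is drawing.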


Which is exactly why using gaming as a measure of power efficiency is nonsense. I could run a Ryzen 3100 at 20 watts against a 5800X at 30 watts in exactly the same game, getting exactly the same frame rates, and on that basis call the Ryzen 3100 50% more efficient than the 5800X; but in another game the 5800X gets 2X the frame rates at 40 watts vs 35 watts for the Ryzen 3100.

It's why no one but Intel fanatics uses gaming as a measure of power efficiency: you can't win the power-efficiency argument by any tried and tested standard, so you contrive your own manipulated standards and then go round and round in circles trying to justify them.
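To make the objection concrete, the sketch below plugs in the post's two hypothetical games. The post gives no absolute frame rates, so `base_fps` is an arbitrary placeholder (an assumption); it cancels out of every ratio, so only the power figures and the "same" / "2X" frame-rate relationships matter.

```python
def relative_efficiency(fps_a: float, watts_a: float, fps_b: float, watts_b: float) -> float:
    """How many times more frames per watt chip A gets than chip B."""
    return (fps_a / watts_a) / (fps_b / watts_b)


base_fps = 100.0  # placeholder frame rate (assumption); cancels out of each ratio

# Game 1: same frame rate, Ryzen 3100 at 20 W vs 5800X at 30 W.
game1 = relative_efficiency(base_fps, 20, base_fps, 30)      # 3100 relative to 5800X

# Game 2: 5800X gets 2X the frames at 40 W vs the Ryzen 3100 at 35 W.
game2 = relative_efficiency(2 * base_fps, 40, base_fps, 35)  # 5800X relative to 3100

print(f"Game 1: Ryzen 3100 is {game1 - 1:.0%} more efficient than the 5800X")
print(f"Game 2: 5800X is {game2 - 1:.0%} more efficient than the Ryzen 3100")
```

The same pair of chips swaps places depending on the title, which is the post's argument against judging efficiency from a single game.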


Depends if you just game, though.


A 5950X uses slightly less power during gaming than my 5800X but overall also gets slightly higher frame rates, so is the 5950X more efficient than a 5800X? No, they are the same CPU cores set up slightly differently. My 5800X has 2 CCDs in it: it was intended to be a 5950X, but one of the CCDs is either dead or has been fused off because there was higher demand for 5800Xs than 5950Xs at the time.

If you just game there is little difference between any CPU. If his 12700F uses 5 watts less in the same game and gets 10 FPS more, so ####'ing what? A 5950X might use the same power and get 5 more FPS; again, so ####'ing what? Do I buy the 5950X instead? NO!

Now encode a video for YouTube with these CPUs.

I've no idea what the heck you are saying. Nobody is a fanatic here, unless you are saying Igor's Lab (the guy doing the reviews) is an Intel fanatic, I guess. I care about efficiency during gaming, since I use my PC to game; that's why I bought an Alder Lake. A 5950X consumes TWICE as much for the same performance. That's a huge difference, so I have no idea wtf you are talking about.


If you just game there is little difference between any CPU,

That's not true: Zen 3 consumes up to twice as many watts for the same performance. That's not a "little" difference, that's a ********.

Just one example from Igor's Lab: https://www.igorslab.de/wp-content/uploads/2021/11/Far-Cry-6-AvCPUWattFPS_DE-720p.png

I've no idea what the heck you are saying. Nobody is a fanatic here, unless you are saying Igor's Lab (the guy doing the reviews) is an Intel fanatic, I guess. I care about efficiency during gaming, since I use my PC to game; that's why I bought an Alder Lake. A 5950X consumes TWICE as much for the same performance. That's a huge difference, so I have no idea wtf you are talking about.

Really? You say that, but in the next post...

That's not true: Zen 3 consumes up to twice as many watts for the same performance. That's not a "little" difference, that's a ********.

Just one example from Igor's Lab: https://www.igorslab.de/wp-content/uploads/2021/11/Far-Cry-6-AvCPUWattFPS_DE-720p.png

You put up a slide on power consumption in games using what is widely known, even by Igor's Lab, to be the worst case there is for AMD in absolute frame-rate comparisons.

Igor's Lab is a self-proclaimed expert on anything and everything he touches, and yet he fails to understand the most rudimentary basics of efficiency testing. But he doesn't, does he? No, this is quite deliberate. It's the equivalent of sticking one vendor's GPU in a sealed sweatbox, comparing it to another vendor's GPU on an open-air test bench, and then crying "see, look at my expert results".

Are you really that daft, or do you think I am?