


Raptor Lake Leaks + Intel 4 developments

- Thread starter g67575



A 5950X uses slightly less power during gaming than my 5800X but overall also gets slightly higher frame rates, so is the 5950X more efficient than a 5800X? No, they are the same CPU cores, set up slightly differently. My 5800X has 2 CCDs in it; it was intended to be a 5950X, but one of the CCDs is either dead or has been fused off because there was higher demand for 5800Xs than 5950Xs at the time.

If you just game there is little difference between any CPU. If his 12700F uses 5 watts less in the same game and gets 10 FPS more, so ####'ing what? A 5950X might use the same power and get 5 more FPS, again so ####'ing what? Do I buy the 5950X instead? NO!

Now encode a video for YouTube with these CPUs.

As I've told you, I make music and game, couldn't give a flying **** about encoding and that's why I haven't checked it.

And even if I did, the 12700k is quite a bit faster in practically all workloads while drawing somewhat more power, so will you sit there and measure efficiency?

If I only gamed, I'd have gotten a 12400 or 12600k, but music is one of the heaviest workloads I can think of: real life, not some stupid benchmark.

All I wanted to say is that Alder Lake doesn't draw a lot when gaming and performs better than Ryzen, which is a fact, because some people thought the chips are just hot and power hungry by default. In fact, they run cooler than the higher-end Ryzens under load in most cases, and the power draw in heavy use is pretty reasonable given that the 12700k can trade blows with the 5950X. Yes, it'll draw 30-40W more than your 5800X at stock in the heaviest workloads, but it'll be a good deal faster, like 3 minutes less in HandBrake, so what do you really want to prove with encoding videos for YouTube? You'd have a point if they delivered similar performance in the workloads you tout as indicative, but they don't; the 12700k is just faster in most cases.

The 5800X is a great CPU and uses less power in the heaviest workloads; I never said otherwise. But it also loses to the 12700k by a noticeable margin in the stuff you talk about, so it's harder to talk about efficiency in absolute terms.
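The trade-off argued here (a faster chip drawing more power but finishing sooner) comes down to energy per job: average power multiplied by run time. A minimal sketch, assuming hypothetical wattages and run times; these are placeholders, not measured figures for either CPU.

```python
def energy_wh(avg_power_w: float, minutes: float) -> float:
    """Energy for one job in watt-hours: average package power x run time."""
    return avg_power_w * minutes / 60.0

# HYPOTHETICAL numbers for illustration only (not benchmark results):
# CPU A draws 35 W more but finishes the same encode 3 minutes sooner.
cpu_a = energy_wh(avg_power_w=175.0, minutes=12.0)
cpu_b = energy_wh(avg_power_w=140.0, minutes=15.0)

print(f"CPU A: {cpu_a:.1f} Wh, CPU B: {cpu_b:.1f} Wh")
```

With these made-up inputs both jobs cost the same energy despite the 35W gap; whether the faster chip comes out ahead in practice depends on the real measured power and completion time.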

Associate

- Joined

- 29 Oct 2019

- Posts

- 1,003

Even if that is true, your argument is fatally flawed. First of all, "up to twice more watts" is for the 5950x, and who the heck buys a 5950x solely for gaming? People with that CPU are going to have tasks with 100% CPU utilization for extended periods of time, for which Zen 3 is massively more efficient than Alder Lake. If you look at the overall efficiency for all tasks at stock settings, there's no doubt Zen 3 would be miles ahead if you use the 5950x for your comparison.

That's not true, zen 3 consumes up to twice more watts for the same performance. That's not "little" difference, that's a ********.

Just an example from igorslab https://www.igorslab.de/wp-content/uploads/2021/11/Far-Cry-6-AvCPUWattFPS_DE-720p.png

Secondly, nobody is forced to run at stock settings if they care about power consumption. Since I'm limited by my monitor's 144Hz refresh rate, I run it at 3.325GHz for gaming by setting the maximum processor state to 99% with a batch file. At that clock speed Zen 3 is ridiculously efficient and likely consumes less power than Alder Lake.
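The batch file itself isn't posted, so the exact commands are an assumption. A sketch in Python that builds the Windows `powercfg` calls such a batch file would plausibly contain: `SUB_PROCESSOR` and `PROCTHROTTLEMAX` are powercfg's aliases for the processor power-management subgroup and its "Maximum processor state" setting. On many systems a value below 100% stops the CPU from boosting. The helper name `max_processor_state_commands` is hypothetical.

```python
import subprocess
import sys

def max_processor_state_commands(percent: int) -> list[list[str]]:
    """Hypothetical helper: powercfg calls setting 'Maximum processor state' on the active plan."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    value = str(percent)
    return [
        # AC (plugged in) and DC (battery) values for the current power scheme
        ["powercfg", "/setacvalueindex", "SCHEME_CURRENT", "SUB_PROCESSOR", "PROCTHROTTLEMAX", value],
        ["powercfg", "/setdcvalueindex", "SCHEME_CURRENT", "SUB_PROCESSOR", "PROCTHROTTLEMAX", value],
        # Re-apply the current scheme so the change takes effect immediately
        ["powercfg", "/setactive", "SCHEME_CURRENT"],
    ]

if __name__ == "__main__":
    cmds = max_processor_state_commands(99)
    if sys.platform == "win32":
        for cmd in cmds:
            subprocess.run(cmd, check=True)
    else:
        # Not on Windows: just show what would be run
        for cmd in cmds:
            print(" ".join(cmd))
```

Calling it with 100 instead of 99 would restore the default boost behaviour under the same assumptions.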

But if there wasn't content out there like Igor's, a lot of less-informed people would just assume that the Prime95 power draw numbers and temps translate to gaming; you only have to read through the 12900k comment sections on YouTube / Reddit to see as much.

A 5950X uses slightly less power during gaming than my 5800X but overall also gets slightly higher frame rates, so is the 5950X more efficient than a 5800X? No, they are the same CPU cores, set up slightly differently. My 5800X has 2 CCDs in it; it was intended to be a 5950X, but one of the CCDs is either dead or has been fused off because there was higher demand for 5800Xs than 5950Xs at the time.

If you just game there is little difference between any CPU. If his 12700F uses 5 watts less in the same game and gets 10 FPS more, so ####'ing what? A 5950X might use the same power and get 5 more FPS, again so ####'ing what? Do I buy the 5950X instead? NO!

Now encode a video for YouTube with these CPUs.

I'd imagine they sell a lot of 5950Xs to people who just game; I'm sure the 3090 / 6900XT crowd wouldn't bat an eyelid at the pricing and just want the top parts.

Even if that is true, your argument is fatally flawed. First of all, "up to twice more watts" is for the 5950x, and who the heck buys a 5950x solely for gaming?

I'm confused. Are you saying that Far Cry 6 is the worst case for AMD? Because the numbers are pretty much the same in every game tested. Here is the average across multiple games.

Really? You say that, but in the next post....

Put up a slide on power consumption in games using what is widely known, even by Igor's Lab, to be the worst case there is for AMD in absolute frame rate comparisons.

Igor's Lab is a (self-proclaimed) expert on anything and everything he touches, and yet he fails to understand the most rudimentary basics of efficiency testing. But he doesn't, does he? No, this is quite deliberate. This is the equivalent of sticking one vendor's GPU in a sealed sweatbox, then comparing it to another vendor's GPU on an open-air test bench and crying "see, look at my expert results".

Are you really that daft, or do you think I am?

https://www.igorslab.de/wp-content/uploads/2021/11/04-Efficiency.png

Well, the thing is, Alder Lake is actually more efficient at most workloads other than gaming as well. So your argument is just WRONG. Igor's Lab tested a bunch of productivity apps. Phoronix also tested about 150 productivity tasks; on average the 12900k is both faster and more power efficient. So, nope, you are actually just wrong.

Even if that is true, your argument is fatally flawed. First of all, "up to twice more watts" is for the 5950x, and who the heck buys a 5950x solely for gaming? People with that CPU are going to have tasks with 100% CPU utilization for extended periods of time, for which Zen 3 is massively more efficient than Alder Lake. If you look at the overall efficiency for all tasks at stock settings, there's no doubt Zen 3 would be miles ahead if you use the 5950x for your comparison.

Secondly, nobody is forced to run at stock settings if they care about power consumption. Since I'm limited by my monitor's 144Hz refresh rate, I run it at 3.325GHz for gaming by setting the maximum processor state to 99% with a batch file. At that clock speed Zen 3 is ridiculously efficient and likely consumes less power than Alder Lake.

You can power limit both CPUs; that's not really an argument. You do realize that Alder Lake also gets more power efficient when you decrease the clocks, right?

Here you go, AutoCAD power efficiency. The 12900k smashes the crap out of the 5950x:

https://www.igorslab.de/wp-content/uploads/2021/11/82-Power-Efficiency-Mixed.png
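Claims like "on average across ~150 tests" depend on how the per-test results are combined. Benchmark suites such as Phoronix's typically summarize with a geometric mean, which suits ratios. A minimal sketch, assuming a list of per-task perf-per-watt ratios; the ratios below are made up and are not results from any review.

```python
import math

def geometric_mean(values: list[float]) -> float:
    """Geometric mean; appropriate for averaging ratios such as perf-per-watt A / B."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("values must be non-empty and positive")
    return math.exp(sum(math.log(v) for v in values) / len(values))

# HYPOTHETICAL per-task efficiency ratios (CPU A perf/W divided by CPU B perf/W).
# >1 means CPU A is more efficient on that task; these are NOT real results.
ratios = [1.25, 0.80, 1.10, 0.95, 2.00, 0.50]

print(f"geometric mean: {geometric_mean(ratios):.3f}")
print(f"arithmetic mean: {sum(ratios) / len(ratios):.3f}")
```

With these inputs the arithmetic mean is 1.100 while the geometric mean is about 1.007: a 2x win and a 2x loss cancel out under the geometric mean, whereas the arithmetic mean lets one extreme task pull the "average" in its favour.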


Associate

- Joined

- 4 Oct 2017

- Posts

- 1,320

who the heck buys a 5950x solely for gaming?

Guilty as charged. I thought I was future-proofing myself, but I'll be changing my CPU long before games need that many cores lol

Associate

- Joined

- 29 Oct 2019

- Posts

- 1,003

At stock, for fully loaded workloads, which is the most likely use case for such a CPU, the 12900k consumes 244W compared to 120W for the 5950x (source). That's more than twice the power consumption for a single-digit performance improvement. Cherry-picking a few benchmarks that don't even fully utilize them is a very poor argument.

Well, the thing is, Alder Lake is actually more efficient at most workloads other than gaming as well. So your argument is just WRONG. Igor's Lab tested a bunch of productivity apps. Phoronix also tested about 150 productivity tasks; on average the 12900k is both faster and more power efficient. So, nope, you are actually just wrong.

You can power limit both CPUs; that's not really an argument. You do realize that Alder Lake also gets more power efficient when you decrease the clocks, right?

Here you go, AutoCAD power efficiency. The 12900k smashes the crap out of the 5950x:

https://www.igorslab.de/wp-content/uploads/2021/11/82-Power-Efficiency-Mixed.png

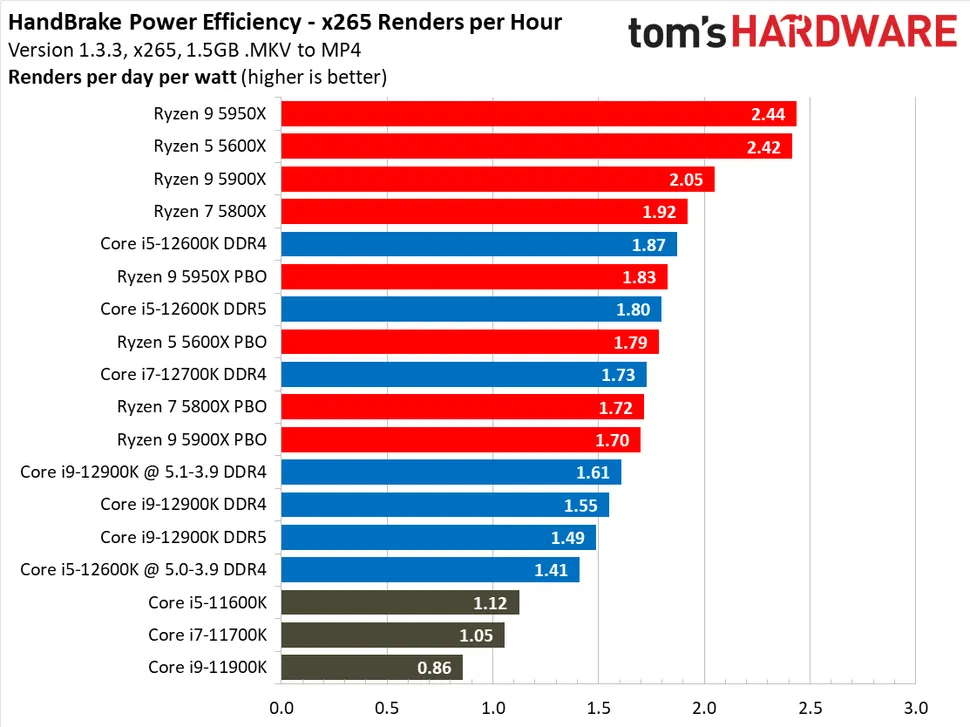

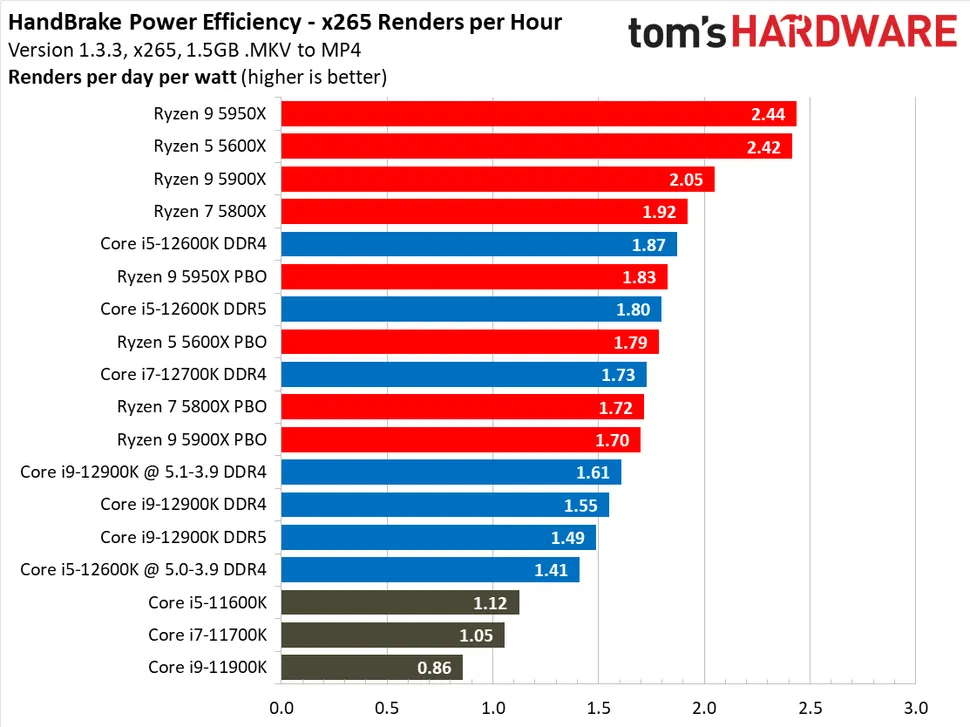

One of the things I used my 5950x for that had full utilization was encoding a large media library with x265 (which took months). If we look at the power efficiency of that at stock settings, according to Tom's Hardware the 5950x is 63.7% more efficient than the 12900k.
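The "more than twice the power for a single-digit gain" argument is a performance-per-watt calculation. A small sketch using the 244W and 120W stock figures quoted above; the 9% performance lead is an assumed stand-in for "single digit", not a number from the cited source.

```python
def perf_per_watt(relative_perf: float, power_w: float) -> float:
    """Relative performance delivered per watt of package power."""
    return relative_perf / power_w

# Stock all-core package power figures quoted in the post above.
power_12900k_w = 244.0
power_5950x_w = 120.0
# ASSUMPTION: the 12900k is 9% faster (placeholder for "single digit"); 5950x = 1.0 baseline.
perf_12900k = 1.09
perf_5950x = 1.00

# How many times more work per watt the 5950x does under these assumptions
ratio = perf_per_watt(perf_5950x, power_5950x_w) / perf_per_watt(perf_12900k, power_12900k_w)
print(f"power ratio: {power_12900k_w / power_5950x_w:.2f}x")
print(f"5950x perf/W advantage: {(ratio - 1) * 100:.1f}%")
```

Under that assumption the 5950x comes out roughly 86% ahead on perf/W. In the same formula, a "63.7% more efficient" result corresponds to a ratio of 1.637, so the figure depends heavily on which workload's power and performance numbers are plugged in.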


Well, the thing is, Alder Lake is actually more efficient at most workloads other than gaming as well. So your argument is just WRONG. Igor's Lab tested a bunch of productivity apps. Phoronix also tested about 150 productivity tasks; on average the 12900k is both faster and more power efficient. So, nope, you are actually just wrong.

You can power limit both CPUs; that's not really an argument. You do realize that Alder Lake also gets more power efficient when you decrease the clocks, right?

Here you go, AutoCAD power efficiency. The 12900k smashes the crap out of the 5950x:

https://www.igorslab.de/wp-content/uploads/2021/11/82-Power-Efficiency-Mixed.png

Bencher, that AutoCAD graph you linked: the title says lower is better, so (if that's not a mistake by the review writer) it seems to show the opposite of what you claim.

That's not true, zen 3 consumes up to twice more watts for the same performance. That's not "little" difference, that's a ********.

Just an example from igorslab https://www.igorslab.de/wp-content/uploads/2021/11/Far-Cry-6-AvCPUWattFPS_DE-720p.png

"Just an example"

A cherry-picked graph which happens to show Intel in the best light. What about the other titles he tests?

Is that why you just link a single graph picture and not the article, so that people cannot see the data in full and in context?

To save you some time, here you go

https://www.igorslab.de/intel-core-...e-gaming-cpu-fuer-die-breite-masse-teil-1/11/

Scroll down to the average watts per frame across ALL game tests. It's swings and roundabouts, bar the 12400, whose clocks are more conservative for Alder Lake.
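"Watts per frame" is average CPU power divided by average frame rate, which works out to energy per frame (W / (frames/s) = J/frame, lower is better). A minimal sketch; the per-game readings are hypothetical, and a plain mean of the per-game values is just one reasonable way to summarize them.

```python
from statistics import mean

def joules_per_frame(avg_power_w: float, avg_fps: float) -> float:
    """W / (frames/s) = J/frame: CPU energy spent per rendered frame (lower is better)."""
    return avg_power_w / avg_fps

# HYPOTHETICAL (avg CPU watts, avg FPS) per game for one CPU; not measured data.
games = {
    "game_1": (80.0, 160.0),
    "game_2": (95.0, 190.0),
    "game_3": (70.0, 100.0),
}

per_game = {name: joules_per_frame(w, fps) for name, (w, fps) in games.items()}
print(per_game)
print(f"mean across games: {mean(per_game.values()):.3f} J/frame")
```

A single title can sit well above or below the cross-game mean, which is why one chart on its own says little about the overall picture.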


It's a translation mistake, since it's a German site. So yes, it does show what I'm claiming.

Bencher, that AutoCAD graph you linked: the title says lower is better, so (if that's not a mistake by the review writer) it seems to show the opposite of what you claim.

Uhm, I also posted the average across all games; the 12900k smashes the 5950x in both efficiency and performance. So what cherry-picks are you talking about?

"Just an example"

A cherry-picked graph which happens to show Intel in the best light. What about the other titles he tests?

Is that why you just link a single graph picture and not the article, so that people cannot see the data in full and in context?

To save you some time, here you go

https://www.igorslab.de/intel-core-...e-gaming-cpu-fuer-die-breite-masse-teil-1/11/

Scroll down to the average watts per frame across ALL game tests. It's swings and roundabouts, bar the 12400, whose clocks are more conservative for Alder Lake.

But if there wasn't content out there like Igor's, a lot of less-informed people would just assume that the Prime95 power draw numbers and temps translate to gaming; you only have to read through the 12900k comment sections on YouTube / Reddit to see as much.

I'd imagine they sell a lot of 5950Xs to people who just game; I'm sure the 3090 / 6900XT crowd wouldn't bat an eyelid at the pricing and just want the top parts.

I agree, I see this a lot: "Omg the 12900K draws 300/400 watts, go AMD". With CPUs having a gazillion cores and silly turbos, power draw charts really need to evolve to be more informative. At least Hardware Unboxed shows a game (Cyberpunk) in their reviews, but it could use more context.

And one of the things I used my 12900k for was AutoCAD, for 9 months straight, and if we look at those numbers it's 100% more efficient than the 5950x.

At stock, for fully loaded workloads, which is the most likely use case for such a CPU, the 12900k consumes 244W compared to 120W for the 5950x (source). That's more than twice the power consumption for a single-digit performance improvement. Cherry-picking a few benchmarks that don't even fully utilize them is a very poor argument.

One of the things I used my 5950x for that had full utilization was encoding a large media library with x265 (which took months). If we look at the power efficiency of that at stock settings, according to Tom's Hardware the 5950x is 63.7% more efficient than the 12900k.

The point is, individual scenarios don't matter. In the vast, vast, vast majority of tasks Alder Lake is more efficient. Phoronix ran 150 benchmarks and the 12900k was top dog in both performance and efficiency. End of story; I really don't get why you keep arguing. It's 150 tasks, for ****'s sake.

Also, if you are running all-core workloads, you shouldn't be running with 240W power limits, so your point is kinda moot even for x265. Who the heck runs encodes for a month with a 240W power limit? Lol

Hardware Unboxed's numbers are just silly. They show us the system power consumption, but they fail to mention that the 12900k outputs more frames, which means the GPU consumes more because it renders more frames. In actual efficiency the 12900k slaps the 5950x in the mouth in Cyberpunk.

I agree, I see this a lot: "Omg the 12900K draws 300/400 watts, go AMD". With CPUs having a gazillion cores and silly turbos, power draw charts really need to evolve to be more informative. At least Hardware Unboxed shows a game (Cyberpunk) in their reviews, but it could use more context.
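The objection to whole-system power charts is that a faster CPU lets the GPU render more frames, so wall power can rise even when the CPU itself is frugal. Normalizing by frame rate, and separating CPU package power from system power where both are available, addresses that. A sketch with hypothetical readings; the `GameRun` type is an illustrative assumption, not any reviewer's methodology.

```python
from dataclasses import dataclass

@dataclass
class GameRun:
    system_power_w: float  # whole-system (wall) power
    cpu_power_w: float     # CPU package power, e.g. from a software sensor
    avg_fps: float

    def system_j_per_frame(self) -> float:
        return self.system_power_w / self.avg_fps

    def cpu_j_per_frame(self) -> float:
        return self.cpu_power_w / self.avg_fps

# HYPOTHETICAL runs of the same game scene on two CPUs with the same GPU.
cpu_a = GameRun(system_power_w=480.0, cpu_power_w=90.0, avg_fps=120.0)
cpu_b = GameRun(system_power_w=450.0, cpu_power_w=100.0, avg_fps=100.0)

for name, run in (("CPU A", cpu_a), ("CPU B", cpu_b)):
    print(f"{name}: system {run.system_power_w:.0f} W, "
          f"{run.system_j_per_frame():.2f} J/frame system, "
          f"{run.cpu_j_per_frame():.2f} J/frame CPU")
```

In this made-up case CPU A's system draws more total power yet spends less energy per frame, both system-wide and at the CPU, which a raw wattage chart would hide.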

Associate

- Joined

- 29 Oct 2019

- Posts

- 1,003

AutoCAD is single-thread limited and therefore an irrelevant benchmark for comparing 16-core CPUs. Also, the 12900k was released 5 months ago, so it's quite the achievement to use it for 9 months straight.

And one of the things I used my 12900k for was AutoCAD, for 9 months straight, and if we look at those numbers it's 100% more efficient than the 5950x.

The point is, individual scenarios don't matter. In the vast, vast, vast majority of tasks Alder Lake is more efficient. Phoronix ran 150 benchmarks and the 12900k was top dog in both performance and efficiency. End of story; I really don't get why you keep arguing. It's 150 tasks, for ****'s sake.


240W is the power draw at stock settings, so I imagine quite a lot of people would be running it with those limits.

Also, if you are running all-core workloads, you shouldn't be running with 240W power limits, so your point is kinda moot even for x265. Who the heck runs encodes for a month with a 240W power limit? Lol
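Whether a single-thread-limited workload says anything about 16-core parts can be framed with Amdahl's law: the ideal speedup on n cores is 1 / ((1 - p) + p / n), where p is the parallelizable fraction of the work. A sketch with illustrative values of p; the actual parallel fraction of AutoCAD isn't given in the thread, and the model ignores clock speed differences.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: ideal speedup on `cores` cores for a given parallel fraction."""
    if not 0.0 <= parallel_fraction <= 1.0 or cores < 1:
        raise ValueError("parallel_fraction must be in [0, 1] and cores >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)

# Illustrative parallel fractions, from mostly serial to highly parallel.
for p in (0.10, 0.50, 0.95):
    print(f"p={p:.2f}: 8 cores -> {amdahl_speedup(p, 8):.2f}x, "
          f"16 cores -> {amdahl_speedup(p, 16):.2f}x")
```

For a mostly serial task, going from 8 to 16 cores barely changes the result, so such a benchmark mainly reflects per-core speed; for highly parallel work the extra cores matter far more.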

AutoCAD is single-thread limited and therefore an irrelevant benchmark for comparing 16-core CPUs. Also, the 12900k was released 5 months ago, so it's quite the achievement to use it for 9 months straight.

240W is the power draw at stock settings, so I imagine quite a lot of people would be running it with those limits.

A productivity task is irrelevant? Really? LOL.

Who cares what a lot of people will do? The point is, if you want to run all-core workloads for HOURS, then you shouldn't have a 240W power limit on any CPU.

Instead of looking at the facts objectively, you are just trying to prove that your choice is better. Well, it's not anymore.

Also, if efficiency in all-core workloads is your thing, the 12900k gets 13k in Cinebench R23 (CBR23) at 35W. It doesn't get more efficient than that.
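The 13k at 35W claim translates into a points-per-watt figure. A small sketch: the 13,000 score and 35W limit are the figures quoted in the post, while the second entry is a hypothetical placeholder showing how a higher-power-limit data point would slot in, not a measured result.

```python
def points_per_watt(score: float, package_power_w: float) -> float:
    """Benchmark score divided by sustained package power."""
    return score / package_power_w

# Figures quoted in the post: ~13,000 pts in Cinebench R23 multi-core at a 35 W limit.
quoted = points_per_watt(13_000, 35.0)

# HYPOTHETICAL second data point: same chip at a 240 W limit with an assumed score.
hypothetical_high_limit = points_per_watt(26_000, 240.0)

print(f"35 W: {quoted:.0f} pts/W")
print(f"240 W (hypothetical score): {hypothetical_high_limit:.0f} pts/W")
```

This is the flip side of the 240W argument above: the same silicon is far more efficient at a low power limit, so any efficiency comparison needs to state the limit used.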

And one of the things I used my 12900k for was AutoCAD, for 9 months straight, and if we look at those numbers it's 100% more efficient than the 5950x.

Wow, you've been using a CPU since well before it was released? What fictional motherboard was it installed on 9 months ago?

You missed the point. I was trying to give an example to show that individual scenarios are meaningless. Yes, there are tasks where one CPU will be more efficient than the other, but focusing solely on that individual task to prove superiority in efficiency is dumb, because you can do the same with both CPUs, hence my AutoCAD example.

Wow, you've been using a CPU since well before it was released? What fictional motherboard was it installed on 9 months ago?

The fact of the matter is, as shown by Phoronix, across an average of 150 tasks the 12900k is both faster and more power efficient.