Turns out I was half asleep last time I watched this and didn't notice that Lisa has confirmed that RDNA 3 will be chiplet based. So we have two pieces of information.

AMD Reveals Next Generation RDNA 3 GPU (Watch the Demo)

AMD's CEO Lisa Su gives the first public demo of the new AMD Radeon RX GPU running the game Lies of P by Neowiz. www.youtube.com

A picture of the flagship card (it is THICC!) and a quick demo. Not much but it confirms that we are very close to a launch.

RDNA 3 rumours Q3/4 2022

- Thread starter: Grim5

- Status: Not open for further replies.

Hopefully it will compete so all the enthusiasts can pick up their Nvidia cards for cheaper.

I actually found myself wanting a PowerColor Liquid Devil or a Toxic card.

The best all-round Nvidia 3xxx card that I could get hold of was the Asus TUF. EVGA's malfunction was to AMD's benefit, I think, and AMD's AIBs really stepped up with the 6000 series.

If EVGA keep malfunctioning, it will hinder desirability of the Nvidia range in general for this coming gen.

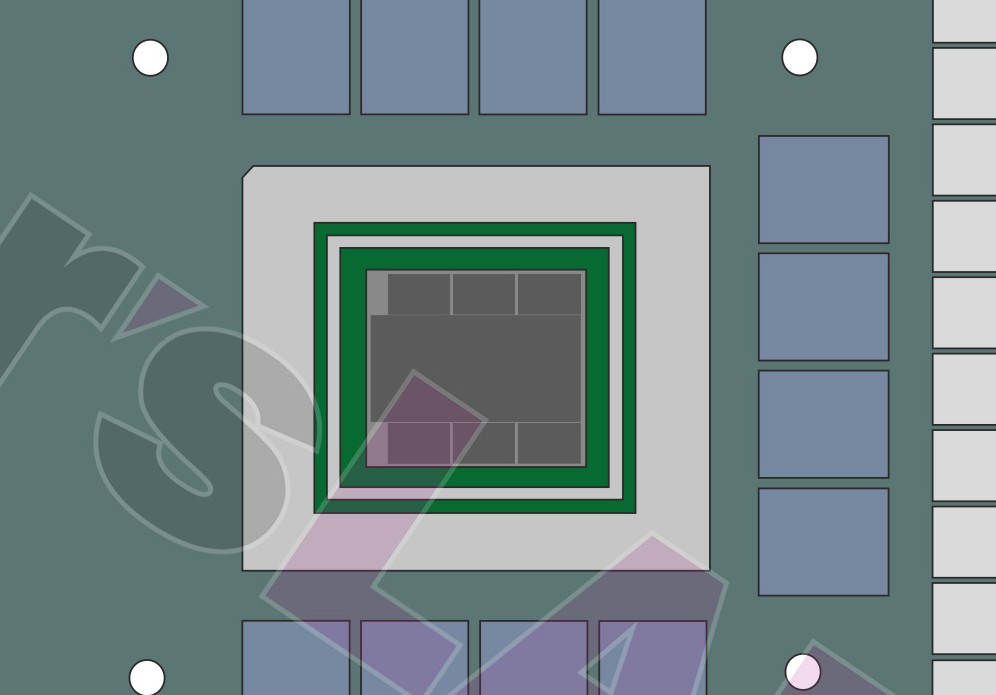

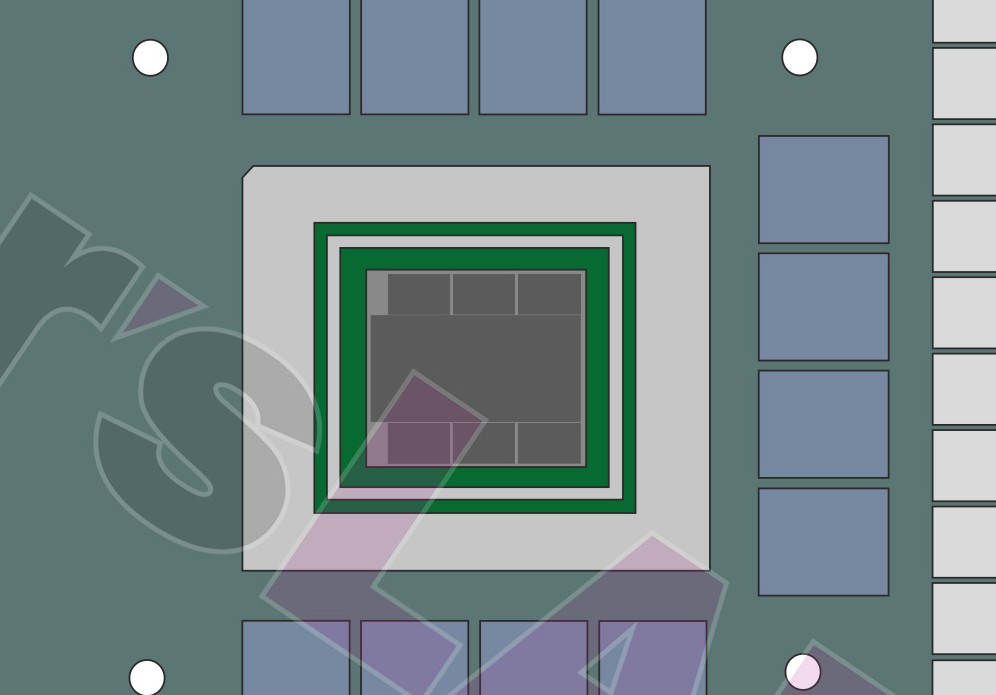

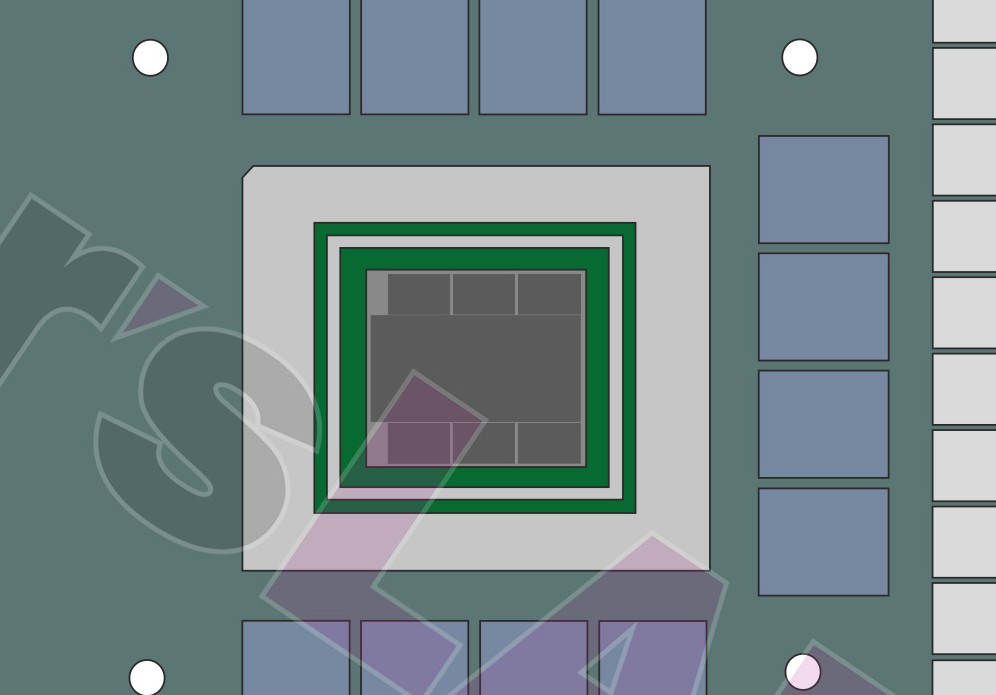

So Igor's Lab has sketched out the rumoured board for the 7900XT.

Key points:

- 450W max.

- 24GB of VRAM max. (Gunning for the creatives, I see; let's hope ROCm and HIP catch up.)

- 1 HDMI, 3 DP, no USB-C in sight

www.igorslab.de

AMD Radeon RX 7900XT - Possible board design and new findings | Exclusive | igor´sLAB

While the protagonists of the sensational leaks are currently fighting among themselves and wrestling for the ultimate interpretative sovereignty of NVIDIA and AMD products, I grabbed a bag of virtual…

"24GB of VRAM max. (Gunning for the creatives, I see; let's hope ROCm and HIP catch up.)"

Nah, I think the grunt may actually justify it this time around for gaming, just like the 4090.

"Can't wait for the 'is 24GB enough' thread."

4090 Ti Super+ 50GB

450W is monstrous. Won't be popular, but we need some kind of power draw limit or tax on cards this unnecessarily powerful.

However, this also limits the maximum board power of the card to 450 watts, whereby the actual TBP should be far below that.

It's not that it's a "450 Watt GPU"; the board is limited to 450 watts, so even with overclocking the GPU + VRAM can only pull up to 450 watts. At stock it's likely to be a chunk below that.

With a 50% performance per watt improvement and the 6900XT pulling 300 watts board power, my guess is it's between 350 and 400 watts. 400 watts would make it 2X as fast as the 6900XT.

The 3090 is 350 watts, sometimes higher.
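For anyone who wants to play with the numbers, here is a rough sketch of that estimate. It assumes performance scales linearly with board power at a fixed performance per watt, which is a simplification (real cards lose efficiency as they are pushed harder), and the function name is just a placeholder.

```python
def relative_performance(perf_per_watt_gain, old_power_w, new_power_w):
    """Estimate performance relative to the old card (1.0 = same speed).

    Assumes performance scales linearly with board power, which is a
    simplification made for illustration only.
    """
    return (1.0 + perf_per_watt_gain) * (new_power_w / old_power_w)


# Figures from the post: +50% performance per watt, 6900XT at 300 W board power.
for watts in (350, 400, 450):
    ratio = relative_performance(0.5, 300, watts)
    print(f"{watts} W -> {ratio:.2f}x a 6900XT")
```

Under that linear assumption, 400 watts works out to 2X a 6900XT, matching the guess above.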

My 6900XT uses less power than the 5700XT it replaced at the same settings (using HWiNFO64 to view power). I can up the settings and get higher FPS which ups the power but I game at 60Hz 1440p, so it generally uses far less as the 5700XT was working much closer to 100% to get the same results.

Yet 500 watt 3090 Ti cards are OK?

The market will sort it out when gamers start complaining about sweaty clothes whilst pumping all that heat into their gaming room.

The 6900XT was also "+50% performance per watt over 5700XT"

The 5700XT was 225 watts, so at 225 watts the 6900XT is 50% faster. At 300 watts the 6900XT should have been 85% faster, but AMD were sandbagging a bit: it's actually exactly 2X the performance of the 5700XT at exactly 300 watts, so it's actually more than +50% performance per watt, more like +65%.

AMD say the same thing about RDNA3 "+50% performance per watt"

They are just pulling those +50% numbers out of their arse, but in a good way.
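A quick way to sanity-check figures like these is to back out the implied performance-per-watt gain from a measured performance ratio and the two board powers. This is only a sketch with a made-up function name, and it treats performance per watt as a single ratio measured at each card's stock power.

```python
def implied_perf_per_watt_gain(perf_ratio, old_power_w, new_power_w):
    """Return the perf-per-watt gain implied by a performance ratio.

    perf_ratio is new-card performance divided by old-card performance,
    each measured at its own board power.
    """
    power_ratio = new_power_w / old_power_w
    return perf_ratio / power_ratio - 1.0


# Figures quoted in the thread: 5700XT at 225 W, 6900XT at 300 W and 2X the speed.
gain = implied_perf_per_watt_gain(2.0, 225, 300)
print(f"Implied gain: {gain:+.0%}")
```

Taken at face value, 2X at 300 watts against 225 watts gives +50% by this simple ratio; a gain closer to +65% would need a performance ratio of about 2.2X.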

Yes, the 6900XT seems good on power until games max it out to get 60Hz. The 5700XT would use ~200W, and most games use 80-130W with the 6900XT. 3DMark hits 340W though.

Gaming average according to TPU is exactly 300 watts ^^^^, the 5700XT is almost exactly 225 watts. AMD's TDP ratings are bang on....

------------------

What I'm saying is the board on the 7900XT is limited to 450 watts because it doesn't need a lot of power to reach 2X the performance of the 6900XT.

It's probably under 400 watts, and AMD figure you don't need more than 70 watts or so to push the silicon to its limits in overclocking.

If I get a GPU this time (if I can justify it) it will be AMD, as long as they are competitive to the point that they are very close to Nvidia's performance for each tier (except for the 4090+ tier; I would never consider such an expensive card, so that tier is irrelevant to me). The much lower power consumption and heat production will be a huge bonus given what's going on at the moment.

I know Gamers Nexus did a video and praised the FE cooler, but my 3080 FE, with the CPU being cooled by a Noctua NH-D15S with two fans, really heats up my case (my NVMe SSD, X570 chipset etc.) and my room, much more than my GTX 1080 ever did. This is a case with good airflow too (Phanteks P600S).

Maybe Gamers Nexus should have tested with a bigger CPU air cooler, because the FE design doesn't work that well for me.

Most of these reviewers are hypocrites. I say that while at the same time liking Steve Burke, but they have an implicit bias due to the relationships they have formed with people who work at Intel and, to a lesser extent, Nvidia. It's not the same relationship they have with AMD, who keep themselves more distant because of the much more "impartial" way these people treated AMD when it was struggling to survive.

The sympathy and empathy Steve Burke extends to Intel in its current predicament is very obvious. They go to great lengths to gloss over everything that is wrong with ARC and treat what is incompetence on Intel's part as something that, again, should be empathised with.

When AMD was struggling to make GPUs and CPUs, its products had insufficient performance and high power consumption, plus driver problems, though nothing like as bad as what Intel is experiencing now. Did AMD get any sympathy? No. The way these people treated AMD in those days was frankly brutal, and they knew AMD was on the brink of bankruptcy. Did they care about the loss of competition then? Not even slightly.

Now all of that is turned on its head: power consumption, performance per watt, drivers... none of that matters now, and poor Intel, diddums.

I can't remember a video with more sympathy and empathy than the iconic Waste of Sand.

Milquetoast, and I'm talking about ARC.
