Hence the key word here:

Do you not find it a bit odd that the only thing we know about RDNA 3 ray tracing so far is AMD's comment that "it will be more advanced than rdna 2"? If they were going to significantly improve it, why not say something like "it will be better than ampere", the same way Intel have? Do you not agree that it's a poor choice of words and doesn't show they have much confidence in it?

Then go based on history:

- how long did AMD take to catch up on DX11?

- how long did they take to catch up on raster?

- how long did they take to catch up on OpenGL? And they still aren't there yet

Of course, they could come out and completely smash 40xx RT, and I hope they do, as right now, if you care about ray tracing, we only have the one choice.

Looking at AMD's older history tells us very little though, especially when it comes from a period when the company was circling the drain and had no compelling products.

Looking at their recent history paints a more telling picture: they've gone from being nowhere near competitive at the high end on raster to where they are now. They've also made huge gains across their software stack - FSR went from not existing 16 months ago to the latest 2.1 release, which is really closing the gap.

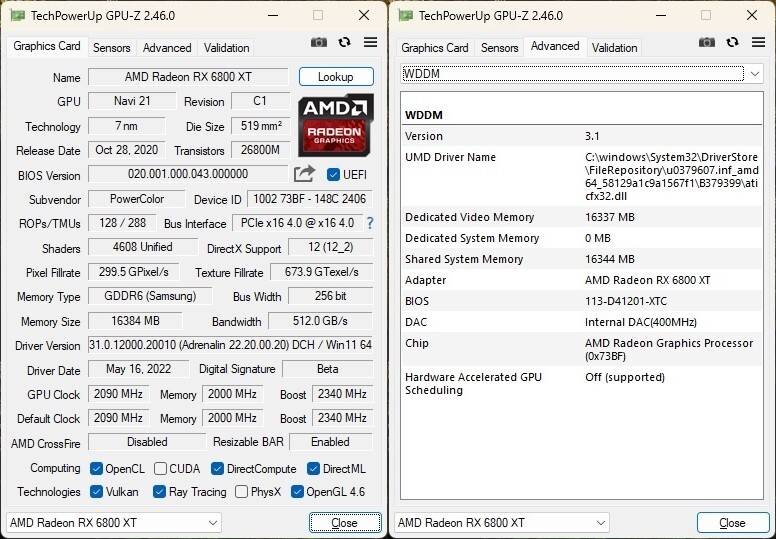

Not sure if the statement on OpenGL is true or not (I literally can't find benchmarks), but TechPowerUp says the new driver reaches 3080 levels on the 6800 XT in the Unigine Valley benchmark.

Their AMF encoder has gone from neglected to competitive, but they still need to work on wider software support, and improved hardware encoders next generation for sure.

Whilst they still have plenty to do, if they keep up the momentum I might be interested next gen - or at least I won't default to an Nvidia recommendation when people ask what they should buy.