Soldato · Joined: 23 Jun 2004 · Posts: 4,888 · Location: Blackburn

Well, that's my point: if DLSS lowers image quality like you are suggesting, then native 1440p should look better than 4k DLSS Quality, since they both render at 1440p. But it doesn't. It actually looks much worse. Which is the point.

Well yeah, that's pretty obvious, as lowering the resolution on your monitor is going to look bad. In real-world use people don't do that. If I have a 4k monitor, I'm not going to run 1080p or 1440p because it will look bad. I'm going to choose either 4k native resolution or 4k with DLSS if the game supports it.

4k native on a 4k monitor looks better than 4k with DLSS, especially in motion. In some games DLSS does look nearly indistinguishable, but there are always scenes where you can see artifacts if you go out of your way to look for them.

Anyway, this thread is getting a bit silly now. Maybe drop the whole upscaling discussion.


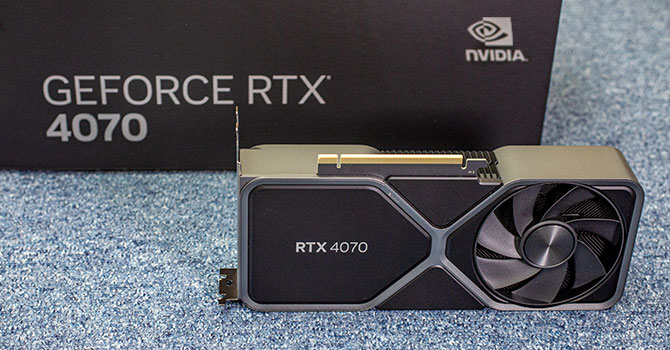

- although given current pricing and performance uplifts they are actually not a bad choice at all).

R. Bought an FE model to test. I don't recommend it at current pricing for a purely gaming card, but I'm interested in seeing what it can do for myself.
