It's not a question of whether AMD is big enough to make huge chips. There is a lot more to it than that, as you well know. If huge monolithic chips were the future, do you think that Intel would be striving their nuts off to get their chiplets out of the door?

The other way to think about this is:

Are chiplets a good thing for the consumer, or for the manufacturer?

This is similar to asking "who gains the most from Intel's hybrid approach?"

In both cases the primary reason was that it is cheaper to manufacture. In AMD's case because back then they didn't have the budget, in Intel's case because the P cores are simply too big*.

And in both cases, consumers do gain something: without chiplets AMD would probably not be back in the game; for Intel some workloads do gain from the E-cores.

But back to my original question: I think AMD are now large enough to be able to offer a big monolith core. And there is little doubt that a 16C monolith with full cache and the IMC on-die would perform better and consume less power when idling.

* yet as @humbug says Intel are - at least in the server market - giving away their CPUs despite the space saving of the E cores (although to be strict: Intel's Hybrid hasn't made it to servers yet).

Poll: Ryzen 7950X3D, 7900X3D, 7800X3D

- Thread starter kindai

It's not a question of whether AMD is big enough to make huge chips. There is a lot more to it than that, as you well know. If huge monolithic chips were the future, do you think that Intel would be striving their nuts off to get their chiplets out of the door?

Now here is a good point: Intel have for the past 4 years been trying their hardest to copy exactly what AMD are doing.

Didn't Apple just recently make a small revolution with M1/M2 chips, very monolithic, with many specialised modules and a GPU integrated into one?

I don't know, I don't follow what Apple are doing. I don't mean that in a derogatory way, I'm sure it's all very interesting, but I have my plate full with hobby interests already.

Pretty much all semiconductor companies have been trying to do MCM and 3D stacking for decades, because they have all long recognised the limitations of monolithic. Apple are no different.

M2 Max is almost a wafer-scale chip with the way it is arranged. Think Cerebras - https://en.wikipedia.org/wiki/Cerebras#Technology - although there are no images in the wiki article, but I found one:

What hasn't been tried AFAIK is designing a chip that can be cut up but can also be used as one piece. Yes, the traces would have to be big and all heading out to the next "chiplet", and when cut the connections would have to be routed under the chip somehow.

On the other hand 3D stacking is sort of like that. So maybe in Zen5 or so, the IO die will no longer be beside the CCD but underneath it as every mm costs hugely in power.

I don't think it's possible to remove previous drivers. I couldn't find an option to do so, and I read somewhere else that you shouldn't anyway. The new ones installed over the old ones fine.

Ahh good, I've bought an Asus board.

Do you have to uninstall previous drivers before the new ones on AMD? Just for future reference of course.

That is interesting.

They would need an interposer under that: a piece of silicon inside the PCB with the traces to connect it all together. Ryzen and RDNA3 chips all sit on top of an interposer.

Of course it's not as simple as that; actually making it work is the complicated bit.

While making this one-foot die work might be theoretically possible, actually doing it is an entirely different matter, and even if they could, the package is way too large to actually use.

It’s done through Windows apps AFAIK. I just read that it can cause conflicts. Most people I’ve asked say they just leave it, to be fair.

I understand yields and cost reduction, yes.

You didn't answer my question though.

You hit scaling and cost walls with those types of architecture and the result is underperforming, expensive chips whose only remedy is to push power use to gain performance.

If the goal is to build slow, expensive and power-hungry chips, those types of designs are great, but without silicon stacking or some technological breakthroughs, you run into a dead end like Intel.

I can think of one company that builds the end game of monolithic designs, and its power use is measured in the kilowatt range.

@KompuKare on 3D stacking.

These 3D chips are showing us where AMD are going next with APUs.

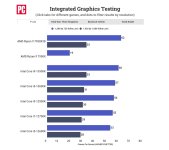

The 7000 has a token iGPU; it's just for display output, nothing more. On the X3D variants it has access to the 3D stacked cache, which is even on a different die. The result...

A 3.3X improvement in performance.

It didn't need access to that cache, and I'm sure it has to be deliberately wired up to get it....

As I'm watching him a bit more, his content is really no different to the attention-seeking, clickbaiting, trolling and fanboying that goes on here.

He’s very good at getting people fired up. He uses a hot topic, adds in a small percentage of fact and then goes completely over the top to get attention. I suppose just testing the way people in here would prefer to see wouldn’t be entertaining, so he chooses the clickbait style instead.

The link for that ^^^ sorry.

AMD Ryzen 7000 "Phoenix" APUs with RDNA3 Graphics to Rock Large 3D V-Cache

AMD's next-generation Ryzen 7000-series "Phoenix" mobile processors are all the rage these days. Bound for 2023, these chips feature a powerful iGPU based on the RDNA3 graphics architecture, with performance allegedly rivaling that of a GeForce RTX 3060 Laptop GPU—a popular performance-segment discrete GPU. What's more, AMD is also taking a swing at Intel in the CPU core-count game, by giving "Phoenix" a large number of "Zen 4" CPU cores. The secret ingredient pushing this combo, however, is a large cache.

What a night, first Geordie Shore, then lame chasers and a finale of Jersey Shore. Totally reem.

It's going to be a joke/meme CPU, I reckon. Basically there is no point buying it over a 7800X3D, and if you can afford a 7900X3D you may as well take the 7950X3D.

Reviews rolling in seem to confirm this is the state of it.

That's not true, not by a long shot. At the same wattages, RPL is 10-15% less efficient than Zen 4 at heavy MT. But on the other hand it wins massively in lower-threaded tasks like the whole Adobe suite.

This is a dilemma. We know that at full load RPL is far, far less efficient than Zen4 and especially Zen4 3D.

The biggest problem with the 13900k is gaming efficiency which sucks, and it is why I abandoned it for the 12900k.

Regarding the 7900X3D, they should have just made a 7600X3D. Although I appreciate it’s probably got less profit margin for them.

I wonder if we will see the next generation of x3d chips go a different route, perhaps 3D cache on all cores/chiplets.

This current design has some benefits clearly but it seems a bit inelegant design wise.

I think a 5600X3D is possible. Maybe once AMD have enough salvaged parts to release it as a temporary product.

Still dodging the questions.

Those monolithic dies are gone from design for many reasons.

They take time to design, and there are costly wafers and error rates.

They make no financial sense.

Not everything in a chip needs to be reworked.

Intel lost leadership a decade ago when they tried that 10nm fiasco with big cores.

AMD is crushing them in all areas with a small-die design.

It can scale, is cheaper to make, and the critical areas of the die are faster to design.

It's why Intel is slow, late and draws power.

It's why small-die designs are now in a GPU, and next-generation AMD will benefit massively where Nvidia has to redesign things to make it work.

If you ran AMD as a company it would be bankrupt now.

Just saying.

It's why I buy AMD, as it's run by people that know things.

1) Do you think a monolithic implementation of AMD's current architecture would perform worse?

Or

2) Do you think better performance is going backwards?

I know the chiplet approach helps with yields and costs. That's why I didn't ask that question.

Saying that going monolithic would be going "backwards" sounds more like a fanboy defending his team's current approach rather than a defendable assertion.
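For anyone who wants to see why nobody disputes the yield side of this, here is a rough sketch using the textbook Poisson defect model (yield = exp(-area x defect density)). The die areas and the defect density below are made-up illustration values, not AMD or Intel figures, and the function names are just mine. It only counts silicon area per working product and ignores packaging, interconnect overhead and die salvage.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson defect model."""
    return math.exp(-area_mm2 * defects_per_mm2)


def silicon_per_good_product(die_area_mm2: float, dies_per_product: int,
                             defects_per_mm2: float) -> float:
    """Wafer area (mm^2) consumed per working product.

    Each product needs `dies_per_product` good dies, and every good die
    costs die_area / yield of wafer area on average.
    """
    good_fraction = poisson_yield(die_area_mm2, defects_per_mm2)
    return dies_per_product * die_area_mm2 / good_fraction


# Hypothetical numbers, for illustration only.
D0 = 0.001            # defects per mm^2 (assumed)
MONO_AREA = 200.0     # one big monolithic die, mm^2 (assumed)
CHIPLET_AREA = 100.0  # two chiplets of half the size, mm^2 each (assumed)

mono = silicon_per_good_product(MONO_AREA, 1, D0)
chiplets = silicon_per_good_product(CHIPLET_AREA, 2, D0)

print(f"monolithic: {mono:.1f} mm^2 of wafer per good product")
print(f"chiplets:   {chiplets:.1f} mm^2 of wafer per good product")
```

With the same total area, the split design always needs less wafer per good product in this model, and the gap widens as dies get bigger or defect density rises. It says nothing about performance or idle power, which is the part the two questions above are actually about.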
