Wouldn't surprise me, tbh; Pascal has been widely speculated to be heavily based on Maxwell.

Drip...Drip...Drip...Feeeeeeeeeeeeeeeeeed

I'm hedging my bets and keeping my 980 Ti, I think...

Please remember that any mention of competitors, hinting at competitors or offering to provide details of competitors will result in an account suspension. The full rules can be found under the 'Terms and Rules' link in the bottom right corner of your screen. Just don't mention competitors in any way, shape or form and you'll be OK.

As do I. And if Pascal doesn't do async, as the article above suggests, I don't see Nvidia being in trouble, because it will hardly get used. Nvidia have over 80% of the market and just about every game coming out will be a GameWorks title, so no async. Async won't make a jot of difference; it's not the great white hope some are expecting. Nothing will change. Nvidia are just too strong.

The problem, sadly, is the 55 million consoles that will be using it in all the big cross-platform titles!

It'll only be used on the consoles. Do you really think Nvidia are gonna let game after game after game come onto the PC with async if they can't do it? Not a bloody chance. They had DX10.1 ripped out, and this'll be no different, IMO. Besides, they'll all be GameWorks titles, as I said, so they won't have it anyway if Nvidia still don't support it.

If they ever do support async, then the games will flood out, but not before.

I know the technology isn't the same, nor are the results, but this discussion has loads of similarities to the tessellation/over-tessellation discussions of recent years.

If mid-range Pascal beats a 980 Ti, then obviously they will want to shift as many 980 Tis as they can beforehand.

As I just said, I know the technology isn't the same; in GPU terms, the two techs are completely different.

But the discussion this forum is having has some similarities to the whole tessellation/over-tessellation discussion that happened a while back, just the other way around this time: AMD has the performance advantage if lots of async is used.

If that's all 100% accurate, why did Oxide disable async for Nvidia GPUs?

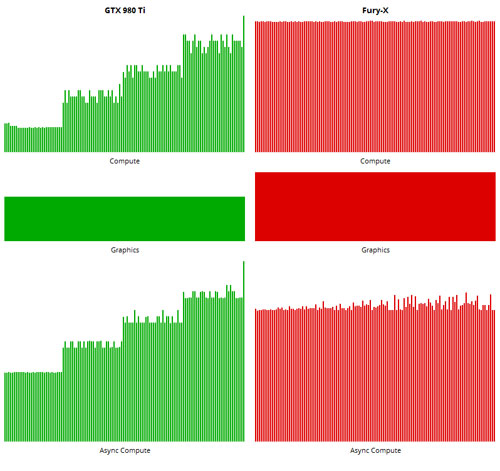

You are suggesting that enabling async compute only represents a performance bonus for hardware that supports it. You are saying that enabling it and increasing the queue depth should carry no penalty on Nvidia hardware with a smaller queue capability. Yet the evidence completely obliterates that argument.

Oxide didn't disable the effects; they found that disabling async actually improved performance with the same settings and the same number of effects.

Either it's like hyperthreading or it's not. You can't really write four paragraphs comparing it to HT, saying it doesn't cause a performance hit, and then just say, "oh well, actually it kinda does, but it's still fine for people to optimise for AMD hardware and not Nvidia."

Where did I say any of that?

I didn't say "it doesn't have a performance hit but, ah, it kinda does", nor anything like it. Everything suggests that AMD actually have async compute, and as such can push 8 threads through the 'GPU' without a significant performance hit, increasing overall performance. It also suggests that Nvidia do NOT have async compute at all, and as such are trying to jam 8 threads through a 4-thread 'GPU'; every time the threads switch context they take a significant performance hit, decreasing overall performance.

The entire point is that, in this comparison, AMD definitely has HT enabled and Nvidia do not appear to.
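To make the hyperthreading analogy a bit more concrete, here's a toy frame-time model. It's only a sketch under made-up assumptions: the `Frame` fields, the "shorter queue hides inside the longer one" overlap rule, the number of queue switches and the per-switch cost are all hypothetical, and none of this is how a real driver or GPU scheduler actually works. It just shows the shape of the argument: hardware that can genuinely overlap graphics and compute gains from async, while hardware that has to time-slice can end up slower with async on than with it off, which would be consistent with what Oxide reported.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    graphics_ms: float   # time the graphics queue needs on its own
    compute_ms: float    # time the compute queue needs on its own
    queue_switches: int  # hypothetical graphics<->compute switches when async is on


def frame_time(frame: Frame, async_enabled: bool, overlaps: bool,
               switch_cost_ms: float) -> float:
    """Toy frame-time model (hypothetical, not a real GPU scheduler)."""
    if not async_enabled:
        # Async off: the engine runs one queue after the other,
        # so there is no overlap but also no extra switching.
        return frame.graphics_ms + frame.compute_ms
    if overlaps:
        # Async on, hardware runs both queues concurrently (the "HT enabled" case):
        # idealised so that the shorter queue is completely hidden.
        return max(frame.graphics_ms, frame.compute_ms)
    # Async on, but the hardware can only time-slice: the work is still serial
    # and every context switch between queues adds a penalty.
    return frame.graphics_ms + frame.compute_ms + frame.queue_switches * switch_cost_ms


if __name__ == "__main__":
    # Made-up numbers purely for illustration.
    frame = Frame(graphics_ms=10.0, compute_ms=4.0, queue_switches=8)
    for label, overlaps in (("overlapping hardware", True), ("time-slicing hardware", False)):
        off = frame_time(frame, async_enabled=False, overlaps=overlaps, switch_cost_ms=0.25)
        on = frame_time(frame, async_enabled=True, overlaps=overlaps, switch_cost_ms=0.25)
        print(f"{label}: async off {off:.2f} ms, async on {on:.2f} ms")
    # prints:
    # overlapping hardware: async off 14.00 ms, async on 10.00 ms
    # time-slicing hardware: async off 14.00 ms, async on 16.00 ms
```

With these invented numbers, the overlapping card goes from 14 ms to 10 ms with async on, while the time-slicing card goes from 14 ms to 16 ms, i.e. turning async off is the faster option for it. The real-world sizes of those effects depend entirely on the hardware and the game.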

And I'll still be keeping my 980 Ti.

I won't be falling for a mid-range card marketed as high-end. Once bitten, twice shy. I only want the next Ti variant.

Indeed...

Buying a Titan (I didn't) and then seeing the 980 Ti come out for half the price was ugly for consumers...

When Pascal comes, and if it's £250 and on par with 980 Ti performance, I'm not buying into it...

This won't happen, though.