What you will find is that if you are power limited in the game you are running, you may get away with a lower voltage setting, because the core clock is not boosting as high. However, if you then play a game where the GPU is not bouncing off the maximum power limit (e.g. Warzone), you will need more voltage to attain stability, because the GPU has the power headroom available to boost the core clock higher. This higher core clock needs more voltage when not in a power limited scenario.

Increasing the min frequency seems to increase reported frequencies but decrease performance.

1075mV seems to be stable for my MBA 7900 XTX; 1070mV crashes. For some reason, though, it's not stable while playing Warzone even at 1085mV (all other games are OK), and I don't know whether this is a game issue.

Also, the reported voltage never goes above 950mV.

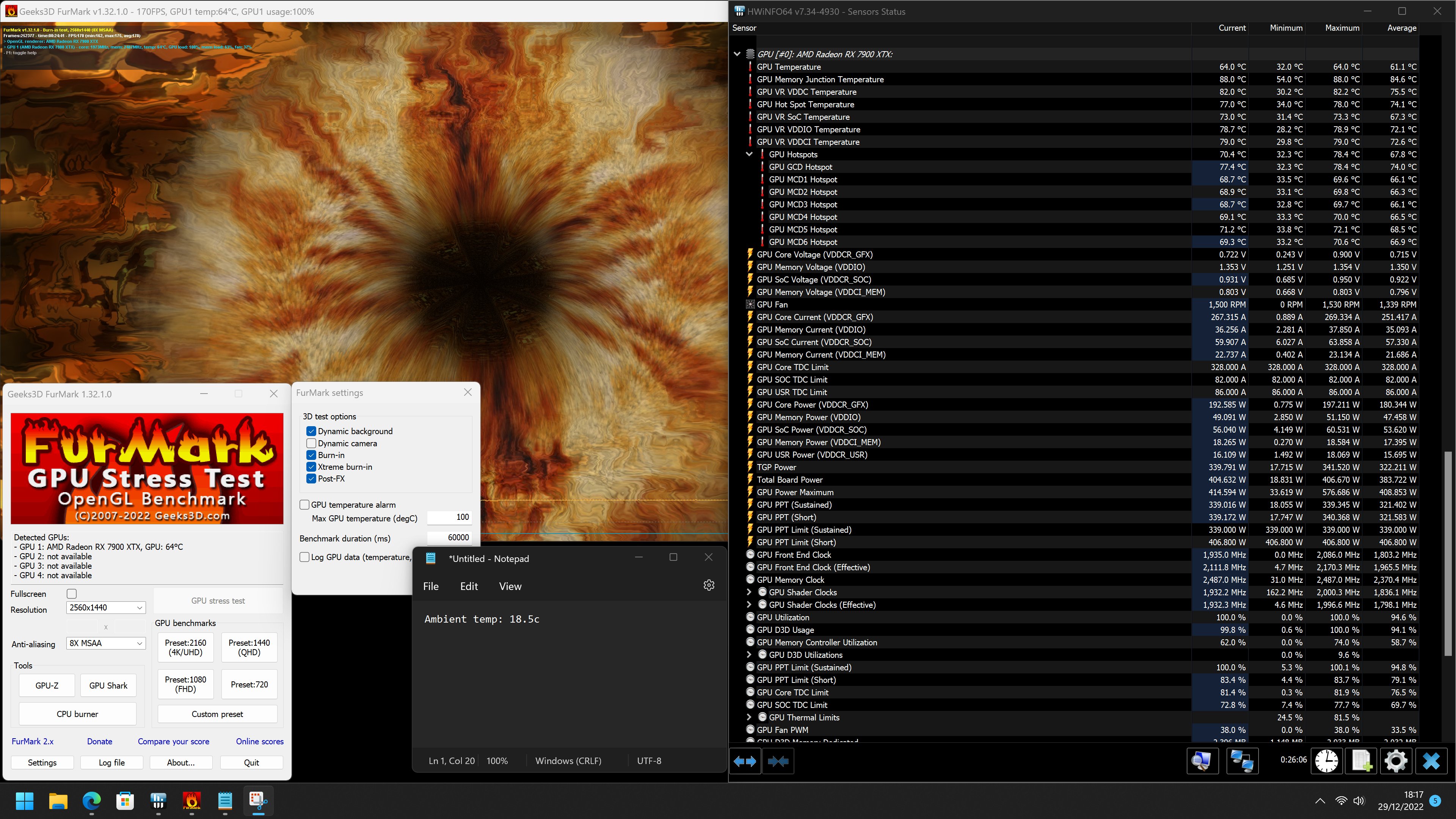

Max hotspot is 92°C (only with the power limit at +15%).
