
Unreal Engine 5 - unbelievable.

- Thread starter Too Tall


So... global illumination seems to make real-time ray tracing rather pointless...?

Ray tracing is one of the algorithms used for global illumination. Others include path tracing, ambient occlusion and a few more I forget.

I think what bothers me the most, and people haven't understood yet, is that you aren't getting highly detailed models on the screen. What you get from Nanite, the main advantage, is the elimination of LOD transitions, which is great. But you're not seeing the models at the quality they're bragging about because there's the auto-downscaling of detail that's happening. It's like dynamic resolution except for model detail. So when I look at what they've shown and say we have games with higher levels of details (in highlights if not for the whole scene overall) that's what I mean. When I mod a game to get the best LOD I can forced on all the time, that's what I get, no matter the resolution and no matter the object's position & size on the screen. Here, you're not gonna have that, the level of detail is going to be always fluctuating & the real question is, will you ever see it properly? That's what I'm not sure about because the demo & screenshots are low res so we don't know how it would look at a proper 4K (since detail scales with resolution & screen space occupancy). I just hate this trend of fighting against the clarity of small details. It's a TAA mentality - just broad brush strokes & equalise visual differences even at localised losses. BLEAH!

Soldato

- Joined

- 14 Aug 2009

- Posts

- 3,487

Nah.

None of those games have assets of the same quality, so the comparison is pointless.


You shouldn't lose quality. Think of it like this: you take a 24mpx photo with your camera and you're looking at it on the monitor. Let's say, for argument's sake, you look on a 1080p screen (so 2mpx shown only from the 24mpx picture). The resolution of the displayed image is much lower (2mpx vs 24mpx of the original), but the picture looks ok from "afar". Zoom it to 100% and you have all that detail of the 24mpx brought up. Same with the game: seen from distance the detail is there, going in close -> the detail is there as well. The trick is the engine manages to "compress" and "decompress" those assets as needed, just like Lightroom (let's say) does with your picture.


What do you mean? They literally do. I mean for god's sake, there are games which use EXACTLY Megascans just like the demo (e.g. JFO). So what are you talking about, the exact LOD model? But that's what we don't know (and yes, I know with this you don't get different LOD models, I ofc mean what the equivalent would be etc).

I understand how it works, but the problem is the theory doesn't align with reality. You can see that by looking at the actual screenshots. I mean hell, forget about the statues, which is what's most immediately noticeable as bs, you can even compare the assets of the rocks with the actual megascans in UE4 right now. It's absolutely not the same level of detail, no matter the zoom level. And that's my issue: they're trying to push this narrative of incredible level of details, 8K textures, bla bla and bla, but I have two eyes and I can see and contrast. It's just not even at the level of quality of 4K assets from what we've been shown, so there's some heavy down-scaling of detail going on. The question is, how hard is that tied to the resolution? I look forward to seeing more details about it.


I slightly mentioned it earlier about compression - they are almost certainly using a system that pulls scene relevant data from the model set(s) rather than brute force rendering millions of polygons from the model every time and it potentially scales for performance and resolution as well - so in a heavy scene the amount of detail on the same model at the same distance compared to a less complex scene could be very different.


Yup. Saw someone else attempt to think it through:

You cannot beat the rasteriser in general, but you can when you have that crazy 1 tris per pixel mapping.

So what happens is:

- if the tris is small enough, they send it down the compute pipe and do a sort of software rendering instead of rasterising it

- if the tris is bigger than a threshold (probably a 2x2 fragment block) they send it down the render pipe for standard rasterisation as that's going to be faster

This way you can mix and match ultra high detail meshes and "standard" detail meshes together, without having to worry about how big your triangles are going to be on screen.
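To make the two cases above concrete, here is a minimal sketch of that routing decision. It is only a guess at the idea, not Epic's implementation: the 2x2 fragment threshold comes from the speculation above, and the names `ScreenTriangle`, `choose_pipe` and `split_by_pipe` are made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical threshold: the area of a 2x2 fragment block, as guessed above.
SOFTWARE_RASTER_MAX_PIXELS = 4.0


@dataclass
class ScreenTriangle:
    """A triangle already projected to screen space (pixel coordinates)."""
    x0: float
    y0: float
    x1: float
    y1: float
    x2: float
    y2: float

    def area_pixels(self) -> float:
        # Half the absolute cross product of two edges gives the area.
        cross = ((self.x1 - self.x0) * (self.y2 - self.y0)
                 - (self.x2 - self.x0) * (self.y1 - self.y0))
        return abs(cross) / 2.0


def choose_pipe(tri: ScreenTriangle) -> str:
    """Return "compute" for tiny triangles, "hardware" for larger ones."""
    if tri.area_pixels() <= SOFTWARE_RASTER_MAX_PIXELS:
        return "compute"
    return "hardware"


def split_by_pipe(tris):
    """Partition triangles into (compute_list, hardware_list)."""
    compute, hardware = [], []
    for tri in tris:
        (compute if choose_pipe(tri) == "compute" else hardware).append(tri)
    return compute, hardware
```

A real renderer would make this choice on the GPU, probably per cluster rather than per triangle, but the branch itself is the same shape.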

My understanding is, at least in this demo where all meshes have an absolutely insane amount of geometry, the vast majority of the geometry is software rendered, not using primitive shaders.

I theorised years ago that an ultra-specialised compute-based micro-polygon software renderer similar to REYES could beat the standard pipe, and some early benchmarks showed it was possible at least in some cases.

What I was missing is how to process the insane amount of geometry you need to achieve 1:1 tris to pixel mapping in most cases.

The details are not out yet, but I see the idea of using image-based topology-preserving encoding of the meshes and stream them just like virtual textures (especially now that we have sampler feedbacks) is becoming increasingly likely.

Afaik there are two approaches described in literature, that have never been used in production before.

One is to transform and encode the topology information into a texture, the other one breaks it down into sort-of meshlets and pre-compute progressive LODs automatically (I think they're called progressive buffers or something like that).

My bet would be on the first approach for a variety of reasons (also because it is the craziest one).

The way the encoding works is so that lower mips of the texture correspond to a similar topology but less detailed.

This maps almost too perfectly onto the virtual texturing scheme and sampler feedbacks, to not be the approach used.

If you're generating too much geometry in a certain area, drop a mip level in that section, if too little, stream in a higher mip.

This implicit parametrisation should also be easier on the disk size and highly compressible with standard texture techniques, which could be how they can avoid shipping 10-terabyte games to the user lol.
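The "drop a mip if too much geometry, stream a higher one if too little" loop could look roughly like this. It is a sketch under assumptions, not anything confirmed: mip 0 is taken to be the most detailed level, the target is about one triangle per pixel, and `adjust_mip` with its `tolerance` parameter is a hypothetical name and knob.

```python
def adjust_mip(current_mip: int, triangles: int, pixels: int,
               max_mip: int, target_ratio: float = 1.0,
               tolerance: float = 2.0) -> int:
    """Pick the next geometry mip level for one screen region.

    Mip 0 is the most detailed level and max_mip the coarsest.
    The region is left alone while triangles per pixel stay within
    a factor of `tolerance` of `target_ratio`.
    """
    if pixels <= 0:
        return max_mip  # Nothing visible: keep only the coarsest level.
    ratio = triangles / pixels
    if ratio > target_ratio * tolerance and current_mip < max_mip:
        return current_mip + 1  # Too much geometry: drop to a coarser mip.
    if ratio < target_ratio / tolerance and current_mip > 0:
        return current_mip - 1  # Too little geometry: stream in a finer mip.
    return current_mip


# A region covering 1000 pixels that currently produces 8000 triangles
# is over budget, so it moves from mip 2 to the coarser mip 3.
print(adjust_mip(current_mip=2, triangles=8000, pixels=1000, max_mip=5))
```

The tolerance band is there so a region sitting near the target does not flip between two levels every frame.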

And ofc, a blast from the past (because nothing is ever entirely novel):


Yeah I can't imagine the system isn't just a clever use of sparse octree techniques on a per model set basis.

Soldato

- Joined

- 14 Aug 2009

- Posts

- 3,487


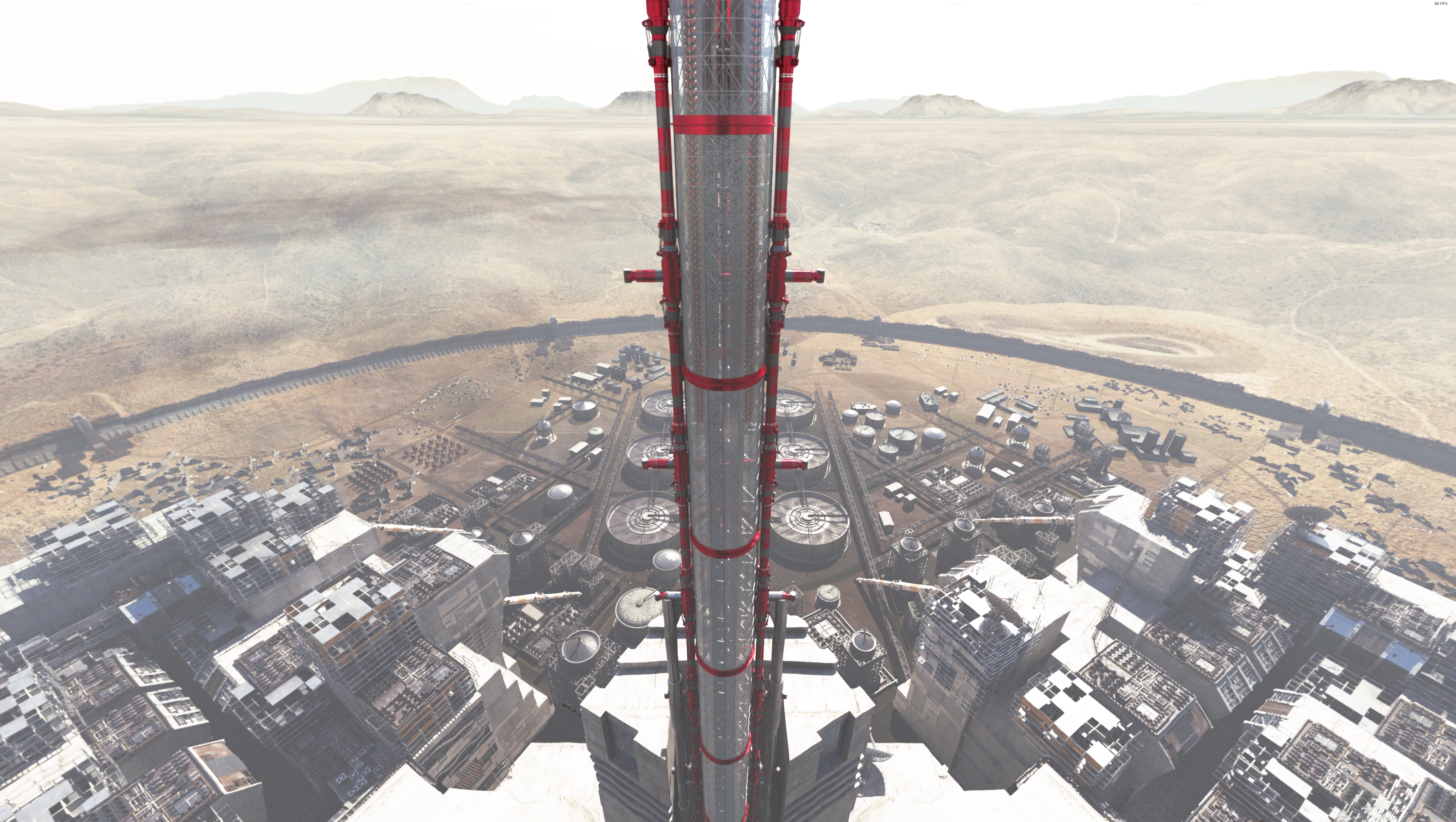

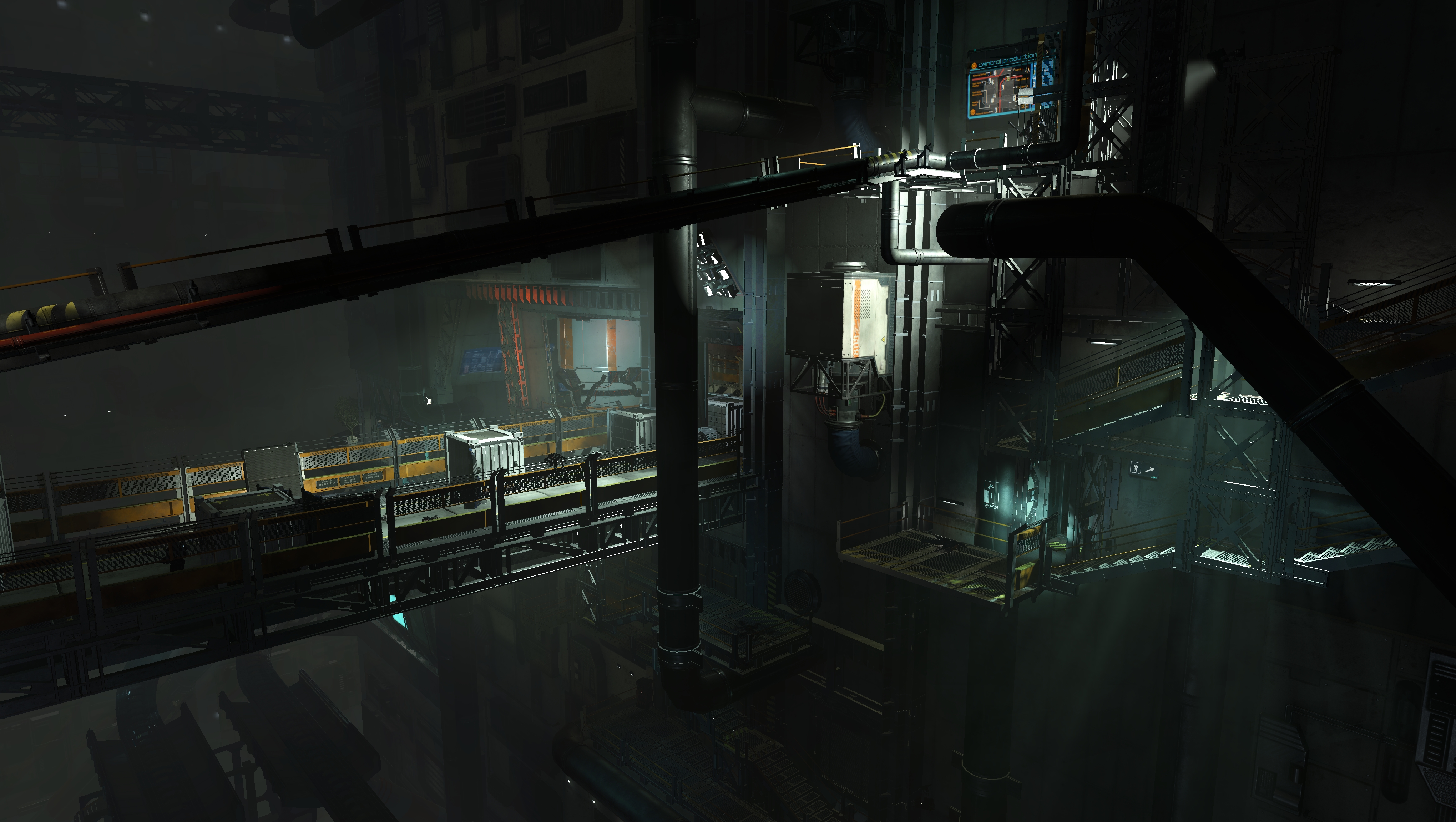

Every asset in those games was designed and built to run acceptably within the current paradigm, so it is already "optimized" for current systems, including the original Xbox One and PS4. None of those games have billions of polygons to draw detail from: over 16 billion triangles for the statues in that room alone. Moreover, Metro Exodus has lots of low-end, low-poly stuff around. RDR2, although it has OK texture work, is still lacking in certain departments and for sure is not "wow". Star Citizen "abuses" POM to bring in detail due to a "lack" of geometry. DF made a video about this as well.


Looking at the stream in 4K ( https://www.unrealengine.com/en-US/blog/a-first-look-at-unreal-engine-5 ), when they stop and pan over the ground at the beginning, the textures have plenty of detail (also when she passes by that small opening at the exit of the cave), and the terrain itself has enough geometry to pass as real to me. Do the same thing in RDR2 or Metro Exodus (where it is very noticeable) and you'll see the flaws in textures and geometry.

Probably some TAA/FXAA/SMAA is going on as well, which in the absence of a sharpening filter can, and most likely will, reduce sharpness in certain situations. Maybe true 4K looks much sharper. However, looking at the demo in motion, as a whole, it does have a filmic look and is very convincing to me. More so than any other game that I've played or seen. All that detail seems real, tangible and not just a texture.

Associate

- Joined

- 1 Aug 2017

- Posts

- 686

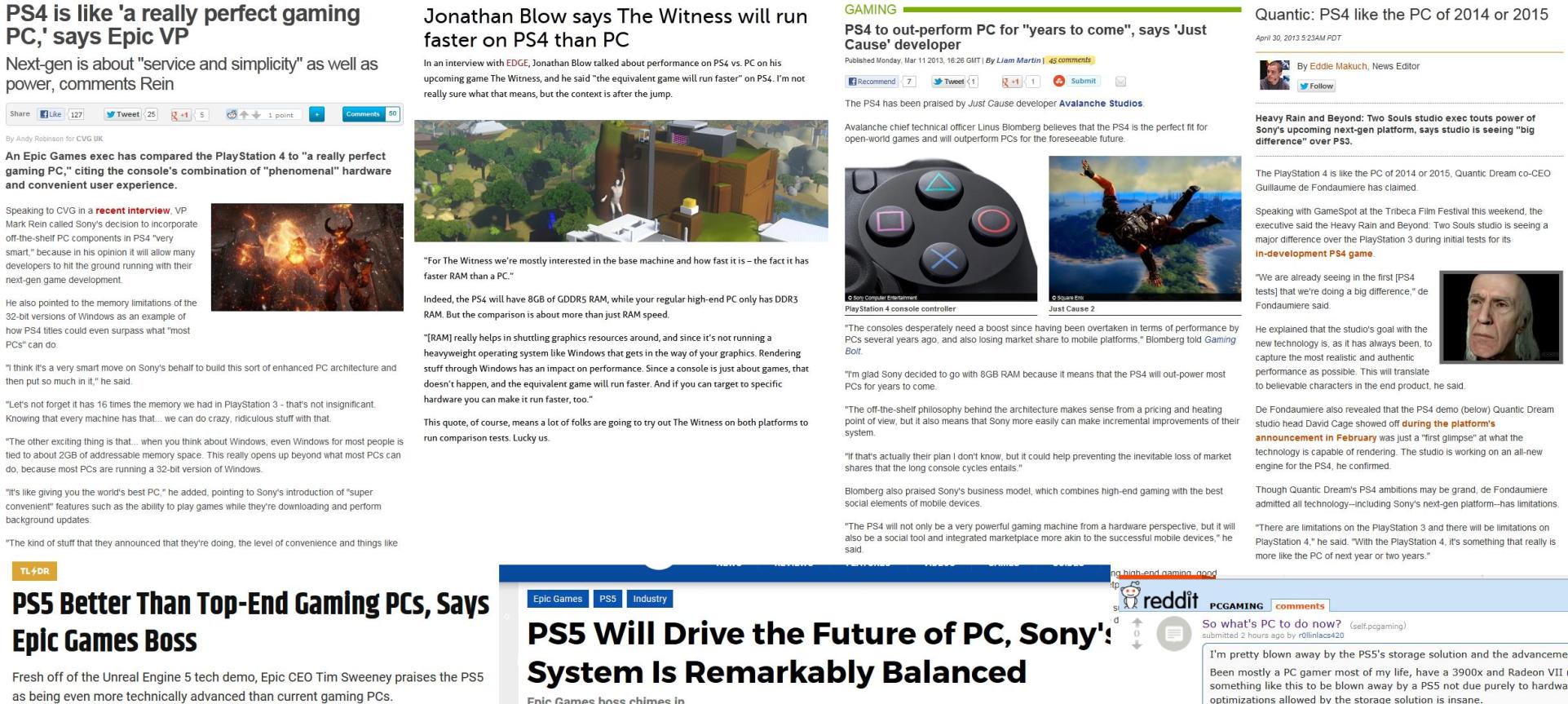

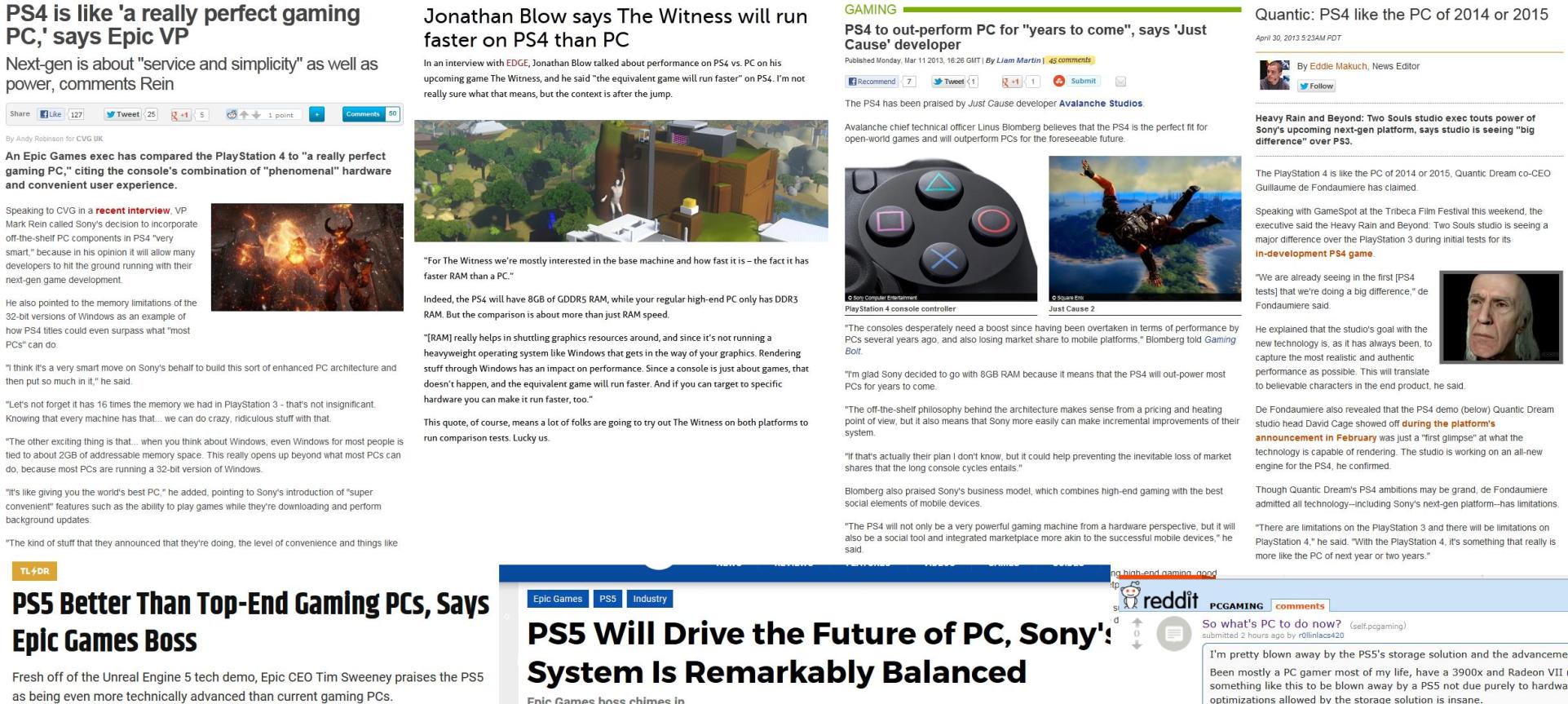

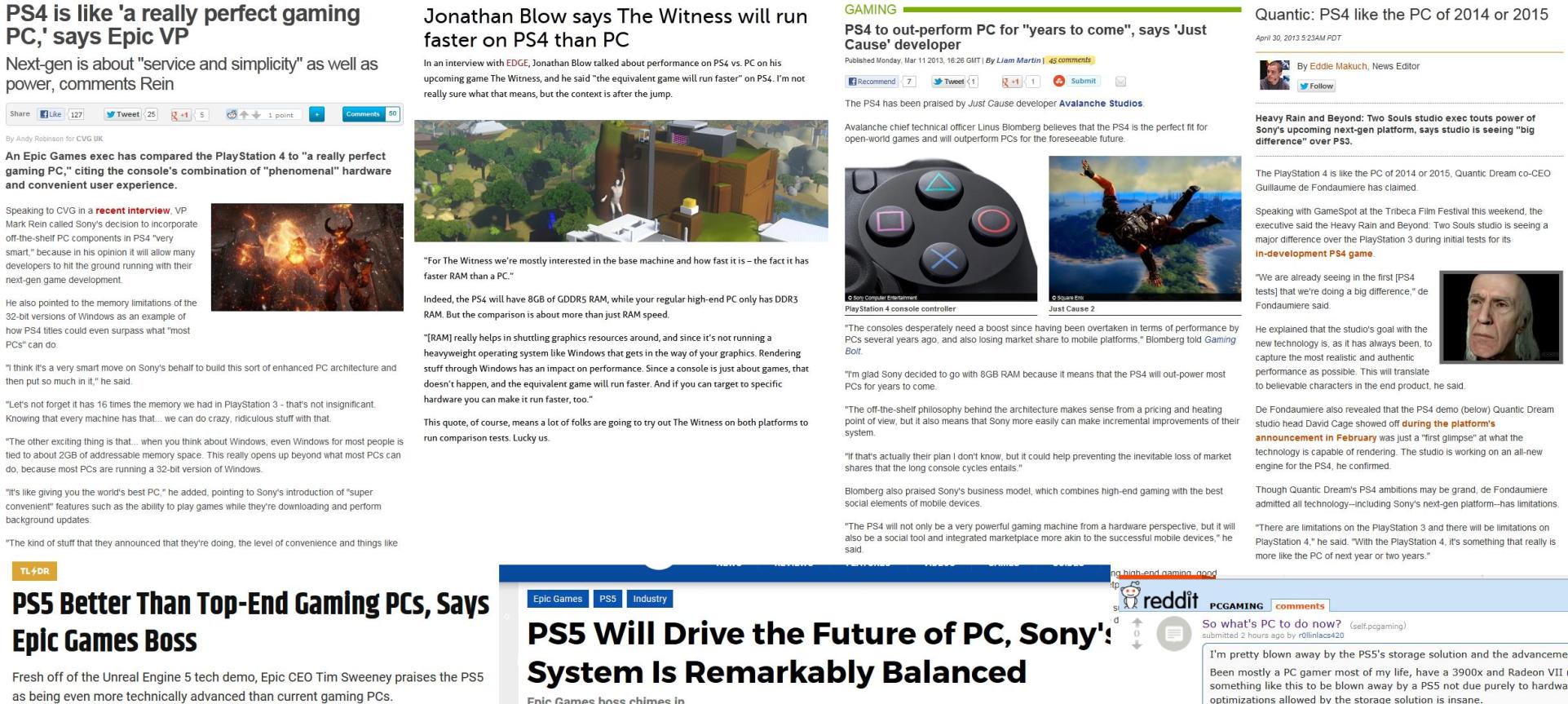

Sweeney is just a PR mouthpiece. Also this:

New generation, same marketing BS. Some gullible morons obviously fall for it, even Devs.


Soldato

- Joined

- 14 Aug 2009

- Posts

- 3,487


Try to look a bit deeper:

Jaguar was a terrible CPU, Ryzen it is not.

The PS4 GPU had 1.84TF (a little more powerful than the HD 7850, $249 at launch), while the R9 290X, launched at about the same time, had 5.63TF and cost around $549.

Compare that to now (strongest console GPU: 12TF in the Xbox). Speaking only in terms of GPU and keeping the ratio the same, AMD would need to launch at about the same time a GPU of roughly 36.7TF at what... around $599 (or whatever the inflation is)? Good luck with that.
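For anyone who wants to check the scaling, here is the arithmetic as a quick sketch. It uses only the TF figures quoted in this post; the function name `equivalent_tf` is just for illustration.

```python
def equivalent_tf(console_then: float, pc_then: float,
                  console_now: float) -> float:
    """Scale today's console TF by the PC-to-console ratio of last gen."""
    ratio = pc_then / console_then
    return console_now * ratio


ps4_tf = 1.84        # PS4 GPU
r9_290x_tf = 5.63    # R9 290X, launched around the same time
xbox_now_tf = 12.0   # strongest next-gen console GPU

ratio = r9_290x_tf / ps4_tf
print(f"PC/console ratio last gen: {ratio:.2f}x")
print(f"Equivalent PC GPU today: "
      f"{equivalent_tf(ps4_tf, r9_290x_tf, xbox_now_tf):.1f}TF")
```

With those inputs the ratio is about 3.06x, which puts the equivalent PC card at roughly 36.7TF.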

Never mind the storage speed.

This is not the same as what it was with the previous gen and if the devs want to get the best out of it and target 1080p@30/60fps, the leap in visual fidelity should be significant.

Associate

- Joined

- 1 Aug 2017

- Posts

- 686


It's all just PR/marketing to help shift hardware and games. This is just a fancy demo (which I'm not that impressed with anyway) at this point.

People who want to believe will believe anything that fulfills their dreams.


Ah here he is. Rock n Troll. The guy who said Stadia was the next best thing.

Of course you aren't impressed with the tech demo. It mentioned a console. If it was a PC tech demo you would have been pulling your plonker over it.

Associate

- Joined

- 22 Jun 2018

- Posts

- 1,786

- Location

- Doon the watah ... Scotland

I thought it looked great. As mentioned earlier, the surfaces looked tangible and realistic to a level I've not seen before. The character less so, but the rocks and walls yes.

As for 4k... I personally would rather have a realistic looking smooth 1080p than a jagged 4k 60fps at the expense of image quality. I think in the goal of raytracing and next gen engines, 1080p is acceptable imho.


I get the feeling the tech doesn't work so well on animated organic models versus static non-organics.

Would be interested to see some real-world figures for the PS5. Something like a Time Spy Extreme run, or some current games with comparable graphics options set on PC and PS5.

I will say I'm interested in the PS5. Let's see how much it'll be and whether the release titles look like the UE5 demo. I won't hold my breath.


Last year a 3DMark bench was leaked for a PS5 devkit, and it matched the RTX 2080. I'm struggling to find it again though, perhaps someone else has a copy of it.

Every few generations the consoles release ahead of PC gaming GPUs for a few months, then either through driver optimisations or new releases the PC regains the advantage. It doesn't really matter. This time round the consoles will probably release with the advantage, as it's not happened for a while, then afterwards the 3xxx series cards will bring it back to the PC, and possibly AMD's release will count too.