
Virgin Media Discussion Thread

- Thread starter macca40


That statement is so ignorant, I'm genuinely not sure where to begin (or, honestly, whether it's worth the bother).

It doesn't matter which OS you run it on. As it happens, flent runs anywhere but Windows, including on macOS. Your router isn't running Windows. Your switch isn't running Windows. The core network at your ISP isn't running Windows. The Internet isn't running on Windows, either. When you flood your Internet connection with traffic and choke it because of bufferbloat (you lose packets because the buffers are full to overrunning and there's no fair queuing or other queue management), it doesn't matter one jot whether that traffic emanated from a Windows machine, a Raspberry Pi running Debian, a FreeBSD server or a mobile phone. Traffic is traffic. In the same way, an RRUL test (which is an industry standard, btw) emulates the type of traffic that will saturate a line in both directions and then measures what breaks. Did you even read the website?
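To put a number on "the buffers are full": a packet arriving behind a full buffer has to wait for everything ahead of it to be serialised onto the link, so the added delay is roughly the buffered bytes divided by the link rate. The sketch below only does that arithmetic; the 256 KiB buffer and both link rates are hypothetical figures for illustration, not measurements from any particular modem or router.

```python
def queue_delay_ms(buffered_bytes: int, link_rate_bps: float) -> float:
    """Approximate time (ms) a new packet waits behind `buffered_bytes`
    already queued on a link draining at `link_rate_bps` bits per second."""
    return buffered_bytes * 8 / link_rate_bps * 1000


if __name__ == "__main__":
    buffer_bytes = 256 * 1024  # hypothetical 256 KiB buffer
    for label, rate in [("20 Mbit/s uplink", 20e6), ("1 Gbit/s downlink", 1e9)]:
        # Same buffer, very different induced delay depending on link speed.
        print(f"{label}: {queue_delay_ms(buffer_bytes, rate):.1f} ms")
```

With these made-up numbers, the same buffer adds about 105 ms on the slow uplink but only about 2 ms at a gigabit, which is one reason the slow upstream on an asymmetric line tends to be where the bloat shows up first.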

What you said is akin to saying 'We can't measure the impact force of a 2-tonne car travelling at 70mph if that car is a Ferrari, because few people drive those. We need to test the impact force of a 2-tonne Ford travelling at 70mph, because they're more common'... The marque of car is absolutely irrelevant to what's being measured, and why it's being measured. In that case, the salient variables are the mass and the speed, not what sticker is on the front of it.

In our case, the test is 'How does the connection's latency, jitter and bufferbloat present when under full TCP and/or UDP load?' Where that test runs from is immaterial. Do you honestly think the Internet engineers at the IETF, Cloudflare, Red Hat, Google and others are all firing up Windows boxes because 'more people use them at home'? LOL.

TBF, I'm not singling you out here. Earlier, someone completely misinterpreted the difference between two sets of pings on an almost idle line (a speedtest is basically zero load). And that's fine. Not everyone knows much about networks, or any other subject. The trick is to recognise the things you don't know much about and (1) fix that and/or (2) not talk smack about them until you do.

If you prefer a test from Windows, give this little fella a go and post up your results. It's the Waveform bufferbloat test and it does a nice job of properly saturating the connection with packets and then making pretty graphs of the results. It'll be interesting to see what you come up with.

Great analogies, @Rainmaker. Is it any comfort that, after you've been a bufferbloat-beating advocate for a year or two more, it does get easier to hold your temper?

The new speedtest.net app (and website) are finally measuring loaded latency: https://www.ookla.com/articles/introducing-loaded-latency - the principal flaw of this (and waveform) is that they don't test up and down simultaneously, as various tools in the flent suite do. A lot of gear does do weird things in this scenario.

To explain the micro-burst nature of bufferbloat, I recently gave a talk on the doubling that happens in TCP's slow start until you get a loss, https://www.youtube.com/watch?v=TWViGcBlnm0&t=1005s, as entertainingly as I know how. Each round trip, the flow doubles its throughput until it hits the buffer and suffers a loss. However, the reaction to that overshoot cuts the flow's rate by half (TCP Reno) or by roughly a third (TCP CUBIC), so with very few flows on the link and reasonably short buffers, it's really hard to saturate the link for any sustained period or to notice bufferbloat effects, and a lot of fiber has fairly minimal buffering in the first place. Reliably detecting it needs more flows or packet captures.
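A toy per-round-trip model makes the slow-start argument concrete: the congestion window doubles each RTT until it exceeds what the path plus its buffer can hold, then a loss cuts it by a multiplicative factor. This is a deliberately simplified sketch, not a real TCP implementation: `beta=0.5` stands in for Reno and `beta=0.7` for CUBIC's decrease, recovery is modelled as Reno-style +1 packet per RTT for both (real CUBIC grows along a cubic curve), and the BDP and buffer sizes (in packets) are hypothetical.

```python
def simulate(bdp_pkts: int, buffer_pkts: int, beta: float,
             rtts: int, init_cwnd: int = 10) -> tuple[list[float], list[float]]:
    """Return (cwnd, standing_queue) per RTT for a single flow.

    Simplifying assumptions (not real TCP): a loss happens whenever cwnd
    exceeds bdp + buffer, cwnd is then multiplied by `beta`, and afterwards
    it grows by one packet per RTT.
    """
    capacity = bdp_pkts + buffer_pkts
    cwnd = float(init_cwnd)
    in_slow_start = True
    cwnds, queues = [], []
    for _ in range(rtts):
        cwnds.append(cwnd)
        # Packets in flight beyond the BDP sit in the buffer (capped at its size).
        queues.append(min(buffer_pkts, max(0.0, cwnd - bdp_pkts)))
        if cwnd > capacity:
            cwnd *= beta          # loss: multiplicative decrease
            in_slow_start = False
        elif in_slow_start:
            cwnd *= 2             # slow start: double every RTT
        else:
            cwnd += 1             # congestion avoidance: +1 packet per RTT
    return cwnds, queues


if __name__ == "__main__":
    for name, beta in [("Reno-like", 0.5), ("CUBIC-like", 0.7)]:
        cwnds, queues = simulate(bdp_pkts=100, buffer_pkts=20, beta=beta, rtts=12)
        busy = sum(1 for q in queues if q > 0)
        print(f"{name}: queue non-empty in {busy} of {len(queues)} RTTs")
```

In this toy model the Reno-like flow drops below the BDP after its single loss, so the queue drains and the link briefly runs under capacity, while the gentler CUBIC-like backoff leaves a small standing queue. Either way, a single flow in a short test shows only a brief overshoot, which is the "hard to notice" effect described above.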

My overall goal was to get induced latency and jitter below 30ms on all the technologies we have. At gigabit fiber speeds, the problems largely move to the WiFi. Asymmetric cable has major problems, and probably the worst bufferbloat offenders today are 5G/LTE, DSL and Starlink. We can get to essentially zero induced latency for most interactive traffic by leveraging fair queueing technologies, at any speed.
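Why fair queueing helps interactive traffic "at any speed" can be seen with plain deficit round robin (DRR), a simpler relative of the flow schedulers inside fq_codel and cake; this sketch omits their sparse-flow handling and AQM entirely. Each flow gets its own queue and a byte quantum per round, so a lone small packet from an interactive flow waits behind about one quantum of bulk data instead of the whole backlog. The flow names, packet sizes and 1500-byte quantum are illustrative assumptions.

```python
from collections import deque


def drr_order(flows: dict[str, deque], quantum: int) -> list[str]:
    """Deficit round robin over per-flow FIFOs of packet sizes (bytes).

    Returns the flow name of each packet in transmission order.
    Consumes (empties) the deques passed in.
    """
    deficit = {name: 0 for name in flows}
    sent: list[str] = []
    while any(flows.values()):
        for name, queue in flows.items():
            if not queue:
                continue
            deficit[name] += quantum
            # Send head-of-line packets while this flow has enough credit.
            while queue and queue[0] <= deficit[name]:
                deficit[name] -= queue.popleft()
                sent.append(name)
            if not queue:
                deficit[name] = 0  # an emptied flow does not bank credit
    return sent


if __name__ == "__main__":
    # Hypothetical backlog: a bulk upload has 20 full-size packets queued
    # when one 100-byte interactive packet (e.g. a game update) arrives.
    flows = {"bulk": deque([1500] * 20), "interactive": deque([100])}
    order = drr_order(flows, quantum=1500)
    print("DRR position of interactive packet:", order.index("interactive"))
    print("Position in a single shared FIFO would be:", 20)
```

The interactive packet goes out second instead of twenty-first, and that holds regardless of the link rate, because the wait is bounded by the number of competing flows rather than by the depth of the buffer.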

IMHO, for most people, 25/25Mbit - with good queue management - would be enough. Seeing so many other technologies showing hundreds or even thousands of ms of latency at rates below a gigabit really sucks. If it were possible to roll out fiber to everyone in the world, I'd support that; until then, other technologies with better queue management (especially on wireless) still seem desperately needed.

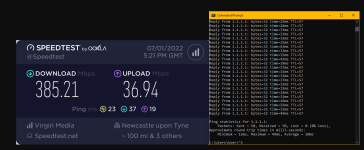

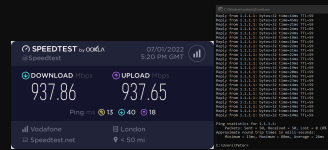

Both these results were very good. My principal kvetch is that speedtest and waveform simply do not run long enough to accurately measure bufferbloat at gig speeds.

Sometimes it's the network's ability to carry interactive loads *at all* that is worth testing, even without an induced load. We've developed a new test leveraging irtt over here ( https://forum.openwrt.org/t/cake-w-adaptive-bandwidth/108848/3238 ) to actively measure baseline and loaded latencies on a 3ms (as opposed to a 5-minute) interval. There's a plot of Starlink's behaviors over there that shows the satellite switch every 15s and the bufferbloat induced by a web page.

irtt is written in go and runs on all OSes, however this particular plotter of its output uses a ton of python and I don't know if that's portable. I'd so love to have comparable data from fiber and VM cable.
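The plotter is the heavyweight part; the core numbers such a test reports are simple to compute from raw RTT samples. The sketch below assumes you have already extracted round-trip times in milliseconds (for example from irtt's output) into two plain lists, one from an idle line and one under load; it does not parse irtt's own output format, and the sample values are made up. Jitter here is the mean absolute difference between consecutive samples, which is only one of several common definitions.

```python
from statistics import median


def summarize(idle_ms: list[float], loaded_ms: list[float]) -> dict[str, float]:
    """Baseline, loaded and induced latency plus a simple jitter figure.

    Needs at least one idle sample and at least two loaded samples.
    """
    base = median(idle_ms)      # median resists the odd outlier
    loaded = median(loaded_ms)
    # Mean absolute change between consecutive loaded samples.
    steps = [abs(b - a) for a, b in zip(loaded_ms, loaded_ms[1:])]
    jitter = sum(steps) / len(steps)
    return {
        "base_ms": base,
        "loaded_ms": loaded,
        "induced_ms": loaded - base,
        "jitter_ms": jitter,
    }


if __name__ == "__main__":
    idle = [20, 21, 19, 20, 22]      # hypothetical idle-line RTTs (ms)
    loaded = [28, 31, 27, 30, 29]    # hypothetical RTTs under load (ms)
    print(summarize(idle, loaded))
```

Induced latency (loaded minus baseline) is the figure that matters for bufferbloat, since it separates queueing delay from the fixed distance to the server.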



Drop the hub, it's crap! The sole purpose of our hub now is to act as a modem, with a TP-Link Deco M4 mesh system doing the rest. I've not had a single dropout since switching to this, and I have a lot of devices connected constantly.

On our M200 connection I get around 240/250 consistently, and I rarely get anything slower. The only off-putting thing is that I have to haggle with them constantly on renewal to get a decent price!

Thanks, have ordered - I really miss my Hyperoptic link haha. Not had to have any third party networking kit for many years, only deal with Datacentre stuff nowadays.

Hey Dave, I've been keeping up with you on Twitter, but it's nice to see you take the time to post here again. I'll admit I wasn't having the best day (personal stuff), but my overall point stands.

That was one of your better videos, and that's saying something. Worthy of posting here directly.


Once you get the bug for bloat, it's actually quick and fun to eliminate it from networks as you go. I've covered most of my friends and family by now, and they've all commented positively on how much better their Internet is. WiFi is a dark and terrible art; I go out of my way to wire in everything I possibly can, but I do run a Ruckus R720 for guests and the kids' iPads etc.

I re-ran rrul after testing out the latest IPFire with cake the other day, and the results were very favourable! Idle latency around 20ms and loaded under 30ms - definitely can't complain at that on a DOCSIS line with 'automatically' configured cake. We're getting FTTP soon, which will be a nice little upgrade. I quite like IPFire, but the devs' attitude toward WireGuard is strange (imho), and I'm missing *BSD, which I now run on all my production servers bar one.

I'd so love to have comparable data from fiber and VM cable.

Leave it with me...

Just ran the tool on a VM 1gig service. A Medium responsiveness rating isn't ideal, right?

@ChrisD, can you run it on yours, out of curiosity?

EDIT: Cleaned up the output. @Rainmaker, can we see your output too, please, as you’ve configured your router to combat bufferbloat?

```
Last login: Mon Nov 8 18:42:38 on ttys000
uvarvu@Orcus ~ % /usr/bin/networkQuality -v
==== SUMMARY ====
Upload capacity: 44.792 Mbps
Download capacity: 894.068 Mbps
Upload flows: 20
Download flows: 16
Responsiveness: Medium (942 RPM)
Base RTT: 21
Start: 08/11/2021, 18:43:27
End: 08/11/2021, 18:43:42
OS Version: Version 12.1 (Build 21C5021h)
```
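networkQuality reports responsiveness in RPM (round trips per minute), so converting it back to milliseconds per round trip makes it easier to compare with the Base RTT line. Treat this as a rough sanity check only: the RPM figure is derived from latency measured while the connection is busy, combined across several kinds of probe, so the converted value is an effective loaded round-trip time rather than a single ping. The sketch also assumes Base RTT is in milliseconds, since the output doesn't print a unit.

```python
def rpm_to_ms(rpm: float) -> float:
    """Round trips per minute -> milliseconds per round trip."""
    return 60_000 / rpm


if __name__ == "__main__":
    # Figures taken from the networkQuality outputs posted in this thread.
    for rpm, base_rtt_ms in [(942, 21), (1283, 23)]:
        loaded = rpm_to_ms(rpm)
        print(f"{rpm} RPM ~ {loaded:.1f} ms per round trip "
              f"(base RTT {base_rtt_ms} ms, ~{loaded - base_rtt_ms:.0f} ms extra)")
```

On that reading, the 942 RPM "Medium" result works out to roughly 64 ms per round trip under load, and the later 1283 RPM "High" result to roughly 47 ms.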

Finally got around to upgrading from Catalina to Monterey, so I now have access to networkQuality. Here's my result (again, please bear in mind half my upstream goes to Tor atm):

```
networkQuality -v
==== SUMMARY ====
Upload capacity: 23.947 Mbps
Download capacity: 816.964 Mbps
Upload flows: 12
Download flows: 12
Responsiveness: High (1283 RPM)
Base RTT: 23
Start: 10/07/2022, 00:25:08
End: 10/07/2022, 00:25:20
OS Version: Version 12.4 (Build 21F79)
```


TL;DR: ditch consumer-level routers and get a proper router.


Perhaps have another read: what's being discussed here is software-based, not hardware-based.

VM Business (you don't need to be a business) gave me a larger Hitron router on what looks like an identical connection to VM residential; I wonder if that's making the difference.

That axiom could be said for many other things.

Here is that test run on my 600Mb VM BB.

I currently have 600Mbps with a VM package. For the past 3-4 weeks my speeds have been capped at no more than 45Mbps in speedtests on all wired devices, including PC, PS5 and PS4. Every so often I hit 600/700Mbps, then it dips back to a capped 45Mbps again. Any ideas? Could this be due to works in the area?


Most interesting! I'm curious whether @Rainmaker can confirm it's the Hitron router giving you much better bufferbloat results.

I've just come to the end of my contract, where I was paying £79 on the Ultimate Oomph. I've put in my cancellation (which in itself was an exercise in mental torture!), so it might be prudent to try and see if I can get the Hitron router as part of any new deal.

What’s a proper router?

I'm on the same cable; all I did was unscrew my Superhub and plug in the Hitron (my phones are now plugged into the router too), but I expect they'll say no because of segmentation and support.

I had a look, and it's a new router for VM Business as of this year: https://www.ispreview.co.uk/index.p...business-uk-prep-new-hitron-chita-router.html

Actually, I have no idea why it exists alongside the superficially similar but different routers on VM residential.


One of these bad boys: https://store.ui.com/collections/unifi-network-unifi-os-consoles/products/udm-pro

Meh. It runs out of CPU, too, and it's buggy. It can be improved with cake: https://github.com/fabianishere/udm-kernel

In general, I've given up on UBNT since they laid off their California tech crew, went public, and abandoned EdgeOS.

YMMV.

Thanks for this.

I really need to build my own router :/


The unfortunate thing is that UBNT punt the kit out to YouTubers, and very, very few will give them a genuine review. As much as I like Chris Sherwood’s Crosstalk Solutions channel, he’s very much on the UniFi train, and that’s not so great. Willie Howe is much more neutral, as are the people at Lawrence Systems.

And waaaay too many people review the spec sheet rather than the real world performance.

That said, I’m still very much a fan of their access points and the controller is getting better, albeit very slowly. Only the routing lets it down.

I am acquainted with some of the people in the Kraków development team and it doesn’t sound like an easy gig. Big targets, short timescales and little/no sympathy for failure from the community.


To be honest, I can see why the Ubiquiti community is like that; there have been years of products pushed out that just don't do what the spec sheet says they can. Sure, it's to be expected by now, but someone new to the company's product ranges doesn't know that's how they operate.

Like everywhere, there are people who do no research into what they are buying and then get upset, but for years it was possible to buy L3 switches that wouldn't do L3.

They'd win a lot more people over if they bothered to explain what they were doing with their non-UniFi product range and published a vague roadmap for when they expect certain features to make it into releases. It doesn't help that some people within the community are nearly as toxic as the worst of the open source community and act as if it's the fault of the person asking why something on the datasheet doesn't work.
