The best option is not to buy either. Saying the pricing is poor and then buying a 4070 Ti has just told Nvidia you're happy with current pricing, so expect more of the same going forward.

Why would you be loyal to a brand for a computer component? It's not a status symbol like an iPhone; just get the product that offers the best value to you. For me it was the 30 series a couple of years ago, because AMD weren't selling their cards at MSRP in the UK, so Nvidia was massively better value. This gen it was much closer, but I ended up with a 4070 Ti because it simply offered superior overall value for money to me compared to the 7900 XT and XTX. If you want to vote with your wallet then the only alternative at that performance level is AMD, who are just as bad if not worse. They were given a massive open goal with Nvidia overpricing their GPUs this gen, and they have lower manufacturing costs from the chiplet design, but the only thing they're interested in competing on is profit margins. They're content to offer slightly better raster price/performance but inferior overall price/performance once you factor in other things like DLSS, power efficiency, etc.


When will GPU prices go down?

- Thread starter sys6em


Are you allowed to buy a used Nvidia GPU?

Associate

- Joined: 2 Sep 2013

- Posts: 2,114

I think the main point of contention for buying a used GPU this generation (the last few years) is that there was the mining craze too. Prior to that, owners took a lot of care over their GPUs in order to sell them on or make sure they lived much longer, so most of the time the GPU was good for a second-hand sale and, as a potential buyer, you felt safer going for it.

But this generation was tainted by some (not all) terrible owners of mining rigs, who ran the GPUs ragged and in unknown (sometimes terrible) conditions, resulting in GPU hardware that is not fit for service. And quite frankly there is no way to tell from a visual inspection whether a card was owned by someone who looked after their mining rig GPUs or by someone who did not. Once a sale is done, there's little to get back after the fact (and some issues are not apparent until much later). So unlike many years prior, when grabbing a GPU second hand was viable, right now it's a crapshoot and you could easily end up losing, unless the sale price is a steal compared to grabbing it new from retail.


Sure, but that still does not answer my question.

Lucky for me the 3080 Ti I got was purchased after the mining craze, and if there was any mining done on it, there would not have been too much, as it was only 8-9 months old when I got it as I recall.

Plus it was from a reputable member here who I know would help with warranty if ever needed. Not like it is hard to help with warranty, so unless one is a total dick it is a non issue. Now if you are buying on eBay, Facebook and the like, that is different.

I did put off buying one for a while to see what happened with pricing. The 4070 Ti seemed to be consistently selling well at the £800 price point, so from my point of view people had already voted with their wallets. Even if you buy a used GPU you'd still be indirectly supporting the current pricing of new GPUs: it increases demand for used GPUs, which pushes prices up, and if used GPU prices are high like they are now then it makes more sense for someone else to buy new. It's easy to say the best option is not to buy either if you already have a capable gaming GPU.

The card has only been out a couple of months and Nvidia are not going to cut pricing after that long; the 4080 proves that, despite selling like sand in a desert. Forcing a price drop means holding out until Nvidia blinks first.

Nothing wrong with going used. I got a 1070 Ti for nearly half the price a new 2060 was when it launched; coming from a 660 Ti it was a huge upgrade.

If you have a low-end card or don't have a card at all then pretty much anything is a huge upgrade, which is even more reason not to pay over the odds.

This was your advice to people back in December, and good advice, so it's a shame you caved so soon.

We do collectively have a say on the pricing; everyone votes with their wallet. If enough people vote to reject overpriced cards then the prices will come down; if enough people vote to buy them then prices stay overpriced.

The problem is the price doesn't currently make much sense compared to just buying a new card with a warranty and better power efficiency.

It seemed clear people had already voted on the 4070 Ti and prices won't come down much, but I hope I'm proved wrong.

Associate

- Joined: 14 Aug 2017

- Posts: 1,196


We've been seeing deals on the 4080 at around £925 and 4070Ti at about £650 here in Australia, I imagine it can only be a matter of time before the UK sees some reductions... unless y'all are still buying them at stupid prices of course! Apparently we aren't...

Yep, just checked some AUS sites and it seems the 4070 Ti is regularly available for £650, so maybe it's just an issue with UK stores.

Soldato

- Joined: 31 Jan 2022

- Posts: 3,325

- Location: UK

NVIDIA do not really have us in a closed market though, do they? AMD and Intel have products that compete up to RTX 4080 levels. Their only halo product is the RTX 4090, and sales have dropped off the cliff, now that early adopters are no longer buying in droves (price has dropped on RTX 4090 since its launch).

The price of the RTX 4080 has also dropped slightly since launch.

Roll on the RTX 5090. Hopefully we get the AMD RX 8000 series at the same time and see if AMD's chiplet tech matures to a level which properly competes at the high end.

Also, AMD produce so many chips for Sony and Microsoft, while NVIDIA only have the low-powered Nintendo Switch to their name, that at some point NVIDIA's market dominance must erode.

Myself and others have for so long bought NVIDIA cards with brand loyalty, but I think we should now step away and vote with our wallets and leave the "Apple" of GPU makers behind and see if we can reduce the Jensen retirement fund a bit...

I don't really know. But it seems to me that the dedicated NVIDIA buyer is very reluctant to go the AMD way and need encouragement that AMD are just scared to give. AMD need to stop the copy-cat price setting of their products.

Intel really aren't in the game yet.

The 4080 has dropped in price, but only a little. It still needs to come down a lot more if NVIDIA are serious about selling it.

But then really I am not sure what NVIDIA's plan is. Are they being greedy, or did they foresee the downturn and decide they had to charge more to keep things trundling along? Are these prices entirely aimed at miners who will pay more than gamers? Or maybe they have a longer-term strategy to break scalpers? We really don't know, and because we don't know it's pretty much impossible to determine what they will do next.

When it comes to stepping away, people talk about this, but it's like Climate Change - everyone wants everyone else to change so they can continue doing what they have always done. But, I do think you are right, we have to really change our expectations. It's hard to do that, we are creatures of habit.


Does that include 20% VAT?

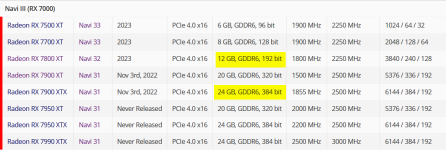

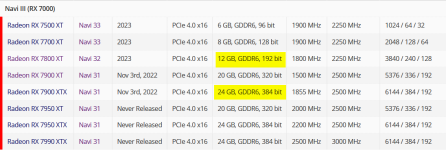

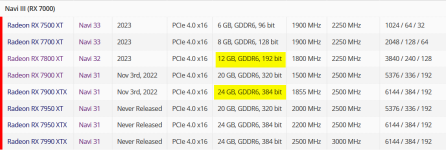

I've just seen the 6950XT drop under 700€ in Italy; something tells me AMD is about to clear stock for a new release.

Is anyone willing to bet on a 7800XT with similar performance and 16GB RAM?

The only Navi 32 rumours still had it as a 192-bit card, didn't they?

That's what TPU have in their placeholder in their database

TechPowerUp

Graphics card and GPU database with specifications for products launched in recent years. Includes clocks, photos, and technical details.

If it is 192-bit it can be either 12GB or 24GB.
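The 12GB-or-24GB figure follows from how GDDR6 is wired up. Here is a rough sketch, assuming each GDDR6 chip has a 32-bit interface and holds 2GB (16Gb), and that a "clamshell" layout puts two chips on each 32-bit channel to double capacity. The function name and defaults are made up for illustration and are not from any AMD or TPU source:

```python
def vram_options_gb(bus_width_bits, chip_width_bits=32, chip_capacity_gb=2):
    """Return (normal, clamshell) VRAM capacities in GB for a memory bus width.

    Assumes one chip per 32-bit channel in the normal layout and two chips
    per channel in clamshell mode; both defaults are assumptions.
    """
    if bus_width_bits % chip_width_bits != 0:
        raise ValueError("bus width must be a multiple of the chip interface width")
    chips = bus_width_bits // chip_width_bits
    normal = chips * chip_capacity_gb
    clamshell = 2 * normal
    return normal, clamshell


# Bus widths mentioned in this thread
for width in (128, 160, 192, 256, 320, 384):
    normal, clamshell = vram_options_gb(width)
    print(f"{width}-bit: {normal}GB or {clamshell}GB")
```

Under those assumptions a 192-bit bus gives 12GB or 24GB, and a 256-bit bus gives 16GB or 32GB, which is why a 16GB card implies a 256-bit bus.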

What I would question, is such a cut-down part worth going multi-chip for?

A 256-bit max would make far more sense.

However, since Navi31 was the 6900XT/6800XT and 6800 replacement, then if Navi32 is only the 6700 replacement then 12GB might make sense.

Leaves a huge gap between them all though.

Navi32 reaching 6900XT performance while using 100W isn't that bad, but 12GB is a downgrade. Price had better be good then (as if!)

Associate

- Joined: 14 Aug 2017

- Posts: 1,196


10% GST included in those, so do a quick ($PRICE/11)*12 to get the UK price equivalent with VAT - comes to about 1010 quid for the 4080, 710 for the 4070Ti
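The ($PRICE/11)*12 shortcut is just "strip the 10% GST, then add 20% VAT". A small sketch of that arithmetic, using the £925 and £650 figures quoted above; the function name is invented for illustration, and it ignores everything other than the tax difference (exchange-rate movement, shipping, retailer margins):

```python
def gst_price_to_vat_price(price_inc_gst, gst_rate=0.10, vat_rate=0.20):
    """Convert a price that includes Australian GST into the equivalent
    price including UK VAT, assuming the pre-tax price is unchanged."""
    ex_tax = price_inc_gst / (1 + gst_rate)  # dividing by 1.1 is the "/11 * 10" step
    return ex_tax * (1 + vat_rate)           # multiplying by 1.2 makes it "/11 * 12" overall


# Australian deal prices from the thread, already converted to pounds
for name, price in (("4080", 925), ("4070 Ti", 650)):
    print(f"{name}: about £{gst_price_to_vat_price(price):.0f} with UK VAT")
```

That lands within a pound of the "about 1010" and "710" figures quoted above.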


Still a decent chunk cheaper. Rip off Britain as usual.

Such a huge gap IMHO makes no sense.


There is plenty of room for a 256 bit, 16GB part so what they cite as 7800 is the 7700, the 7700 is the 7800 and so on.

Caporegime

- Joined: 9 Nov 2009

- Posts: 25,336

- Location: Planet Earth

I can't see how Navi 33 can replace Navi 22 with far fewer shaders and no massive increase in clock speeds, because Navi 33 is using an improved 7NM node:


TSMC 6NM is essentially a higher density 7NM process node:

AMD basically die-shrunk Navi 23, added some RDNA3.0 improvements and came up with Navi 33. Also, the laptop RX6600M (Navi 23) is being directly replaced by the RX7600M (Navi 33):

AMD Radeon RX 7600M GPU - Benchmarks and Specs

Specifications and benchmarks of the AMD Radeon RX 7600M GPU.

www.notebookcheck.net


The shader count hasn't changed! That means if AMD rebranded a Navi 33 dGPU as the RX7700XT it would be a downgrade in core speed and VRAM.

I think this is the most likely line-up:

1.) RX7800XT - Navi 31 with a 256-bit memory bus and 16GB of VRAM. Might be a slightly cut-down version of the core in the RX7900XT, but with less VRAM. But it could be that AMD won't bother, and drops the price of the RX7900XT to fight the RTX4070TI.

2.) RX7800 - Navi 32 with a 192-bit memory bus and 12GB/24GB of VRAM. Would have more shaders than an RX6800. If it is about RX6800XT level, it might be the competitor to the RTX4070 12GB.

3.) RX7700XT - Navi 32 with a 192-bit memory bus and 12GB of VRAM. Cut-down core, but more shaders than an RX6700XT. 3072 shaders? Would make the 4096-shader RX7800 a big upgrade. Might push it up to RX6800 level.

4.) RX7700 - Navi 32 with a 160-bit memory bus and 10GB of VRAM. Probably quicker than an RX6700XT. Same number of shaders as an RX6700XT?

5.) RX7600XT - Navi 33 and 8GB of VRAM.

6.) RX7600 - Navi 33 and 8GB of VRAM.

That range would technically make no SKU a downgrade in shaders or VRAM amounts. But the bigger issue will be any price rises. The RX6600XT was a decent upgrade over the RX5600XT, i.e. more shaders and more VRAM, but cost almost as much as the previous-generation RX5700/RX5700XT 8GB.

Let's say AMD decides not to be stingy with VRAM compared to the last generation. Then it could be:

1.) RX7800XT - Navi 32 with a 192-bit memory bus and 24GB of VRAM.

2.) RX7800 - Navi 32 with a 160-bit bus and 20GB of VRAM.

3.) RX7700XT - Navi 32 with a 128-bit bus and 16GB of VRAM.

4.) RX7600XT - Navi 33 and 16GB of VRAM.

5.) RX7600 - Navi 33 and 8GB of VRAM.

I'm not sure about the second scenario, as Navi 32 only has 4096 shaders, so I'm not sure how it would beat the RX6800XT with its 4608 shaders by that much. If it can, then we might see the second scenario.

If AMD tried to make the RX7700XT based on Navi 33 with 16GB of VRAM, it could only happen if Navi 33 clocks really high. But the question is whether TSMC 6NM, which is density-optimised 7NM, is going to clock much higher.

Sadly, if AMD was actually bothered about competing even in a minimal way, the line-up would have been:

1.) RX7900XT 24GB - Navi 31 (384-bit memory controller). Competes with RTX4080 16GB. £900~£1000.

2.) RX7800XT 20GB - Navi 31 (320-bit memory controller). Competes with RTX4070TI 12GB. £700~£750.

3.) RX7800 16GB - Navi 31 (256-bit memory controller). Competes with RTX4070 12GB. £550~£600.

4.) RX7700XT 12GB/24GB - Navi 32 (192-bit memory controller). Competes with RTX4060TI 8GB. £450.

5.) RX7700 10GB/20GB - Navi 32 (160-bit memory controller). Competes with RTX4060 8GB. £350~£400.

6.) RX7600XT 8GB/16GB - Navi 33 (128-bit memory controller). Competes with RTX4050 6GB. £250~£300.

7.) RX7600 8GB - Navi 33 (128-bit memory controller). £200~£250.

Instead AMD has made Nvidia look competitive with their own stupid pricing of the RX7900XT. They still could have increased pricing generation on generation and probably gotten away with it!


Associate

- Joined: 13 Jun 2012

- Posts: 379

I'm not sure I buy the idea that Navi 32 is a native 192-bit bus:

It doesn't allow for good product positioning between Navi 33 (128-bit) and Navi 31 (384-bit).

It made sense in the Navi 2x era, as the product stack topped out at 256-bit for Navi 21.

My money is on a 16GB 256-bit Navi 32, with an affordable cut-down product using 12GB (192-bit).


Caporegime

- Joined: 9 Nov 2009

- Posts: 25,336

- Location: Planet Earth


It could go that way, but the problem is that it tops out at 4096 shaders according to the rumours, unless they are wrong and it has far more shaders. Adding more memory controllers would be easy, as Navi 32 is still a chiplet design AFAIK.

But I can't see how Navi 32, even running at higher clock speeds, could beat a 4608-shader RX6800XT by a decent amount. Even if it ran at 3GHz consistently, i.e. about 35% higher than an RX6800XT, it would barely be 20% to 25% faster overall (at best). In that sense it would make more sense to release a 4608-shader version of Navi 32 with a 256-bit memory controller.
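The 20% figure can be checked with a back-of-the-envelope estimate. This is only a sketch, assuming performance scales linearly with shader count multiplied by clock speed and ignoring any architectural gains or memory bandwidth limits. The function name is made up for illustration:

```python
def relative_throughput(shaders_new, shaders_old, clock_ratio):
    """Naive speed estimate: (shader ratio) x (clock ratio).

    Assumes perfect linear scaling, which real GPUs rarely achieve.
    """
    return (shaders_new / shaders_old) * clock_ratio


# Rumoured full Navi 32 (4096 shaders) at ~35% higher clocks
# versus an RX6800XT (4608 shaders)
ratio = relative_throughput(4096, 4608, 1.35)
print(f"Estimated speed-up: {(ratio - 1) * 100:.0f}%")
```

With 8/9 of the shaders and 1.35 times the clock, the naive ratio comes out at 1.2, i.e. roughly 20% faster, which matches the low end of the range above.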

The RX6800 only had 3840 shaders, so a fully enabled Navi 32 would have more shaders and a higher clock speed. So it is quite possible that a 256-bit memory bus is attached to a Navi 32-based RX7800. If we went that way:

1.) RX7900XTX 24GB - Navi 31 (384-bit memory controller).

2.) RX7900XT 20GB - Navi 31 (320-bit memory controller).

3.) RX7800XT 16GB - Navi 31 (256-bit memory controller).

4.) RX7800 16GB - Navi 32 (256-bit memory controller).

5.) RX7700XT 12GB - Navi 32 (192-bit memory controller).

6.) RX7700 10GB - Navi 32 (160-bit memory controller).

7.) RX7600XT 8GB - Navi 33.

8.) RX7600 8GB - Navi 33.

If the RX7800XT ends up being a 4096-shader Navi 32, it will be a small generational increase. It could happen, but I hope it doesn't, because it would be as bad as what Nvidia is doing now.
