My god, the amount of pro-AMD crap in this thread about better colours ("it just pops, where Nvidia is washed out") is just unreal. I included a link in my earlier post that busts exactly this myth. Funnily enough, I posted the same link in the other thread and it was ignored there too.

There is barely any difference when Nvidia's RGB or YCbCr output is set to full. I own both.

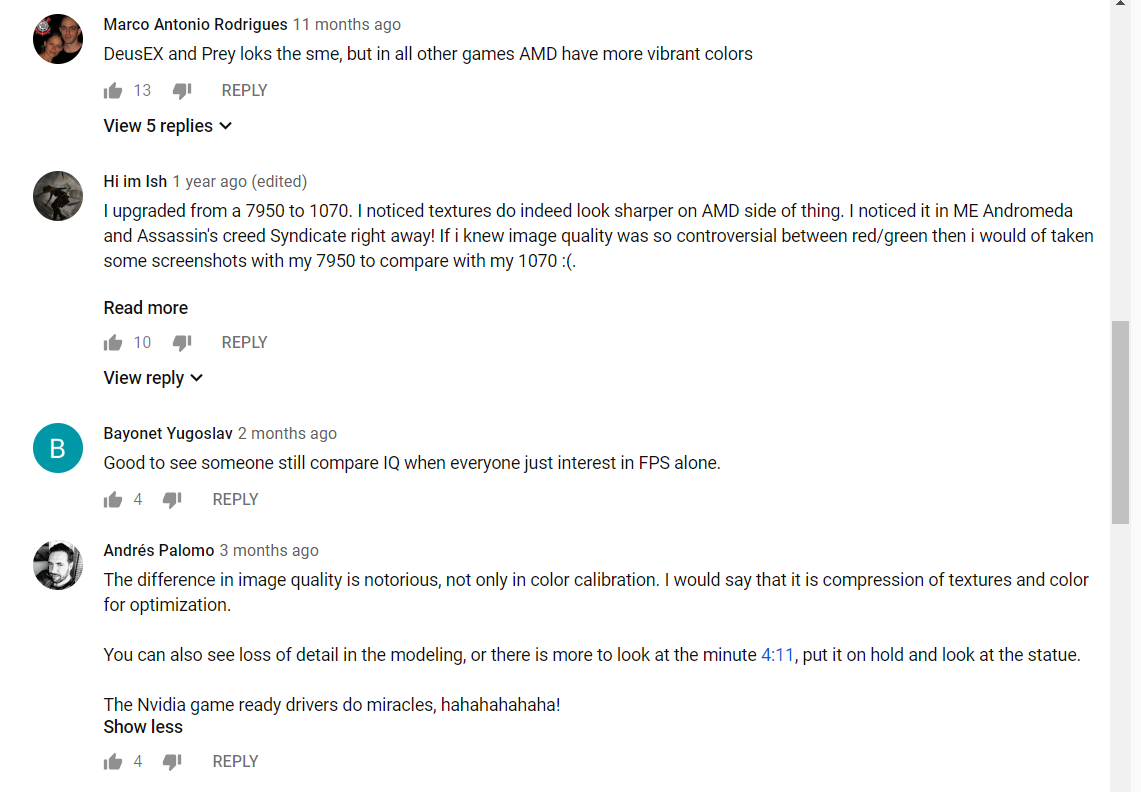

Now show me a difference in rendering image quality in modern games at the exact same camera angles, and sure, I'll gladly comment, contribute and test.
