AMD Vs. Nvidia Image Quality - Old man yells at cloud

- Thread starter shankly1985

I read all kinds of comments. Look, people know it best:

Stop reading other people's comments and start thinking for yourself.

My Panasonic 4K TV has Studio Master Colours, processed by a Quad Core Pro.

In the menu, I have selected the following:

*Noise Reduction is set to Max

*MPEG Remaster is set to Max

*Resolution Remaster is set to Max

*Intelligent Frame Creation is set to Max

Now, explain what these functions are for?!

This just shows how clueless you are.

If you want the best picture quality from an HD source, you should turn off all that stuff.

Noise Reduction: a throwback to the days of standard-definition TV.

MPEG Remaster: reduces mosquito noise artifacts. Again, more useful for SD TV.

Resolution Remaster: a very subtle sharpening filter.

Intelligent Frame Creation: reduces motion blur and judder by adding in black frames to smooth the frame rate.

None of these do any of the things that you implied earlier in the thread. They don't add detail that isn't there or replace lost pixels.

Quite telling that if you Google 'AMD caught cheating' you get 12.6 million results, whereas 'NVidia caught cheating' only returns 1.7 million results. Hmmm, interesting.

Simples, that's proof that there are more Nvidia fanboys spouting nonsense than there are AMD fanboys.

Nah, just NVidia have more money to silence people better.

4K will say it's a Google conspiracy theory to make Nvidia look good

Go on then, I will play...

https://techreport.com/review/3089/how-atis-drivers-optimize-quake-iii/

Most of you are probably familiar by now with the controversy surrounding the current drivers for ATI’s Radeon 8500 card. It’s become quite clear, thanks to this article at the HardOCP, that ATI is “optimizing” for better performance in Quake III Arena and, most importantly, for the Quake III timedemo benchmarks that hardware review sites like us use to evaluate 3D cards. Kyle Bennett at the HardOCP found that replacing every instance of “quake” with “quack” in the Quake III executable changed the Radeon 8500’s performance in the game substantially.

It’s not hard to see why the Radeon 8500 produces better benchmarks with this driver “issue” doing its thing. The amount of detailed texture data the card has to throw around is much lower.

How the cheat works

The big question has been this: How was ATI able to produce textures that looked a little worse than the standard “high quality” textures, but in close-up detail screenshots, still looked better than the next notch down the slider?

The answer: They are futzing with the mip map level of detail settings in the card’s driver whenever the Quake III executable is running. Mip maps—lower resolution versions of textures used to avoid texture shimmer when textured objects are far away—are everywhere; they’re the product of good ol’ bilinear filtering. ATI is simply playing with them. When the quake3.exe executable is detected, the Radeon 8500 drivers radically alter the card’s mip map level of detail settings.
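To make the mip map point concrete, here is a rough sketch of how a level-of-detail (LOD) bias changes which mip level gets sampled. It assumes the textbook model, LOD = log2(texels covered per screen pixel) + bias, with simple nearest-mip selection; real hardware derives the footprint from screen-space derivatives and usually blends between levels, and this is not ATI's driver logic. The function names are hypothetical.

```python
import math


def mip_level(texels_per_pixel: float, lod_bias: float, num_levels: int) -> int:
    """Choose a mip level using a simplified nearest-mip model (an assumption).

    texels_per_pixel: base-level texels covered by one screen pixel along its
    longer axis; values above 1 mean the texture is being minified.
    lod_bias: offset added to the computed LOD; positive values push sampling
    towards smaller, blurrier mip levels (less texture data to fetch).
    num_levels: number of levels in the mip chain (level 0 = full resolution).
    """
    lod = math.log2(max(texels_per_pixel, 1e-9)) + lod_bias
    # Clamp to the levels that actually exist in the mip chain.
    lod = min(max(lod, 0.0), float(num_levels - 1))
    # Round half up to the nearest level.
    return int(lod + 0.5)


def level_size(base_size: int, level: int) -> int:
    """Edge length in texels of a square mip level (each level halves it)."""
    return max(1, base_size >> level)


if __name__ == "__main__":
    # A 512x512 texture (10 mip levels) seen where one pixel spans 4 texels.
    for bias in (0.0, 1.0):
        lvl = mip_level(4.0, bias, num_levels=10)
        print(f"bias={bias}: level {lvl}, {level_size(512, lvl)} texels wide")
```

With a bias of 1 the same surface is sampled from a level holding a quarter of the texels, which is the kind of trade-off the article describes: less texture data to move around, at the cost of blurrier textures.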

Conclusions

As you can see, mip mapping is usually affected by texture quality settings, but only a little bit. ATI’s hyper-aggressive “optimization” of mip maps goes well beyond that. Also, quake3.exe shows more aggressive mip mapping than quaff3.exe with at least three of Quake III’s four texture quality settings: high, medium, and low.

(It’s also worth noting that the Radeon 8500’s mip maps are bounded by two lines that intersect at the middle of the screen. The GeForce3’s mip map boundaries come in a smooth arc determined by their distance from the user’s point of view. But I’m getting ahead of myself.)

I find it very difficult to believe that what ATI was doing here wasn’t 100% intentional. Judge for yourself, but personally, I find the evidence mighty compelling.

I remember this. It was a big thing back then, and ATI were very naughty, cheating a benchmark with forced lowered settings even when you chose "highest". Now, I don't really care and it's so old, but you are digging up crap from years ago and, in truth, you are quoting "Jo Bloggs" and making him right. WTF dude? I mean, at least use a tech site for your miserable rantings about cheating lol.

18-year-old tech discussion though, how is the point not absolutely relevant?

None of it mattered a year later because of the 9700 Pro. I upgraded to the 9700 Pro from the GF3 Ti 500 back then, or some time around then. Back when cards were 'sensibly' priced.

True that, and I just couldn't resist.

Then you had the nVidia FX series (5xxx cards), which lacked precision in many shaders, resulting in colour banding, etc. Heh, and they rendered some effects at 1/4 resolution, like the detail on the water in Far Cry and Halo.
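A toy illustration of why reduced precision shows up as banding: quantising a smooth ramp to fewer bits leaves fewer distinct shades, so a gradient turns into visible steps. The bit depths below are illustrative fixed-point values chosen for the sketch, not a model of how the FX series actually executed shaders, and the helper names are hypothetical.

```python
def quantize(x: float, bits: int) -> float:
    """Round x in [0, 1] to the nearest value representable with `bits` bits of fixed-point precision."""
    steps = (1 << bits) - 1
    return round(x * steps) / steps


def distinct_shades(samples: int, bits: int) -> int:
    """Count the distinct shades left when a smooth 0..1 ramp is quantised."""
    ramp = [i / (samples - 1) for i in range(samples)]
    return len({quantize(v, bits) for v in ramp})


if __name__ == "__main__":
    # A 1024-pixel-wide gradient: fewer bits means wider, more visible bands.
    for bits in (4, 6, 8):
        shades = distinct_shades(1024, bits)
        print(f"{bits} bits: {shades} shades, bands ~{1024 / shades:.0f} px wide")
```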

I nearly always had ATI. ATI/AMD always offered better value back then. They lost the plot around the time the Fury X came out.

The only time I had an ATI card was during the GeForce FX range.

Oh god, I remember that like night and day. I went and got myself an ATi 8500 from the computer fair at Edgbaston Cricket Ground and was all chuffed, then started reading all that a few days later.

People keep denying it, but it's there.

Several people have posted links that likely show why there is a difference (for instance James Miller), and I've also posted plenty of evidence that people seeing a significant difference are almost certainly doing so because of user error and/or not comparing like for like, intentionally or unintentionally.

I'll repeat again that several big-name tech channels, etc. have actually done side-by-side tests using professional equipment and struggled to find anything other than very, very minor differences. If there were as big a difference as some here are saying, channels like Gamers Nexus, Hardware Unboxed, etc. would be all over it. It would be a big story that got them a lot of attention, so there's no way, with all their experience, they'd have passed it by.

As I've said before, from building many, many systems I've always considered AMD to have a slight advantage in perceived colour vibrancy, but I've never seen a dramatic difference.

Every time someone has posted images showing that supposed difference, in this thread or elsewhere on other forums, etc., I've been able to conclusively debunk it, so I'm happy to take any other evidence into account.

It's that colour vibrancy you need to watch out for. AMD are not without their faults: the Oculus Rift thingy made me update my drivers the other day, and it loaded this new driver thing with a whole new set of features without properly notifying me. That is properly out of order, that is.

I maintain that I prefer the AMD image; for whatever reason, to me it just looks better. TBH I genuinely couldn't care less if it's a thing or not; my eyes tell me what I like. There is absolutely no way I would have gone to the lengths others have to "prove" anything to anybody, though. I like the AMD presentation and visual fidelity, but it's a plus rather than a must-have IMO.

Edit: Also post 8000

I guarantee you 99% of those posts are complete junk.

Just drop it, mate.

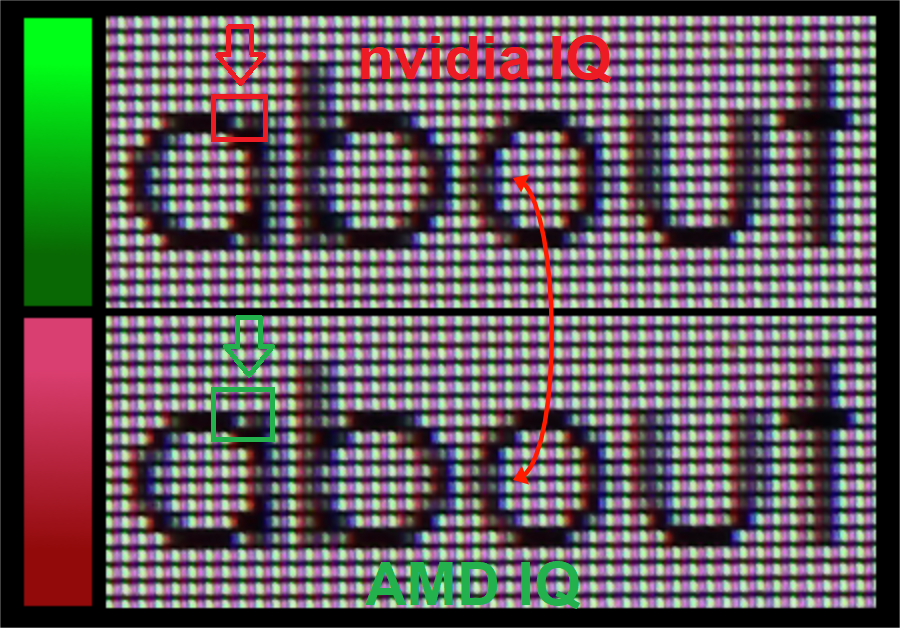

Right, having another look at this. Below is a comparison picture between my AMD and nVidia cards.

Source is a Word template document. Standard black text on white background. TV is a Sony 43" 4k effort. Nothing changed between the shots ... not even the mouse was moved.

Photos and images were done by the following method:

- Canon 80D with a 50mm f/1.4 lens, sat on a tripod in front of the screen (1/50 s, f/9.0, ISO 400). Pictures saved as RAW.

- Autofocused first, then switched to manual focus so that the focal point wouldn't change between shots.

- Shutter on timer so my hands weren't touching the camera at the time of the shot.

- Picture taken from the AMD source, HDMI cable swapped to the nVidia GPU, picture then taken from the nVidia source.

- Time difference between shots: 15 seconds on an overcast day, so ambient light was unchanged between shots too.

- Image files taken into Canon DPP.

- RAW files exported with no adjustments into 32-bit TIFF.

- TIFFs overlaid in Affinity Photo to ensure they matched up OK (they were 1 pixel out between the RAW files).

- Same section cropped and copied into the photo below.
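The alignment step (lining the two TIFFs up to within a pixel) can also be done numerically. A minimal brute-force sketch, assuming both shots have already been converted to same-sized greyscale images held as nested Python lists; `mean_abs_diff` and `best_shift` are hypothetical helper names, only whole-pixel shifts are tried, and the default search range of 2 pixels is an assumption that covers the 1-pixel offset mentioned above.

```python
from typing import List, Tuple

Image = List[List[float]]  # greyscale, row-major, values 0..1


def mean_abs_diff(a: Image, b: Image, dx: int, dy: int) -> float:
    """Mean |a[y][x] - b[y + dy][x + dx]| over the region where both images overlap."""
    total, count = 0.0, 0
    for y in range(len(a)):
        yb = y + dy
        if not 0 <= yb < len(b):
            continue
        for x in range(len(a[0])):
            xb = x + dx
            if 0 <= xb < len(b[0]):
                total += abs(a[y][x] - b[yb][xb])
                count += 1
    return total / count if count else float("inf")


def best_shift(a: Image, b: Image, max_shift: int = 2) -> Tuple[int, int]:
    """Try every whole-pixel (dx, dy) offset and keep the one that lines b up with a best."""
    candidates = [
        (dx, dy)
        for dy in range(-max_shift, max_shift + 1)
        for dx in range(-max_shift, max_shift + 1)
    ]
    return min(candidates, key=lambda s: mean_abs_diff(a, b, *s))
```

Once `best_shift` returns the offset, `mean_abs_diff` at that offset gives a single number for how different the two captures are, which is easier to compare across setups than eyeballing an overlay.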

Picture as below: nVidia on top, AMD below. Picture is at 100% zoom; there have been no image size adjustments to get to this point.

Is there a difference? Yes, there is.

Look at the 9 o'clock area on the insides of the letters, as arrowed below (pic zoomed to 200% of original):

You can see that the AMD source makes the leading edge of the transition from black to white a bit sharper, and that this is consistent across most of the leading edges.
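One way to put a number on "a bit sharper" is the 10-90% rise distance: sample a row of pixels across a letter edge (for example at the 9 o'clock position) from each aligned TIFF, and measure how many pixels the transition takes to climb from 10% to 90% of the way between the darkest and lightest values. A sketch, assuming the row has already been read into a list of greyscale values and contains a single edge; the function names are hypothetical.

```python
from typing import Sequence


def crossing(profile: Sequence[float], level: float) -> float:
    """Sub-pixel position where a rising profile first reaches `level` (linear interpolation)."""
    for i in range(1, len(profile)):
        lo, hi = profile[i - 1], profile[i]
        if lo < level <= hi:
            return (i - 1) + (level - lo) / (hi - lo)
    raise ValueError("profile never reaches level")


def rise_width(profile: Sequence[float]) -> float:
    """10-90% rise distance in pixels; a smaller value means a crisper edge."""
    p = list(profile)
    if p[0] > p[-1]:  # light-to-dark edge: flip it so it rises
        p.reverse()
    lo, hi = min(p), max(p)
    if hi == lo:
        raise ValueError("flat profile has no edge")
    norm = [(v - lo) / (hi - lo) for v in p]  # rescale to 0..1
    return crossing(norm, 0.9) - crossing(norm, 0.1)
```

A consistently smaller value for the AMD capture than for the nVidia one at the same spots would back up what the eye is seeing.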

Another area would be the vertical sections of the letter 'u', where nVidia's letter seems ever so slightly heavier than the AMD one.

Like I say, it's very, very subtle, but these little differences do seem to make an impression when you use the screen as a whole. The AMD is just that little bit crisper and sharper with text.

Edit:

Coming at this fresh again today, I still notice the difference in text, even just browsing the BBC website. The AMD is a cleaner-feeling image. It's bugging me now, as most of my use of the computer is text-based, and it's making me wonder whether to change to an AMD card (even though I may lose some performance in games, which I rarely play).

Clearly visible difference in the image crispness - where AMD offers detail, nvidia offers smearing.

This is what they are writing about:

"With the microsoft basic drivers text looked sharper but as soon as i installed the nvidia drivers, it would look like either a filter was applied or something was up with the subpixel order or something."

https://www.reddit.com/r/Amd/comments/e8co6p/my_experience_with_the_5700xt_from_the_titan_x/