I mean, how can they miss that the Ampere-based CPUs have large monolithic dies? AFAIK, they don't appear to be MCMs, and the package is the biggest ever made for a server CPU.

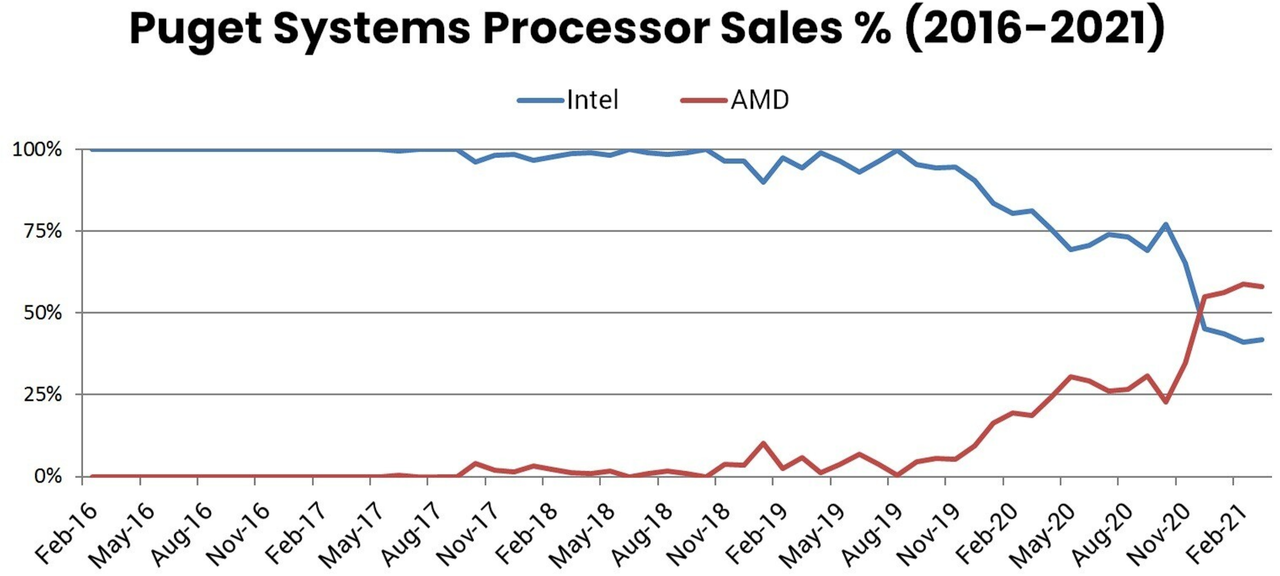

AMD are using small chiplets and have the I/O die made on lagging nodes, which massively reduces the risk. This is partly why Intel has so many problems. That said, we have seen that going to chiplets means you need to invest a lot in keeping I/O power down, which monolithic designs have less of an issue with. But then making bigger and bigger chips is a risk too.

Plus, existing customers can probably use drop-in upgrades to their existing infrastructure (AMD is famous for doing this: IIRC, even the Bulldozer-based HPC CPUs could drop into systems built for the Phenom II-based ones). Intel might have problems now, but they have a ton of experience in packaging too, i.e. like AMD, they are probably going to use different nodes for different parts of the same CPU (they are, after all, doing their own chiplets).

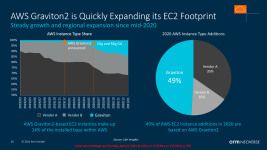

There is no indication that Ampere's CPUs, either current ones or those in the immediate future, are going that way. So they are going to be huge dies on a cutting-edge process node. That is the issue with many of these ARM-based designs: they are very dependent on being on the best nodes.

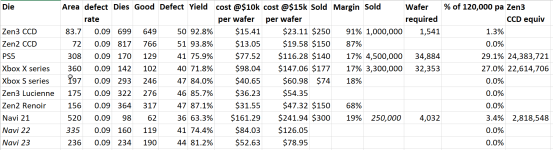

Unlike Cerebras' Wafer Scale Engine, which is designed to get around yield problems through huge redundancy, what are the yields on these kinds of CPUs? It's all fine and dandy showing the top models, but not if they end up having yield or volume issues. I would say AMD has a better chance of making a top-tier Epyc, and they are pricing stuff the way they are because they can.

Plus, Ampere might even be paying more per 7nm or 5nm wafer than AMD. So it makes me wonder how much of this pricing is because they are accepting lower margins and hoping to gain share before prices go up. Is this level of pricing sustainable for them?
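To put rough numbers on the yield and wafer-cost questions above, here is a minimal sketch using the textbook Poisson yield model (Y = e^(-A·D0)) and the usual gross-dies-per-wafer approximation. Every input is a hypothetical placeholder: the $10,000 wafer price, the defect density of 0.1 defects/cm², and the 400 mm² "big monolithic die" are assumptions for illustration, not Ampere's or AMD's real figures (the 80 mm² case is roughly the size of a Zen 3 CCD). It also ignores things that matter in practice, such as the separate I/O die and packaging costs on the chiplet side, and selling partly defective dies as lower SKUs.

```python
import math

# All inputs below are hypothetical placeholders, not real foundry or vendor figures.
WAFER_COST_USD = 10_000.0   # assumed price of one leading-edge 300 mm wafer
DEFECTS_PER_CM2 = 0.1       # assumed defect density D0


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Gross dies per wafer: wafer area / die area, minus an edge-loss term."""
    d = wafer_diameter_mm
    gross = (math.pi * (d / 2) ** 2 / die_area_mm2
             - math.pi * d / math.sqrt(2 * die_area_mm2))
    return max(int(gross), 0)


def poisson_yield(die_area_mm2: float, d0_per_cm2: float) -> float:
    """Poisson yield model Y = exp(-A * D0), with A converted from mm^2 to cm^2."""
    return math.exp(-(die_area_mm2 / 100.0) * d0_per_cm2)


def cost_per_good_die(die_area_mm2: float, wafer_cost: float, d0_per_cm2: float) -> float:
    """Wafer cost spread over the dies expected to come out defect-free."""
    good_dies = dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, d0_per_cm2)
    return wafer_cost / good_dies


if __name__ == "__main__":
    big = cost_per_good_die(400.0, WAFER_COST_USD, DEFECTS_PER_CM2)   # hypothetical monolithic die
    small = cost_per_good_die(80.0, WAFER_COST_USD, DEFECTS_PER_CM2)  # roughly CCD-sized chiplet
    print(f"400 mm^2 die: yield {poisson_yield(400.0, DEFECTS_PER_CM2):.1%}, "
          f"${big:.0f} per good die")
    print(f"5 x 80 mm^2 chiplets: yield {poisson_yield(80.0, DEFECTS_PER_CM2):.1%} each, "
          f"${5 * small:.0f} for the same silicon area")
```

With these made-up inputs the big die comes out at roughly 67% yield versus about 92% for each chiplet, so the same total silicon costs noticeably more as one die. Bump up D0 or the wafer price and the gap widens, which is really the point about how exposed a huge-die design is to node pricing and maturity.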

If there is an ARM-based chip which seems actually groundbreaking, it's the Fujitsu A64FX: a CPU designed for homogeneous scalability, having the functionality of both a CPU and a GPU, and designed to scale. It's utterly ignored by the tech press.

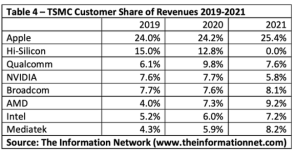

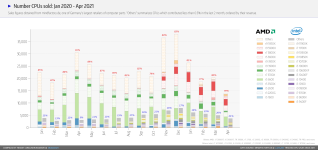

But it does go to show how much more profit there is in Zen 3 CPUs vs consoles and GPUs.