AMD Vs. Nvidia Image Quality - Old man yells at cloud

- Thread starter shankly1985

Standard = Quality on nVidia, Performance = Performance and High = High Quality. AFAIK AMD doesn't have an equivalent of nVidia's High Performance option. If there were any significant difference, one company or the other wouldn't hesitate to take its rival to task, as it would be a huge PR win.

People use those options?

I'll try anything to see if there's any texture streaming improvements to be had. It's the #1 thing I want for games, that's why I hold Wolfenstein 2 in such regard - at least graphically.

Associate

- Joined

- 22 Jun 2018

- Posts

- 1,786

- Location

- Doon the watah ... Scotland

I have a discrete nVidia card and an AMD 2400G in my system, so I could give it a try. I already have a personal impression from these that the AMD gives a crisper picture in 2D/desktop type environments. Taking screenshots wouldn't work, but I might be able to set up a dSLR on a tripod and use the same exposure for comparative pictures.

Associate

- Joined

- 21 Sep 2018

- Posts

- 895

Still gonna depend on the viewer's GPU brand, isn't it?

Can anyone who has both Radeon and GeForce systems compare the text fonts in internet articles and post impressions here?

As @Donnie Fisher said, it is a bit difficult to take a representative sample on both my laptop (1060) and PC (5700XT).

Associate

- Joined

- 22 Jun 2018

- Posts

- 1,786

- Location

- Doon the watah ... Scotland

With a screenshot, yes. But with a picture from a camera where the resolution is higher than the screen dpi, you should be able to see differences in the edges of text.

Absolutely ... different screens would be completely different results. But mine is a single desktop system with the AMD graphics on the CPU, as well as a discrete nVidia 1080ti. I should be able to have the same desktop window setup on the same display, same refresh rate, using the same camera and exposure, and all I change is whether the display is fed by the port on the GPU, or the port on the motherboard.

I might do a quick check and see if I can switch the HDMI on the fly.

In relation to the subject: on the AMD subreddit there is at least one new post every day from people asking why their new AMD 5700/XT displays so much better graphics, on the same settings, than the GTX card they came from.

It's worth digging through several of those discussions.

Placebo?

I never noticed any difference

Not actually. To boot, there is a difference in colour spectrum, because by default Nvidia has all their cards set to culled RGB to improve bandwidth and performance.

Has to be manually activated.
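
For anyone wondering what a "culled" RGB range would do to the picture, here is a minimal sketch. It assumes the post is referring to limited-range RGB (levels 16-235) versus full-range RGB (levels 0-255); that reading is an assumption, and the function names are made up for illustration. Whether a given card and display actually default to limited range is not something the code can tell you.

```python
def full_to_limited(level: int) -> int:
    """Map an 8-bit full-range level (0-255) onto limited range (16-235)."""
    return 16 + round(level * 219 / 255)


def limited_to_full(level: int) -> int:
    """Expand a limited-range level (16-235) back to full range (0-255)."""
    clamped = min(max(level, 16), 235)
    return round((clamped - 16) * 255 / 219)


# Count how many distinct output levels survive the squeeze into 16-235.
surviving_levels = {full_to_limited(v) for v in range(256)}

if __name__ == "__main__":
    print("black ->", full_to_limited(0))
    print("white ->", full_to_limited(255))
    print("distinct levels:", len(surviving_levels))
```

Only 220 of the 256 input levels stay distinct after the mapping, and if the display expects full range, black arrives as dark grey and white as light grey, which tends to read as washed-out colour.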

Others are posting screenshots, mainly of trees, bushes, fires or distant objects.

Doesn't a screenshot capture and freeze the quality of the text font at the time of its original display, so everyone should clearly see the differences?

I will also try, but later, because my old machine with a GeForce has gone back to sleep in the wardrobe...

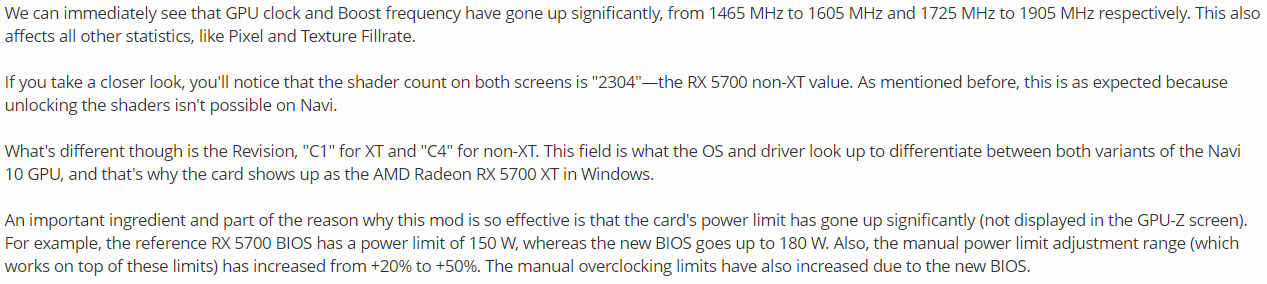

Ok, for now:

Ryzen 5 2500U with Vega 8 and Radeon RX 560X.

Text sample with PNG file format, 1920x1080, 100% Recommended scaling in the Display Settings -> Scaling and Layout | Google Chrome Page Zoom set to 110%:

Text taken from here: https://www.techpowerup.com/review/flashing-amd-radeon-rx-5700-with-xt-bios-performance-guide/

Associate

- Joined

- 22 Jun 2018

- Posts

- 1,786

- Location

- Doon the watah ... Scotland

OK, I'm back, typing on the desktop now. I've updated the AMD drivers and am happy with the nVidia ones that are installed at the moment (not sure what version they are, but I don't reckon it really matters right now).

I can now pull the HDMI cable and swap it live between the AMD motherboard and nVidia card outputs without a reboot. So the desktop stays 100% the same between each output.

.

.

.

The AMD output is clearer at 4K.

It's a very, very small difference, but as soon as I switched to the AMD for the first time, I instantly thought it looked a bit clearer. Going back and forth multiple times now, and it's still the case.

Each time I go from AMD to nVidia, my instant thought, sitting at a normal distance, is that there is just the merest hint of softness. It's almost like the ClearType setting has been tweaked slightly to give a little more anti-aliasing.

Each time I go from the nVidia to the AMD, I think, oh, that feels a little crisper.

In some places, like a text box, the sharper AMD text looks better. In other areas, such as icons and their text, the nVidia's softening makes the text nicer (but whether sharper or softer text looks better is a very subjective personal opinion).

I'm not 100% sure whether a dSLR picture of the 2 outputs would show it ... but I'll give it a try when I get the chance ... I'll need to set up something to keep the camera in the same position.

Associate

- Joined

- 22 Jun 2018

- Posts

- 1,786

- Location

- Doon the watah ... Scotland

Right, having another look at this. Below is a comparison picture between my AMD and nVidia cards.

Source is a Word template document. Standard black text on white background. TV is a Sony 43" 4k effort. Nothing changed between the shots ... not even the mouse was moved.

Photos and images were done by the following method:

- Canon 80D with 50mm f/1.4 lens sat on a tripod in front of the screen (1/50, f/9.0, ISO 400), pictures saved as RAW.

- Autofocused first, then switched to manual focus so that the focal point wouldn't change between shots.

- Shutter on timer so my hands weren't touching the camera at the time of the shot.

- Picture taken from AMD source, swapped HDMI cable to GPU, picture then taken from nVidia source.

- Time difference between shots: 15 seconds on an overcast day, so ambient light was unchanged between shots too.

- Image files taken into Canon DPP.

- RAW files exported with no adjustments into 32-bit TIFF.

- TIFFs overlaid in Affinity Photo to ensure they matched up OK (they were 1 pixel out between the RAW files).

- Same section cropped and copied into the photo below.
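
The overlay step in the list above was done by eye in Affinity Photo. As a rough sketch of how the same 1-pixel misalignment could be found automatically, the snippet below tries every small shift and keeps the one with the lowest mean absolute difference. It assumes the two crops are already loaded as equal-sized grids of greyscale values (loading the TIFFs is left out), and `mean_abs_diff` and `best_offset` are hypothetical helper names, not part of any tool mentioned in the thread.

```python
def mean_abs_diff(a, b, dx, dy):
    """Mean absolute difference between a[y][x] and b[y + dy][x + dx] over the overlap."""
    height, width = len(a), len(a[0])
    total = 0
    count = 0
    for y in range(height):
        for x in range(width):
            sy, sx = y + dy, x + dx
            if 0 <= sy < height and 0 <= sx < width:
                total += abs(a[y][x] - b[sy][sx])
                count += 1
    return total / count


def best_offset(a, b, max_shift=2):
    """Return the (dx, dy) shift of b that best lines it up with a."""
    candidates = [
        (dx, dy)
        for dy in range(-max_shift, max_shift + 1)
        for dx in range(-max_shift, max_shift + 1)
    ]
    return min(candidates, key=lambda shift: mean_abs_diff(a, b, *shift))


# Synthetic example: shot_b is shot_a moved one pixel to the right.
shot_a = [[(x * 37 + y * 91 + x * x * y) % 251 for x in range(8)] for y in range(8)]
shot_b = [[row[x - 1] if x > 0 else 0 for x in range(8)] for row in shot_a]

if __name__ == "__main__":
    print(best_offset(shot_a, shot_b))
```

Once the offset is known, both images can be cropped to the overlapping region before comparing them, so that any remaining difference comes from the two outputs and not from the camera nudging between shots.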

Picture as below. nVidia on top. AMD below. Picture is at 100% zoom ... there have been no image size adjustments to get to this point.

Is there a difference? Yes, there is.

If you look at the 9 o'clock area of the insides of the letters, as arrowed below: (pic was zoomed to 200% of original)

You can see that the AMD source is making the leading edge of the change from black to white a bit sharper, and that this sort of change is consistent across most of the leading edges.

Another area would be the vertical sections of the letter 'u', where the nVidia's letter seems ever so slightly heavier compared to the AMD one.

Like I say, it's very, very subtle, but these little differences do seem to make an impression when you use the screen as a whole. The AMD is just that little bit crisper and sharper with text.
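
One way to put a number on "a bit sharper" is to take a single row of pixels across a black-to-white edge and count how many samples sit in the transition zone. The sketch below counts samples strictly between the 10% and 90% levels of the edge; fewer means a crisper edge. The two profiles are invented values for illustration only, not measurements from the photos in this thread, and `edge_width` is a hypothetical helper.

```python
def edge_width(profile, low=0.1, high=0.9):
    """Count samples strictly between the low and high fractions of an edge's range."""
    darkest, brightest = min(profile), max(profile)
    span = brightest - darkest
    lower = darkest + low * span
    upper = darkest + high * span
    return sum(1 for value in profile if lower < value < upper)


# Hypothetical luminance rows across the inside edge of a letter.
sharper_edge = [10, 10, 12, 240, 250, 250]
softer_edge = [10, 10, 60, 130, 200, 250, 250]

if __name__ == "__main__":
    print("sharper:", edge_width(sharper_edge))
    print("softer:", edge_width(softer_edge))
```

Running this over the same row in both aligned crops would give a like-for-like figure, although camera focus and sensor noise would still limit how much weight a one-pixel difference can carry.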

Edit:

Coming at this fresh again today, I still notice the difference in text, even just browsing the BBC website. The AMD is a cleaner feeling image. It's bugging me now, as most of my use of the computer is text based, and it's making me wonder whether to change to an AMD card (even though I may lose some performance in games, which I rarely play).

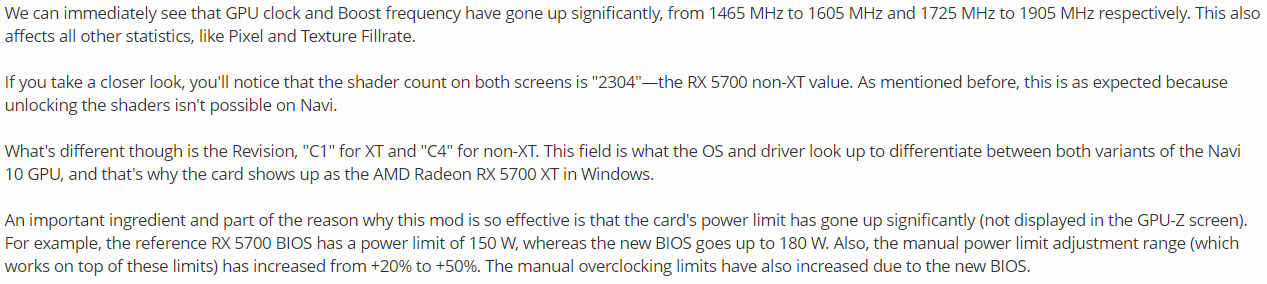

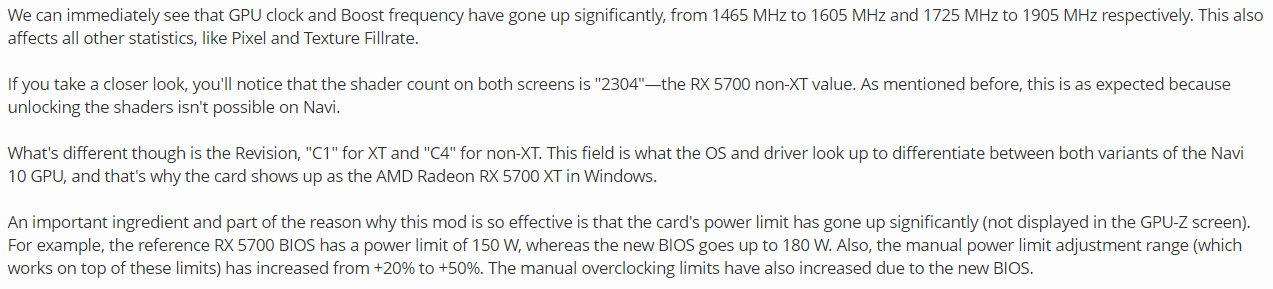

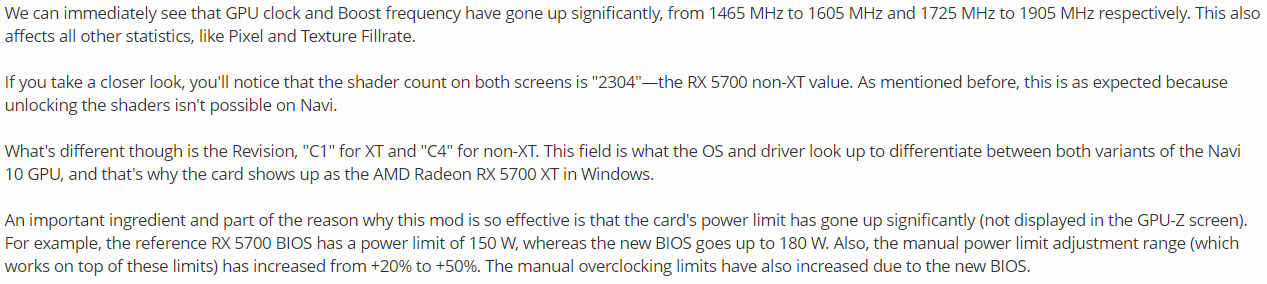

So, now GeForce with the same settings:

GeForce:

Radeon:

Thanks for the efforts.

Yup, you can clearly see that the nvidia letters are fatter and blurred.

If you zoom to 90% in the browser, you will see that the nvidia text font looks bugged and the letters have very strange shapes, making it quite ugly in the end.

Associate

- Joined

- 22 Jun 2018

- Posts

- 1,786

- Location

- Doon the watah ... Scotland

Does taking a screenshot actually work though to show differences ? Reason I say that is that I thought a screenshot was a record of what the system originally wanted to display, but saved directly to a file without passing through a GPU output.

Whereas the differences in picture quality being discussed here are about differences in GPU output, which will come from variations in how GPUs take what the system wants to display and interpret it into a signal for the monitor.

( I stand to be corrected... )

You can sometimes see small differences on screenshots, but you need to see it in person really. Using the same monitor.

Going from Nvidia to AMD I noticed it straight away. Colours seem stronger on AMD and textures a bit sharper. I've switched between Nvidia and AMD cards a number of times and the difference is there. You can tweak GeForce settings but you can't quite get it to match; if you try to add vibrance for stronger colours you get banding, which doesn't appear on AMD.
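
A possible explanation for banding after a colour boost is plain 8-bit quantisation. The sketch below is only a toy model: it assumes the boost behaves like a simple gain around mid-grey applied to 8-bit values, which is an assumption and not a description of what the driver's vibrance control really does. `boost`, `distinct_levels` and `largest_step` are hypothetical names.

```python
def boost(level: int, gain: float) -> int:
    """Stretch an 8-bit level away from mid-grey, then clamp back to 0-255."""
    stretched = 128 + (level - 128) * gain
    return min(255, max(0, round(stretched)))


def distinct_levels(gain: float) -> int:
    """How many different output levels a full 0-255 ramp produces."""
    return len({boost(v, gain) for v in range(256)})


def largest_step(gain: float) -> int:
    """Biggest jump between neighbouring output levels on a smooth ramp."""
    out = [boost(v, gain) for v in range(256)]
    return max(b - a for a, b in zip(out, out[1:]))


if __name__ == "__main__":
    for gain in (1.0, 1.5):
        print(gain, distinct_levels(gain), largest_step(gain))
```

With no boost a smooth ramp keeps all 256 levels in steps of 1. With a gain of 1.5 the ends clip and some neighbouring levels end up 2 apart, so a smooth gradient can show visible steps.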

Associate

- Joined

- 21 Sep 2018

- Posts

- 895

I have a GTX 1060 and 2 GTX 1050s. I switch from RGB to YCbCr 444 on these cards and it makes IQ a bit better.