AMD Vs. Nvidia Image Quality - Old man yells at cloud

- Thread starter shankly1985

Don't know if both images can be compared directly because they are so different. The left one has more haze in the distance (it's not quite clear whether the skies are clear or there's just dense cloud over the haze) and plenty of sun shafts, which also tend to be heavy on compute resources.

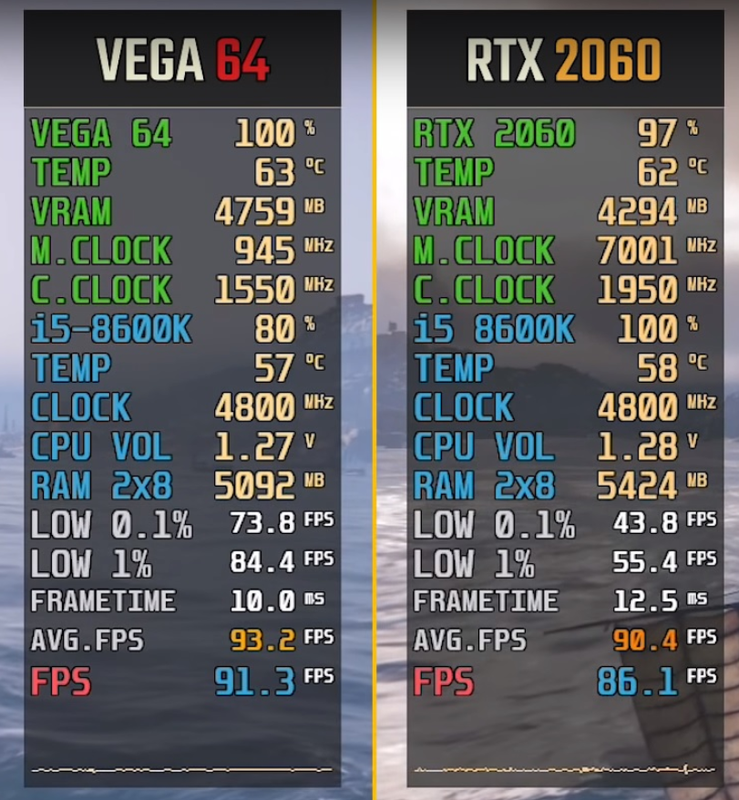

Not to mention what the pair of RTX 2060 does to that poor i5 8600 - 100% load and poor 1% and 0.1% percentiles.

The Amd one has diss enabled.

My bet is that the AMD one is the graphically correct one, with the haze all over the place and the sun through it. But Ubisoft needs to confirm it.

The right side has completely different sky - dunno if the game has dynamic weather conditions but the lighting is also different with more overcast effects. That isn't just DLSS.

As with most of the other comparisons, the maker of this video clearly hasn't captured identical moments in terms of weather, time in-game, etc.

By this argument we could say AMD is cheating because they've not rendered any clouds!

Speaking as someone whose favourite piece of hardware is probably still the 9700Pro, most of the stuff presented here is nonsense and isn't apples to apples. I was an arch critic of Nvidia's IQ practices around the 5800Ultra era and the subsequent couple of years, and I do think the IQ situation still needs periodic investigation by the community. But those shots of Assassin's Creed above, for example, veer into the realm of comedy fantasy. Look at the clouds.

I was blown away by the image quality of the V56. Pin sharp, whereas the 2060 looked pale and blurred/washed out. Tbh though, I didn't mess with the video settings and just got a V64 instead and washed my hands of Nvidia. Still blown away by the render quality of this Vega card. Nvidia seem to go for a corner-cutting graphics solution.

These are the conclusions I've come to from my own experience, but I could be wrong. I use DisplayPort btw. The old 780 Ti was on HDMI and I'm sure it used to look better than the 2060 did, so maybe Nvidia cards don't work as well with DP, stock out of the box? I don't know, other than I loved Vega and hated RTX, so I went back to Vega with the Sapphire Nitro and I'm loving it! AMD wins in my home!

Someone can try a Matrox G200 8MB and a GeForce 2 MX200 32MB on a CRT monitor. It is an even more shocking difference.

Matrox's cards back then provided better image quality than Radeons.

Wait, people recall Nvidia having their delta compression as a big thing right?

Do people know what compression does to media?

There is no perfect compression and decompression without some loss on the fly. AMD compresses its images less and retains more of the original render. This is also likely why Nvidia has a bit better performance, as it processes an image that has less detail in it.

There is no perfect compression and decompression without some loss on the fly

Yes there is. ZIP is a lossless compression.

It's just that in this area, vendors choose lossy compression for less CPU and memory requirements.
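The lossless versus lossy distinction in this exchange can be shown with a rough sketch. It uses Python's `zlib` module (DEFLATE, the algorithm ZIP archives commonly use) for the lossless case. The `quantize` helper and the fake gradient tile are purely hypothetical stand-ins for illustration; nothing here represents what AMD or Nvidia hardware actually does.

```python
import zlib


def roundtrip_is_lossless(data: bytes) -> bool:
    """Compress with DEFLATE and check that decompression returns the same bytes."""
    packed = zlib.compress(data, 9)
    return zlib.decompress(packed) == data


def quantize(data: bytes, step: int = 16) -> bytes:
    """Toy lossy step (hypothetical): snap each byte down to a multiple of `step`."""
    return bytes((b // step) * step for b in data)


# A fake 64x64 greyscale gradient standing in for a rendered tile (assumption).
tile = bytes((x + y) % 256 for y in range(64) for x in range(64))

print(roundtrip_is_lossless(tile))  # True: every byte survives the round trip
print(quantize(tile) == tile)       # False: the lossy step discards information
print(len(zlib.compress(tile, 9)), "bytes compressed vs", len(tile), "bytes raw")
```

The lossless path gives back exactly the input, which is the property being argued about; whether a given GPU scheme has that property is a separate question the sketch does not answer.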

Like I said, on the fly. Neither AMD nor Nvidia is going to use ZIP to compress and decompress game rendering.

But this is partly, if not mostly, why Nvidia suffers on image quality. This was very noticeable when I went from the GTX 780 to the RX 480: AMD was just sharper.

You are not alone. I've been saying that since I moved from a 1080 Ti to a Vega 64. I'd rather have 120fps instead of 150 and better image quality.

In Radeon Settings you can also lower the quality settings so that the output matches the maximum GeForces can provide. The Nvidia panel has no option for quality texture filtering; it is all performance texture filtering.

some dude on reddit on this topic

if someone with nvidia gpu could test

Depending on how much Nvidia decided to compress textures, the visual impact will be higher or lower. AMD uses the Nvidia equivalent of the "High Quality" setting (in the control panel) as standard, which reduces performance on Nvidia GPUs by 10 to 25% (Mass Effect Andromeda has a huge performance penalty).

As for color, contrast and vibrancy, a screenshot won't show you that for obvious reasons: they are driver-level calibrations applied to the output sent to the display, not created GPU side.

Nvidia likes to use this compression for two main reasons. The first is to gain FPS comparable to AMD; the second is to use less VRAM (up to 1.9GB in 4K, correspondingly less in 1080p).

If you don't believe me, you can actually change the settings in the Nvidia control panel to output the same image AMD GPUs output by default.

- Dynamic output range: Full
- Video output range: Full (0-255)
- Anisotropic Filtering: 16x
- Shader Cache: On
- Texture Filtering - Anisotropic sample optimization: Off
- Texture Filtering - Negative LOD bias: Clamp
- Texture Filtering - Quality: Higher Quality
- Texture Filtering - Trilinear optimization: Off

All of these optimizations are, ironically, visual downgrades and/or compression to increase frames.
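On the "full (0-255)" output range setting mentioned above: the standard 8-bit limited range puts black at 16 and white at 235, so a signal sent as limited but shown by a display expecting full range looks greyish and low in contrast. A minimal sketch of the mapping follows. The function names are made up for illustration, and whether a range mismatch explains any particular "washed out" report in this thread is only an assumption.

```python
def limited_to_full(v: int) -> int:
    """Expand an 8-bit limited-range value (16-235) to full range (0-255)."""
    scaled = round((v - 16) * 255 / 219)
    return max(0, min(255, scaled))  # clamp values outside 16-235


def full_to_limited(v: int) -> int:
    """Squeeze an 8-bit full-range value (0-255) into limited range (16-235)."""
    return round(16 + v * 219 / 255)


print(limited_to_full(16), limited_to_full(235))  # 0 255
print(full_to_limited(0), full_to_limited(255))   # 16 235
```

If black arrives as 16 and is displayed without expansion, it shows up as dark grey rather than black, which is one plausible source of a pale-looking picture that has nothing to do with rendering quality.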

In color settings (to have the same output as AMD), use these Nvidia settings:

- Contrast: 65% (+15% offset)
- Digital vibrance: 55% (+5% offset)

Enjoy what every AMD owner knows as the "amd image"

nVidia's compression is fully lossless.

Several people, Hardware Unboxed among them, have done tests using external cameras and external capture cards and found no difference in most comparisons, with some edge cases where AMD had very slightly (under 1%) better colour saturation.
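For anyone wanting to put a number on a comparison like that, here is a rough sketch of one way to do it: the mean absolute per-channel difference between two captured frames, as a percentage of the 8-bit range. This is not the method any reviewer is known to have used; the function name and the tiny sample frames are hypothetical, and real captures would first need to show the identical scene and moment.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B)


def mean_abs_diff_percent(a: List[Pixel], b: List[Pixel]) -> float:
    """Mean absolute per-channel difference, as a percentage of the 0-255 range."""
    if len(a) != len(b) or not a:
        raise ValueError("frames must be non-empty and the same size")
    total = sum(abs(ca - cb) for pa, pb in zip(a, b) for ca, cb in zip(pa, pb))
    return 100.0 * total / (len(a) * 3 * 255)


# Hypothetical 4-pixel "frames": identical except for a small red-channel shift.
frame_a = [(200, 100, 50)] * 4
frame_b = [(202, 100, 50)] * 4

print(mean_abs_diff_percent(frame_a, frame_a))  # 0.0
print(mean_abs_diff_percent(frame_a, frame_b))  # small, well under 1%
```

A result near zero on matched captures would support the "no difference" finding, while mismatched weather or time of day in the game would swamp any vendor difference.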

Do you really believe this? Would you say the Watch Dogs 2 image is more vibrant on the Vega? Because they are near identical, bar that weird floor tile texture being different.

Good luck matching this on an Nvidia card (CS Source, map Militia, resolution 1920 x 1080, all settings maxed out both in-game and in Radeon Settings, Ryzen 5 2500U + Radeon RX 560X):

This is in the house, behind the front door. What you should look for is multiple dust particles visible in the air (The same can be seen in the Dust and Dust 2 tunnels, as well as darker zones in other maps):