Humbug

"If indeed the RX7800XT does not beat an RX6800XT significantly and AMD want to charge over £500,one has to question WTF is happening inside the company."

What if it comes in at £600 and sits between 6800XT and 6950XT?

Are you an AMD insider?

I think AMD will come in at $500, so in reviews it will be compared to the 4060 Ti 16GB. That card is so bad it'll make anything look better, but overall it still won't look great against the 6800XT. If AMD come in at $550 then it'll be compared to the 4070, which is selling for under $600, while also costing more than a 6800XT, and then it won't look good at all. I agree that $450 should be the MSRP and would mean it'll get good reviews; besides, if AMD price it higher it will be selling for that in 6 months' time anyway.

The 7600 has 32CU compared to the 6600's 28CU. It is a tiny bit faster than the 6600XT with the same CU count and slower memory. Power consumption is about the same. I can see a 60CU GPU performing maybe 10% better than a 6800 but worse than the 6800XT. Given the current prices of the 6800XT (OC has one for £499) it will have to cost £450-460 at the most. The 4070 will beat it in raster and obliterate it in RT.

I’m making worst-case scenario predictions given AMD’s propensity to miss open goals. They are literally that stupid with their pricing this round.

I’m glad I took the plunge rather than waiting for RDNA4.

I'm not sure it's stupidity because I'm not sure it's unintentional. If the people at a decision-making level at AMD have an agreement with the people at a decision-making level at nvidia to cede the consumer dgpu market to nvidia while maintaining just enough market presence to allow nvidia to deny being a monopoly then the choices taken by AMD make a lot of sense. They also make sense if the people at a decision-making level at AMD have unilaterally chosen to avoid competing with nvidia. The decisions are only stupid if AMD intend to compete with nvidia.

It certainly doesn't help that AMD have gambled on chiplets and lost, so they can't compete at the top. Maybe they just decided that it wasn't worth trying. RDNA3 is mediocre, barely better than RDNA2. RDNA4 doesn't seem to work properly. Chiplets have potential, but it seems that AMD can't realise that potential. It's a similar situation to SLI, but it has to be made to work close to perfectly without requiring per-game support. It has to be completely transparent, to appear to the OS as a single device. RDNA4 was due to take it a step further and separate the GPU into 2+ separate chips. Maybe it's not stupidity to concede the market and limp along as an also-ran in the low to midrange, maybe making some profit but concentrating on other areas, areas more useful to the business. Maybe AMD will be able to get it working by RDNA5. Maybe well enough to have a go at being a major player again. Maybe.

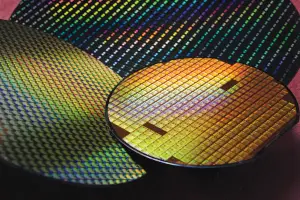

In addition, TSMC plans to launch 4nm (N4) technology, an enhanced version of N5 technology. N4 provides further enhancement in performance, power and density for the next wave of N5 products. The development of N4 technology is on schedule with good progress, and volume production is expected to start in 2022.

fuse.wikichip.org

Nvidia calling it 4nm always leaves me scratching my head, it's not IMO. It's an enhanced 5nm and in the past TSMC have called enhancements to nodes things like N7+, N7P, N5P, etc, etc.

Not having a go at you for calling it that, humbug; personally I'd just call it a marketing exercise on TSMC's behalf.

I know, that's what I said.

TSMC call it 4nm...

Navi 31 is a 300mm2 die on 5nm with six 37mm2 dies on 6nm. Compare that to AD102, which is 600mm2 on 4nm: the former has 83% of the performance of the latter. Yes, AD102 is 20% faster, but the expensive bit is 100% larger on a smaller, more expensive node. I count that as a win for chiplets.

If you count that as a loss you're only doing it because it doesn't win performance, and yet you're also saying AMD doesn't intend to win, so which is it?
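For anyone wanting to check the arithmetic in that claim, here is a rough Python sketch. It only reuses the figures quoted in the post (300mm2, 600mm2, 83%); none of them are measured or verified here, and the variable and function names are made up for illustration.

```python
# Figures as quoted in the post above (assumptions, not measurements).
navi31_gcd_mm2 = 300.0   # Navi 31 compute die on 5nm
ad102_mm2 = 600.0        # AD102 die, rounded as in the post
relative_perf = 0.83     # Navi 31 performance as a fraction of AD102


def percent_faster(slower_fraction: float) -> float:
    """Lead of the faster part, in percent, given the slower part's fraction of it."""
    return (1.0 / slower_fraction - 1.0) * 100.0


def percent_larger(small: float, large: float) -> float:
    """How much larger `large` is than `small`, in percent."""
    return (large / small - 1.0) * 100.0


ad102_lead = percent_faster(relative_perf)
area_gap = percent_larger(navi31_gcd_mm2, ad102_mm2)

print(f"AD102 performance lead: {ad102_lead:.1f}%")
print(f"AD102 die vs Navi 31 compute die: {area_gap:.0f}% larger")
```

So "83% of the performance" and "20% faster" are the same claim seen from either side, and the 100% figure only holds if the six 6nm dies are left out of the comparison.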

I know, that's what i said.

My point was it's not 4nm IMO because in the past, and in future plans, they've retained the same number and added designators to indicate that a particular process is an enhancement and not an entirely new node.

It shouldn't be called 4nm IMO, it should be called something like N5P, N5+, N5PP or something, something 5.

Navi 31 only matches AD103 though which is 379mm2 and even the 4080 isn’t using the full die while quite a bit of that die also makes up the cache.

Navi 31 has 222mm2 of 6nm, so altogether you're looking at a total die area of 522mm2 vs 379mm2 on the 4080. Also, chiplets were supposed to be cheaper, but is the extra 79mm2 of a monolithic die (379mm2 vs 300mm2) really more expensive than 220mm2 of 6nm plus all the interconnects?

You say that as if the GPU can function without cache, like it shouldn't be counted.

You're right, it's a better comparison. It's still quite a bit bigger despite only having a 256-bit bus and being on a better node.

I know, that's why it should be called N5P, N5+, N5PP or whatever and not N4. It shouldn't be called N4 because it's not a full node shrink, it's an enhancement to the existing N5.

N4 has 6% higher transistor density, 11% higher performance and 22% higher power efficiency vs N5. It's more than just N5 under a different name; it's a better node in every measurable way.

You're drifting off point. It's not about the overall size of the GPU. The GPU's components have not changed; they still need to be made and packaged somehow. The point is that chiplets are a better way to package the architecture than monolithic.

How’s it better when it’s less efficient and more expensive to build, while performance per die area is worse? Maybe in the future chiplets will be better, but right now monolithic is the way to go.

Navi 31 is a 300mm2 die on 5nm with six 37mm2 dies on 6nm. Compare that to AD102, which is 600mm2 on 4nm: the former has 83% of the performance of the latter. Yes, AD102 is 20% faster, but the expensive bit is 100% larger on a smaller, more expensive node. I count that as a win for chiplets.

If you count that as a loss you're only doing it because it doesn't win performance, and yet you're also saying AMD doesn't intend to win, so which is it?

Why do you think those two things are mutually exclusive? AMD might have intended to lose less than they have done. They might even have intended to win to some extent. Plans can be changed. Plans should be changed when the product turns out to be inferior to expectations.

In no particular order:

i) The die size of Navi 31 is not 300mm^2, at least not in a functional form. The other dies are not an optional extra just because they're physically separate. So the full N31 config is 522mm^2, not 300mm^2.

ii) Navi 31 does not compare with AD102. There isn't currently a card using full AD102, but even a card using less than full AD102 (RTX 4090) is in a different tier to the 7900XTX. The 7900XTX is in the same tier as a 4080, which uses AD103, which is 379mm^2. So your claim about performance and die size is the reverse of true - it's nvidia's architecture that gives comparable performance with a much smaller die size, not the other way around.

iii) On a like for like basis, RDNA3 is marginally better than RDNA2. That's not a win.

iv) With RDNA3, AMD has lost a lot of market share, dropping below 10%. That's not a win.

v) Even with inflated prices, that's a reduction in profit. That's not a win.

vi) AMD couldn't even get midrange cards to market at all. That's not a win.
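The die-size arithmetic behind points i) and ii) can be laid out in a few lines of Python. This is only a sketch using the areas quoted in this thread (300mm^2, six dies of 37mm^2, 379mm^2); the names are invented for illustration, and it compares silicon area only, not cost, since the 6nm and 5nm dies are not priced the same.

```python
# Die areas as quoted in the thread, in mm^2 (assumptions, not verified here).
GCD_MM2 = 300.0     # Navi 31 compute die (5nm)
MCD_MM2 = 37.0      # one Navi 31 memory/cache die (6nm)
MCD_COUNT = 6
AD103_MM2 = 379.0   # die used by the RTX 4080


def navi31_total_area() -> float:
    """Total silicon in a full Navi 31 package: compute die plus all cache dies."""
    return GCD_MM2 + MCD_COUNT * MCD_MM2


total = navi31_total_area()
ratio = total / AD103_MM2

print(f"Navi 31 total: {total:.0f} mm^2")
print(f"Navi 31 / AD103 area ratio: {ratio:.2f}")
```

On those figures the full Navi 31 config comes to 522mm^2, roughly 1.38 times the area of AD103, for cards that sit in the same performance tier.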

I'm not counting it as a loss only because it doesn't beat nvidia on performance. That's one reason, but not the only one or the main one. The main reason I'm counting it as a loss is because it's not better than monolithic. It failed at its core purpose. It also failed as a product, i.e. it didn't make AMD more profit. Not even at inflated prices.

You're counting it as a win solely because it's cheaper to manufacture. But that's based on a comparison with AD102, which is the wrong comparison (Navi31 actually compares with AD103, which is much smaller than AD102 and much smaller than Navi31) and it's based on your estimates of manufacturing costs, which is something you don't know (and in post #797 you state clearly that you don't know it). I think it's likely that a 7900XTX has a higher manufacturing cost than a 4080. In any case, manufacturing costs aren't total costs and total costs aren't profit, and it's profit that matters.

The only way in which RDNA3 can be spun as a win is something you mentioned in a later post - that AMD got chiplets to work at all. Which is an impressive engineering achievement. But they didn't get chiplets to work in the sense of being better than monolithic. They didn't make a better product and they didn't make more profit and they didn't gain market share. It's not a win. If the rumours are true they've already rejected trying to make it a win with RDNA4. It might become a win with RDNA5. Maybe.

it's based on your estimates of manufacturing costs.

I think it's likely that a 7900XTX has a higher manufacturing cost than a 4080.

I made no such estimates.

Why?