

Unpopular opinion of mine: the game's actually not that good.

Of course, people won't admit that, since it's been hyped to hell and back and loads of people have been "preparing" their systems for CP77 or using it as justification to buy a next-gen console.

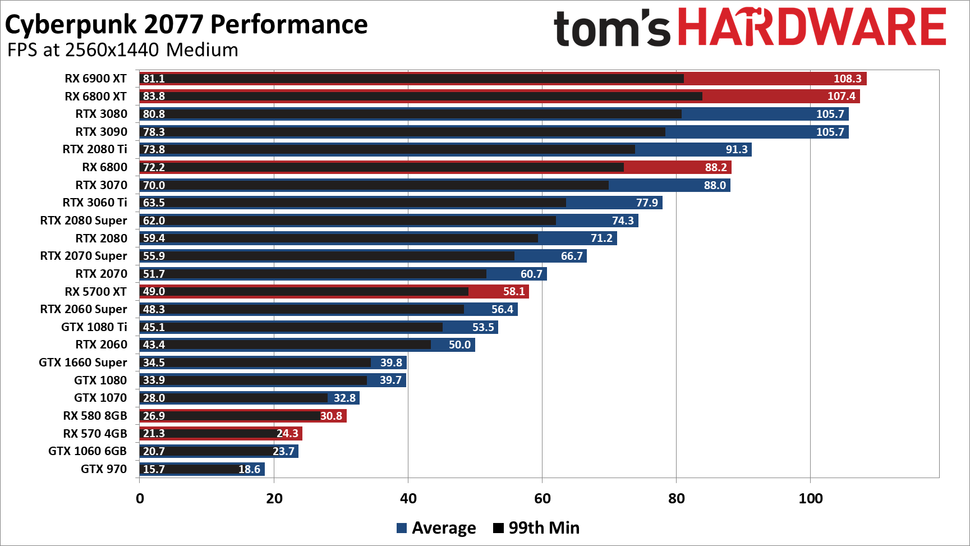

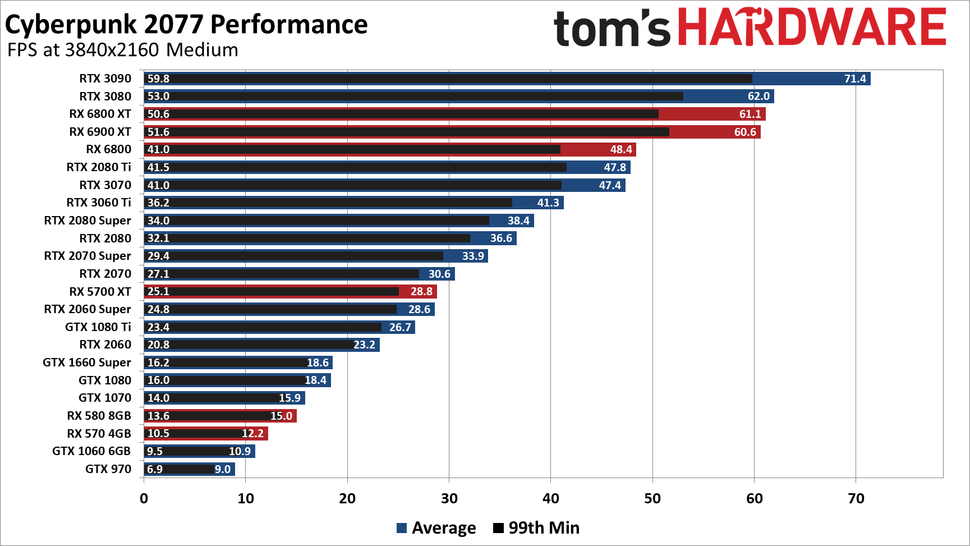

I'm not blown away by it graphically from what I've seen so far. There are some bits that look good, but in other areas you quickly notice ropey stuff like repeatedly tiled textures, and performance isn't that great for what is on screen when compared with something like The Division. It's not really surprising that there is a huge amount of asset reuse, but it's done in the cheapest way possible, which is less excusable. Again, The Division reuses a lot of stuff but goes to the effort of mixing it up a bit.

There seems to be a lot of sloppy development in many areas, causing odd bugs, crashes, etc., along with a host of things that developers have no excuse for not getting right in this day and age, like key binding.

Combat looks really rubbish as well, so I hope the story is bang on.

All the usual problems that game developers come out with these days.

Well, there are issues that are understandable in a big, complex game, and there are issues that should never have reached release. The latter are somewhat mitigated depending on how the developer deals with them: quickly acknowledging and addressing them takes away a lot of the negatives, but it still doesn't excuse some things reaching release in the state they sometimes do.

I don't have much tolerance for it personally as a lot of this stuff isn't hard to get right.

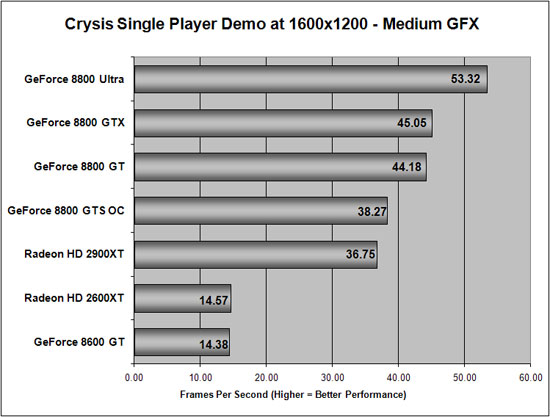

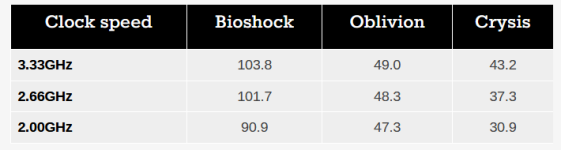

> It runs worse than Crysis did at launch though. You barely break 14 FPS without DLSS with RTX on. If we had DLSS in 2007, Crysis would have run pretty much the same.

I think you really don't appreciate what Crysis did, and it was not just the graphics. It was the whole interactive, semi-open-world environment (and the ability to destroy so many objects), the way that, unlike so many modern games, it used multiple forms of AI models (both bipedal and flying enemies), and the way you could tailor your gameplay in an FPS. It was essentially a DX9 game, and Crytek deliberately dialed down the graphics settings available in the menu; once you edited the settings and ramped them up even further, you could see how forward-looking it was. In fact, it was the pinnacle of what DX9 could do and could still hold its own against later DX10/DX11 games to a degree. It's just that nowadays what it did has been copied by many other games since.

I would also say Cyberpunk 2077 is much easier to run relative to Crysis. Even with an 8800 Ultra, good luck maxing out settings on the 1080p monitors of the era and expecting decent FPS. The game actually needed more than the 512MB framebuffers common on GPUs of the era, and even the fastest, most heavily overclocked CPUs were a bottleneck.

I had an 8800GTS 512MB and a heavily overclocked Core 2 CPU, and it still wasn't enough.

For me, this has nowhere near the impact Crysis had at the time with regard to graphics. Crysis was a huge jump up in many areas; Cyberpunk is a pretty game, just not quite what Crysis was back then.

This is partly because Cyberpunk 2077 is an intergenerational game. It has to be able to run on the older consoles and older PCs, unlike Crysis, where Crytek didn't give a damn whether older systems could actually run it.

Literally, if you had anything older than the 8800 series, which was released the year before, you might as well not have bothered. If you didn't have an overclocked Core 2, you were hitting even worse CPU limitations. Cyberpunk 2077 still runs OK on much older hardware.

Crysis will be remembered for its graphics; Cyberpunk 2077 won't be as much, just like The Witcher 3 won't be. It will live or die on its RPG experience. If anything, from the demos I have seen, I think Atomic Heart might be closer to a real next-generation graphical experience.

Ultra Performance dropped the image quality quite a lot in other games I tried, with lots of it looking out of focus. I haven't tried it in this one, but it was a pretty noticeable drop in CoD and WD.

For the new Call of Duty, they say Ultra Performance is only for people aiming for 8K.

To help out here: RTX Ultra preset (everything maxed), DLSS Quality (crowds high), 4K 120Hz gives 55-90 FPS with the core clock at 1980MHz (stock).

What I did notice, though, and it's a biggie:

My 2950X at 4.4GHz was at 45-75% total usage. Yes, that's 75% of 16 cores!

For comparison, Valhalla, Watch Dogs and Witcher 3 use 10-20% of my CPU, if that, so the CPU is doing a lot more work here.

I'll most likely be looking to turn a few things down to get the FPS as high as possible, as I hate low FPS.

With RT on?


It may be an intergenerational game, but it runs at sub-30 FPS and 720p on the older consoles. Not exactly a good experience.

Ultra Performance = 1080p/720p; you might as well play at native 1440p at that point.


Ugh, I didn't want to see that. I hope that's not what it's like, or I'll put off playing it till the game is fixed.

Recorded using my phone. Is my system (GPU) messed up or is this the game? I'm seeing all sorts of weird disappearing details and shadows.

Thanks a lot. I wonder what hardware they use to run the game on GeForce Now.

Tried it: performance felt pretty good (I can't measure the frame rate) at max settings, including ray tracing, at 1080p with DLSS Performance. The only problem was that the game looked quite blurry whether DLSS was on or off, but that might be due to high load on Nvidia's servers.