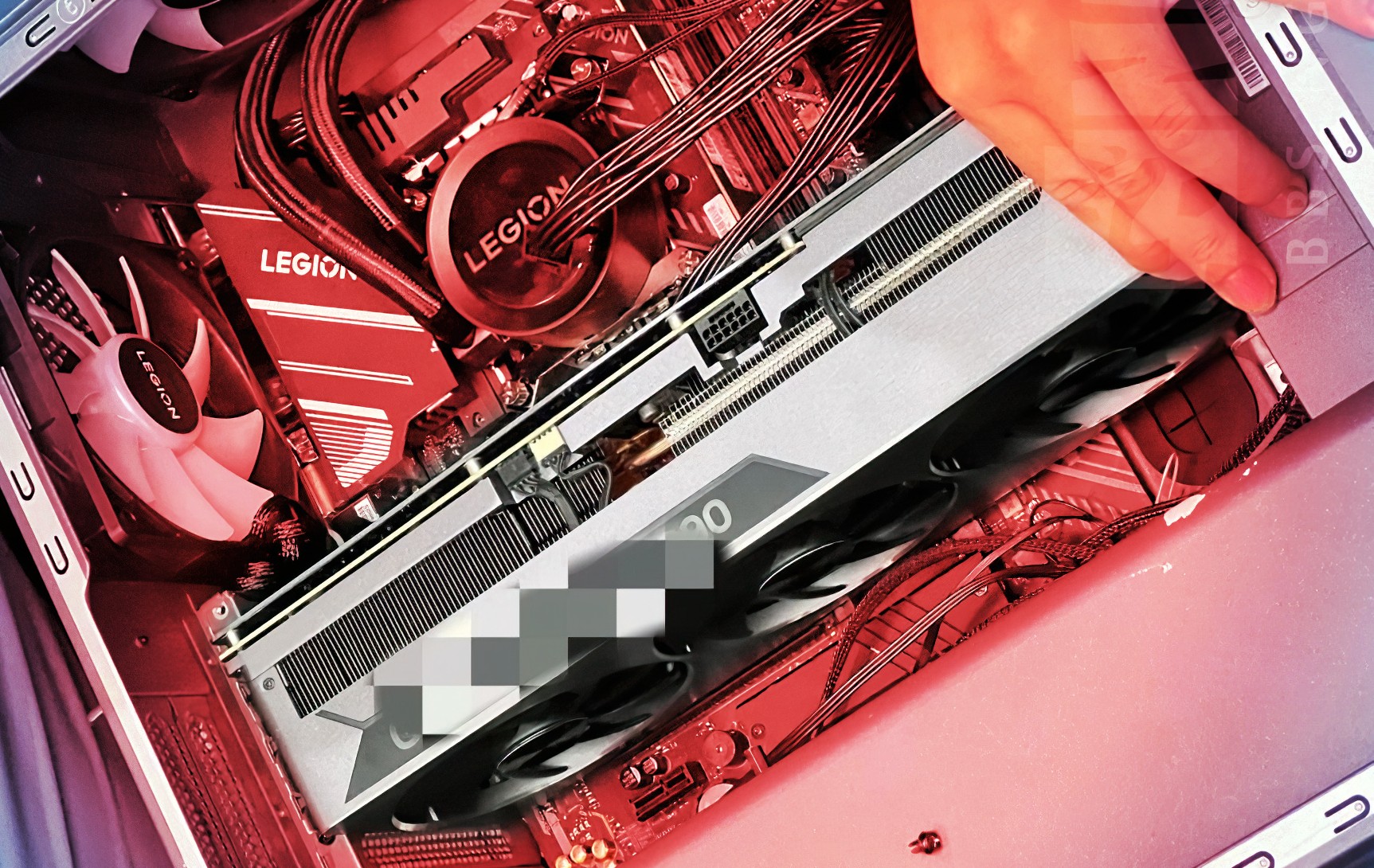

GeForce RTX 4090

Starting with the RTX 4090, this model features the AD102-300 GPU, 16384 CUDA cores, and a boost clock of up to 2520 MHz. The card has 24GB of GDDR6X memory, supposedly clocked at 21 Gbps. This means it will reach roughly 1 TB/s of bandwidth, just as the RTX 3090 Ti did. Thus far, we have only heard about a default TGP of 450W, but according to our information, the maximum configurable TGP is 660W. Note that this is the maximum TGP that can be set through the BIOS, and it may not be available on all custom models.
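The 1 TB/s figure follows from simple arithmetic: peak bandwidth is the per-pin data rate multiplied by the memory bus width, divided by 8 bits per byte. Here is a minimal sketch of that calculation. The 21 Gbps data rate comes from the information above; the 384-bit bus width is our assumption and is not part of the leaked data, and the function name is purely illustrative.

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    data_rate_gbps: effective per-pin data rate in Gbps
    bus_width_bits: memory bus width in bits
    """
    # Total bits per second across the bus, converted to bytes (8 bits per byte).
    return data_rate_gbps * bus_width_bits / 8


# 21 Gbps is the reported memory clock; the 384-bit bus is an ASSUMPTION.
ASSUMED_BUS_WIDTH_BITS = 384

rtx_4090_bandwidth = memory_bandwidth_gbs(21, ASSUMED_BUS_WIDTH_BITS)
print(f"RTX 4090: {rtx_4090_bandwidth:.0f} GB/s")  # RTX 4090: 1008 GB/s
```

Under that assumption the result is 1008 GB/s, which is where the "1 TB/s" shorthand comes from.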

GeForce RTX 4080 16GB

The RTX 4080 16GB has the AD103-300 GPU and 9728 CUDA cores. The boost clock is 2505 MHz, about the same as the RTX 4090. This model comes with 16GB of GDDR6X memory clocked at 23 Gbps, and as far as we know, it is the only model with such a memory clock. The TGP is set to 340W and can be raised up to 516W (again, that is the maximum power limit).

GeForce RTX 4080 12GB

The GeForce RTX 4080 12GB is what we previously knew as the RTX 4070 Ti or RTX 4070; NVIDIA made a last-minute name change for this model. It is equipped with the AD104-400 GPU with 7680 CUDA cores and a boost clock of up to 2610 MHz. Memory capacity is 12GB, using GDDR6X modules at 21 Gbps. The RTX 4080 12GB's TGP is 285W, and it can go up to 366W.
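To put the three power limits side by side, here is a small sketch that computes how much headroom each card has between its default and maximum TGP. The wattages are the ones reported above; the dictionary layout and the `headroom_pct` helper are only illustrative names.

```python
# Default and maximum configurable TGP in watts, as reported in this article.
TGP_WATTS = {
    "RTX 4090": (450, 660),
    "RTX 4080 16GB": (340, 516),
    "RTX 4080 12GB": (285, 366),
}


def headroom_pct(default_w: int, max_w: int) -> float:
    """Percentage by which the maximum TGP exceeds the default TGP."""
    return (max_w - default_w) / default_w * 100


for model, (default_w, max_w) in TGP_WATTS.items():
    extra = max_w - default_w
    print(f"{model}: +{extra}W ({headroom_pct(default_w, max_w):.1f}%)")
```

This works out to roughly 47% headroom for the RTX 4090, 52% for the RTX 4080 16GB, and 28% for the RTX 4080 12GB, keeping in mind that the maximum may not be exposed on every custom model.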

As you can see, there is no RTX 4070 listed for now, and AIBs do not expect this to change (after all, it is just 6 days until the announcement). As for the launch timeline, the RTX 4090 is expected in the first half of October, while the RTX 4080 series should launch in the first two weeks of November. We are waiting for detailed embargo information, so we should have more accurate data soon. PCIe generation compatibility and, obviously, pricing are still to be confirmed.