
NVIDIA 4000 Series

- Thread starter MrClippy

Associate

- Joined: 24 Sep 2020
- Posts: 342

> Other than 4090 buyers, no one else is really getting much of an upgrade this gen, so Nvidia will have plenty of room for manoeuvre lower down the stack on the 5000 series.

The 4080 is 50-60% faster than the 3080, about the same difference as 2080 to 3080. The pricing is ****ing diabolical and no one should buy one till the 16GB is down to $800 at most, but I don't think you can say it's not 'getting much of an upgrade'. It's the same or better than most previous gens.

All Nvidia have to do is deliver +30% for a 5090 with better RT and maybe throw in an exclusive DLSS 4.0. Even if they don't change the node, that should be possible by utilising a full AD102 chip with some optimisations.

Associate

- Joined: 19 Sep 2022
- Posts: 1,084
- Location: Pyongyang

> Nvidia is in a bit of a pickle now. They've pushed this architecture about as hard as they can, and they've manufactured it on the most cutting-edge node available for this product size (TSMC 4nm).
>
> There is no new node past 4nm with a significant efficiency boost that they'll be able to leverage next. To produce these 4090s and push the architecture this hard, Nvidia essentially went from 8nm/10nm straight down to 4nm/5nm; it's a massive jump and they won't get that again.
>
> I don't know what Nvidia is doing, but I know what they should be doing: working on a new ground-up architecture that they can launch in 2024, with serious efficiency improvements.
>
> Nvidia has a couple of other options if they don't want to build a ground-up architecture like AMD did with RDNA. They can continue to work with the Lovelace GPU and tweak the core layout to favour ray tracing as more games move over to RT. Or they could move to chiplets: keep the underlying Lovelace core but split it into small chiplets, allowing more cores and more efficiency.

Chiplets are not efficient; they're just supposed to be cheaper to produce and to provide a path for scalability that's independent of process technology.

Signals that leave the chip are an order of magnitude more expensive than signals handled within a single chip.

On the efficiency front you've got technologies like DLSS, which is 4-5x as efficient as rasterization.


The biggest factor for me is that most PSUs at 1kW or above are really overpriced. I bought a 1kW back in 2010 for just 135 quid and it was no basic unit.

It was the SST-1000P from Silverstone, and it now powers a powerful amp and subwoofer. Almost 13 years on, it has only needed one fan replacement.

The one I have now cost almost the same as the 1kW did.

MSI MPG A850GF UK PSU 850W, 80 Plus Gold certified, Fully Modular


Strange, I paid £240 for a high-quality 1kW PSU back in 2007 (Enermax Galaxy). See below.

Which model PSU did you go for?


Nvidia need a Kepler > Maxwell moment. As you say, a huge increase in efficiency is required to keep driving performance increases forward.

Next gen they can't just increase TDP to 900W; it's not practical, unless they start selling whole PC units, with the PSU, GPU, CPU etc. all Nvidia-controlled and integrated into a shiny big box. I don't think even Nvidia would get away with that for the 5000 series (it would be ~£8k), but maybe for the 6000 series!

So they have to go the new architecture/efficiency route, which is extremely difficult. If they pull it off, AMD will be completely removed from the dGPU market at that point, IMO.


> They can't just keep increasing power usage every gen; it has to give at some point.

They can and they will! All the power!

Associate

- Joined: 30 Aug 2022
- Posts: 897
- Location: Earth

Doesn't fit inside one of the most popular PC gaming cases.

Massive fail.

It's as bad as a new terminal at an international airport being too small for the most popular planes used on international flights.


Blackwell

That'll be getting cancelled.

The 4080 16GB isn't that much faster than a 3090 Ti in raster, which in itself is around 25% faster than a 3080, so it'll more than likely average around 40-45% faster.

Soldato

- Joined: 28 Sep 2014
- Posts: 3,774
- Location: Scotland

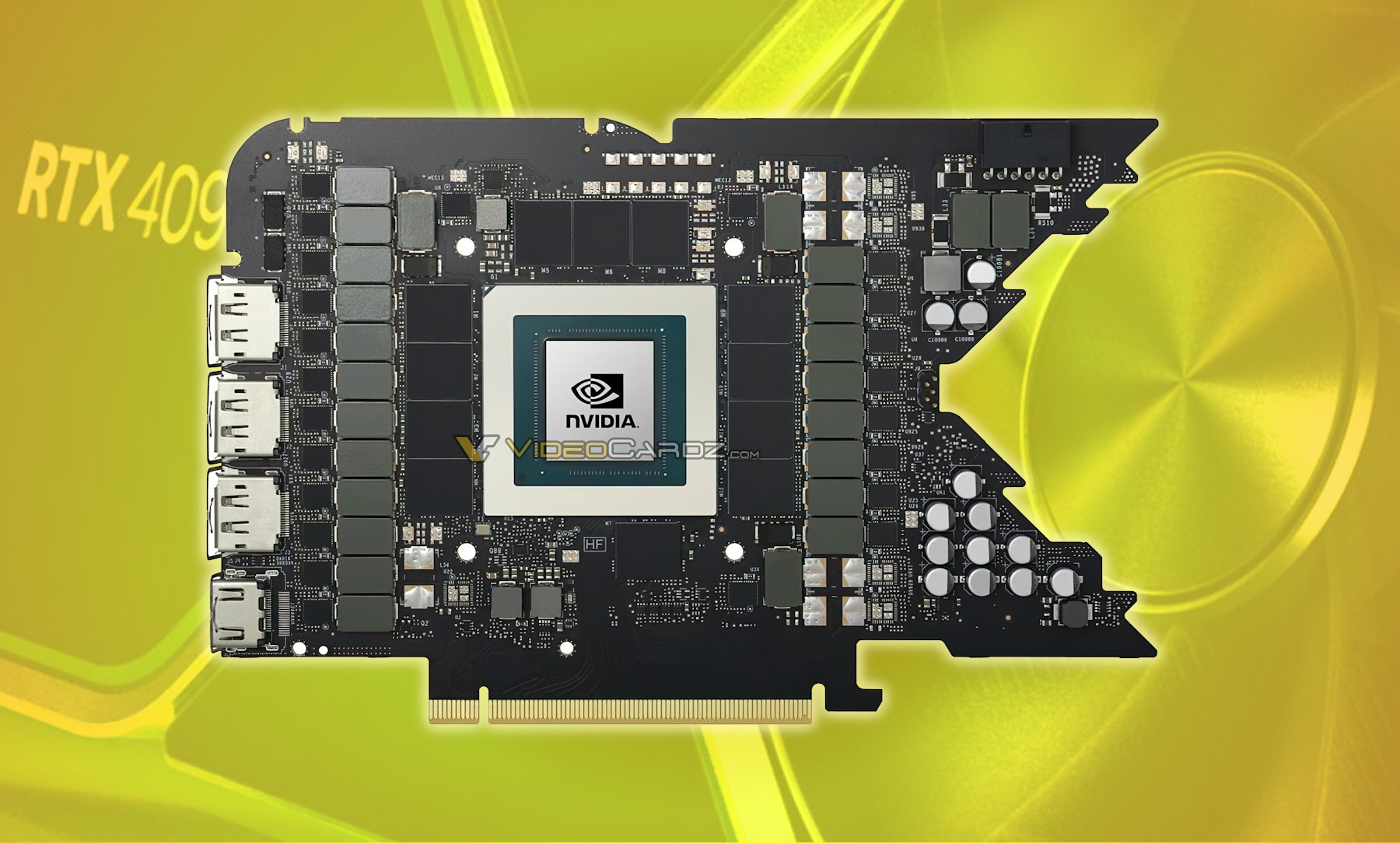

NVIDIA GeForce RTX 4090 PCB shows minor changes from RTX 3090 Ti - VideoCardz.com

RTX 4090 PCB looks a lot like RTX 3090 Ti. We have an official picture of the RTX 4090 Ti board design. Up to 28 phases can be added to AD102 Founders Edition.

Associate

- Joined: 24 Sep 2020
- Posts: 342

Mate, the benchmarks have already leaked and are out there. It's 50-60%, just as in previous gens. I have no idea why you're comparing it to the 3090 Ti to try to make a point. Thing is, we agree: the 4080 is terrible value for money, NVIDIA should be ashamed and no one should buy one. We don't need to make up low figures for performance gains to back that up.

Also, stacking one percentage gain on top of another, the way you're calculating, compounds, which is why your sums aren't right. Once you add 25% to a quantity, adding another 25% does not give +50% overall, because the second 25% is 25% of 125%, i.e. another 31.25% of the original, for +56.25% in total. That's compounding.
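The compounding point is easy to check with a few lines of arithmetic. This is a minimal Python sketch; the helper names `compound` and `naive_sum` are made up for illustration, and the 25% and 20% inputs are just the figures quoted in this exchange (25% for the 3090 Ti over the 3080, 20% for the 4080 over the 3090 Ti as cited from Nvidia's charts), not benchmark data.

```python
def compound(*gains_pct: float) -> float:
    """Apply successive percentage gains multiplicatively; return the overall gain in %."""
    factor = 1.0
    for gain in gains_pct:
        factor *= 1 + gain / 100  # each step is a percentage of the *new* baseline
    return (factor - 1) * 100


def naive_sum(*gains_pct: float) -> float:
    """The additive shortcut, which ignores compounding."""
    return float(sum(gains_pct))


if __name__ == "__main__":
    # Two successive +25% steps: 1.25 * 1.25 = 1.5625
    print(f"+25% then +25%: naive {naive_sum(25, 25):.2f}%, compounded {compound(25, 25):.2f}%")
    # 3090 Ti ~25% over the 3080, then the 4080 ~20% over the 3090 Ti (figures from the thread)
    print(f"+25% then +20%: naive {naive_sum(25, 20):.2f}%, compounded {compound(25, 20):.2f}%")
```

Run as a script, it prints 50.00% vs 56.25% for the first case and 45.00% vs 50.00% for the second, so with these inputs the additive shortcut understates the chained gain by about five points.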


I'm going by Nvidia's own benchmarks, which show it as an average of 20% faster than a 3090 Ti.

> When are the tech guys getting to test these 4090s, just before the release?

Tomorrow for FE, I think, although they all seem to have AIB cards as well.


They've had them for a few weeks, did some unboxing videos last week and memed at the size of them, their reviews will drop when the embargo lifts.

If that's Mike Benchmark, then it's complete ********; they did the same before the 3000 series release.

All fake.

Where are these benches?

Associate

- Joined: 24 Sep 2020
- Posts: 342

The 3090 Ti is on average 32% faster than the 3080 at rasterisation: https://gpu.userbenchmark.com/Compare/Nvidia-RTX-3090-Ti-vs-Nvidia-RTX-3080/

If the 4080 is another 20% on top of that, as you yourself state, that's about a 58% total gain over the 3080 (including compounding, because you're doing it in a weird way). So you agree with me. You just want to frame those numbers in a more negative-looking way because you're rightly upset by the pricing. As I said, we don't need to exaggerate. It's unhelpful.
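The same check for the figures in this post: 32% (3090 Ti over 3080, per the UserBenchmark link) followed by 20% (4080 over 3090 Ti, per Nvidia's charts as cited in the thread). A minimal Python sketch; the function name `chained_gain` is hypothetical and the inputs are only the two numbers quoted here.

```python
def chained_gain(first: float, second: float) -> tuple[float, float]:
    """Return (compounded, additive) overall gain for two successive fractional gains."""
    compounded = (1 + first) * (1 + second) - 1  # 1.32 * 1.20 - 1
    additive = first + second                    # the shortcut that ignores compounding
    return compounded, additive


if __name__ == "__main__":
    # 3090 Ti over 3080 (UserBenchmark figure), then 4080 over 3090 Ti (Nvidia's charts)
    compounded, additive = chained_gain(0.32, 0.20)
    print(f"compounded: {compounded:.1%}, additive: {additive:.1%}")
```

It prints 58.4% compounded versus 52.0% additive, so the chained figure sits inside the 50-60% range from the leaked benchmarks.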

NVIDIA GeForce RTX 4080 16 GB 3DMark Benchmark Leaks Out, Up To 62% Faster Than RTX 3080

An alleged NVIDIA GeForce RTX 4080 16 GB graphics card benchmark within 3DMark has leaked out and shows up to 60% gain over the RTX 3080.


I'm a bit confused. Sticking 135 in the inflation calculator gives £192. OCUK are currently selling a Plat 1000W Silverstone for £195?

Is it the difference between 1000W and 750-ish? I picked up an EVGA 750W Gold for £69 last year. That's the lowest price I've ever paid for a new PSU.


Associate

- Joined: 24 Sep 2020
- Posts: 342

BTW, just seen another retailer (naming no names) put up some early pricing on the ROG Strix 4090 at £2,269. Hahahahahahahaha! Yeah, good luck with that. Best set aside a nice permanent spot in your warehouse for those to gather dust.
