Fixing the symptom, not the cause.

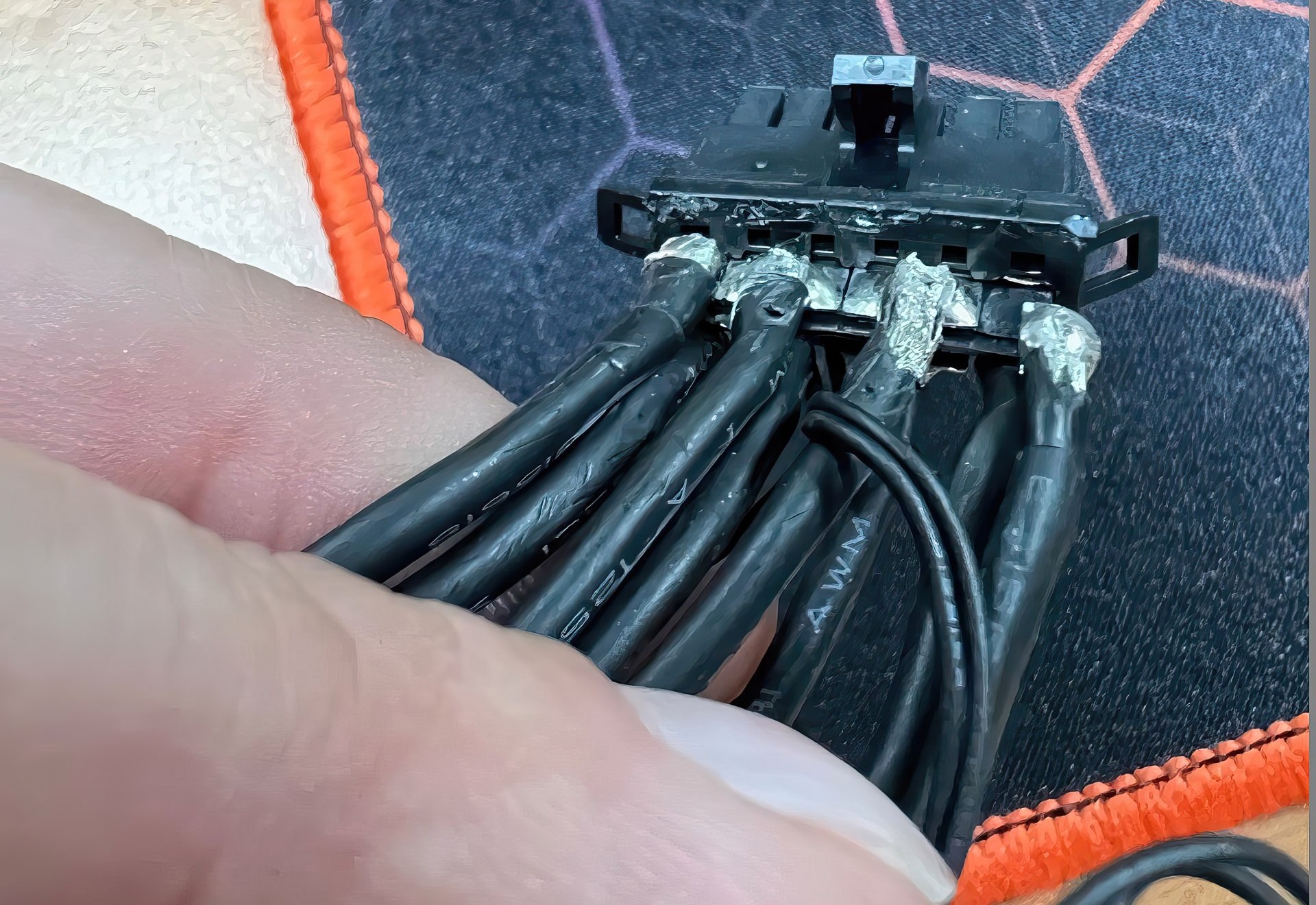

The excessive heat that leads to melting is caused by poor electrical contact. The solution is to fix the contact, not just to dissipate the extra heat.

Everything points to a bend in the cable causing some of the pins to be partially retracted in the connector. That reduces the contact area, which increases resistance and therefore produces more heat.
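To put rough numbers on the resistance-to-heat link, here's a minimal sketch of P = I^2 * R at a single pin. The figures are assumptions for illustration only: a 600 W load on 12 V shared evenly across 6 supply pins, and two made-up contact resistances for a well-seated pin versus a partially retracted one. It also holds the current fixed, ignoring how current would redistribute between pins in a real connector.

```python
# Sketch of contact heating: P = I^2 * R.
# ASSUMPTIONS (hypothetical, illustration only): 600 W at 12 V, current shared
# evenly across 6 supply pins, and the contact resistances chosen below.

SUPPLY_VOLTAGE = 12.0  # volts
LOAD_POWER = 600.0     # watts (the "600w if needed" case)
SUPPLY_PINS = 6        # assumed number of current-carrying 12 V pins

def pin_current(power_w, voltage_v, pins):
    """Current per pin if the load is shared evenly: I = P / V / n."""
    return power_w / voltage_v / pins

def contact_heat(current_a, resistance_ohm):
    """Heat dissipated at one contact: P = I^2 * R."""
    return current_a ** 2 * resistance_ohm

i = pin_current(LOAD_POWER, SUPPLY_VOLTAGE, SUPPLY_PINS)  # roughly 8.33 A per pin

# Hypothetical contact resistances (not measured values).
GOOD_CONTACT = 0.002  # ohms, fully seated pin (assumed)
POOR_CONTACT = 0.010  # ohms, partially retracted pin (assumed)

good = contact_heat(i, GOOD_CONTACT)
poor = contact_heat(i, POOR_CONTACT)

print(f"per-pin current: {i:.2f} A")
print(f"good contact: {good:.3f} W, poor contact: {poor:.3f} W")
```

At a fixed current the heat scales directly with resistance, so in this made-up example a contact with 5x the resistance dumps 5x the heat, and it does so in a smaller contact patch. That's why dissipating the heat only treats the symptom.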

The solution for now is to keep the cable as straight as possible, even if that means running without a side panel with the cable poking out the side, until you can get hold of a better cable or something like CableMod's forthcoming right-angle adapter.

I'm willing to bet that, after a suitable period of claiming there's no issue, NVidia will be forced to provide updated cables and/or adapters to solve this.

It's so much nicer having a single cable to deal with and getting a nice clean run to the PSU at the back, where it terminates in 3 x 8-pin (600 W if needed).