Perhaps because they overcharged MSRP for last gen, https://youtu.be/VFHOZN5AV6E?t=1377

Yet AMD managed to either cut or keep the same prices for its CPUs built on 5nm


It's pretty nuts watching him rant about that card at the time, $380. Oof....

It's £210 now, a really good card for that.

Sapphire Radeon RX 6600 Pulse Gaming 8GB GDDR6 PCI-Express Graphics Card


RTX 3060, £300... still oof.

Gainward GeForce RTX 3060 Ghost LHR 12GB GDDR6 PCI-Express Graphics Card


12GB though

I mean, sure, it has that going for it, but it's not a £300 card, and it's a shame Nvidia didn't do that for GPUs higher up with more grunt.

Capped rate for my home in the UK. For the USA since 1st April, "The new rate for residential accounts will be 11.71 cents per kilowatt-hour."

Don't forget that since the MSRP of the 3070 was announced, not only is the smaller node more expensive, but TSMC announced a 10 to 20% price increase. Substrate prices went up, other component prices went up, even the price of the cardboard box went up. When the selling price goes up, the VAT goes up too, so if the pre-VAT selling price goes up by £50 you have to add an extra £10 for VAT.
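The VAT point above is simple arithmetic, and a minimal Python sketch makes it concrete. It assumes the standard UK VAT rate of 20%, which is what the £50 to £10 figure implies; the function names are made up for illustration.

```python
# Assumption: 20% VAT, the rate implied by "£50 more means £10 more VAT".
UK_VAT_RATE = 0.20


def extra_vat(pre_vat_increase: float, vat_rate: float = UK_VAT_RATE) -> float:
    """Extra VAT the buyer pays when the pre-VAT price rises by pre_vat_increase."""
    return pre_vat_increase * vat_rate


def shelf_price_increase(pre_vat_increase: float, vat_rate: float = UK_VAT_RATE) -> float:
    """Total rise in the price the buyer sees, VAT included."""
    return pre_vat_increase + extra_vat(pre_vat_increase, vat_rate)


if __name__ == "__main__":
    rise = 50.0  # pounds, the figure used in the post
    print(f"Extra VAT: £{extra_vat(rise):.2f}")
    print(f"Shelf price rise: £{shelf_price_increase(rise):.2f}")
```

Under that assumption, a £50 rise before VAT shows up as about £60 on the shelf.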

We heard that companies such as Nvidia were booking long-term supply contracts to ensure supply of components to make GPUs, so are they locked into higher prices? Also, it has been claimed that they booked more capacity at TSMC than they are likely to need, so is the cost of any unused capacity figured into the price? Gamers shouldn't have to pay extra for GPUs because Nvidia signed expensive contracts that it no longer needs for components that are now readily available, but is this factored into the BOM cost for the 4000 series? My experience in business is that it would be, if it has occurred.

There is no need to buy at launch; wait and see if they go down in price, e.g. at EOL. Remember Jay2cent getting excited about price reductions on GPUs in the Nvidia shop, lol: https://youtu.be/p79H_XOwpZo?t=61

The extra 4GB isn't free, neither is the node shrink so of course the 4070 costs more than the 3070, even before inflation.

As I have said previously when going to a newer node Nvidia has to decide how much of the benefit goes on performance increase and how much on reduced power consumption. Many people on here complain that they wanted more performance than power savings, however, that ignores growing pressure on Nvidia to reduce power usage, eg as above, https://www.pcworld.com/article/394956/why-california-isnt-banning-gaming-pcs-yet.html

Is the fact that the 4070 isn't selling well because people have checked out reviews on its performance and decided that it doesn't give enough of an upgrade on the Nvidia 3000/AMD 6000 series that they bought?

I agree with you that the 4070 isn't a good performance increase over the 3000/6000 series for the price. However, the energy reduction compared to the 3080 is significant and lowers the cost of ownership over the card's life; "the more you play, the more you save" should be the new Nvidia phrase. If AMD get FSR3 working on my 6800 then the 4070 could be seen as a downgrade because of the reduced VRAM.

I use Radeon Chill to limit power usage on the 6800, plus the power slider. There is a limit to how far you can get the card down, though; e.g. just compare the VRAM: 16GB on the 6800 takes more power than just 4GB on the 6500xt.

Taken from the review of the 2060 12GB by Techpowerup, "Compared to the RTX 2060, non-gaming power consumption is increased because the extra memory draws additional power." https://www.techpowerup.com/review/nvidia-geforce-rtx-2060-12-gb/35.html

Even the 6500xt I frame cap in less demanding games. I get that many, perhaps most, people wish that the 4070 had used the same amount of power as the 3080 and significantly improved performance, however, as I have shown above there is growing pressure on Nvidia and other computer hardware companies to limit the power usage of computers, eg by the state of California.

Not only does a 300 watt gpu cost electricity to run but it costs money for the air conditioning to remove that heat in some parts of the world, such as my home in Missouri, USA
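The running-cost argument can be put into numbers with a rough Python sketch. It uses the 11.71 cents per kilowatt-hour rate quoted earlier and the 300 watt figure from the post; the 200 watt comparison card and the hours played are hypothetical, and air conditioning is not modelled.

```python
RATE_USD_PER_KWH = 0.1171  # the residential rate quoted earlier in the thread


def energy_cost(watts: float, hours: float, rate_per_kwh: float = RATE_USD_PER_KWH) -> float:
    """Cost of running a load of `watts` for `hours` at `rate_per_kwh`."""
    kwh = watts / 1000.0 * hours
    return kwh * rate_per_kwh


def saving(high_watts: float, low_watts: float, hours: float,
           rate_per_kwh: float = RATE_USD_PER_KWH) -> float:
    """Money saved by running the lower-power card for the same number of hours."""
    return energy_cost(high_watts - low_watts, hours, rate_per_kwh)


if __name__ == "__main__":
    hours_played = 1000.0  # hypothetical: total gaming hours
    # 300 W is the figure from the post; 200 W is a hypothetical lower-power card.
    print(f"Saving: ${saving(300.0, 200.0, hours_played):.2f}")
```

With those inputs the difference is 100 kWh, or about $11.71 at that rate; plug in your own tariff and hours to see how much it matters for you.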

If people don't think that the 4070 is acceptable over the 3070 I can't wait to read what they think about the 4060ti/4060.

Sorry, how much are you paid?, I see you make more excuses for Nvidia than Jensen has leather jackets!! LOL

But seriously, this company is raking in 60% margins. Their actions are for greed only; they are not satisfied with "profit", they need mega growth to prop up their bloated share price due to covid and crypto mining, and now they expect the general public, the typical low-spec PC gamer, to make sure they don't lose investors.....

We do not care about Nvidia profits. Make products that delight your customers with solid performance & value for money, or GTFO and just stop making GPUs, because you're just wasting my time. Yes, I am talking to you, Nvidia. And AMD, stop watching what big daddy does before you make a move: either make a solid product with solid value for money or you can do one too! It's an utter joke that your 7800xt might be slower than a 4070ti; it just goes to show what the 7900xt really is and should have been. Urine takers....both of them.

Ever heard of "don't attack the person, challenge the action"? The 4070 offers around 3080 performance, indeed better than it if using DLSS3, and the 3080 is limited by its 10GB of VRAM. It does so while using much less power.


VRAM prices have crashed. Components have gone down in price since the pandemic - companies in other areas have even said so. The PCB is much simpler and so is the cooler. Stop reading Nvidia marketing.

If people care so much about cost, then maybe people shouldn't ever moan about energy prices or drug prices at all. Big Oil and Big Pharma have costs too.

I thought that my 6500xt is basically a laptop GPU, it uses 2 watts at idle!

Which again is OK for people who have no clue. PC gamers who have a clue can drop power a huge amount. I had SFF PCs for over 15 years, so no, I don't agree that selling lower-end trash for high prices makes sense.

The RTX 4070 is just over 40% faster than an RTX 3060 Ti for 60% more money. The RTX 3060 Ti was 40% faster than an RTX 2060 Super for similar money.
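To see what those percentages mean for value, here is a small Python sketch. It treats "just over 40%" as exactly 40% and "similar money" as a 0% price change, both simplifications, and the function name is made up for illustration.

```python
def relative_value(perf_gain: float, price_gain: float) -> float:
    """Performance per unit of money of the new card relative to the old one.

    Gains are fractions (0.40 means 40%). A result of 1.0 means the same value
    for money, below 1.0 means worse, above 1.0 means better.
    """
    return (1.0 + perf_gain) / (1.0 + price_gain)


if __name__ == "__main__":
    # 4070 vs 3060 Ti: about 40% faster for 60% more money.
    print(f"4070 vs 3060 Ti: {relative_value(0.40, 0.60):.3f}")
    # 3060 Ti vs 2060 Super: 40% faster for similar money (assumed 0% change).
    print(f"3060 Ti vs 2060 Super: {relative_value(0.40, 0.00):.3f}")
```

On those figures the 4070 gives about 0.875 times the performance per pound of the 3060 Ti, whereas the 3060 Ti gave about 1.4 times that of the 2060 Super.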

This marketing nonsense about saving money on power existed years ago. People were spending £200 on GTX 960 cards because the R9 290, which destroyed it in performance, would cost more in power, and these people had full-sized tower cases, not even SFF PCs. Yet those same people just had to upgrade sooner, so how is this saving money?

So all this spin about saving money is secondary to performance. If people care so much about power, an Xbox Series S sips power: DF measured 84W at the wall under full load.

The Xbox Series X consumes between 150W and 170W. Laptops destroy most desktops on power efficiency too. The best binned consumer parts go to laptops.

Owning a desktop and large PC monitors is not an indication of really wanting to save power.

Why is it making excuses for Nvidia to point out that US states like California are trying to reduce the power usage of computer parts like GPUs? Why is it not a good thing? https://www.makeuseof.com/why-the-california-ban-on-power-hogging-pcs-is-a-good-thing/

An example of anti-consumerists. PCMR and gamers in general are some of the weakest-willed, most easily manipulated consumers in the world. I thought Apple uberfans were bad, but Apple hardware sales have crashed, showing they have had enough.

Apple has much lower GAAP gross margins than Nvidia. Soon people will be defending Big Pharma and Big Oil profits on here. It is Big Oil who are doing the same as Nvidia and jacking up prices because they can, and taking advantage of the war.

Yet PCMR, who complain about energy prices, now make excuses for tech companies who are doing the same. You can't even make this cognitive dissonance up.

If you wanted to spend that little bit more on the 4070.

Colorful $829 RTX 4070 Neptune is now available, the only liquid-cooled RTX 4070 - VideoCardz.com

Neptune makes a splash with a high price tag. Colorful GPU is only water-cooled RTX 4070 on the market. Japanese GDM that the iGame RTX 4070 Neptune OC is now available. This is a high-end SKU based on the most powerful PCB Colorful designed for the RTX 4070 GPU. The iGame Neptune is a... (videocardz.com)

Magic 8 Ball says "don't count on it".

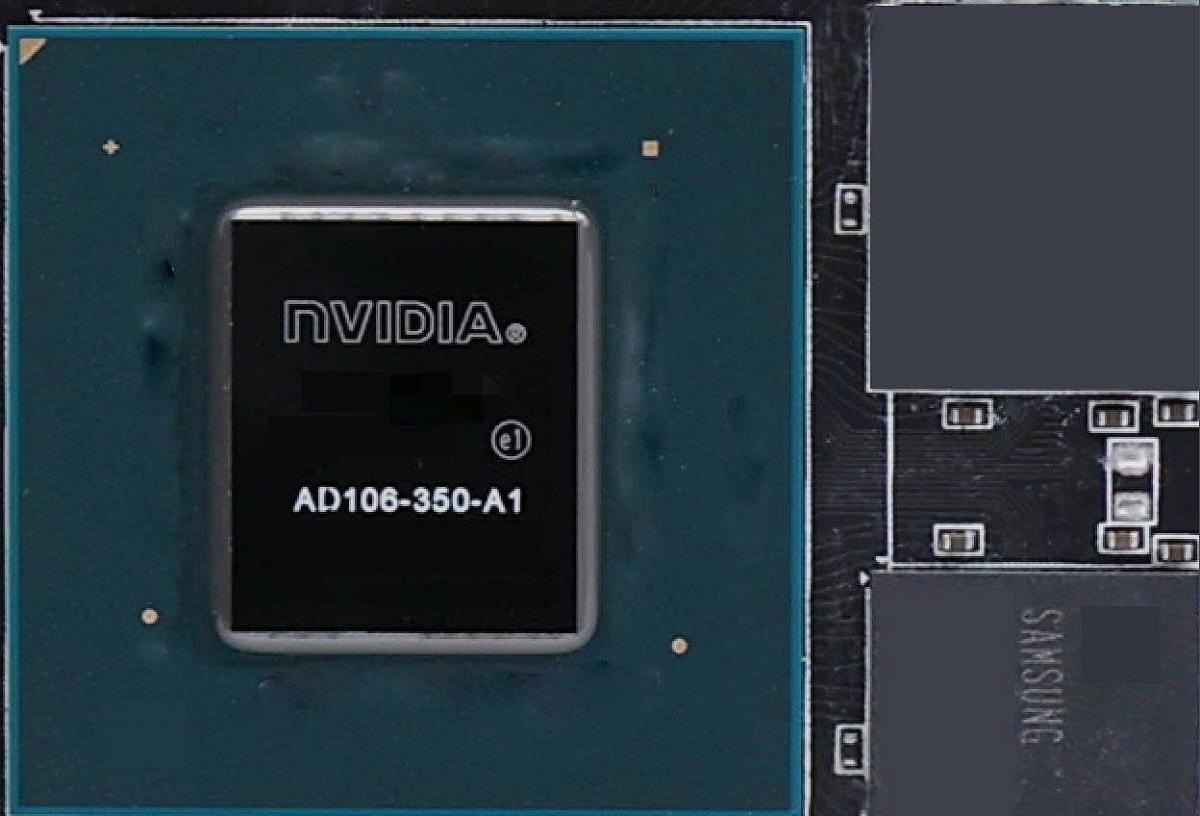

4080 used AD103, which was also used in the 4090 Laptop with 16GB

4070Ti/4070 used AD104, which was also used in the 4080 Laptop with 12GB

So I imagine the 4060 will use AD106, which is the 4070 Laptop part that currently has 8GB on a 128-bit bus

GeForce 40 series - Wikipedia

en.wikipedia.org

Edit:

6750XT is the ~£400 12GB option now

So anyone on a 2000 series who skipped the 3080 and buys a 4070 is getting IMO a better product at a cheaper price. Some people might have wanted more fps from the 4070 over the 3080, which dlss3 will give them in games like cyberpunk, and others might welcome the lower power usage.

Bumping for posterity, now that news articles are cropping up with this

4060Ti also doesn't even look likely to get the full quota of AD106's shaders

GeForce RTX 4060 Ti GPU pictured

The competition for the mid-range GPU market is heating up, with AMD and NVIDIA both preparing to launch new graphics cards based on the RDNA3 and Ada Lovelace architectures. ... (www.guru3d.com)

It's only a slightly better product, is the point. It's not great progress from gen to gen. You are right that it's a better product than the 3080, for slightly less money, but that is performance that could be had over 2 years ago.

People want progress at all levels in the hierarchy not the holding back of performance in order to push people into the more expensive models.

It is good progress, just more on wattage than fps. Many people might not like that much of the progress comes by way of power consumption rather than fps, but in terms of frames per watt it is good progress, and that is the direction states like California are pushing computer hardware companies, as they are banning PCs that use too much energy according to certain criteria.

Your idea of progress, i.e. fps, differs from mine, fps per watt. There will be people who agree with your view and people who welcome the progress on performance per watt rather than fps. This review recognises the progress on power consumption: https://youtu.be/DNX6fSeYYT8?t=1106

Progress on watts per frame is still progress
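The difference between the two yardsticks, raw fps and fps per watt, can be shown with a short Python sketch. The frame rates and wattages below are hypothetical placeholders, not benchmark results; they only mirror the "same performance, less power" scenario discussed in the thread.

```python
def fps_per_watt(fps: float, watts: float) -> float:
    """Frames per second delivered for each watt drawn."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return fps / watts


def efficiency_gain(old_fps: float, old_watts: float,
                    new_fps: float, new_watts: float) -> float:
    """Fractional improvement in fps per watt of the new card over the old one."""
    return fps_per_watt(new_fps, new_watts) / fps_per_watt(old_fps, old_watts) - 1.0


if __name__ == "__main__":
    # Hypothetical numbers: same frame rate, lower power draw.
    old_fps, old_watts = 100.0, 300.0
    new_fps, new_watts = 100.0, 200.0
    print(f"fps change: {new_fps / old_fps - 1.0:+.0%}")
    print(f"fps per watt change: {efficiency_gain(old_fps, old_watts, new_fps, new_watts):+.0%}")
```

With these placeholder inputs the fps change is zero while fps per watt improves by about half, which is why the two sides of the argument can look at the same card and disagree about whether it is progress.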

This is generally what happens when a new gen comes out, but instead of a £600 70-class card offering previous-gen 80 performance, it's the £300-400 60 class which matches or beats the previous-gen 80 while using a lot less power.

We are not carbon neutral in the UK, so any reduction in energy usage is kinder to the environment, kinder on budget gamers' wallets and reduces the UK's need to import energy.
