Bumping for posterity, now that news articles are cropping up about this.

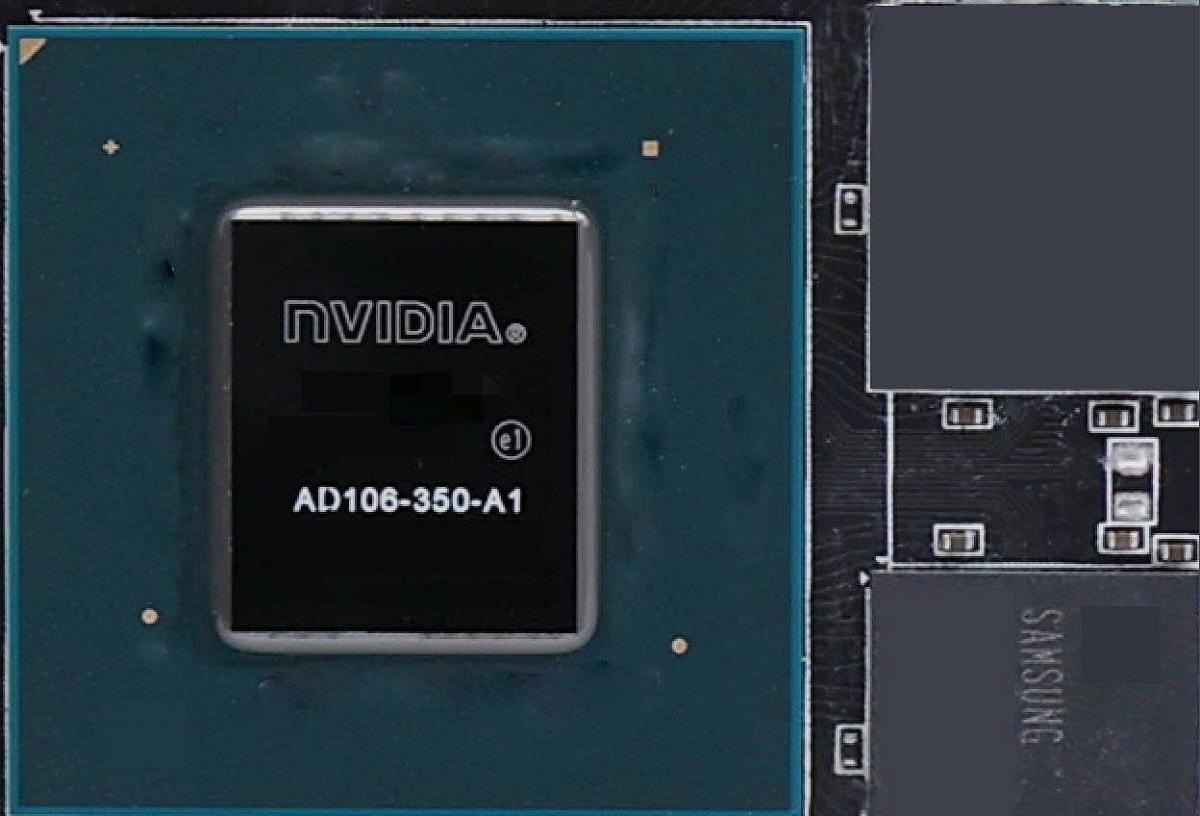

The 4060 Ti also doesn't even look likely to get the full quota of AD106's shaders.

The competition for the mid-range GPU market is heating up, with AMD and NVIDIA both preparing to launch new graphics cards based on the RDNA3 and Ada Lovelace architectures. ... (www.guru3d.com)

The more you pay the more you are gimped!

Meh... so long as it's PCIe 4.0, that's fine for a card like that.

It has 10% fewer shaders and 45% less memory bandwidth. I know it says 22 TFLOPS for the 4060 Ti vs 16.2 TFLOPS for the 3060 Ti, but that's a massive loss in memory bandwidth which the extra FP32 throughput just doesn't make up for. In reality, IMO, this is barely quicker than the card it's replacing, and sometimes it might even lose. I hope reviewers find where it does and ridicule it.
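To put the bandwidth argument in numbers: a rough sketch that takes the figures quoted above at face value (22 vs 16.2 TFLOPS, and the claimed 45% bandwidth cut, which is the poster's figure and not a confirmed spec) and works out how much memory bandwidth each card has per unit of FP32 throughput. It ignores cache size, which Ada leans on heavily, so treat it as an illustration of the ratio rather than a performance prediction.

```python
def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new (negative means a reduction)."""
    return (new / old - 1.0) * 100.0

# Figures quoted in the post (rumoured 4060 Ti vs 3060 Ti), not confirmed specs.
TFLOPS_4060TI = 22.0
TFLOPS_3060TI = 16.2
BANDWIDTH_RATIO = 1.0 - 0.45  # "45% less memory bandwidth", as claimed in the post

# How much more FP32 throughput the new card has, as a ratio.
compute_ratio = TFLOPS_4060TI / TFLOPS_3060TI
# Memory bandwidth available per unit of FP32 throughput, relative to the 3060 Ti.
bandwidth_per_flop_ratio = BANDWIDTH_RATIO / compute_ratio

print(f"FP32 throughput: {pct_change(TFLOPS_4060TI, TFLOPS_3060TI):+.1f}%")
print(f"Bandwidth per FLOP: {(bandwidth_per_flop_ratio - 1.0) * 100.0:+.1f}%")
```

On those assumptions the 4060 Ti gets roughly 36% more FP32 throughput but only about 40% of the 3060 Ti's bandwidth per FLOP, which is why the headline TFLOPS figure can overstate real-world gains in bandwidth-bound games.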

Most people are still on PCIe 3.0 systems, though. The RX 6600 XT, which uses a PCIe 4.0 x8 link, could in some circumstances lose a decent amount of performance on those systems.
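The reason PCIe 3.0 matters for narrow-link cards is simple arithmetic: each generation doubles the per-lane transfer rate, so an x8 card in a PCIe 3.0 slot gets half the link bandwidth it was designed around. A minimal sketch, assuming the standard 8 GT/s (3.0) and 16 GT/s (4.0) rates with 128b/130b encoding and ignoring protocol overhead (so real throughput is somewhat lower). The x8 width is used here for illustration based on the RX 6600 XT; the thread does not state the 4060 Ti's lane count.

```python
# Per-lane transfer rates in GT/s; both PCIe 3.0 and 4.0 use 128b/130b encoding.
TRANSFER_RATE_GT_S = {"3.0": 8.0, "4.0": 16.0}

def link_bandwidth_gb_s(gen: str, lanes: int) -> float:
    """Approximate bandwidth per direction in GB/s (ignores protocol overhead)."""
    gt_s = TRANSFER_RATE_GT_S[gen]
    # Multiply by lanes, strip encoding overhead, then convert bits to bytes.
    return gt_s * lanes * (128 / 130) / 8

for gen, lanes in [("4.0", 8), ("3.0", 8), ("3.0", 16)]:
    print(f"PCIe {gen} x{lanes}: {link_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

A PCIe 4.0 x8 link matches a PCIe 3.0 x16 link (about 15.75 GB/s each way), but drop the same x8 card into a 3.0 board and it falls to roughly 7.88 GB/s, which hurts most once the card runs short of VRAM and has to stream data over the bus.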

This is an even faster dGPU with only 8GB of VRAM, so there could be many instances where the card runs out of VRAM before it runs out of grunt.

Except for the 4090. It's the only one worth buying, just to get the frontier performance. Everyone else should hold off if they can.

To a degree. But in terms of percentage of the full die, the RTX 4090 uses 89% of the full chip, the RTX 3080 81% and the RTX 3090 98%.
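Those percentages can be checked from shader counts. A small sketch, assuming the publicly listed CUDA core counts (these numbers are not in the post): full AD102 18432, full GA102 10752, RTX 4090 16384, original 10GB RTX 3080 8704, RTX 3090 10496. It only counts shaders, not memory bus width or cache, so it is one way of measuring "how cut down" a card is rather than the only one.

```python
# Publicly listed CUDA core counts (assumed spec figures, not from the post).
FULL_DIE_CORES = {"AD102": 18432, "GA102": 10752}
CARDS = {
    "RTX 4090": ("AD102", 16384),
    "RTX 3080": ("GA102", 8704),  # original 10GB model
    "RTX 3090": ("GA102", 10496),
}

def die_utilisation(card: str) -> float:
    """Share of the full die's shaders enabled on a card, as a percentage."""
    die, cores = CARDS[card]
    return cores / FULL_DIE_CORES[die] * 100.0

for name in CARDS:
    print(f"{name}: {die_utilisation(name):.0f}% of {CARDS[name][0]}")
```

Rounded to whole percentages this gives 89%, 81% and 98%, matching the figures above.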

The following article sums up why I do not think Nvidia is that bothered by low consumer GPU sales.

On a day that saw the second-largest bank failure in US history, NVIDIA shares reached a fresh 1-year high as the AI mania continues. (wccftech.com)

It's another craze, like crypto mining, that has hurt the ordinary gamer.

The problem with market capitalisation is that it's another speculative metric, based on predicted revenue, and hype can push share prices up. The problem is that if actual revenue misses the mark by even a few percent, it will all go down the drain. Just look at what happened to Intel.

The tech market is hitting serious headwinds, so all the speculators are piling into AI, but the issue is that so is EVERY large tech company. The excessive amount of money printing has led to insane amounts of money being pushed around the tech sector; this is what you need to blame for all of this. It was some of this money which enabled the low interest rates that let people borrow to buy consumer goods. How interesting that the moment interest rates start going up, we suddenly see collapsing sales everywhere.

Now Quantitative Tightening is slowly becoming a thing, along with rising interest rates. So over the next few years, as the money tap gets turned down, it will be a double whammy for the tech sector:

1.) Consumers can borrow less to fund the massive increases in purchase prices

2.) Companies will find it harder to borrow

Things like the CHIPS Act might still prolong the availability of cheap credit, but even that is targeted at specific areas.

The tech sector has dined too long on cheap credit.