The RT Related Games, Benchmarks, Software, Etc Thread.

- Thread starter Kaapstad


- Status

- Not open for further replies.


Portal with RTX Review - Amazing Raytracing

Valve has teamed up with NVIDIA to remaster their smash-hit Portal with ray tracing. Unlike most other titles out there, which combine rasterization and ray tracing, Portal with RTX is fully path traced, which enables astonishing realism, but also comes with a huge performance hit.

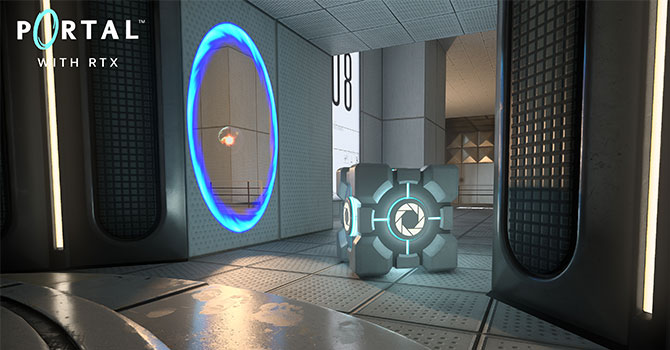

If you take a look at our comparison screenshots, it becomes apparent what a monumental improvement the new version of the game is. The lighting especially looks incredible now. While in the original Portal you could easily spot incorrect and unrealistic lighting and shadows, things look completely different in the RTX version. Everything looks perfect and physically accurate. I did play through the game trying to find issues, and there are indeed some locations where things don't look right, if you spend time thinking about what "correct" results should look like. This is nothing you'd ever notice during normal gameplay, and I feel it's certainly within the boundaries of what game artists are allowed to do to make things look slightly different for effect.

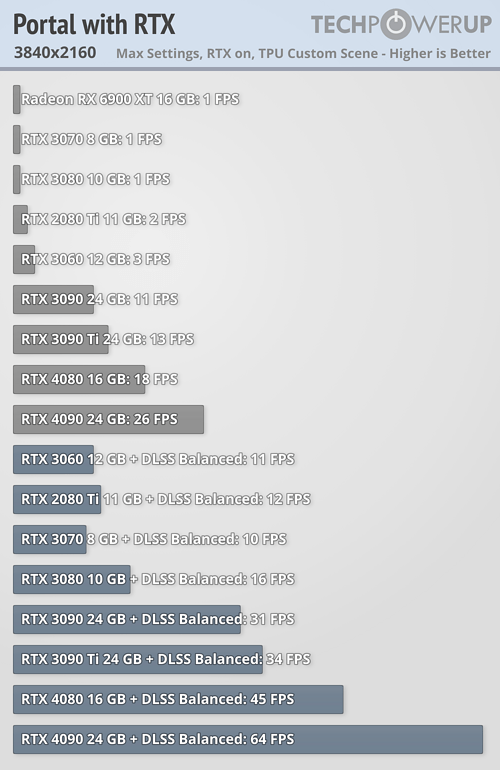

As expected, the performance hit from ray tracing is massive. The original Portal runs at around 245 FPS at any resolution on our test system, no matter the graphics card, because it's purely CPU-limited. With RTX enabled, framerates drop considerably. Even the mighty RTX 3090 Ti only gets 49 FPS at 1080p (!!). NVIDIA's new Ada GPUs do better here: the RTX 4080 gets 67 FPS and the RTX 4090 reaches 93 FPS. Remember, that's at 1080p. Once you dial up the resolution, FPS goes down even more. At 1440p, the 3090 Ti gives you 28 FPS, the 4080 39 FPS, and the 4090 manages slightly below 60, with 56 FPS. At 4K, everything ends up below 20 frames per second; only the 4090 is able to reach 26 FPS. These numbers are hardly "playable" by PCMasterrace standards, which is why it's essential that you use DLSS to cushion the render load. With DLSS enabled, things look much better and Portal is extremely playable. Still, even with DLSS, the requirements are high. For example, the RTX 3070 with DLSS Balanced at 1080p is barely able to break the 60 FPS barrier. The AMD Radeon RX 6900 XT really isn't fit for this level of ray tracing. It starts out with 5 FPS at 1080p and almost runs in "seconds per frame" mode at 4K.
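To put the native-resolution numbers quoted above into relative terms, here is a rough Python sketch. The FPS values are copied from the review text; the names `NATIVE_FPS`, `percent_faster` and `uplift_table` are made up for illustration and are not from TPU.

```python
# Native-resolution (no DLSS) FPS figures quoted in the review text above.
NATIVE_FPS = {
    "1080p": {"RTX 3090 Ti": 49, "RTX 4080": 67, "RTX 4090": 93},
    "1440p": {"RTX 3090 Ti": 28, "RTX 4080": 39, "RTX 4090": 56},
}


def percent_faster(fps, baseline_fps):
    """Return how much faster `fps` is than `baseline_fps`, in percent."""
    return (fps / baseline_fps - 1.0) * 100.0


def uplift_table(resolution, baseline="RTX 3090 Ti"):
    """Percent uplift of every other card over the baseline card."""
    cards = NATIVE_FPS[resolution]
    base = cards[baseline]
    return {
        name: round(percent_faster(fps, base), 1)
        for name, fps in cards.items()
        if name != baseline
    }


if __name__ == "__main__":
    for res in NATIVE_FPS:
        print(res, uplift_table(res))
```

On these figures the 4090 comes out roughly 90% faster than the 3090 Ti at 1080p and exactly twice as fast at 1440p, so the gap widens as resolution goes up.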

During testing I was surprised that several "decent" cards like RTX 3080 and RTX 3070 ended up with just a single frame-per-second at 4K. Digging down further it turns out the VRAM requirements of Portal RTX are really high. We plopped in a 24 GB RTX 4090 and tested various resolutions. At the outdated resolution of 1600x900, the VRAM usage already hits 7.5 GB. At 1080p you need 8 GB VRAM, at 1440p you better have 10 GB, and at 4K the game allocates over 16 GB memory—a new record. Somehow I suspect that the game isn't really optimized for VRAM usage, but this goes to show that future games could end up with serious memory requirements. Enabling DLSS will drastically reduce memory usage though, because it dials down the rendering resolution, so that's always an option.
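As a quick sanity check on those VRAM figures, the sketch below looks up the highest listed resolution a card can hold at native rendering. It assumes the allocation numbers from the paragraph above are hard requirements, which the review itself doubts; `VRAM_ALLOCATED_GB`, `fits_in_vram` and `max_native_resolution` are hypothetical names.

```python
# VRAM allocation per resolution as measured in the review (GB).
# The second field marks "over X GB" figures, treated as exclusive bounds.
VRAM_ALLOCATED_GB = {
    "1600x900": (7.5, False),
    "1080p": (8.0, False),
    "1440p": (10.0, False),
    "4K": (16.0, True),  # review says "over 16 GB"
}


def fits_in_vram(card_vram_gb, resolution):
    """True if the card's VRAM covers the measured allocation."""
    needed, exclusive = VRAM_ALLOCATED_GB[resolution]
    if exclusive:
        return card_vram_gb > needed
    return card_vram_gb >= needed


def max_native_resolution(card_vram_gb):
    """Highest listed resolution that fits, or None if nothing fits."""
    best = None
    # Dicts keep insertion order, so this walks from low to high resolution.
    for resolution in VRAM_ALLOCATED_GB:
        if fits_in_vram(card_vram_gb, resolution):
            best = resolution
    return best


if __name__ == "__main__":
    for vram in (8, 10, 12, 24):
        print(f"{vram} GB -> {max_native_resolution(vram)}")
```

Fitting in VRAM only says the card avoids the 1 FPS cliff; it says nothing about whether the frame rate is playable.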

Overall I have to say I'm very pleased and impressed with how Portal turned out visually. It's also great to see how NVIDIA's various innovations in the RT space come together to unlock additional performance and stunning visuals. On the other hand, the high hardware requirements make it a tough pill to swallow. Portal has been a huge success even with outdated graphics, just like many other titles from Valve. Games are played because they provide entertainment through game mechanics—challenging puzzles in this case. No doubt, stunning and realistic graphics are a big deal in the gaming industry, but I have to wonder.. is it worth spending $1000+ on a graphics card just to play Portal? Is the gaming experience really that much better? That's an answer only you can give.

Looks like the SER efficiency/performance RT improvements for the 40xx, which were talked about in Nvidia's show, are VERY real with DLSS! So essentially any/all future games which implement these optimisations will see the 40xx pull ahead in RT workloads compared to all the non-SER RT titles we have had so far.

Can imagine the CP RT Overdrive mode is also going to be brutal.....

Come on Nvidia, knock another £100 or 2 off the 4090 now


Obviously needs more vram....

Yes, some cards actually do; if you look at the TPU benchmark, at native 4k any GPU with less than 12GB VRAM runs the game at 1fps, yes one frame. So yeah, 2080ti? 1fps. 3070? 1fps. 3080? 1fps. RTX 3060 12GB? 3fps.

Even when DLSS is enabled, performance on the 3090 is 200% higher than the 3080, and the 3060 12GB still runs the game faster than a 3070.

These VRAM problems don't seem to occur at 1440p, only if the output resolution is 4k, and turning DLSS on at 4k doesn't solve the issue; it still needs lots of memory.


Yup because 3090 at native 4k and a whopping 11 fps is so much better and that 1 extra fps of a 3060 12gb over a 3070 is also so much better, right.....

Looking like an issue, which will most likely/hopefully get fixed with a patch, as per TPU comment:

Somehow I suspect that the game isn't really optimized for VRAM usage

Same way rdna 2 will likely see some improvements too as clearly 1-2 fps is not right on a 6900xt

EDIT:

Still don't get why TPU are using a 5800x when it has been shown how much it bottlenecks the 4090 and even the 4080 to some extent.....


Absolutely yes the maths doesn't lie, it's 1000% better, huge

And because TPU are idiots who have yet to come up with a reason for not updating their test pc


Well one could say Unreal Engine 5 games could be next gen. But I get what you mean.

In terms of graphics perhaps. In terms of gameplay features, even physics, things haven't changed much.

Wonder how the AMD 7000 series runs Portal RTX, may reach 20fps

24, for a cinematic experience.

Portal with RTX Review - Amazing Raytracing (www.techpowerup.com)

10GB is not enough…

…anymore

3080 owners when they try to play portal


4090 owners when they look into their wallet

Well that's one less version of Portal to worry about, the 6900 XT can barely do slideshows in PowerPoint with 2 FPS LOL.

Good job we have the original Portal.

I am still impressed with the 6900 XT, completely stomps on the competition in performance for the money, can anyone say 650 quid?

Heck, I have been beating 3090 / Ti owners in The Callisto Protocol with RT on for sheer perf numbers, but the devs really need to fix the CPU bottlenecking with RT on; this happens on everyone's systems.

Unsure of the settings used by TPU. Considering the numbers, my guess is that RT is off, because the 6900 XT was not this fast on the prior patch.


Beaten by a 10gb card

In a game most people don't play anymore, we knew Nvidia were going to release a hype game for the 4090, they need to, we will be back to normal after the mindshare clears though and we will find a healthy split between favoring Green and Red.


All this stuff tells me is to wait another five years before caring about Ray tracing.

When it's done well, RT is really nice. The problem is devs tend to do a half-assed job of it half the time to fill a marketing checkbox.


Or buy GPU with more than 10gb vram


Overthinking if you ask me. So far I have been able to play every RT game just fine. Hell, even the new Portal game will be playable with DLSS with a 3080 Ti from the graphs I saw.

can anyone say 650 quid?

I can say £575 as that’s how much I paid for my 3080 Ti

In a game most people don't play anymore, we knew Nvidia were going to release a hype game for the 4090, they need to, we will be back to normal after the mindshare clears though and we will find a healthy split between favoring Green and Red.

Did Callisto not just come out? Still loads playing it, I would imagine. I mean, that's the graph you used.

I have always wanted a healthy split, but that is not happening any time soon unfortunately. AMD will need to do more to win back that kind of market share.

The benchmark shown by TPU is without RT, and RT cripples performance by half on literally all hardware. The 6900 XT on patch 1.07 is matching non-RT numbers. Both are Ultra by the way; the game's max graphics setting is "High", and they never made that clear in the GPU tests though.

Also, RT bottlenecks the crap out of all GPUs as there is severe CPU overhead.

Or buy GPU with more than 10gb vram

Tell that to Tired9.

As usual with Grim, all the gear no idea

Them 3080s. Portal was played years ago; I mean it may be pretty to look at, but I bet 95% of people who try it won't finish it and will lose interest.
