I'm guessing a freebie. A certain company is giving these away with orders at the moment.

240GB SSD is an interesting choice.


- Status: Not open for further replies.

Soldato

- Joined: 28 Oct 2011
- Posts: 8,595

..Yes, Doctor. Definitely another diagnosed case of 'Copium Copiatus'

..it's spreading, rapidly, it must be airborne, what shall we do?

Could Nexus18 be a super-spreader? He's been looking a bit peaky since his 10GB 3080 aged like milk in Ray-Tracing.

Oh wow just seen the prices and have Nvidia badged the RTX 4070? Yeah this is a hard pass from me. Will see what AMD bring to the table later on.

Could Nexus18 be a super-spreader? He's been looking a bit peaky since his 10GB 3080 aged like milk in Ray-Tracing.

Aged like milk? Yup, damn paying £650 2 years ago for a card that still holds up relatively well compared to £1k+ RDNA 3 in RT.

I agree with you that textures are usually the most important setting when it comes to visual fidelity. But on the other hand, as an owner of the notorious 1060 3GB (as well as the 6GB model), people were going nuts back then about the VRAM as well. And let me tell you, besides PUBG, which didn't run ultra textures without stuttering here and there, every other game was fine. In fact, I changed both cards after a bunch of games (Mafia 3, Watch Dogs 2 and Ghost Recon) were running like complete crap regardless of the textures being used. The raster performance killed the card, not the lack of VRAM.

Just because X game does a terrible job of scaling textures does not mean there are not amazing textures that increase visual fidelity to a massive degree.

The reason people are arguing with you is because you are deluded and will not accept that the 4070ti is not a perfect product. The 4090 is a perfect product and has the price to go with it, with no flaws in its performance at all. The 4070ti has pretty much the same VRAM as a GPU from 5 years ago; times move on and it will be a limiting factor in a lot of cases.

The 4070ti is a better slab of silicon than the 7900xt, there, I have said it and I am willing to make a concession. The Nv card has similar performance whilst using less power, and it does cost less if you buy the right card. That does not make it perfect, so open your eyes and see that it does have some flaws. If you want a GPU with no flaws then Nv has you covered with the 4090.

So yeah, whenever people are talking about VRAM not being enough for future games I'm like yeah, okay, whatever. Sure, the 4070ti doesn't inspire confidence as a native 4K card; I would only buy it for 1440p or DLSS 4K. But then again, because I like RT, the 7900xt isn't a 4K card either despite the massive amount of VRAM.

There is no doubt in my mind that 5 years down the line the XT will be the faster card (at least excluding RT), but are you going to keep it that long?

Yeah it has its place, just overpriced by at least £200. Paying inflated prices will only get us less value for money in future.

THE THREAD TITLE IS:

What do you think of the 4070Ti?

And my answer is I think it fits the BILL perfectly for me!!! Why? The options were: buy an RTX 4070ti for £800, buy an RTX 3080 for £700 or buy an RTX 3090 for £1300. So I purchased an RTX 4070ti and have the same framerate in the games I play at 1440p as I would on an RTX 3090, BUT IF I HAD TO GO BY HALF THE POSTS ON HERE I SHOULD HAVE PAID £500 more for the same framerate and purchased the RTX 3090!!! Give your heads a shake. Please stop bashing the RTX 4070ti, it has its place. A newbie on here might read the verbal diarrhoea written in this thread and spend more money than he needs to!!!

Yeah there's been a steady price creep on top of inflation for a long time but a big jump starting with the RTX cards.

I'm in complete agreement with you, but a lot of you are way too late now. People were raising concerns years ago over the way GPU pricing was going, and all they were met with was mocking: stop being poor, get better jobs, muh inflation, stop complaining. I'll take a guess that a lot of the people who were mocking back then are speaking up now that it's affecting them and they are priced out of the market.

We also had years of fanboys/vendor reps complaining about how one company was the good guy that needed the money to compete with the evil nasty company, and how we needed competition. Now that this company is pretty much the same as the evil nasty company, they have stopped complaining. We now have a situation where two companies form a duopoly with price fixing. Such competition, much wow.

Soldato

- Joined: 28 Oct 2011
- Posts: 8,595

Yeah there's been a steady price creep on top of inflation for a long time but a big jump starting with the RTX cards.

Yep, Turing was the watershed, it's been heavy lube gouging ever since.

What the...

Didn't you used to always buy whatever the top end card was? Well at least back in the day. What's up with buying a 4070 Ti Boomy?

Soldato

- Joined: 2 Jan 2012
- Posts: 12,229
- Location: UK.

£799 Delivered

What you pay for it?

Yeah it was a freebie!

I'm guessing a freebie. A certain company is giving these away with orders at the moment.

Prices have been crazy, been trying my best to resist buying anything. My 3070 lasted me two years and I want an upgrade for VR, if I get a couple years from this it's not too bad.

What the...

Didn't you used to always buy whatever the top end card was? Well at least back in the day. What's up with buying a 4070 Ti Boomy?

You got a free graphics card with your new SSD? Good for you.

Soldato

- Joined: 2 Jan 2012
- Posts: 12,229
- Location: UK.

It's Alive!

Firstly, I'm talking about multiple games, not just one. Secondly, it was about RT performance. Lastly if you only play Warzone 2 then you should only care about Warzone 2 performance and happily buy an AMD card - of course!

In Warzone 2 AMD crushes Nvidia so that's the only game that matters. Is that a fair assessment or have I cherry picked an individual result to best highlight the differences?

What does equally optimised even mean? We're talking about radically different architectures particularly as it relates to how they both handle RT. It's literally impossible that they could be "equally optimised". Moreover it would be your burden to prove how exactly these are "unequally optimised" when all these games are made with consoles in mind first, which have been on AMD-only hardware for over a decade now! Not only that but EVEN IF it were true that NV dominates cause of their sponsorships rather than its superior hardware, so what? It's still AMD's problem to solve (and an AMD customer's to suffer)! Ultimately as a regular PC user you can't choose who sponsors what, only what card you buy. So it's a big fat L for AMD regardless.

Preach.

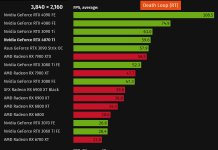

To add to this, Cyberpunk is Nvidia sponsored so it's really not a fair example. If you think the RT implementation is equally optimised for both vendors, you'd be mistaken. This should be obvious to anyone with a modicum of common sense. Regarding Warzone 2 however, that title is vendor neutral, despite the game performing better on AMD hardware. It also uses DLSS/FSR, although I would strongly recommend neither is used in that title as they are both bad.

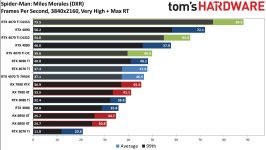

Besides, I can think of AMD sponsored titles where Nvidia still dominates like Riftbreaker or Deathloop. Or should we point out how FSR 2 was also faster on Ampere than RDNA 2? Then what's the excuse, Nvidia sponsored AMD to defeat itself? Or former console-only titles where even with a mild RT implementation Nvidia is clearly superior all the same (Miles Morales). Or a title like Metro Exodus EE where it HAD to be optimised for AMD because it had to run with RT at 60 fps even on consoles (as puny as the Series S, which is essentially an RX 580 w/ DX12 features).

Just accept RDNA is crap when RT is turned on to any significant extent, no need to deny reality because you like one corpo over the other. None of them give a **** about us anyway. Let's at least not spread falsehoods.

Caporegime

- Joined: 9 Nov 2009
- Posts: 25,336
- Location: Planet Earth

But, but the RTX4070TI is not too expensive, according to the PCMR cultist excuse makers. The tech companies need our monies because they are poor and the tax breaks and US government funds are not enough!

They are both **** poor and the best thing is to keep all of it on the shelf. I don't understand why FOMO is so strong. Do people have to find some way to salvage any release from tech companies?

Gamers and PCMR cultists on tech forums have become some of the biggest Whales I have ever seen.

Literally none of my gaming mates, including those who tend to buy Nvidia, think this generation is anything but overpriced. All I can hear them saying is how much Nvidia and AMD are taking the mickey, including those who have RTX3080 and RTX3090 cards.

Go on gaming websites, other PC websites, Twitter, Reddit or even HUKD, etc. and people are just fed up with the prices of this current generation. It was the same with the Zen4 CPUs too, apart from some defenders on forums. There were people defending the useless pricing of those too!

In the end AMD had to drop prices, but Zen4 is nowhere near as bad as the pricing of the RTX4000 series, and even the RX7900 series.

It just shows you how much of a bubble some people are in. They are on a PC hardware forum, which itself is a niche part of the internet, and yet they are trying their best to spin stuff despite most people not agreeing with them. These were the same people defending Turing, etc. until Nvidia admitted they were wrong and had to refresh the whole range to be better value for money. The excuse makers should have eaten humble pie, but OFC they went silent when it was proven even Nvidia realised Turing MK1 was overpriced.

Remember in August Nvidia had to write off over a billion USD! They are trying their best to keep margins high as sales overall are not great. Not sure why there are people on here acting like unpaid marketing for Nvidia and AMD. This whole set of releases, from the RTX4000 series to the RX7900XT and Zen4, has been cash grabs.

A Hobson's Choice isn't a choice, it's more an unofficial cartel in this case.

Soldato

- Joined: 6 Jan 2012
- Posts: 5,505

It's simple, there's a close relationship with that developer. That means more time for optimisation in game code and development that one vendor is the beneficiary of. Look at the Witcher 3, the same developer, same sponsored title. Now with RT, but prior to that it was the tessellation stuff in The Witcher 3. Seeing a pattern here? Good, I know you are not stupid mate so surprised to see you act like you are. Don't pretend that this does not play a part in overall performance.

What does equally optimised even mean? We're talking about radically different architectures particularly as it relates to how they both handle RT. It's literally impossible that they could be "equally optimised". Moreover it would be your burden to prove how exactly these are "unequally optimised" when all these games are made with consoles in mind first, which have been on AMD-only hardware for over a decade now! Not only that but EVEN IF it were true that NV dominates cause of their sponsorships rather than its superior hardware, so what? It's still AMD's problem to solve (and an AMD customer's to suffer)! Ultimately as a regular PC user you can't choose who sponsors what, only what card you buy. So it's a big fat L for AMD regardless.

Besides, I can think of AMD sponsored titles where Nvidia still dominates like Riftbreaker or Deathloop. Or should we point out how FSR 2 was also faster on Ampere than RDNA 2? Then what's the excuse, Nvidia sponsored AMD to defeat itself? Or former console-only titles where even with a mild RT implementation Nvidia is clearly superior all the same (Miles Morales). Or a title like Metro Exodus EE where it HAD to be optimised for AMD because it had to run with RT at 60 fps even on consoles (as puny as the Series S, which is essentially an RX 580 w/ DX12 features).

Just accept RDNA is crap when RT is turned on to any significant extent, no need to deny reality because you like one corpo over the other. None of them give a **** about us anyway. Let's at least not spread falsehoods.

Those two titles you mention, RT was added at a later date. The big difference: RT was playable on RDNA2, but of course at lower frame rates vs Ampere. To be clear, no one (myself included, since you quoted me) ever said AMD is stronger at RT than Nvidia. RDNA2 was always weaker. But there's a difference between 1 gen weaker and 2 gens weaker, and software can play a big part in that; this much should be obvious to you. I guess now that the 7900 XTX is around 3090-3090TI level in ray tracing performance in various games, Ampere/RDNA3 is now rubbish at RT. When looking at those cherry-picked results, 2nd gen RT on both vendors is pretty similar, with Nvidia still being 1 gen ahead on the 4000 series.

Caporegime

- Joined: 9 Nov 2009
- Posts: 25,336
- Location: Planet Earth

It's an attempt to salvage the RTX4070TI by comparing it to the overpriced RX7900XT (which no one thinks isn't a cynical cash grab), which is faster in most games, as they are rasterised, and is competitive in most RT games, because they are hybrid. We can have awesome path traced games like Quake 2 RTX, which was a rejigged version of a 22 year old game, and Portal RTX (not even Portal 2), which is a rejigged version of a 15 year old game. Neither of these games was a graphical masterpiece at launch, as Quake 2 was made to produce high FPS as an FPS game, and Portal would run on a toaster.

I am sure when Atomic Heart comes out and has 3 times the performance of the RX7000 series on the RTX4000 series and 2 times the performance of the RTX3000 series at ULTRA SUPER DUPER ARTX ARGB OVERDRIVE mode, suddenly the only game worth playing for the next three years will be that. Then someone on a console, on which the settings are going to be more optimised, will get a nice looking game, which will run fine for under £500. The rest of us will turn down a few settings on our "old" and "slow" cards, and probably enjoy it too. Then the threads get swamped with people trying to examine screenshots!

If you listen to some here, then look at the type of hardware people have, one wonders how 99% of gamers seem to be able to run games with their RTX3060, GTX1060 or GTX1650 on their budget six core CPU.

IT'S CALLED A SETTINGS MENU!

This reminded me about the E-PEEN wars about tessellation.
