People do notice it because of the latency.

ICDP explained it, and so have many others. Would people want to use this in a competitive, ranked FPS? If the prediction algorithm goes off, then your aim will be off! It's the same as when people said for years that LCDs were worse than CRTs. Most said the difference was imagined. Then it was shown that display latency really was worse. And more recently, AMD and Nvidia introduced anti-lag tech.

It's just like when people said frametimes and frame pacing weren't important, only average fps. Have people forgotten when CrossFire and SLI were a thing? Nvidia went to great lengths to prove that average fps wasn't what mattered, frametimes were, etc.

Now we've come full circle: latency isn't important, frametimes aren't important, lag isn't important, image quality isn't important; only average fps is.
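To make the frametime point concrete, here's a small Python sketch with two made-up frame-time traces (not measurements from any real game or GPU). Both have the same average fps, but one alternates short and long frames, the kind of microstutter people complained about with CrossFire/SLI. The names `smooth`, `stutter`, `average_fps` and `worst_frame_ms` are just illustrative.

```python
from statistics import pstdev

# Hypothetical frame-time traces in milliseconds per frame (invented for illustration).
smooth = [16.7] * 60            # every frame takes the same time
stutter = [8.35, 25.05] * 30    # alternating short/long frames, same total time

def average_fps(frame_times_ms):
    """Average fps = frames rendered / total seconds elapsed."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)

def worst_frame_ms(frame_times_ms):
    """Longest single frame, i.e. the biggest hitch the player sees."""
    return max(frame_times_ms)

for name, trace in (("smooth", smooth), ("stutter", stutter)):
    print(f"{name}: avg {average_fps(trace):.2f} fps, "
          f"worst frame {worst_frame_ms(trace):.2f} ms, "
          f"frame-time spread {pstdev(trace):.2f} ms")
```

Average fps comes out the same for both traces, but the stuttery one has a worst frame 50% longer and a much bigger frame-time spread, which is exactly what an average hides.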

The reality is that fake frames are just there so companies can sell you less for more, and PCMR falls for it. Plus, fake-frame technology increases VRAM usage. Keep VRAM capacity tight while those VRAM demands go up, and you can force an upgrade a bit earlier.

That's why Nvidia tried to push the RTX 4060 Ti's performance figures using frame generation. AMD will do the same. Less for more. More profits. That is the way.

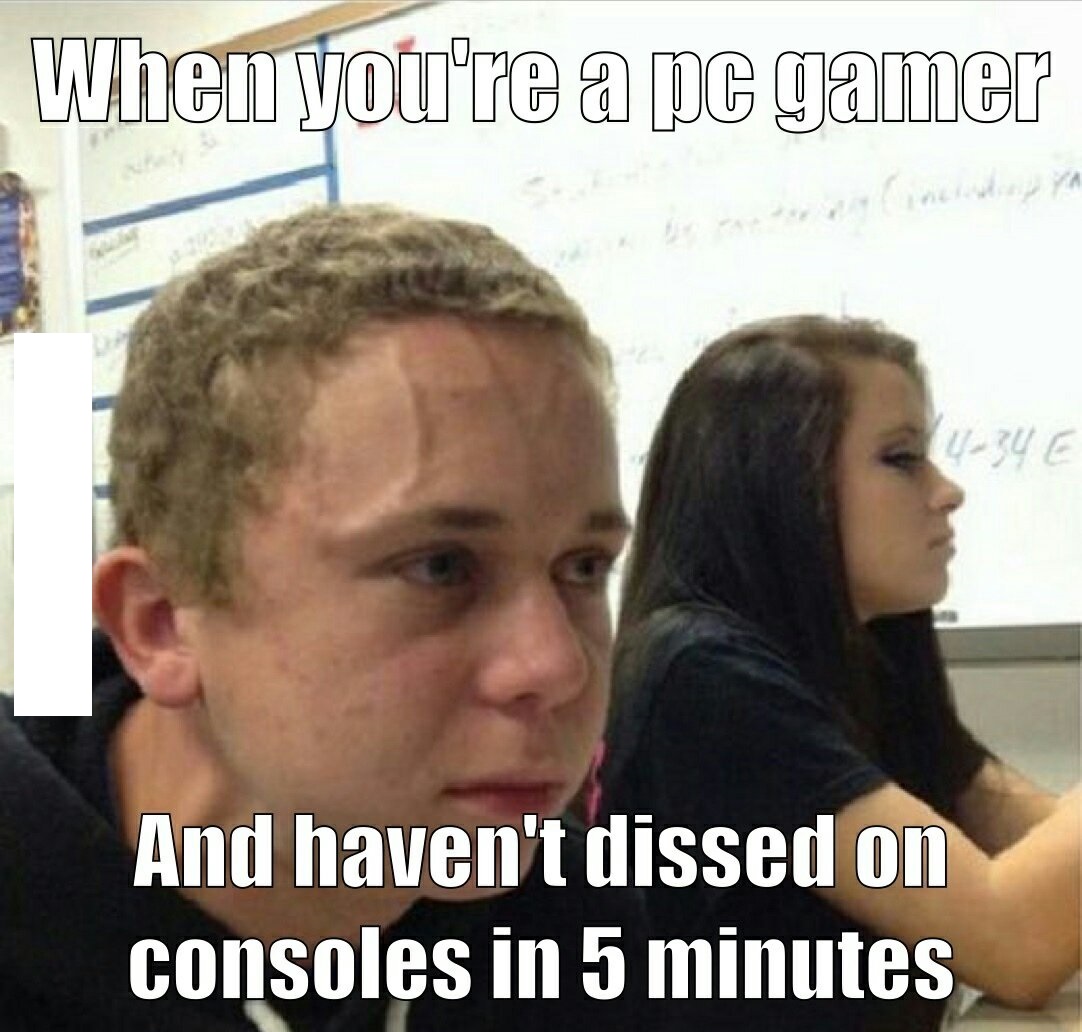

I'm sure that when consoles do fake frames, the magnifying glasses will suddenly come out! Then PCMR will suddenly care about fps, frametimes and upscaling quality. PCMR keeps going on about how consoles suck based on technical measurements, but let's not get too technical on PC, right?

If people just sat down and played, many would find a console at 30 to 60 fps perfectly fine instead of a PC. The same goes for games on their phones, and mobile games make more money than PC games.